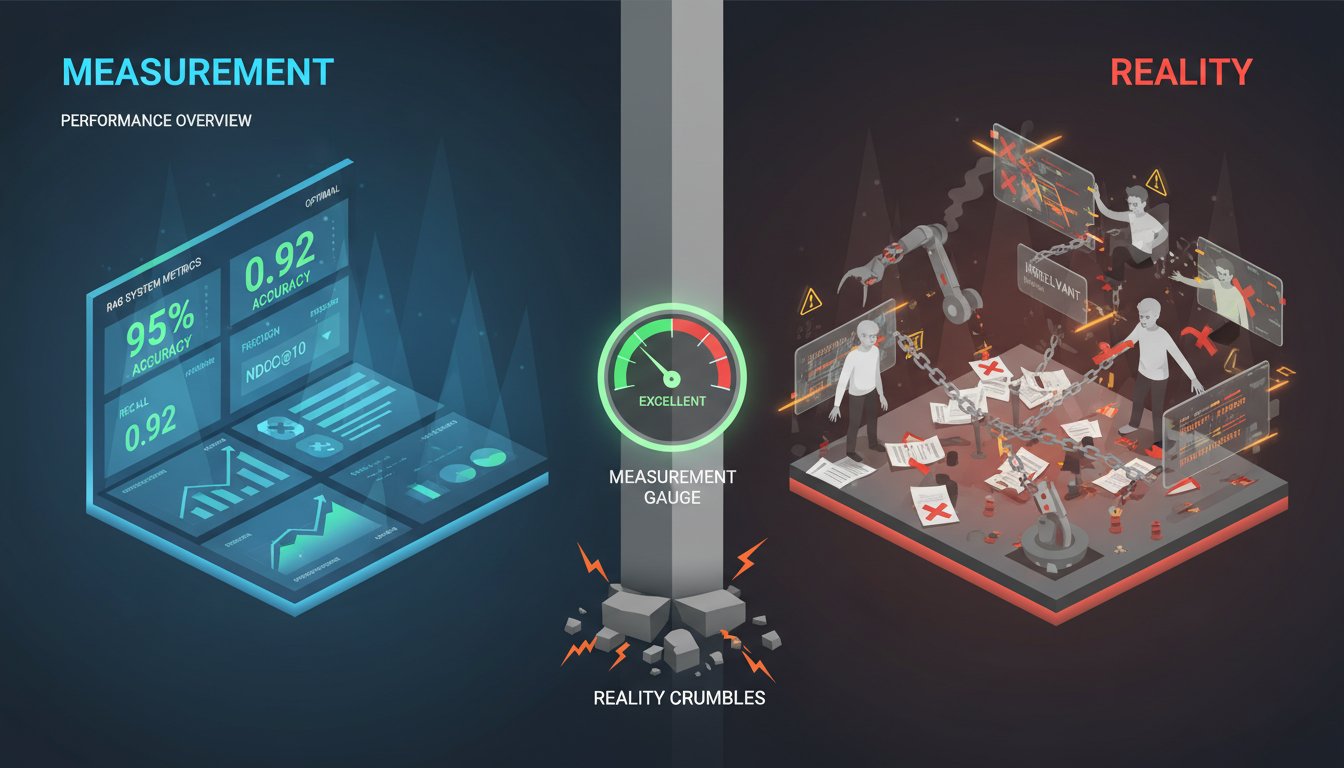

Your RAG system shows 95% retrieval accuracy in testing. Your precision metrics are stellar. Your NDCG scores would make any ML engineer proud. Yet somehow, three months into production, your enterprise RAG deployment is quietly collapsing—and your dashboards never saw it coming.

This is the measurement paradox that’s plaguing enterprise RAG deployments in 2024: the gap between what we measure and what actually matters is wider than the gap between prototype and production. While teams obsess over retrieval metrics that look impressive in reports, the real determinants of RAG success—data freshness, domain relevance, end-to-end latency, and contextual accuracy—remain largely unmeasured and unmonitored.

Recent research from VentureBeat confirms what many enterprise teams are discovering the hard way: we’re measuring the wrong part of RAG. And this measurement blindness is one reason a reported 80% of enterprise RAG projects fail before they reach production.

The Illusion of Retrieval Excellence

Here’s what most enterprise RAG evaluation looks like today: teams benchmark their retrieval systems using academic metrics like Recall@K, Normalized Discounted Cumulative Gain (NDCG), and Mean Reciprocal Rank (MRR). They test against datasets like HotpotQA, NaturalQuestions, or BEIR benchmarks. They achieve impressive scores—often above 90%—and declare victory.

Then they deploy to production.

Within weeks, users start complaining. The system returns irrelevant documents. It misses critical context. It provides outdated information. The responses feel disconnected from the actual question. But when the team checks their monitoring dashboards, everything looks fine. Retrieval accuracy: 94%. Precision: 91%. NDCG: 0.87. The metrics say success while the business experiences failure.

This disconnect reveals a fundamental flaw in how we’re evaluating RAG systems. We’re optimizing for what’s easy to measure—whether the right document chunk appears in the top K results—rather than what actually matters: whether the retrieved context enables the LLM to generate a useful, accurate, and timely response to the user’s actual need.
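It helps to see how little these metrics actually look at. The sketch below is a plain-Python version of Recall@K, reciprocal rank (averaged over queries, it gives MRR), and NDCG@K. The function names and the example document IDs are illustrative assumptions, not taken from any particular evaluation library.

```python
import math

def recall_at_k(retrieved, relevant, k):
    """Fraction of the relevant documents that appear in the top-k results."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)

def reciprocal_rank(retrieved, relevant):
    """1 / rank of the first relevant document, or 0.0 if none was retrieved."""
    relevant = set(relevant)
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0

def ndcg_at_k(retrieved, gains, k):
    """NDCG@k with graded gains (doc_id -> gain); unlabeled documents count as 0."""
    dcg = sum(gains.get(doc_id, 0.0) / math.log2(i + 2)
              for i, doc_id in enumerate(retrieved[:k]))
    ideal = sorted(gains.values(), reverse=True)[:k]
    idcg = sum(gain / math.log2(i + 2) for i, gain in enumerate(ideal))
    return dcg / idcg if idcg > 0 else 0.0

# Hypothetical ranking for one query: only "d1" of the two relevant docs is found.
retrieved = ["d3", "d1", "d7"]
print(recall_at_k(retrieved, {"d1", "d2"}, k=3))   # 0.5
print(reciprocal_rank(retrieved, {"d1", "d2"}))    # 0.5
print(round(ndcg_at_k(retrieved, {"d1": 1.0, "d2": 1.0}, k=3), 3))  # 0.387
```

Every function takes only document IDs and relevance labels. Nothing about the generated answer, the age of the document, or the time the user waited ever enters the calculation.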

The Five Critical Gaps in Standard RAG Measurement

NVIDIA’s research on enterprise-grade RAG evaluation identifies several key limitations in how teams typically assess their systems:

Gap 1: Benchmark Misalignment – Teams evaluate on generic academic datasets (HotpotQA, NaturalQuestions) that bear little resemblance to their actual enterprise use case. A model that excels at Wikipedia-based question answering may fail miserably at retrieving relevant clauses from legal contracts or technical specifications from engineering manuals.

Gap 2: In-Domain vs. Out-of-Domain Performance – Models trained on benchmark datasets show inflated performance when tested on those same benchmarks but often collapse when faced with real production queries. The impressive recall score was measuring memorization, not generalization.

Gap 3: Chunk Relevance vs. Answer Quality – Standard retrieval metrics measure whether relevant chunks were retrieved, not whether those chunks contained the specific information needed to answer the question. A document chunk can be topically relevant while missing the critical detail that makes the difference between a useful and useless response.

Gap 4: Static Evaluation vs. Dynamic Reality – Most evaluation happens at a point in time with a fixed dataset. But in production, RAG systems face constantly evolving data, shifting user needs, and emerging edge cases. Yesterday’s 95% accuracy becomes today’s 60% effectiveness as the knowledge base grows stale or user expectations shift.

Gap 5: Component Metrics vs. End-to-End Outcomes – Teams measure retrieval performance in isolation, ignoring how retrieved context actually performs when combined with the LLM, prompt engineering, and post-processing. A perfectly retrieved chunk can still lead to a failed response if the LLM misinterprets it or the context window is poorly structured.

What We Should Be Measuring Instead

The shift from prototype to production-grade RAG requires a fundamental rethink of evaluation. Instead of focusing solely on retrieval metrics, enterprise teams need to measure the factors that actually determine whether their RAG system delivers value in the real world.

Domain-Specific Relevance Over Generic Accuracy

Meilisearch’s 2024 research on RAG evaluation methodologies emphasizes the critical importance of domain-specific benchmarking. Rather than testing against NaturalQuestions, enterprise teams need to build evaluation datasets from their own production queries and labeled data.

This means creating custom benchmarks that reflect:

– The actual vocabulary and terminology users employ

– The specific types of questions your system needs to answer

– The domain-specific context required for accurate responses

– The edge cases and ambiguous queries that occur in practice

A pharmaceutical company evaluating a RAG system for drug interaction queries shouldn’t care about performance on Wikipedia articles. It needs to know whether the system can accurately retrieve relevant clinical trial data, safety warnings, and contraindication information from its internal knowledge base.
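One way to start such a benchmark is to sample logged production queries evenly across query types and hand the sample to domain experts for labeling. The sketch below assumes you already log each query with a coarse type tag; the `EvalExample` fields, the `sample_for_labeling` helper, and the type names are all hypothetical.

```python
import random
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class EvalExample:
    query: str
    query_type: str                       # e.g. "factual", "analytical", "ambiguous"
    relevant_doc_ids: list = field(default_factory=list)  # filled in by domain experts
    reference_answer: str = ""            # filled in by domain experts

def sample_for_labeling(logged_queries, per_type, seed=0):
    """Pick up to `per_type` distinct queries from each query type.

    logged_queries: iterable of (query_text, query_type) pairs.
    A fixed seed keeps the sample reproducible between runs.
    """
    rng = random.Random(seed)
    by_type = defaultdict(set)
    for query, query_type in logged_queries:
        by_type[query_type].add(query.strip())
    examples = []
    for query_type in sorted(by_type):
        pool = sorted(by_type[query_type])
        for query in rng.sample(pool, min(per_type, len(pool))):
            examples.append(EvalExample(query=query, query_type=query_type))
    return examples
```

Sampling per type rather than uniformly keeps rare but important query shapes, such as ambiguous questions, from being drowned out by the most common ones.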

Data Freshness and Temporal Relevance

One of the most overlooked aspects of RAG evaluation is temporal accuracy—whether the system retrieves the most current and relevant information. Your retrieval metrics might show perfect performance while your system serves outdated product specifications, deprecated policies, or superseded regulations.

Effective RAG measurement must track:

– Index staleness – How much time passes between a source data update and the corresponding index update

– Version awareness – Whether the system distinguishes between current and historical versions

– Temporal query understanding – How well the system interprets time-sensitive queries (“current policy,” “latest version,” “as of Q4”)

– Deprecation handling – Whether outdated information is properly flagged or excluded

This temporal dimension is especially critical for regulated industries (finance, healthcare, legal) where providing outdated information isn’t just unhelpful—it’s potentially dangerous and legally problematic.
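Index staleness is the easiest of these to turn into a number. A minimal sketch, assuming you can get a last-modified timestamp from the source system and a last-indexed timestamp from the index for each document (the tuple layout and the `staleness_report` name are assumptions for illustration):

```python
from datetime import datetime, timedelta, timezone

def staleness_report(docs, now, max_lag):
    """List documents whose index copy has been out of date for longer than max_lag.

    docs: iterable of (doc_id, source_updated_at, indexed_at) with aware datetimes.
    Returns (doc_id, lag) pairs, worst first.
    """
    stale = []
    for doc_id, source_updated_at, indexed_at in docs:
        if indexed_at < source_updated_at:      # the index has not seen the latest edit
            lag = now - source_updated_at       # how long the index has been behind
            if lag > max_lag:
                stale.append((doc_id, lag))
    return sorted(stale, key=lambda item: item[1], reverse=True)

utc = timezone.utc
docs = [
    ("policy-v3", datetime(2024, 6, 1, tzinfo=utc), datetime(2024, 5, 20, tzinfo=utc)),
    ("faq", datetime(2024, 5, 1, tzinfo=utc), datetime(2024, 5, 2, tzinfo=utc)),
]
report = staleness_report(docs, now=datetime(2024, 6, 10, tzinfo=utc),
                          max_lag=timedelta(days=2))
print(report)  # only "policy-v3" is listed, nine days behind
```

The acceptable `max_lag` is a business decision: two days may be fine for an internal FAQ and far too long for a regulatory policy.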

End-to-End Response Quality

Ultimately, what matters isn’t whether the right chunks were retrieved but whether the final response to the user is accurate, complete, and useful. This requires evaluating the entire RAG pipeline, not just the retrieval component.

Key end-to-end metrics include:

Contextual Sufficiency – Does the retrieved context contain all the information needed to answer the question? Measuring this requires human evaluation or LLM-as-judge approaches that assess whether critical information is missing.

Answer Accuracy – Is the final generated response factually correct based on the retrieved context? This goes beyond retrieval metrics to measure the LLM’s ability to correctly interpret and synthesize the retrieved information.

Response Completeness – Does the response fully address all aspects of the user’s query? A system might retrieve relevant chunks but still produce incomplete responses if the LLM fails to synthesize them properly.

Hallucination Detection – Does the response include information not present in the retrieved context? Even with perfect retrieval, LLMs can hallucinate, making this a critical production metric.

User Satisfaction Signals – In production, track behavioral signals like response acceptance rates, follow-up query patterns, and explicit feedback. These real-world signals often reveal problems that synthetic metrics miss.
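As a rough illustration of hallucination detection, the sketch below flags answer sentences whose words mostly do not appear in the retrieved context. This is a deliberately crude lexical proxy, not a substitute for an entailment model, an LLM judge, or human review; the function name and the 0.6 threshold are assumptions chosen for the example.

```python
import re

def _tokens(text):
    """Lowercased alphanumeric word set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def unsupported_sentences(answer, context_chunks, min_overlap=0.6):
    """Return (sentence, overlap) for answer sentences poorly covered by the context."""
    context_tokens = set()
    for chunk in context_chunks:
        context_tokens |= _tokens(chunk)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        tokens = _tokens(sentence)
        if not tokens:
            continue
        overlap = len(tokens & context_tokens) / len(tokens)
        if overlap < min_overlap:
            flagged.append((sentence, round(overlap, 2)))
    return flagged

context = ["The refund window is 30 days from delivery."]
answer = "The refund window is 30 days. Refunds are processed by the Berlin office."
print(unsupported_sentences(answer, context))
# [('Refunds are processed by the Berlin office.', 0.14)]
```

A check like this will miss paraphrased hallucinations and will flag legitimate rewording, so treat its output as a queue for closer inspection rather than a verdict.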

Latency and Cost at Scale

Academic benchmarks rarely consider performance constraints, but in production, latency and cost determine whether a RAG system is viable. A system that achieves 98% accuracy but takes 30 seconds to respond or costs $5 per query won’t survive in production.

Critical operational metrics:

– End-to-end latency – Total time from query to response, not just retrieval time

– Latency distribution – P50, P95, P99 response times to understand tail latency

– Cost per query – Including embedding costs, vector search costs, LLM API costs

– Throughput – Queries per second the system can handle

– Resource utilization – Infrastructure costs relative to query volume

These operational metrics often expose the real barriers to production deployment that pure accuracy metrics completely miss.
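To make the latency distribution and cost-per-query items concrete, here is a small sketch using nearest-rank percentiles. The sample latencies and the cost field names (`embedding`, `search`, `llm`) are made up for illustration.

```python
import math

def percentile(values, p):
    """Nearest-rank percentile of a non-empty list; p is in (0, 100]."""
    if not values:
        raise ValueError("percentile() needs at least one value")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def latency_summary(latencies_s):
    """P50, P95, and P99 of end-to-end latencies in seconds."""
    return {f"p{p}": percentile(latencies_s, p) for p in (50, 95, 99)}

def mean_cost_per_query(records):
    """Average total cost, summing the embedding, search, and LLM components."""
    totals = [r["embedding"] + r["search"] + r["llm"] for r in records]
    return sum(totals) / len(totals)

# 100 hypothetical requests: most are fast, a few are very slow.
latencies = [0.8] * 90 + [2.0] * 8 + [30.0] * 2
print(sum(latencies) / len(latencies))  # the mean hides the slow tail
print(latency_summary(latencies))       # {'p50': 0.8, 'p95': 2.0, 'p99': 30.0}
```

The mean in this example looks acceptable, while P99 shows that one request in a hundred takes 30 seconds. That is why the distribution, not the average, belongs on the dashboard.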

The Evaluation Framework That Actually Predicts Production Success

Based on research from BCG and NVIDIA, and on emerging best practices from enterprise RAG teams, here’s a more complete evaluation framework:

Layer 1: Retrieval Quality (But Done Right)

Yes, measure retrieval metrics—but do it properly:

– Use domain-specific evaluation datasets built from real production queries

– Test out-of-domain performance on queries the system hasn’t seen

– Measure both topical relevance and information sufficiency

– Track performance across different query types (simple factual, complex analytical, ambiguous)

Layer 2: Context Quality Assessment

Evaluate the quality of retrieved context before it reaches the LLM:

– Coherence – Do retrieved chunks form a coherent narrative or are they disjointed?

– Redundancy – Is the same information repeated across chunks, wasting space in the context window?

– Completeness – Are critical supporting details included in full, or fragmented across chunks?

– Noise ratio – How much irrelevant information is included alongside relevant content?
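Two of these checks, redundancy and noise ratio, can be approximated with very little code. The sketch below uses token-set Jaccard similarity as a stand-in for "same information" and assumes a separate step (human or LLM judge) has already labeled each chunk as relevant or not. The 0.8 threshold and the function names are assumptions.

```python
import re
from itertools import combinations

def _tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a, b):
    """Size of the intersection over size of the union of two token sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def redundancy(chunks, threshold=0.8):
    """Share of chunk pairs that are near-duplicates of each other."""
    pairs = list(combinations([_tokens(c) for c in chunks], 2))
    if not pairs:
        return 0.0
    return sum(jaccard(a, b) >= threshold for a, b in pairs) / len(pairs)

def noise_ratio(relevance_labels):
    """Share of retrieved chunks judged irrelevant (labels are booleans)."""
    if not relevance_labels:
        return 0.0
    return relevance_labels.count(False) / len(relevance_labels)
```

Embedding-based similarity would catch paraphrased duplicates that token overlap misses; the lexical version is shown only because it runs without any model.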

Layer 3: End-to-End Response Evaluation

Measure what the user actually experiences:

– Factual accuracy – Automated fact-checking against source documents

– Completeness – Coverage of query aspects

– Hallucination rate – Claims not supported by retrieved context

– Citation accuracy – If sources are cited, are they correct and relevant?

Layer 4: Production Reality Metrics

Track how the system performs in the real world:

– User satisfaction scores – Explicit feedback mechanisms

– Behavioral signals – Query reformulation rates, session abandonment

– Error analysis – Categorization of failure modes

– Temporal accuracy – Whether responses reflect current information

– Edge case handling – Performance on unusual or complex queries
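Query reformulation is one behavioral signal you can estimate straight from session logs. In this sketch a reformulation is a follow-up query that shares at least half of its vocabulary with the previous one without being identical. That heuristic, the 0.5 threshold, and the session format are all assumptions, and a real system would probably also look at the time between queries.

```python
import re

def _tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def is_reformulation(prev_query, next_query, min_similarity=0.5):
    """True if next_query looks like a reworded version of prev_query."""
    a, b = _tokens(prev_query), _tokens(next_query)
    if not a or not b or a == b:
        return False
    return len(a & b) / len(a | b) >= min_similarity

def reformulation_rate(sessions):
    """Share of queries that the user immediately reworded.

    sessions: list of query lists, each in the order the user typed them.
    """
    total = reformulated = 0
    for queries in sessions:
        for i, query in enumerate(queries):
            total += 1
            if i + 1 < len(queries) and is_reformulation(query, queries[i + 1]):
                reformulated += 1
    return reformulated / total if total else 0.0

sessions = [["vpn setup mac", "vpn setup mac m1"], ["expense policy"]]
print(reformulation_rate(sessions))  # one of three queries was reworded
```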

Layer 5: Operational Viability

Measure whether the system can actually operate at scale:

– Latency profiles – Response time distributions

– Cost efficiency – Expense per query and per user

– Scalability – Performance degradation as data and users grow

– Reliability – Uptime, error rates, failure recovery

The Hidden Cost of Measurement Theater

Why does measurement matter so much? Because what we measure determines what we optimize. When teams focus exclusively on retrieval metrics, they invest in improving recall and precision while ignoring the factors that actually determine production success.

This creates what we might call “measurement theater”—impressive-looking metrics that give false confidence while real problems go undetected. Teams celebrate 95% retrieval accuracy while their system slowly fails in production because:

– The knowledge base has become stale (unmeasured)

– User queries have shifted to patterns not covered in the test set (unmeasured)

– The LLM hallucinates despite perfect retrieval (unmeasured)

– Latency has crept up to unacceptable levels (unmeasured)

– Domain-specific edge cases fail consistently (unmeasured)

The cost of this measurement theater is real: wasted engineering time optimizing the wrong metrics, delayed recognition of production failures, lost user trust, and ultimately, abandoned RAG projects that could have succeeded with better evaluation.

Moving Beyond Retrieval-Centric Evaluation

The path forward requires a cultural shift in how enterprise teams think about RAG evaluation. Instead of treating evaluation as a one-time benchmark before deployment, it needs to become an ongoing practice that evolves with the system.

Build Domain-Specific Evaluation Datasets

Invest time in creating evaluation datasets that are built from real production queries and labeled by domain experts. A few hundred high-quality, representative examples are more valuable than thousands of generic benchmark queries. Update these datasets regularly as new use cases and edge cases emerge.

Implement Multi-Layer Evaluation

Measure performance at every layer of the RAG pipeline—retrieval quality, context quality, response quality, user satisfaction, and operational metrics. No single metric tells the complete story. The goal is a balanced scorecard that reveals both strengths and weaknesses.
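A balanced scorecard can be as simple as a table of thresholds, one or more per layer, with missing metrics treated as failures rather than silently skipped. The metric names and limits below are placeholders, not recommendations.

```python
def evaluate_scorecard(metrics, thresholds):
    """Return {metric_name: reason} for every metric that fails or is missing."""
    failures = {}
    for name, (direction, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures[name] = "not measured"
        elif direction == "min" and value < limit:
            failures[name] = f"{value} is below the minimum {limit}"
        elif direction == "max" and value > limit:
            failures[name] = f"{value} is above the maximum {limit}"
    return failures

# Placeholder thresholds, one per layer of the pipeline.
THRESHOLDS = {
    "recall_at_5": ("min", 0.85),         # retrieval quality
    "noise_ratio": ("max", 0.30),         # context quality
    "hallucination_rate": ("max", 0.05),  # response quality
    "reformulation_rate": ("max", 0.20),  # user behavior
    "p95_latency_s": ("max", 3.0),        # operations
}

# A team that only tracks retrieval and latency:
metrics = {"recall_at_5": 0.94, "noise_ratio": 0.22, "p95_latency_s": 4.1}
for name, reason in evaluate_scorecard(metrics, THRESHOLDS).items():
    print(f"{name}: {reason}")
```

In this example the retrieval score passes comfortably, yet the scorecard still fails on latency and on two metrics nobody is collecting.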

Automate End-to-End Testing

Use LLM-as-judge approaches to automatically evaluate response quality at scale. While not perfect, these automated evaluations can catch issues that pure retrieval metrics miss. Combine automated evaluation with periodic human review to validate the judges and catch edge cases.
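Validating the judge means checking how often it agrees with human reviewers beyond what chance alone would produce. One standard statistic for that is Cohen's kappa, sketched here for categorical verdicts. The pass/fail labels are invented for the example.

```python
from collections import Counter

def cohens_kappa(judge_labels, human_labels):
    """Chance-corrected agreement between two label sequences of equal length."""
    if not judge_labels or len(judge_labels) != len(human_labels):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(judge_labels)
    observed = sum(j == h for j, h in zip(judge_labels, human_labels)) / n
    judge_counts = Counter(judge_labels)
    human_counts = Counter(human_labels)
    # Agreement expected if both raters labeled at random with their own label rates.
    expected = sum(judge_counts[label] * human_counts[label]
                   for label in judge_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single, identical label throughout
    return (observed - expected) / (1 - expected)

judge = ["pass", "pass", "fail", "fail", "pass", "fail", "pass", "pass"]
human = ["pass", "pass", "fail", "pass", "pass", "fail", "fail", "pass"]
print(round(cohens_kappa(judge, human), 3))  # 0.467
```

Raw agreement in this example is 75%, but kappa is about 0.47, because two raters who each say "pass" most of the time will agree fairly often by accident. A low kappa is a signal to revise the judge prompt or rubric before trusting its scores at scale.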

Monitor Production Continuously

Don’t stop evaluating after deployment. Implement continuous monitoring of key metrics with alerts for degradation. Track how performance changes as data grows, user patterns shift, and the knowledge base evolves. The system that worked perfectly at launch may quietly degrade over time.
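A degradation alert does not need heavy tooling to get started. The sketch below keeps a rolling window of any per-response quality score (for example, a judge score between 0 and 1) and reports when the rolling mean drops too far below the launch baseline. The class name, window size, and tolerance are assumptions for illustration.

```python
from collections import deque

class DegradationMonitor:
    """Flags when the rolling mean of a score falls too far below a baseline."""

    def __init__(self, baseline, window=50, max_drop=0.05):
        self.baseline = baseline
        self.max_drop = max_drop
        self.scores = deque(maxlen=window)  # old scores fall out automatically

    def record(self, score):
        """Add one score; return True if the rolling mean has degraded."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # wait for a full window before alerting
        rolling_mean = sum(self.scores) / len(self.scores)
        return self.baseline - rolling_mean > self.max_drop

monitor = DegradationMonitor(baseline=0.9, window=4, max_drop=0.05)
alerts = [monitor.record(score) for score in [0.9, 0.9, 0.9, 0.9, 0.6, 0.6]]
print(alerts)  # [False, False, False, False, True, True]
```

The same pattern works for latency or reformulation rate with the comparison reversed. In practice you would send the alert to your existing paging or dashboard system rather than printing it.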

Connect Metrics to Business Outcomes

Ultimately, RAG systems exist to deliver business value. Connect your technical metrics to business outcomes: increased productivity, reduced support costs, faster decision-making, improved compliance. When technical metrics and business outcomes diverge, trust the outcomes and fix the metrics.

The Measurement Paradox Resolved

The measurement paradox exists because we’ve inherited evaluation frameworks from academic research that optimized for what was easy to measure and compare across research papers. But enterprise RAG deployments operate in a fundamentally different context—one where domain specificity, temporal accuracy, operational viability, and end-to-end response quality matter more than generic retrieval benchmarks.

Resolving this paradox doesn’t mean abandoning retrieval metrics. Recall, precision, and NDCG still provide valuable signals about one component of the system. But they’re necessary, not sufficient: they tell you whether you’re retrieving the right chunks, not whether you’re delivering the right outcomes.

The teams that succeed with enterprise RAG are those that measure what actually matters: domain-specific accuracy, temporal relevance, end-to-end response quality, user satisfaction, and operational viability. They build custom evaluation datasets. They automate multi-layer testing. They monitor production continuously. They connect technical metrics to business outcomes.

Your RAG system’s retrieval accuracy might be 95%, but if users are reformulating queries, abandoning sessions, and reporting incorrect information, your system is failing regardless of what the dashboard says. The measurement paradox is only a paradox if we keep measuring the wrong things.

It’s time to stop optimizing for impressive retrieval metrics and start measuring what determines whether enterprise RAG actually works in production. Because the gap between what we measure and what matters is the gap between prototype and production—and it’s costing enterprises millions in failed deployments.

Before you celebrate your next retrieval benchmark victory, ask yourself: are we measuring what makes our users successful, or just what makes our metrics look good? The answer might be the difference between a RAG system that thrives in production and one that quietly fails while the dashboards report success.