The Vector Database Performance Wall: Why Enterprise RAG Hits a Latency Ceiling at Scale

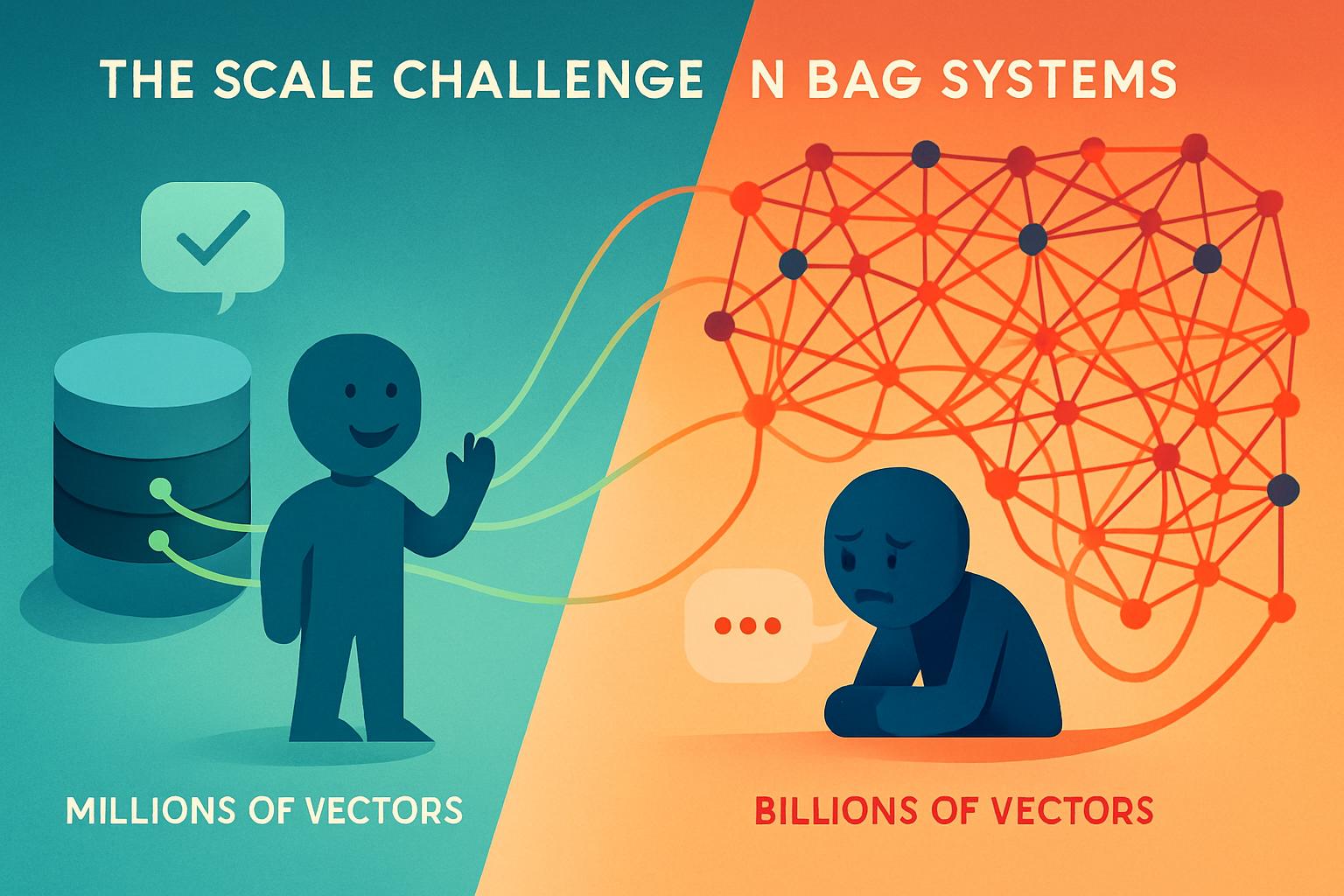

Your RAG system worked beautifully in the proof-of-concept phase. The retrieval was snappy. Latency hovered around 200-300 milliseconds. Your team celebrated. Then came production—with millions of real documents, billions of vectors, and actual users hammering your system simultaneously. Suddenly, that 300ms response time ballooned to 2-3 seconds. Customer support agents complained. Financial trading systems couldn’t meet execution windows. Healthcare clinicians abandoned the AI-assisted diagnostic tool because waiting for results cost them critical minutes.

This isn’t a hypothetical scenario. Enterprise teams are experiencing this performance degradation at scale right now, and most organizations didn’t plan for it. The culprit isn’t bad code or inadequate infrastructure—it’s a fundamental architectural challenge in vector database retrieval that emerges precisely when your RAG system should be delivering the most value.

The problem starts with a misleading assumption: that vector databases scale linearly. They don’t. As enterprise vector repositories grow from millions to billions of embeddings, traditional indexing strategies create performance bottlenecks that no amount of additional compute can fully resolve. High-cardinality metadata filtering becomes a chokepoint. Dense vector search through massive indexes introduces latency spikes. Concurrent query loads expose resource contention. What worked at 10 million vectors fails spectacularly at 10 billion.

The good news? This problem has solutions, but they require rethinking your vector database architecture from the ground up. Vendors like Pinecone, AWS (with Amazon OpenSearch Service), and Google Cloud, along with open-source projects like Milvus, are deploying breakthrough techniques: GPU acceleration, intelligent partitioning strategies, hybrid indexing, and dedicated read node infrastructure. But understanding which approach fits your enterprise RAG footprint requires deep architectural clarity.

Let’s explore exactly where the performance wall emerges, why it happens, and the specific technical frameworks that enterprises are using to break through it.

The Latency Crisis: Where Vector Retrieval Hits Its Limits

Traditional vector database retrieval follows a simple pattern: index embeddings using approximate nearest neighbor (ANN) algorithms like HNSW or IVF, then search by computing distances between query vectors and stored vectors. This works fine when your vector repository is small. When you’re managing billions of vectors across enterprise-scale datasets, the math changes dramatically.
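As a baseline, here is a minimal Python sketch of the exact (brute-force) search that ANN indexes approximate. It assumes cosine distance and an in-memory dict, and names like `exact_search` are illustrative rather than any database's API. Every query touches every stored vector, which is the cost that HNSW and IVF are designed to avoid.

```python
import heapq
import math

def cosine_distance(a, b):
    """Return 1 - cosine similarity for two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def exact_search(query, vectors, k):
    """Score every stored vector against the query: O(n * d) work per query."""
    scored = ((cosine_distance(query, vec), doc_id) for doc_id, vec in vectors.items())
    return [doc_id for _, doc_id in heapq.nsmallest(k, scored)]

# Toy two-dimensional "embeddings"; real ones have hundreds of dimensions.
vectors = {
    "doc-a": [1.0, 0.0],
    "doc-b": [0.9, 0.1],
    "doc-c": [0.0, 1.0],
}
print(exact_search([1.0, 0.0], vectors, k=2))
```

At three vectors the full scan is free. At billions, the same loop is what an ANN index has to shortcut, trading a little recall for far fewer distance computations.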

The Hidden Bottleneck: High-Cardinality Metadata Filtering

Real enterprise RAG systems don’t just search raw vectors—they apply metadata filters. A financial services RAG might filter by account type, transaction date range, and regulatory jurisdiction. A healthcare RAG retrieves documents only from patients within a specific age cohort and diagnostic code. A legal RAG filters by client, matter type, and document classification. These metadata constraints are essential for compliance and accuracy, but they’re performance killers at scale.

Here’s why: under a pre-filtering strategy, the vector database must first identify which vectors satisfy the filter, then perform nearest-neighbor search only within that subset. A query for “documents matching account_type=business AND date_range=[2025-01-01, 2025-12-31] AND jurisdiction=EU” has to resolve all three conditions before any distance calculation runs. As metadata cardinality (the number of unique filter values) grows into the millions across filtering dimensions, this pre-filtering step becomes the bottleneck, not the vector search itself.
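The two-step flow can be sketched in a few lines of Python. This is a deliberately naive version under stated assumptions: the record layout, the `passes_filter` helper, and the linear scan are all hypothetical, and production databases use metadata indexes rather than scanning every record. The shape of the work is the same, though: filter first, then search what survives.

```python
from datetime import date

def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def passes_filter(meta, account_type, date_from, date_to, jurisdiction):
    """All three metadata conditions must hold."""
    return (
        meta["account_type"] == account_type
        and date_from <= meta["date"] <= date_to
        and meta["jurisdiction"] == jurisdiction
    )

def prefiltered_search(query, records, k, **filters):
    # Step 1: metadata pre-filter. This naive version scans every record.
    candidates = [r for r in records if passes_filter(r["meta"], **filters)]
    # Step 2: nearest-neighbor search restricted to the surviving subset.
    candidates.sort(key=lambda r: squared_l2(query, r["vector"]))
    return [r["id"] for r in candidates[:k]]

records = [
    {"id": "tx-1", "vector": [0.1, 0.9],
     "meta": {"account_type": "business", "date": date(2025, 3, 1), "jurisdiction": "EU"}},
    {"id": "tx-2", "vector": [0.2, 0.8],
     "meta": {"account_type": "retail", "date": date(2025, 3, 2), "jurisdiction": "EU"}},
    {"id": "tx-3", "vector": [0.9, 0.1],
     "meta": {"account_type": "business", "date": date(2025, 6, 15), "jurisdiction": "EU"}},
    {"id": "tx-4", "vector": [0.1, 0.9],
     "meta": {"account_type": "business", "date": date(2024, 12, 31), "jurisdiction": "EU"}},
]

hits = prefiltered_search(
    [0.0, 1.0], records, k=2,
    account_type="business",
    date_from=date(2025, 1, 1), date_to=date(2025, 12, 31),
    jurisdiction="EU",
)
print(hits)
```

Note that `tx-4` is the closest vector to the query but is excluded by the date range, which is exactly why the filter cannot be skipped or applied loosely in regulated settings.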

Pinecone’s recent deployment patterns show this concretely: enterprises filtering billion-scale vector databases by 50+ unique metadata dimensions experience 5-10x latency increases compared to unfiltered searches. The performance ceiling hits precisely when you need it most—in highly regulated industries where filtering is non-negotiable.

The Concurrent Query Load Problem

Proof-of-concept RAG systems typically handle 5-10 simultaneous queries from pilot users. Production systems handle hundreds or thousands. Vector databases experience severe resource contention at this scale. Multiple concurrent queries competing for index memory, CPU cycles, and I/O bandwidth create unpredictable latency spikes.

AWS OpenSearch data shows that sustained query loads above 100 concurrent requests on billion-scale vector indexes cause tail latencies (p99) to spike from 400ms to 2-4 seconds, even with optimized hardware. This isn’t just slow—it’s unusable for customer-facing applications or real-time decision systems. One enterprise customer described it: “Our system worked flawlessly with 20 concurrent users. With 200, queries started timing out randomly. With 500, the entire vector search degraded to unacceptable levels.”
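A toy queueing model shows why tail latency climbs with concurrency even when each individual search stays fast. The numbers here (16 parallel search slots, 50 ms per search, a burst arriving all at once) are assumptions chosen for illustration, not measurements from any vendor.

```python
def burst_latencies_ms(concurrent_queries, workers, service_ms):
    """Latency of each query when a burst arrives at once and is served
    in first-come waves of `workers` queries, each taking `service_ms`."""
    return [(i // workers + 1) * service_ms for i in range(concurrent_queries)]

def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # integer ceiling of pct% of n
    return ordered[rank - 1]

for load in (20, 200, 500):
    latencies = burst_latencies_ms(load, workers=16, service_ms=50)
    print(f"{load} concurrent: p50={percentile(latencies, 50)} ms, "
          f"p99={percentile(latencies, 99)} ms")
```

Nothing about the individual search got slower in this model. The p99 grows purely because later queries wait for a free slot, which matches the pattern of a system that is fine at 20 users and degraded at 500.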

The Indexing Strategy Trap

Vector databases offer multiple indexing algorithms, each with different latency-accuracy trade-offs. HNSW (Hierarchical Navigable Small World) delivers excellent recall but consumes massive memory and slows down at scale. IVF (Inverted File) is memory-efficient but degrades recall quality. ScaNN (Scalable Nearest Neighbors) offers a middle ground but requires specific hardware optimization. Most enterprises default to HNSW because it’s the “safest” choice, then discover it doesn’t scale to their actual data volumes. By the time they realize the performance problem, they’ve already indexed billions of vectors using the wrong algorithm.
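A back-of-envelope memory estimate makes the trade-off tangible. The sketch below assumes 768-dimensional float32 embeddings, an HNSW `M` of 16, and 64-byte product-quantization codes for the compressed IVF case. All three values are illustrative assumptions. The HNSW term is a rough approximation (about 2 × M neighbor ids per vector on the base layer) that ignores upper layers and allocator overhead.

```python
def raw_vector_gb(num_vectors, dims, bytes_per_value=4):
    """Memory for uncompressed float32 vectors, in GB (1 GB = 1e9 bytes)."""
    return num_vectors * dims * bytes_per_value / 1e9

def hnsw_link_gb(num_vectors, m, bytes_per_link=4):
    """Rough graph overhead: about 2 * M neighbor ids per vector."""
    return num_vectors * 2 * m * bytes_per_link / 1e9

def pq_code_gb(num_vectors, code_bytes):
    """Memory for product-quantized codes, as used by compressed IVF variants."""
    return num_vectors * code_bytes / 1e9

n = 1_000_000_000  # one billion vectors
raw = raw_vector_gb(n, dims=768)
links = hnsw_link_gb(n, m=16)
compressed = pq_code_gb(n, code_bytes=64)

print(f"raw float32 vectors: {raw:,.0f} GB")
print(f"HNSW (vectors + links): {raw + links:,.0f} GB")
print(f"IVF with 64-byte PQ codes: {compressed:,.0f} GB")
```

Under these assumptions the full-precision HNSW index needs terabytes of RAM, while the compressed codes fit on a single large machine. The compression is also where the recall loss comes from, so neither column is free.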

GPU Acceleration: The Hardware-Software Breakthrough

The first major solution emerging in 2025 is GPU-accelerated vector indexing and search. This isn’t just faster computation—it’s a qualitative shift in what’s possible at scale.

How GPU Acceleration Transforms Vector Database Performance

GPUs excel at parallel vector operations because they’re built for matrix math. Traditional CPU-based vector databases process distance calculations sequentially or with limited parallelism. GPUs can compute distances for thousands of vector pairs simultaneously. AWS’s recent announcement demonstrates this concretely: using GPU acceleration on Amazon OpenSearch, enterprises can build billion-scale vector indexes in under an hour, compared to 6-8 hours on CPU-only infrastructure. More importantly, GPU-accelerated search reduces query latency by 3-5x for high-concurrency workloads.

The mechanism is straightforward: GPU memory holds the indexed vectors, while GPU cores parallelize distance calculations across thousands of query threads. When a customer support chatbot sends 200 concurrent retrieval requests, GPU-accelerated databases handle them in parallel instead of queuing them sequentially. The latency ceiling that plagued CPU-only systems moves far higher with GPU infrastructure, provided the working index fits in GPU memory.

The Cost-Performance Reality

GPU infrastructure costs more upfront: a single high-performance GPU such as the NVIDIA A100 runs $10,000-15,000. But AWS data reveals this scales efficiently: deploying GPU acceleration for billion-scale vector workloads costs roughly 10% more than CPU equivalents while delivering 3-5x better throughput. For enterprises running millions of daily RAG queries, GPU infrastructure becomes economically rational despite higher per-node costs.
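The economics follow from simple division. Taking the figures above at face value (about 10% higher cost, 3-5x throughput), the cost per query served drops to roughly a quarter to a third of the CPU baseline. The sketch assumes the GPU deployment is kept busy enough to actually use that throughput, and the function name is illustrative.

```python
def relative_cost_per_query(cost_multiplier, throughput_multiplier):
    """Cost per query relative to the CPU baseline (baseline = 1.0)."""
    return cost_multiplier / throughput_multiplier

conservative = relative_cost_per_query(1.10, 3.0)
optimistic = relative_cost_per_query(1.10, 5.0)

print(f"cost per query vs. CPU: {optimistic:.2f}x to {conservative:.2f}x")
```

The caveat cuts the other way for low-volume systems: if the workload never saturates the hardware, the extra throughput goes unused and only the 10% premium remains.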

However, not all use cases need GPU acceleration. Low-latency, high-concurrency systems (customer support, real-time recommendations) absolutely require it. Lower-concurrency systems (legal document search with 5-10 daily queries) can operate efficiently on optimized CPU infrastructure. The key is right-sizing: enterprises must honestly assess whether their production query patterns justify GPU investment.

Partitioning and Hybrid Indexing: Software-Level Solutions

While GPU acceleration addresses hardware constraints, software architecture changes provide orthogonal performance improvements that don’t require expensive hardware upgrades.

Data Partitioning: Dividing the Problem Space

Instead of maintaining a single monolithic billion-vector index, enterprises partition vector data by logical boundaries. A financial services RAG might partition by account_type (retail, institutional, corporate). A healthcare RAG partitions by clinical_department (cardiology, oncology, radiology). A legal RAG partitions by client or matter_type.

The performance benefit is substantial: querying a 100-million vector partition can be up to 10x faster than querying a 1-billion vector index on identical hardware. The exact gain depends on the index type, since graph indexes like HNSW scale sublinearly with index size and so gain less from a 10x smaller search space than scan-heavy indexes do. When a query arrives with metadata filters specifying “account_type=retail”, the system routes to only the retail partition instead of searching the entire dataset. This reduces both search space and index memory pressure.

Partitioning introduces operational complexity—the system must manage multiple indexes, ensure partitioning logic is performant, and handle edge cases where queries span multiple partitions. But for enterprises already experiencing latency ceilings, the performance gains justify this complexity.
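A minimal sketch of partition routing, including the multi-partition edge case, might look like the following. The `PartitionRouter` class and its brute-force per-partition search are hypothetical stand-ins. In a real deployment each partition would be its own ANN index, possibly on separate hardware.

```python
import heapq

def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

class PartitionRouter:
    def __init__(self, partitions):
        # partitions: dict of partition key -> list of (doc_id, vector)
        self.partitions = partitions

    def _search_partition(self, key, query, k):
        scored = ((squared_l2(query, vec), doc_id) for doc_id, vec in self.partitions[key])
        return heapq.nsmallest(k, scored)

    def search(self, query, k, partition_key=None):
        # With a partition key, touch one partition. Without one, fan out
        # to every partition and merge the per-partition top-k lists.
        keys = [partition_key] if partition_key is not None else list(self.partitions)
        hits = []
        for key in keys:
            hits.extend(self._search_partition(key, query, k))
        return [doc_id for _, doc_id in heapq.nsmallest(k, hits)]

router = PartitionRouter({
    "retail": [("r1", [0.0, 0.0]), ("r2", [1.0, 1.0])],
    "institutional": [("i1", [0.1, 0.0])],
    "corporate": [("c1", [5.0, 5.0])],
})

print(router.search([0.0, 0.0], k=2, partition_key="retail"))  # one partition
print(router.search([0.0, 0.0], k=2))                          # fan-out and merge
```

The fan-out path is where the operational cost shows up: a query without a usable partition key pays for every partition, so the partitioning scheme only helps if most production queries carry the key.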

Google Cloud’s recent deployments show partitioning strategies reducing p99 query latency by 4-6x while maintaining 99.5%+ recall accuracy. The trade-off is operational overhead: maintaining 20-30 smaller indexes requires more sophisticated management than a single monolithic index.

Hybrid Indexing: Combining Vector and Keyword Search

A second software strategy combines vector search with traditional keyword (BM25) indexing. This isn’t new, but enterprises are implementing it specifically to improve performance at scale.

The insight: certain queries are more efficiently answered through keyword search than vector similarity. A query like “Show me all documents mentioning product code XR-450” is resolved faster through keyword matching than through vector embeddings. By contrast, when a healthcare system searches for “bilateral pneumonia with pleural effusion,” vector search excels. Hybrid indexing routes queries intelligently: keyword queries go to the BM25 index, semantic queries go to vector search, and complex queries use both and merge results.
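One way to sketch the routing and merging steps in Python is shown below. Both pieces are assumptions for illustration: the regex heuristic for spotting exact identifiers is a simplistic stand-in for a real query classifier, and reciprocal rank fusion (RRF) is one common merging technique, not necessarily the one any given database uses.

```python
import re

# Hypothetical heuristic: codes like "XR-450" suggest an exact-match lookup.
IDENTIFIER = re.compile(r"\b[A-Z]{2,}-\d+\b")

def route(query):
    """Return 'keyword' when the query names an exact identifier, else 'semantic'."""
    return "keyword" if IDENTIFIER.search(query) else "semantic"

def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked id lists: each list contributes 1 / (k + rank) per document."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

print(route("Show me all documents mentioning product code XR-450"))
print(route("bilateral pneumonia with pleural effusion"))

bm25_hits = ["a", "b", "c"]    # ranked ids from the keyword index
vector_hits = ["b", "c", "d"]  # ranked ids from the vector index
print(reciprocal_rank_fusion([bm25_hits, vector_hits]))
```

RRF only needs ranks, not scores, which sidesteps the problem that BM25 scores and vector distances live on incomparable scales.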

This routing intelligence dramatically reduces vector search load. If 30% of production queries are keyword-based, offloading them to BM25 reduces vector index pressure by 30%. Latency for keyword queries drops from 200ms (vector search) to 20ms (keyword search). Overall system throughput increases.
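Plugging the illustrative numbers from this paragraph into a weighted average gives a feel for the overall effect. This is a sketch that treats the 30% share and the 20 ms and 200 ms latencies as given, and ignores any second-order gain from the vector index being less loaded.

```python
def blended_latency_ms(keyword_share, keyword_ms, vector_ms):
    """Mean latency when a share of queries is served by the keyword index."""
    return keyword_share * keyword_ms + (1 - keyword_share) * vector_ms

vector_only = blended_latency_ms(0.0, keyword_ms=20, vector_ms=200)
hybrid = blended_latency_ms(0.3, keyword_ms=20, vector_ms=200)

print(f"vector-only mean: {vector_only:.0f} ms, hybrid mean: {hybrid:.0f} ms")
```

The mean drops by a bit over a quarter in this example. The larger win is usually on the vector side, where 30% fewer queries means less contention for the remaining 70%.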

Milvus, the open-source vector database project that recently surpassed 40,000 GitHub stars, has made hybrid indexing its core architectural principle. Enterprise deployments using Milvus’s hybrid strategy report 2-3x latency reductions compared to pure vector-only systems.

Dedicated Read Nodes: Infrastructure Pattern for Consistency

A third emerging pattern addresses resource contention through infrastructure specialization. Pinecone’s recent deployment of Dedicated Read Nodes (DRN) exemplifies this.

The concept is straightforward: separate read and write infrastructure. Write operations (indexing new vectors, updating existing ones) run on primary nodes. Read operations (query retrieval) run on dedicated read-only nodes that replicate index data. This prevents indexing workload from competing with query workload for resources.

In traditional vector database deployments, a bulk indexing operation (loading 1 million new vectors) causes query latency spikes because indexing and querying compete for the same CPU, memory, and I/O resources. With dedicated read nodes, indexing happens on primary infrastructure while queries flow through read replicas unaffected. Query latency remains predictable even during heavy indexing periods.
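The separation can be illustrated with a small in-memory model. This is a conceptual sketch only: `Node` and `ReadWriteSplitClient` are hypothetical names, replication is a synchronous copy, and none of it reflects Pinecone's actual API or replication protocol.

```python
import itertools

class Node:
    def __init__(self, name):
        self.name = name
        self.vectors = {}

class ReadWriteSplitClient:
    def __init__(self, primary, replicas):
        self.primary = primary
        self.replicas = replicas
        self._next_replica = itertools.cycle(replicas)

    def upsert(self, doc_id, vector):
        # Writes (indexing work) land only on the primary node.
        self.primary.vectors[doc_id] = vector

    def replicate(self):
        # Stand-in for background replication: copy the primary's state.
        for replica in self.replicas:
            replica.vectors = dict(self.primary.vectors)

    def query_node(self):
        # Reads rotate across replicas and never touch the primary.
        return next(self._next_replica)

primary = Node("primary")
replicas = [Node("read-1"), Node("read-2")]
client = ReadWriteSplitClient(primary, replicas)

client.upsert("doc-1", [0.1, 0.2])
visible_before = "doc-1" in replicas[0].vectors  # not yet replicated
client.replicate()
visible_after = "doc-1" in replicas[0].vectors

served_by = [client.query_node().name for _ in range(4)]
print(visible_before, visible_after, served_by)
```

The gap between `visible_before` and `visible_after` is the trade-off to plan for: read replicas buy predictable query latency at the price of replication lag, so freshly indexed vectors may not be searchable immediately.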

Enterprises managing mission-critical RAG systems (financial trading, clinical decision support) report that dedicated read node infrastructure provides the operational stability they require. While this adds infrastructure complexity and cost, it eliminates the unpredictable latency spikes that plague single-node deployments.

The Emerging Best Practice: Composite Strategies

Leading enterprises aren’t choosing a single solution—they’re combining multiple approaches into composite strategies tailored to their specific workload patterns.

A typical high-performance RAG architecture in 2025 looks like this:

- Partition the data by logical business boundaries (customer segment, content type, regulatory domain)

- Deploy GPU-accelerated indexes for partitions serving high-concurrency production workloads

- Implement hybrid indexing routing keyword queries to BM25 and semantic queries to vector search

- Use dedicated read nodes for query-heavy partitions, separating indexing from retrieval

- Monitor query patterns continuously, adjusting partitioning and routing logic based on production telemetry

This layered approach addresses the performance wall at multiple levels simultaneously. AWS’s recent GPU acceleration announcement, combined with Zilliz and Pliops’ partnership to build affordable hardware for RAG scaling, suggests this composite pattern is becoming the enterprise standard.

One quantified example: a financial services company deployed this composite strategy and reduced p99 query latency from 3.2 seconds to 420 milliseconds—an 87% improvement. More importantly, they eliminated tail latency spikes, achieving consistent sub-500ms response times across 95% of queries even during peak loads.

Why This Matters Now: The Production Reality Check

The vector database performance wall isn’t a theoretical concern—it’s hitting production systems right now. Enterprises that successfully deployed RAG proofs-of-concept in 2024 are discovering in late 2025 that their architecture doesn’t scale to production query volumes and data sizes.

This creates a critical inflection point. Organizations have three choices:

- Accept degraded performance, potentially making their RAG systems unusable for latency-sensitive applications

- Rearchitect immediately, investing in GPU infrastructure, partitioning, and hybrid indexing—expensive and disruptive

- Design for scale from the start, implementing these patterns before hitting the performance wall

The third approach is obviously optimal, but it requires clarity during the design phase about production query patterns, concurrency expectations, and data volumes. Most enterprise RAG programs lack this foresight, making the second option (emergency rearchitecting) the common path.

The good news: the techniques are proven. Enterprises deploying GPU acceleration, partitioning, hybrid indexing, and dedicated read nodes consistently achieve the latency performance required for production systems. The challenge is recognizing the performance wall early enough to act before it damages user experience or mission-critical workflows.

Moving Forward: Evaluating Your RAG Architecture

If your RAG system is still in pilot phase, use this framework to design for scale:

Quantify your production requirements: What’s the acceptable p99 query latency? What concurrent query load must you support? How many vectors will your index contain in year 1? Year 2?

Categorize your query patterns: What percentage are keyword-based vs. semantic? Which queries are latency-critical? Which tolerate 1-2 second responses?

Model your infrastructure: Based on latency requirements and query patterns, determine whether CPU-only infrastructure, GPU acceleration, or hybrid approaches fit your economics.

Plan your partitioning strategy: How will you logically partition your vector data to reduce search space while remaining operationally manageable?

Choose your vector database deliberately: Evaluate options (Pinecone, Amazon OpenSearch Service, Google Cloud’s Vertex AI Vector Search, Milvus, Weaviate) based on whether their architecture supports your chosen optimization strategy.

Enterprise RAG success in 2025 depends on understanding vector database scaling fundamentals before you hit production bottlenecks. The technical solutions exist. The key is applying them systematically to your specific architecture and workload patterns.

The vector database performance wall is real. But with the right architectural strategy and infrastructure choices, it’s entirely surmountable.