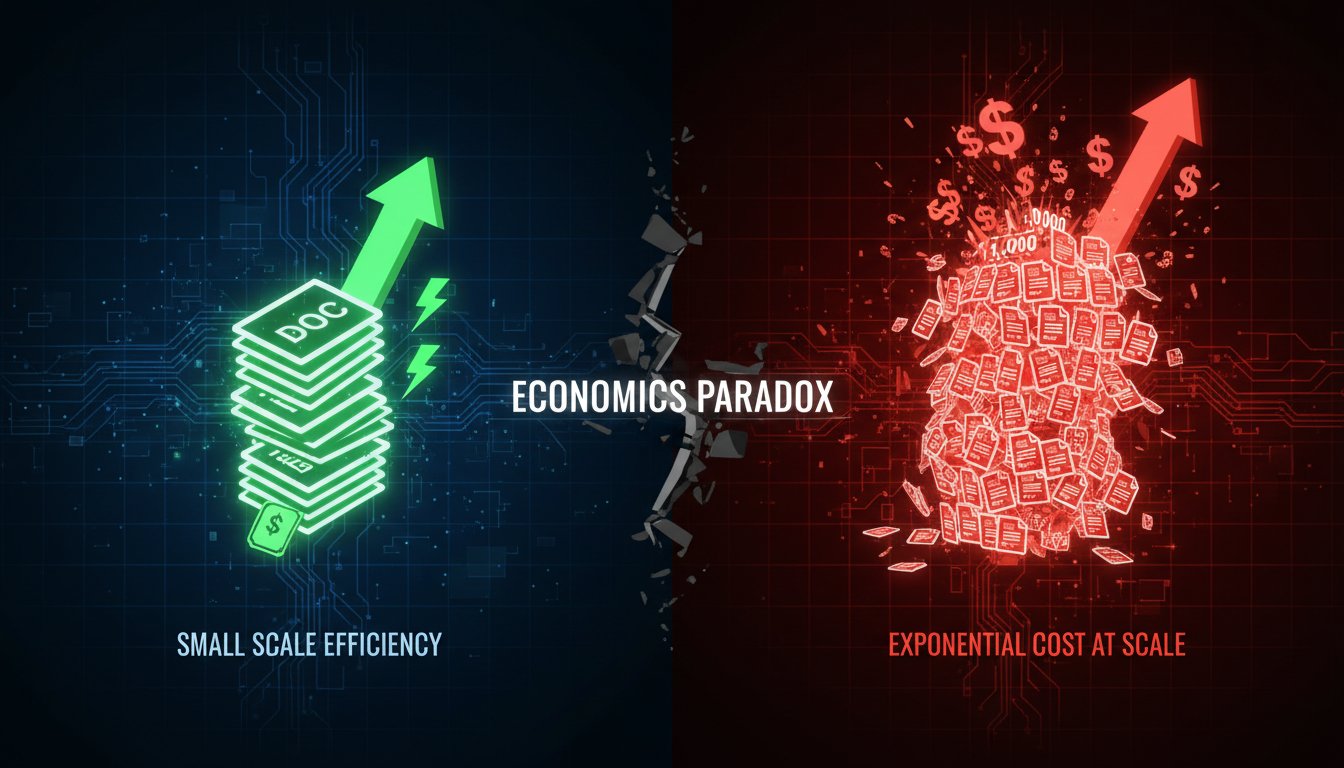

When your enterprise RAG system works perfectly with 100 documents, you feel invincible. The retrieval is lightning-fast, your embedding costs are negligible, and your team celebrates the successful proof-of-concept. Then reality hits at production scale. The same system that processed documents in milliseconds now takes seconds. Your embedding bill climbs from $50 to $5,000 monthly. Your stakeholders suddenly care about the vector database spend you weren’t even tracking. And nobody warned you this would happen because the tutorials all end at the “happy path.”

This isn’t a technical failure—it’s an economics failure. The majority of enterprise RAG systems don’t fail because they hallucinate or because the retrieval algorithm is flawed. They fail because engineering teams discover, too late, that the cost structure changes fundamentally as document repositories grow beyond a certain threshold. You can optimize away the technical problems, but you can’t optimize away basic economics. Yet almost nobody talks about this before it becomes a crisis.

The consequence is that enterprises either accept unsustainable costs and abandon their RAG investment, or they gut the system by removing features that actually make it work. This cost paradox has become the silent killer in enterprise RAG deployments—more prevalent than hallucinations, more expensive than latency issues, and almost entirely preventable with the right architecture decisions made at the start.

The problem isn’t that RAG scales poorly. The problem is that most teams build RAG systems as if cost doesn’t exist until production proves otherwise. They optimize for accuracy and speed, then get blindsided when the math suddenly matters. This guide walks through the specific cost inflection points where RAG systems break economically, how to architect around them, and how to model your true cost-per-query before you’re locked into an expensive decision.

Understanding the Cost Inflection Points

The Embedding Cost Cliff

When you move from 100 documents to 10,000 documents, your embedding costs don’t track your document count. They track the number of chunks and tokens you embed, and that multiplier balloons if you’re not intentional about chunking strategy. Here’s why: Most teams chunk documents naively, creating fragments 5-10x larger than necessary. A 50-page financial document becomes 200 chunks. A 100-document collection becomes 20,000 embedding vectors. At $0.02 per 1M tokens for input embeddings (typical rates), you’ve crossed from “free tier” into “noticeable budget” territory without changing a single line of code.

The inflection point typically arrives between 5,000 and 10,000 documents. At this scale, you can no longer ignore chunking strategy. Your embedding model needs to be deliberate: Do you use a small, fast model (384 dimensions, $0.001 per 1M tokens) or a dense model (1536 dimensions, $0.02 per 1M tokens)? The performance difference might be minimal for your use case, but the cost difference is 20x. Most teams only discover this after spending months tuning retrieval quality with the expensive model.
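To make the 20x gap concrete, here is a minimal sketch of the one-time embedding bill for the same corpus at both price points. The corpus shape (10,000 documents, 200 chunks each, 500 tokens per chunk) and the function name are illustrative assumptions, not measurements.

```python
def embedding_cost_usd(num_chunks: int, avg_tokens_per_chunk: int,
                       usd_per_million_tokens: float) -> float:
    """One-time API cost (USD) to embed every chunk in a corpus once."""
    total_tokens = num_chunks * avg_tokens_per_chunk
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Assumed corpus: 10,000 documents x 200 chunks x 500 tokens per chunk.
num_chunks = 10_000 * 200
small = embedding_cost_usd(num_chunks, 500, 0.001)  # 384-dim model price
large = embedding_cost_usd(num_chunks, 500, 0.02)   # 1536-dim model price

print(f"small model: ${small:.2f}")
print(f"large model: ${large:.2f}")
print(f"ratio: {large / small:.0f}x")
```

The ratio is fixed by the price list, but the absolute bill is paid again every time you re-chunk and re-embed, which is why the model choice is worth settling before tuning begins.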

The Reranking Bottleneck

Production RAG systems at scale almost always need a reranking stage—your initial retrieval returns 100 candidates, a reranker picks the top 5, and those get passed to the LLM. Reranking improves accuracy dramatically, but it’s expensive at scale. If you’re reranking 100 documents per query and running 1,000 queries daily, you’re spending $0.50+ daily just on reranking. That’s $15 monthly, which feels trivial until you scale to 100,000 daily queries—suddenly it’s $1,500 monthly for a microservice that’s just ranking documents.

Worse, most teams don’t plan for reranking from the start. They bolt it on later when they realize their retrieval quality dropped below acceptable thresholds at scale. By then, they’re managing two separate cost centers (retrieval + reranking) instead of one optimized system. The cost-optimal architecture decides upfront: Do we use a cheaper retrieval model and expensive reranking, or a better retrieval model and skip reranking? This decision compounds over millions of queries.

The Storage and Metadata Tax

Vector databases have hidden costs that scale with your corpus size. Pinecone’s serverless pricing, for example, charges $0.03 per 1M read units. At scale, metadata filtering (“show me documents from 2024 only”) adds read operations that aren’t visible in your benchmark tests. You test with 1,000 documents in a dev environment where there’s no metadata filtering, then deploy to production with 50,000 documents and aggressive filtering—and suddenly your read costs triple.

Additionally, some vector databases charge for storage (Pinecone: $0.25 per 1GB monthly), while others don’t but charge for compute instead. At 10,000 documents with rich metadata, you’re looking at 5-10GB of storage depending on your embedding model. That’s $1.25-$2.50 monthly—again, trivial at small scale but hidden until you do the accounting at production scale.
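A rough way to sanity-check storage figures like these is to compute the raw vector footprint directly. The sketch below assumes float32 vectors, an average of 100 chunks per document, and about 1 KB of metadata per vector; all three are illustrative assumptions, and real indexes add their own overhead on top.

```python
def vector_storage_gb(num_vectors: int, dims: int,
                      bytes_per_value: int = 4,
                      metadata_bytes_per_vector: int = 0) -> float:
    """Raw storage in GB (10^9 bytes) for vectors plus per-vector metadata."""
    per_vector = dims * bytes_per_value + metadata_bytes_per_vector
    return num_vectors * per_vector / 1e9


STORAGE_USD_PER_GB_MONTH = 0.25  # storage price quoted in the text

num_vectors = 10_000 * 100  # assumed: 10,000 documents x 100 chunks each
for dims in (384, 1536):
    gb = vector_storage_gb(num_vectors, dims, metadata_bytes_per_vector=1_000)
    print(f"{dims} dims: {gb:.2f} GB, ${gb * STORAGE_USD_PER_GB_MONTH:.2f}/month")
```

Because the footprint is linear in both dimension count and chunk count, the embedding model and the chunking strategy each move the storage bill directly.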

The Cost Model Nobody Builds Before Launch

Let’s walk through a realistic enterprise scenario: You’re building a document retrieval system for customer support. Your company has 50,000 historical documents (FAQs, guides, past tickets). You plan to process 500 support queries daily with a 6-month timeline.

Cost-Naive Approach (What Most Teams Do):

– Embedding model: OpenAI text-embedding-3-small (1536 dims, $0.02 per 1M input tokens)

– Vector database: Pinecone serverless

– Reranking: None (“We’ll optimize later if needed”)

– Initial embedding pass: 50,000 chunks (one per document) × 2,000 avg tokens per chunk = 100M tokens = $2 (one-time)

– Monthly queries: 500/day × 30 = 15,000 queries

– Embedding for queries: 15,000 × 100 tokens = 1.5M tokens = $0.03/month

– Vector DB reads: 15,000 × 2 reads per query = 30K reads = $0.0009/month

– Total first month: about $2. Recurring: ~$0.03/month (ignores storage)

This model looks amazing until production, where:

– Your average document chunk is 5,000 tokens (not 2,000)

– You have 200,000 total chunks (not 50,000)

– Your metadata filtering requires 5 read operations per query (not 2)

– Actual cost: about $20 initial (200,000 chunks × 5,000 tokens = 1B tokens) + $2-3/month recurring once storage is counted

Now add reranking because retrieval accuracy dropped at scale (50 docs per query through reranker at $0.0001 per operation):

– Reranking: 15,000 queries × 50 docs × $0.0001 = $75/month

– True recurring cost: $77-78/month (~$900 annually)
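Running the scenario's own inputs through the per-token and per-operation prices is a useful sanity check, since unit slips (per 1K vs. per 1M) are easy to make. The sketch below does that for the API-based approach; the function and constant names are illustrative, and storage is left out.

```python
EMBED_USD_PER_M_TOKENS = 0.02   # embedding price used in this scenario
READ_USD_PER_M_UNITS = 0.03     # vector DB read price used in this scenario
RERANK_USD_PER_DOC = 0.0001     # reranker price per scored document


def one_time_embedding_usd(chunks: int, tokens_per_chunk: int) -> float:
    """Cost of the initial indexing pass."""
    return chunks * tokens_per_chunk / 1e6 * EMBED_USD_PER_M_TOKENS


def monthly_usd(queries: int, query_tokens: int,
                reads_per_query: int, rerank_docs: int) -> dict:
    """Recurring monthly cost per component (storage excluded)."""
    return {
        "query_embedding": queries * query_tokens / 1e6 * EMBED_USD_PER_M_TOKENS,
        "vector_reads": queries * reads_per_query / 1e6 * READ_USD_PER_M_UNITS,
        "reranking": queries * rerank_docs * RERANK_USD_PER_DOC,
    }


planned = one_time_embedding_usd(50_000, 2_000)    # what the plan assumed
actual = one_time_embedding_usd(200_000, 5_000)    # what production looks like
recurring = monthly_usd(15_000, 100, 5, 50)

print(f"planned indexing: ${planned:.2f}, actual indexing: ${actual:.2f}")
for component, usd in recurring.items():
    print(f"{component}: ${usd:.4f}/month")
```

In this breakdown the reranker dominates the recurring bill, so it is the line item most worth revisiting as query volume grows.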

Cost-Optimized Approach (What Teams Should Do):

– Embedding model: Open-source model (e.g., BAAI/bge-small-en-v1.5, 384 dims, no API cost)

– Vector database: Self-hosted Qdrant ($100/month for reasonable compute)

– Reranking: Lightweight, batched reranking (no per-call fee; compute is covered by the self-hosted budget)

– Reranking model: Open-source and self-hosted (a hosted free tier such as Cohere’s can stand in while prototyping)

– Infrastructure: Single c5.2xlarge EC2 instance: ~$300/month

– Total: ~$400/month with better performance and zero dependency on API pricing

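At 15,000 queries a month the self-hosted stack is not the cheaper option: it costs roughly $320 more per month than the API-based one (~$400 vs. ~$78). The strategic difference is in how each one scales. The first approach grows with every query and with every vendor price change, while the second is a largely fixed compute cost that you can rightsize. With the figures above, the API approach's reranking line alone ($0.005 per query) passes $400/month at about 80,000 queries monthly.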
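One way to check where the two approaches cross over is to treat the API stack as a small fixed cost plus a per-query reranking charge, and the self-hosted stack as flat. This is a simplified sketch: the $2.50 fixed figure is the midpoint of the $2-3 recurring estimate above, and holding self-hosted cost flat ignores the extra compute you would eventually need.

```python
SELF_HOSTED_MONTHLY_USD = 400.0  # Qdrant host + EC2 instance from the list above


def api_monthly_usd(queries: int, fixed_usd: float = 2.5,
                    rerank_docs: int = 50,
                    rerank_usd_per_doc: float = 0.0001) -> float:
    """Monthly cost of the API-based stack at a given query volume."""
    return fixed_usd + queries * rerank_docs * rerank_usd_per_doc


def breakeven_queries(fixed_usd: float = 2.5, rerank_docs: int = 50,
                      rerank_usd_per_doc: float = 0.0001) -> float:
    """Monthly query volume at which both stacks cost the same."""
    per_query = rerank_docs * rerank_usd_per_doc
    return (SELF_HOSTED_MONTHLY_USD - fixed_usd) / per_query


for q in (15_000, 50_000, 100_000, 300_000):
    print(f"{q:>7} queries/month: API ${api_monthly_usd(q):,.2f} "
          f"vs. self-hosted ${SELF_HOSTED_MONTHLY_USD:,.2f}")
print(f"breakeven at about {breakeven_queries():,.0f} queries/month")
```

Below the breakeven volume the API stack is cheaper; above it, every additional query widens the gap in favor of fixed compute.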

The Hidden Cost Drivers at 10,000+ Documents

1. Chunk Overlap Explosions

Most RAG systems use sliding-window chunking: 512-token chunks with 10% overlap. This is reasonable at small scale but adds up at production scale. If you have 50,000 documents at average 10,000 tokens each, that’s 500M tokens total input. With 10% overlap, you’re actually embedding, storing, and retrieving from 550M token equivalents. At $0.02 per 1M tokens, the extra 50M tokens add only about $1 to a one-time embedding pass, but they also mean roughly 10% more vectors to store, index, and search on every query, and the overhead grows with the overlap percentage.

Better approach: Use hierarchical chunking (summary at document level, detailed chunks at section level) or time-decay windowing (older documents get less overlap). This cuts redundant embeddings by 30-50%.
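The overhead of sliding-window chunking is easy to measure before you pay for it. Below is a minimal sketch of the baseline technique (plain sliding windows, not the hierarchical alternative) that counts how many tokens actually get embedded; the token list is a stand-in for real tokenizer output, and the function name is illustrative.

```python
def sliding_window_chunks(tokens: list, chunk_size: int = 512,
                          overlap: int = 51) -> list:
    """Split a token sequence into fixed-size chunks that overlap their neighbor."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this chunk already reached the end of the document
    return chunks


# Stand-in for one tokenized 10,000-token document.
document = list(range(10_000))
chunks = sliding_window_chunks(document, chunk_size=512, overlap=51)
embedded_tokens = sum(len(c) for c in chunks)
overhead = embedded_tokens / len(document) - 1

print(f"{len(chunks)} chunks, {embedded_tokens} tokens embedded, "
      f"{overhead:.1%} overhead")
```

Running this over a sample of real documents with a few overlap settings gives you the multiplier to plug into the indexing estimate.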

2. The Batch Size Paradox

Embedding services charge per token, per API call, or both, and most teams batch embed in groups of 128 documents. At 10,000 documents, that is 79 API calls for one full embedding pass. Each call has latency overhead, and some services charge per call, not per token. Self-hosted embedding lets you pick whatever batch size your GPU memory allows, with no per-call overhead.
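The call count for a full pass is a ceiling division, and it is worth computing rather than estimating. A small sketch, with illustrative helper names:

```python
import math


def num_batches(num_items: int, batch_size: int) -> int:
    """Number of API calls needed to send every item once."""
    return math.ceil(num_items / batch_size)


def batched(items: list, batch_size: int):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


documents = [f"doc-{i}" for i in range(10_000)]
batches = list(batched(documents, 128))

print(num_batches(len(documents), 128), "calls for a full pass")
print("last batch holds", len(batches[-1]), "documents")
```

The final, partially filled batch still costs a full round trip, which matters more when a service bills per call.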

3. Query-Time Inflation

Production queries are messier than test queries. Real users ask longer questions, ask clarifications, and ask follow-ups. Your test harness averages 50 tokens per query; production averages 200. That 4x token inflation scales across all downstream costs: embedding the query, reranking more candidates, handling longer context windows in the LLM.

A production system needs to budget for 3-5x token inflation from test estimates.

Architectural Decisions That Lock in Cost

Dense Retrieval vs. Hybrid vs. Sparse

- Dense only (most tutorials suggest this): Requires high-quality embeddings, expensive at scale, but single API dependency.

- Hybrid (dense + BM25): Requires two retrieval systems, but BM25 is free. Gets you 30% better recall without 30% more cost.

- Sparse-first with dense reranking: Retrieves candidates via cheap BM25, reranks with expensive dense embeddings only on top 50 candidates. Best cost-per-query at scale.

The choice made at architecture time determines your cost curve. Switching from dense-only to hybrid when you hit production scale is expensive and disruptive.
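The sparse-first pattern can be sketched in a few dozen lines: score everything with BM25, then spend dense-embedding calls only on the shortlist. This is an illustrative sketch, not a production retriever. The `embed` callable is hypothetical (here a toy lookup table standing in for a real embedding model), tokenization is a plain whitespace split, and a real system would cache document vectors rather than embed them per query.

```python
import math
from collections import Counter


def bm25_scores(query: list, docs: list, k1: float = 1.5, b: float = 0.75) -> list:
    """Okapi BM25 score of each tokenized doc against a tokenized query."""
    if not docs:
        return []
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n or 1.0
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if term in tf:
                idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
                norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
                score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine(a: list, b: list) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def sparse_first_search(query: str, docs: list, embed,
                        candidates: int = 50, top_k: int = 5) -> list:
    """Return indices of the top_k docs: BM25 shortlist, then dense rerank."""
    tokenized = [d.lower().split() for d in docs]
    sparse = bm25_scores(query.lower().split(), tokenized)
    shortlist = sorted(range(len(docs)), key=sparse.__getitem__,
                       reverse=True)[:candidates]
    q_vec = embed(query)
    dense = {i: cosine(q_vec, embed(docs[i])) for i in shortlist}
    return sorted(shortlist, key=dense.__getitem__, reverse=True)[:top_k]


# Toy data: hand-written 2-d vectors stand in for a real embedding model.
DOCS = [
    "reset your password from the login page",
    "billing invoices are emailed monthly",
    "password rules require twelve characters",
]
TOY_VECTORS = {DOCS[0]: [0.9, 0.1], DOCS[1]: [0.1, 0.9], DOCS[2]: [0.6, 0.4],
               "password reset": [1.0, 0.0]}
embed_calls = []


def toy_embed(text: str) -> list:
    embed_calls.append(text)
    return TOY_VECTORS[text]


result = sparse_first_search("password reset", DOCS, toy_embed,
                             candidates=2, top_k=1)
print("top result:", DOCS[result[0]])
print("embedding calls made:", len(embed_calls))
```

The count of embedding calls is the point: it scales with the shortlist size, not with the corpus size, which is what keeps cost per query flat as the corpus grows.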

Embedding Model Size Selection

Open-source embedding models are now competitive with OpenAI’s models on many tasks (on the MTEB leaderboard, models such as BAAI/bge-large-en-v1.5 score close to OpenAI’s embedding models on retrieval tasks). Switching from a hosted 1536-dimension model ($0.02/M tokens) to a self-hosted 384-dimension model ($0 API cost) is a one-time decision whose savings grow with token volume:

- 1M daily queries at 100 tokens each = 100M tokens daily, or about 3B tokens monthly

- Hosted API at $0.02/M tokens: about $60/month

- Self-hosted BAAI bge-small: $0 + $50/month compute on a T4 GPU = $50/month

- Roughly break-even at this volume; at 100B tokens monthly the API bill reaches $2,000/month, a 40x difference if the same $50 of compute can keep up
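Token volumes are easy to misjudge by orders of magnitude, so it helps to compute them explicitly before comparing bills. A minimal sketch using the query volume, API price, and compute figure from the list above (function names are illustrative, and a 30-day month is assumed):

```python
def monthly_query_tokens(daily_queries: int, tokens_per_query: int,
                         days: int = 30) -> int:
    """Total query tokens sent to the embedding model in one month."""
    return daily_queries * tokens_per_query * days


def api_cost_usd(tokens: int, usd_per_million: float = 0.02) -> float:
    return tokens / 1_000_000 * usd_per_million


SELF_HOSTED_COMPUTE_USD = 50.0  # monthly compute figure quoted above

tokens = monthly_query_tokens(1_000_000, 100)
api_bill = api_cost_usd(tokens)
# Token volume at which the API bill equals the self-hosted compute bill.
breakeven_tokens = SELF_HOSTED_COMPUTE_USD / 0.02 * 1_000_000

print(f"{tokens / 1e9:.1f}B tokens/month costs ${api_bill:,.2f} via API")
print(f"API matches ${SELF_HOSTED_COMPUTE_USD:.0f} of compute at "
      f"{breakeven_tokens / 1e9:.1f}B tokens/month")
```

The same two functions can be rerun with your own query length and volume to see which side of the breakeven you are on.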

Real-Time vs. Batch Indexing

Real-time embedding indexing (new documents embedded immediately) is expensive at scale. Batch indexing (documents queued, embedded in bulk nightly) is dramatically cheaper:

- Real-time: Every document embedded individually via API = $0.02 per 1M tokens per embedding

- Batch: 10,000 documents embedded once nightly via GPU = $0.0001 per embedding (if self-hosted) or $0.001 per embedding (if using batch APIs)

For 50 new documents daily, real-time costs $1/month; batch costs $0.05/month. Both scale linearly with volume: $5/month vs. $0.25/month at 250 new documents daily, and $20/month vs. $1/month at 1,000.
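Batch indexing amounts to a queue plus a scheduled flush. The sketch below shows the shape of it; `BatchIndexQueue` and the `embed_batch` callable are hypothetical names, and the fake embedder stands in for a real model so the example runs on its own.

```python
from typing import Callable


class BatchIndexQueue:
    """Collects new documents and embeds them in bulk instead of one call each."""

    def __init__(self, embed_batch: Callable[[list], list], batch_size: int = 128):
        self._embed_batch = embed_batch
        self._batch_size = batch_size
        self._pending = []  # list of (doc_id, text) awaiting the next flush

    def add(self, doc_id: str, text: str) -> None:
        self._pending.append((doc_id, text))

    def flush(self) -> dict:
        """Embed everything queued so far; intended to run from a nightly job."""
        vectors = {}
        for start in range(0, len(self._pending), self._batch_size):
            batch = self._pending[start:start + self._batch_size]
            embedded = self._embed_batch([text for _, text in batch])
            for (doc_id, _), vector in zip(batch, embedded):
                vectors[doc_id] = vector
        self._pending.clear()
        return vectors


calls = []


def fake_embed_batch(texts: list) -> list:
    """Stand-in embedder: records the batch size, returns 1-d dummy vectors."""
    calls.append(len(texts))
    return [[float(len(t))] for t in texts]


queue = BatchIndexQueue(fake_embed_batch, batch_size=128)
for i in range(250):
    queue.add(f"doc-{i}", f"text of document {i}")
vectors = queue.flush()

print(len(vectors), "documents embedded in", len(calls), "calls")
```

The trade-off is freshness: documents added during the day are not searchable until the next flush, so the flush interval should match how stale your users can tolerate the index being.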

Building Your Cost Model Before Deployment

Step 1: Calculate Baseline Token Volumes

For each component, estimate token usage:

– Indexing pass: (Total documents × avg tokens per document) × (1 + overlap %)

– Query embedding: (Avg queries/month) × (avg query tokens)

– Reranking: (Avg queries/month) × (candidate docs per query) × (avg tokens per rerank)

– LLM inference: (Avg queries/month) × (context tokens + output tokens)

Example for 50,000 documents, 500 daily queries:

– Indexing: 50K docs × 3K tokens × 1.1 overlap = 165M tokens (one-time)

– Query embedding: 15K queries × 100 tokens = 1.5M tokens

– Reranking: 15K queries × 50 candidates × 500 tokens = 375M tokens

– LLM: 15K queries × 3K context tokens = 45M tokens

– Total: ~587M tokens in the first month, ~422M tokens monthly once indexing is done
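The four formulas above translate directly into a small function, which makes it harder to slip a unit when the inputs change. A sketch with illustrative parameter names, using the example's inputs:

```python
def token_volumes(docs: int, tokens_per_doc: int, overlap: float,
                  queries_per_month: int, query_tokens: int,
                  candidates: int, tokens_per_candidate: int,
                  context_tokens: int, output_tokens: int = 0) -> dict:
    """Token volume per pipeline component; indexing is a one-time pass."""
    return {
        "indexing": round(docs * tokens_per_doc * (1 + overlap)),
        "query_embedding": queries_per_month * query_tokens,
        "reranking": queries_per_month * candidates * tokens_per_candidate,
        "llm": queries_per_month * (context_tokens + output_tokens),
    }


volumes = token_volumes(
    docs=50_000, tokens_per_doc=3_000, overlap=0.10,
    queries_per_month=500 * 30, query_tokens=100,
    candidates=50, tokens_per_candidate=500,
    context_tokens=3_000,
)
recurring = sum(v for name, v in volumes.items() if name != "indexing")

for name, tokens in volumes.items():
    print(f"{name}: {tokens / 1e6:,.1f}M tokens")
print(f"recurring per month: {recurring / 1e6:,.1f}M tokens")
```

Keeping indexing separate from the recurring total matters because only the recurring part shows up on every monthly bill.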

Step 2: Model Component Costs

For each token volume, apply your chosen model pricing:

– Embedding: $0.02/M tokens (OpenAI) vs. $0/M tokens (self-hosted)

– Reranking: $0.0001/operation (API) vs. $0/operation (self-hosted)

– Vector DB: Per-read pricing vs. flat compute pricing

– LLM: $0.001/1K tokens input, $0.003/1K tokens output (that is, $1 and $3 per 1M tokens; varies widely by model)
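Applying a price sheet to the volumes is then a lookup and a multiply. In this sketch the volumes come from the Step 1 example, the embedding and reranking prices are the ones listed above, and the LLM input price of $1.00 per 1M tokens is a placeholder assumption to replace with your model's actual rate. Self-hosted compute is not modeled here.

```python
VOLUMES = {
    "indexing_tokens": 165_000_000,   # one-time
    "query_tokens": 1_500_000,        # monthly
    "rerank_ops": 15_000 * 50,        # monthly, one op per scored candidate
    "llm_input_tokens": 45_000_000,   # monthly
}

API_PRICES = {"embed_usd_per_m": 0.02, "rerank_usd_per_op": 0.0001,
              "llm_input_usd_per_m": 1.00}   # LLM rate is an assumption
SELF_HOSTED_PRICES = {"embed_usd_per_m": 0.0, "rerank_usd_per_op": 0.0,
                      "llm_input_usd_per_m": 1.00}


def component_costs(volumes: dict, prices: dict) -> dict:
    """USD per component; 'indexing' is one-time, the rest are monthly."""
    return {
        "indexing": volumes["indexing_tokens"] / 1e6 * prices["embed_usd_per_m"],
        "query_embedding": volumes["query_tokens"] / 1e6 * prices["embed_usd_per_m"],
        "reranking": volumes["rerank_ops"] * prices["rerank_usd_per_op"],
        "llm": volumes["llm_input_tokens"] / 1e6 * prices["llm_input_usd_per_m"],
    }


def monthly_total(costs: dict) -> float:
    return sum(usd for name, usd in costs.items() if name != "indexing")


api = component_costs(VOLUMES, API_PRICES)
hosted = component_costs(VOLUMES, SELF_HOSTED_PRICES)

print(f"API: ${api['indexing']:.2f} one-time, ${monthly_total(api):.2f}/month")
print(f"Self-hosted (before compute): ${monthly_total(hosted):.2f}/month")
```

Comparing the two totals against a quoted compute budget shows whether self-hosting pays for itself at your volume.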

Step 3: Model Sensitivity Analysis

Test how costs change with realistic variations:

– 2x document corpus: How much does embedding cost increase?

– 3x query volume: Does vector DB pricing tier change?

– Smaller embedding model: How much accuracy do you lose vs. cost saved?

This reveals the cost cliff before you hit it.
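A sensitivity pass can be as simple as rerunning the cost functions over a handful of scenarios. The sketch below covers the corpus and query-volume variations using the API prices from Step 2; it deliberately leaves out vector DB tier changes and accuracy effects, which need vendor price sheets and an evaluation set respectively. Function names and defaults are illustrative.

```python
def indexing_usd(docs: int, tokens_per_doc: int = 3_000, overlap: float = 0.10,
                 embed_usd_per_m: float = 0.02) -> float:
    """One-time cost of embedding the whole corpus."""
    return docs * tokens_per_doc * (1 + overlap) / 1e6 * embed_usd_per_m


def monthly_usd(queries: int, query_tokens: int = 100, candidates: int = 50,
                embed_usd_per_m: float = 0.02,
                rerank_usd_per_op: float = 0.0001) -> float:
    """Recurring query-embedding plus reranking cost."""
    embedding = queries * query_tokens / 1e6 * embed_usd_per_m
    reranking = queries * candidates * rerank_usd_per_op
    return embedding + reranking


SCENARIOS = {
    "baseline":   {"docs": 50_000,  "queries": 15_000},
    "2x corpus":  {"docs": 100_000, "queries": 15_000},
    "3x queries": {"docs": 50_000,  "queries": 45_000},
}

for name, s in SCENARIOS.items():
    print(f"{name:<11} indexing ${indexing_usd(s['docs']):.2f}, "
          f"recurring ${monthly_usd(s['queries']):.2f}/month")
```

With these inputs, doubling the corpus only moves the one-time line, while tripling queries triples the recurring bill, which tells you which growth axis to watch.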

Step 4: Define Cost Thresholds

Establish decision rules: “If embedding costs exceed $500/month, we switch to self-hosted,” or “If vector DB costs exceed $200/month, we evaluate Qdrant vs. Pinecone.”
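Decision rules like these are most useful when they are written down as data and checked automatically. A minimal sketch, using the two example thresholds from the text; the dictionary layout and function name are illustrative.

```python
# component -> (monthly USD threshold, action to take when exceeded)
THRESHOLDS = {
    "embedding": (500.0, "switch to self-hosted embeddings"),
    "vector_db": (200.0, "evaluate Qdrant vs. Pinecone"),
}


def triggered_actions(monthly_costs: dict) -> list:
    """Return the actions whose cost threshold was exceeded this month."""
    actions = []
    for component, (limit, action) in THRESHOLDS.items():
        if monthly_costs.get(component, 0.0) > limit:
            actions.append(f"{component} at ${monthly_costs[component]:,.2f} "
                           f"exceeds ${limit:,.2f}: {action}")
    return actions


this_month = {"embedding": 620.0, "vector_db": 140.0}
for line in triggered_actions(this_month):
    print(line)
```

Feeding this from your billing export on a schedule turns the thresholds into alerts rather than something someone has to remember to check.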

The 10,000-Document Decision Tree

When you approach 10,000 documents in production, use this framework:

Is your LLM API cost (input + output) the largest expense?

– Yes → Focus on reducing context tokens (better retrieval, reranking, or summarization)

– No → Proceed

Is your embedding cost the largest expense?

– Yes → Evaluate self-hosted embeddings or smaller models

– No → Proceed

Is your vector database cost the largest expense?

– Yes → Consider hybrid search (BM25 + dense) or self-hosted solution

– No → Proceed

Are your reranking costs >10% of total RAG cost?

– Yes → Consider sparse-first retrieval (cheaper candidates) with selective reranking

– No → Your retrieval pipeline is efficient
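The framework above can be encoded as a single function over a monthly cost breakdown, which makes it easy to rerun each time the bill changes. This is one possible reading of the tree, with illustrative key names; the sample figures are hypothetical.

```python
def next_action(costs: dict) -> str:
    """Walk the decision tree for a dict of monthly USD costs per component.

    Expected keys: 'llm', 'embedding', 'vector_db', 'reranking'.
    """
    largest = max(costs, key=costs.get)
    if largest == "llm":
        return "reduce context tokens (better retrieval, reranking, or summarization)"
    if largest == "embedding":
        return "evaluate self-hosted embeddings or smaller models"
    if largest == "vector_db":
        return "consider hybrid search (BM25 + dense) or a self-hosted solution"
    total = sum(costs.values())
    if total > 0 and costs.get("reranking", 0.0) / total > 0.10:
        return "consider sparse-first retrieval with selective reranking"
    return "retrieval pipeline is efficient"


sample = {"llm": 45.0, "embedding": 0.03, "vector_db": 2.0, "reranking": 75.0}
print(next_action(sample))
```

Because the function always returns exactly one action, it forces the breakdown to name a single priority instead of a list of everything that could be tuned.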

Real-World Cost Trajectories

Scenario A: E-commerce Product Support (100,000 products, 5,000 daily queries)

– Naive approach: $15,000-20,000/month (dense embeddings + API reranking + Pinecone)

– Optimized: $1,500-2,000/month (hybrid retrieval + self-hosted embeddings + Qdrant)

– Breakeven: 6-8 months; savings of roughly $13,000-18,500/month (about $160,000-220,000 annually)

Scenario B: Legal Research System (500,000 documents, 10,000 daily queries)

– Naive approach: $40,000-60,000/month (dense-only, expensive reranking, Pinecone serverless)

– Optimized: $5,000-7,000/month (hierarchical chunking + sparse-dense hybrid + self-hosted)

– Breakeven: 3-4 months; savings of roughly $33,000-55,000/month (about $400,000-660,000 annually)

The larger your corpus and query volume, the faster you reach the inflection point where self-hosted infrastructure becomes mandatory.

Avoiding the Cost Trap

The cost paradox in enterprise RAG isn’t new—it’s a solved problem in search and recommendation systems. Companies learned decades ago that API-based retrieval doesn’t scale beyond a certain point. RAG is repeating the same learning curve, but faster. The teams that avoid expensive mistakes follow three principles:

1. Model Your Economics Before Deployment

Don’t launch on API-based embeddings and hope to optimize later. Calculate token volumes upfront and stress-test the model with realistic production assumptions. A 4-6 hour exercise prevents $50,000+ mistakes.

2. Build for Hybrid Architectures From Day One

If you’re starting with dense retrieval only, architect your system to support BM25 fallback and reranking without major rewrites. This gives you escape routes when costs become unsustainable.

3. Establish Cost Monitoring and Thresholds

Track cost per query weekly, not monthly. Establish thresholds that trigger architectural reviews: “If embedding costs exceed $X/month, we evaluate self-hosted options.” Catch cost inflation before it becomes strategic pain.

The companies building sustainable enterprise RAG systems aren’t the ones with the fanciest retrieval algorithms. They’re the ones who did the math first and architected for scale from the beginning. Your system works at 100 documents because economics don’t matter yet. At 10,000 documents, economics are everything. The time to learn that lesson is now, not in six months when your bill arrives.