Your RAG system retrieves documents in milliseconds. Your LLM generates responses in seconds. But somewhere between those two steps, your accuracy plateaus.

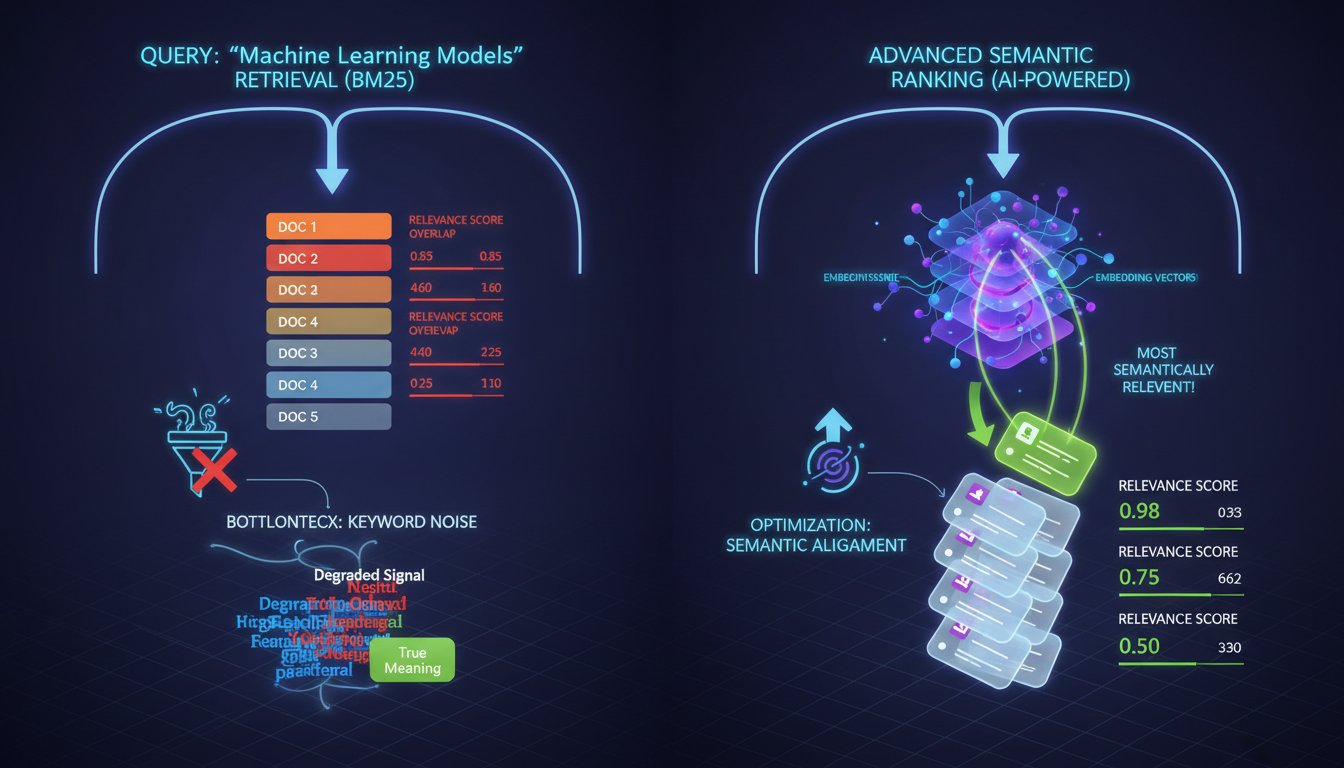

This isn’t a retrieval problem. Your embedding model is probably working fine—pulling relevant documents from your knowledge base with reasonable precision. The issue isn’t speed either; your latency metrics look solid. The real problem is that you’re ranking retrieved results the way search engines did in 1998: by keyword overlap and simple relevance scores.

By the time your LLM sees the “ranked” results, you’re already working with degraded signal. The most semantically relevant document might be buried at position 15, pushed down by keyword-matching noise at positions 1-3. Your model then generates answers based on suboptimal context, leading to hallucinations, inconsistent grounding, and the kind of accuracy loss that keeps enterprise teams from deploying RAG systems at scale.

This is the retrieval-ranking bottleneck—and it’s costing you far more than the computational overhead of fixing it.

Why Simple Ranking Fails in Enterprise RAG Systems

Most enterprise RAG implementations rely on one of two retrieval mechanisms: BM25 (keyword-based) or dense embedding similarity (typically cosine similarity between vectors). Both methods have served their purpose, but they share a fundamental limitation: neither models how the full query and the full document relate to each other.

Keyword-based retrieval like BM25 excels at exact matching but misses semantic nuance. A query like “What are the financial implications of supply chain disruptions?” might miss a document titled “How manufacturing delays impact quarterly earnings” because the two share no content words. Meanwhile, an unrelated document that mentions “financial,” “supply chain,” and “disruptions” in different contexts might rank higher, contaminating your retrieval set.
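
To make the lexical gap concrete, here is a minimal sketch of BM25 scoring in plain Python. The tokenizer, the three-document toy corpus, and the parameter values k1=1.5 and b=0.75 are illustrative assumptions, not a production configuration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on non-alphanumeric characters (a simplifying assumption).
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    tokenized = [tokenize(d) for d in docs]
    avg_len = sum(len(t) for t in tokenized) / len(tokenized)
    n_docs = len(docs)

    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for toks in tokenized:
        df.update(set(toks))

    query_terms = set(tokenize(query))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue  # no lexical overlap means no contribution
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


query = "What are the financial implications of supply chain disruptions?"
docs = [
    "How manufacturing delays impact quarterly earnings",  # relevant, but shares no words with the query
    "Financial aid office: supply closet disruptions and chain of custody forms",  # keyword noise
    "Annual company picnic schedule",
]
scores = bm25_scores(query, docs)
best = max(range(len(docs)), key=scores.__getitem__)  # index of the keyword-noise document
```

The relevant document scores exactly zero because BM25 only rewards shared terms, while the keyword-noise document ranks first.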

Dense embeddings solve some of these problems by encoding meaning into vector space. However, most embedding retrievers use a bi-encoder architecture: your query and each document are encoded independently, then compared via cosine similarity. This approach is fast and scalable, but it loses critical context. Each document is compressed into a single fixed vector before any query is known, so that vector cannot adapt to what a particular query is asking. A document that’s semantically relevant in one query context might be irrelevant in another, and a bi-encoder can only express that difference through the query vector.
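
The independence of the two encodings can be sketched as follows. The three-dimensional vectors and document names are made-up stand-ins for real embeddings; the point is only that document vectors are fixed offline and the query touches them solely through a similarity function.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Offline step: each document is embedded once, with no knowledge of future queries.
# These toy vectors are hypothetical; a real system would store model outputs here.
doc_vectors = {
    "doc_a": [0.9, 0.1, 0.0],
    "doc_b": [0.2, 0.8, 0.1],
    "doc_c": [0.1, 0.2, 0.9],
}


def retrieve(query_vector: list[float], k: int = 2) -> list[str]:
    # Online step: only the query is encoded; documents are compared as-is.
    ranked = sorted(
        doc_vectors,
        key=lambda doc_id: cosine(query_vector, doc_vectors[doc_id]),
        reverse=True,
    )
    return ranked[:k]


top = retrieve([0.8, 0.3, 0.0])
```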

The practical result: you retrieve 50 documents, but only 3-4 are truly valuable for your LLM’s generation task. Your model wastes tokens processing noise, increases hallucination risk, and your accuracy ceiling gets locked in before generation even begins.

Enter Cross-Encoder Re-Ranking: The Two-Stage Ranking Revolution

Cross-encoders represent a fundamentally different approach to semantic ranking. Instead of encoding queries and documents separately, cross-encoders process the entire query-document pair simultaneously. This means the model can understand not just individual meanings, but how the query and document relate to each other—context that’s invisible to bi-encoders.

Here’s how it works in practice: Your first-stage retriever (embedding model) fetches 50 candidate documents in ~100ms. These documents are rough candidates—potentially high-signal, but unrefined. Then your cross-encoder re-ranker takes those 50 documents and scores each query-document pair by examining their full semantic relationship. The top 5-10 documents, re-ranked by actual relevance rather than embedding distance, get passed to your LLM.
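
The second stage described above can be sketched as a small function that takes any pair-scoring callable. The function name `rerank` and the word-overlap `toy_score_pairs` scorer are hypothetical placeholders so the example runs without downloading a model. With the Sentence Transformers library installed, `CrossEncoder("cross-encoder/ms-marco-MiniLM-L-12-v2").predict` accepts a list of (query, document) pairs and returns one score per pair, so it could be passed in the same position; that path is not exercised here.

```python
from typing import Callable, Sequence


def rerank(
    query: str,
    candidates: Sequence[str],
    score_pairs: Callable[[list[tuple[str, str]]], Sequence[float]],
    top_n: int = 5,
) -> list[tuple[str, float]]:
    """Score every (query, document) pair jointly and keep the top_n documents."""
    pairs = [(query, doc) for doc in candidates]
    scores = score_pairs(pairs)
    ranked = sorted(zip(candidates, scores), key=lambda item: item[1], reverse=True)
    return [(doc, float(score)) for doc, score in ranked[:top_n]]


def toy_score_pairs(pairs: list[tuple[str, str]]) -> list[float]:
    # Stand-in scorer (assumption): fraction of query words found in the document.
    # A real cross-encoder would read both texts together through a transformer.
    scores = []
    for query, doc in pairs:
        q_terms = set(query.lower().split())
        d_terms = set(doc.lower().split())
        scores.append(len(q_terms & d_terms) / len(q_terms))
    return scores


# First-stage output (hypothetical candidates from the embedding retriever).
candidates = [
    "employment contract template",
    "shipping rates table",
    "international shipping contract disputes precedent",
]
top_docs = rerank(
    "contract disputes international shipping",
    candidates,
    toy_score_pairs,
    top_n=2,
)
```

Keeping the scorer as a parameter means the first stage never needs to know which re-ranker is in use.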

The improvement is measurable. Research from 2025 shows that cross-encoder re-ranking improves retrieval accuracy by 20-35% in relative terms compared to embedding-only pipelines. In practical terms, if your embedding-only RAG system achieves 65% accuracy on fact-grounding tasks, adding cross-encoder re-ranking pushes you to roughly 78-88% (65% × 1.20 to 65% × 1.35). That’s not incremental; it is the difference between a research demo and a production system.

Why This Works at Scale

The elegance of two-stage ranking is that it doesn’t require recomputing embeddings for your entire knowledge base every time the ranking algorithm changes. Your first stage (dense embeddings) remains stable and fast. Your second stage (cross-encoder re-ranking) can be swapped, fine-tuned, or optimized without touching your retrieval infrastructure.

Moreover, cross-encoders can be built on pretrained transformer models such as BERT variants, or even on lightweight LLMs that understand your specific domain. You can fine-tune a cross-encoder on your own query-document pairs, teaching it what relevance means in your context. Fine-tuning an embedding model is also possible, but every change to it forces you to re-embed and re-index the entire corpus.

Implementation: Turning Theory Into Production Gains

Building a cross-encoder re-ranking layer into your RAG system requires three key decisions: model selection, latency management, and integration patterns.

Choosing Your Cross-Encoder Model

For most enterprise applications, you’re choosing between open-source and proprietary models. Open-source options like the CrossEncoder models from the Sentence Transformers library (e.g., cross-encoder/ms-marco-MiniLM-L-12-v2) offer good performance at low cost. They’re lightweight, often under 200MB, and can run on modest hardware. Proprietary re-ranking APIs from vendors such as Cohere, or from specialized ranking-as-a-service providers, can offer higher accuracy but introduce vendor lock-in and per-API-call costs.

The trade-off is speed versus accuracy. MiniLM models add 150-250ms latency per query (scoring 50 documents). Larger models add 300-500ms but improve accuracy by another 5-10%. Most enterprises find the sweet spot with medium-sized models that balance latency and accuracy.
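
Latency figures like these depend heavily on hardware and batch size, so it is worth measuring on your own setup. The sketch below times any pair-scoring callable over repeated runs and reports percentiles; `measure_latency_ms` and the `dummy_scorer` are hypothetical names used only for illustration.

```python
import statistics
import time
from typing import Callable, Sequence


def measure_latency_ms(
    score_pairs: Callable[[list[tuple[str, str]]], Sequence[float]],
    pairs: list[tuple[str, str]],
    runs: int = 20,
    warmup: int = 2,
) -> dict[str, float]:
    """Time repeated scoring calls and summarize them in milliseconds."""
    for _ in range(warmup):
        score_pairs(pairs)  # warm caches before timing

    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        score_pairs(pairs)
        samples.append((time.perf_counter() - start) * 1000.0)

    cuts = statistics.quantiles(samples, n=20)  # 19 cut points in 5% steps
    return {
        "p50": statistics.median(samples),
        "p95": cuts[18],
        "max": max(samples),
    }


def dummy_scorer(pairs: list[tuple[str, str]]) -> list[float]:
    # Placeholder (assumption): replace with your cross-encoder's scoring call.
    return [float(len(doc)) for _, doc in pairs]


# 50 candidate documents per query, matching the scenario in the text.
pairs = [("example query", f"candidate document {i}") for i in range(50)]
latency = measure_latency_ms(dummy_scorer, pairs)
```

Comparing the p95 value across candidate models, rather than the average, gives a better picture of what interactive users will experience.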

Managing Latency: The 500ms Rule

Here’s the uncomfortable truth: adding a re-ranking stage increases query latency. Your first-stage retriever completes in 50-100ms. Your re-ranker adds another 150-500ms depending on model size and batch processing. For interactive applications, this matters.

But here’s why it’s worth it: a slower pipeline with accurate context beats a fast pipeline with degraded signal. You can hand your LLM degraded context sooner, or accurate context after roughly 600ms of retrieval and re-ranking. Most users tolerate a modest wait far better than they tolerate a hallucinated answer.

Optimization strategies help: batch processing multiple queries, caching re-ranker scores for common queries, and using asynchronous ranking for background systems. Some enterprises deploy re-ranking on GPU clusters to parallelize scoring across dozens of queries simultaneously, bringing per-query overhead down to 100-150ms.
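
Two of these strategies, batching and score caching, can be combined in a thin wrapper around the scorer. This is a sketch under simplifying assumptions: the class name `CachedScorer` is hypothetical, the cache is an unbounded in-memory dict keyed on exact (query, document) text, and a real deployment would need eviction and invalidation when documents change.

```python
from typing import Callable, Sequence


class CachedScorer:
    """Wraps a pair scorer with batching and an in-memory score cache."""

    def __init__(
        self,
        score_pairs: Callable[[list[tuple[str, str]]], Sequence[float]],
        batch_size: int = 16,
    ) -> None:
        self._score_pairs = score_pairs
        self._batch_size = batch_size
        self._cache: dict[tuple[str, str], float] = {}
        self.model_calls = 0  # counts batches actually sent to the model

    def score(self, query: str, docs: Sequence[str]) -> list[float]:
        # Only score pairs we have not seen before (deduplicated, order preserved).
        missing = [d for d in dict.fromkeys(docs) if (query, d) not in self._cache]
        for start in range(0, len(missing), self._batch_size):
            batch = missing[start:start + self._batch_size]
            scores = self._score_pairs([(query, d) for d in batch])
            self.model_calls += 1
            for doc, score in zip(batch, scores):
                self._cache[(query, doc)] = float(score)
        return [self._cache[(query, d)] for d in docs]


def toy_scorer(pairs: list[tuple[str, str]]) -> list[float]:
    # Placeholder scorer (assumption) so the example runs without a model.
    return [float(len(doc)) for _, doc in pairs]


scorer = CachedScorer(toy_scorer, batch_size=2)
docs = ["a", "bb", "ccc", "dddd", "eeeee"]
first = scorer.score("common query", docs)   # 5 docs in batches of 2: 3 model calls
calls_after_first = scorer.model_calls
second = scorer.score("common query", docs)  # served entirely from the cache
```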

Architecture Patterns: Where Re-Ranking Lives

Three patterns dominate:

Pattern 1: Pipeline Integration

Your RAG orchestrator calls the retriever, then immediately calls the re-ranker on the results before passing them to the LLM. This is the simplest approach and requires minimal infrastructure changes. Most modern RAG frameworks (LangChain, LlamaIndex, Haystack) support this natively.

Pattern 2: Microservice Architecture

The re-ranker runs as an independent service that can be scaled separately from retrieval. This lets you handle traffic spikes without overprovisioning your embedding database. Enterprise deployments often use this pattern, treating re-ranking as a shared utility across multiple applications.
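
As a rough illustration of this pattern, the sketch below exposes re-ranking over HTTP using only the Python standard library. The `/rerank` path, the JSON field names, the port, and the word-overlap scorer are all assumptions made for the example; a production service would load a real model and add authentication, timeouts, and batching.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def score_pairs(pairs: list[tuple[str, str]]) -> list[float]:
    # Placeholder scorer (assumption): count of shared words. Swap in a real model here.
    return [
        float(len(set(q.lower().split()) & set(d.lower().split())))
        for q, d in pairs
    ]


def handle_rerank(payload: dict) -> dict:
    """Pure request logic, kept separate from HTTP so it is easy to test."""
    query = payload["query"]
    docs = payload["documents"]
    top_n = int(payload.get("top_n", 5))
    scores = score_pairs([(query, d) for d in docs])
    order = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)[:top_n]
    return {"results": [{"index": i, "score": scores[i]} for i in order]}


class RerankHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/rerank":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            body = json.dumps(handle_rerank(payload)).encode("utf-8")
        except (KeyError, ValueError, TypeError):
            self.send_error(400, "expected JSON with 'query' and 'documents'")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    # Call serve() explicitly to start the service; it blocks until interrupted.
    HTTPServer(("127.0.0.1", port), RerankHandler).serve_forever()


response = handle_rerank({
    "query": "capital requirements derivative hedging",
    "documents": [
        "capital requirements overview",
        "derivative hedging and capital requirements guidance",
        "office seating chart",
    ],
    "top_n": 2,
})
```

Returning indices rather than document text keeps responses small and lets each calling application map results back to its own records.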

Pattern 3: Adaptive Ranking

For high-stakes queries, you run full cross-encoder scoring. For low-stakes queries or time-sensitive paths, you skip re-ranking. The RAG system learns which queries need what level of ranking sophistication. This requires instrumentation but maximizes overall throughput.
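
A static version of this routing decision might look like the sketch below. The `QueryContext` fields, the 300ms cost estimate, and the rule that a tight latency budget overrides the stakes flag are assumptions for illustration; a system that learns the routing would replace `should_rerank` with a trained classifier fed by your instrumentation.

```python
from dataclasses import dataclass


@dataclass
class QueryContext:
    text: str
    high_stakes: bool        # e.g. set by the calling application (assumption)
    latency_budget_ms: int   # time the caller can afford before generation


def should_rerank(ctx: QueryContext, rerank_cost_ms: int = 300) -> bool:
    """Decide whether to run full cross-encoder scoring for this query."""
    if ctx.latency_budget_ms < rerank_cost_ms:
        return False  # time-sensitive path: skip re-ranking
    return ctx.high_stakes  # low-stakes queries keep the first-stage order


decisions = [
    should_rerank(QueryContext("contract liability precedent", True, 1000)),
    should_rerank(QueryContext("office opening hours", False, 1000)),
    should_rerank(QueryContext("autocomplete suggestion", True, 100)),
]
```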

The Real-World Impact: Two Enterprise Examples

Consider a legal research platform indexing 10 million case documents. An attorney queries: “What precedents exist for contract disputes involving international shipping?”

Without re-ranking, the embedding model returns 50 cases with “contract,” “dispute,” and “international” scattered throughout. Five of these are actually relevant; 45 are noise. The LLM generates an answer mixing signals from false positives, producing a response that’s technically grounded but incomplete and potentially misleading.

With cross-encoder re-ranking, the re-ranker understands that a document must connect contract disputes AND international shipping in a meaningful relationship. The top 10 re-ranked results include 8-9 genuinely relevant cases. The LLM then generates a focused, accurate answer, with about 600ms of retrieval and re-ranking latency before generation starts.

Or take a financial services firm using RAG to synthesize regulatory guidance. A query comes in about “capital requirements for derivative hedging strategies.” Embedding-only retrieval pulls documents on capital requirements and documents on derivatives, but misses the specific guidance on how these interact. Cross-encoder re-ranking surfaces the precise guidance document that connects both concepts, cutting research time from 15 minutes to 90 seconds.

These aren’t theoretical gains. They’re the difference between RAG systems that scale to production and those that remain pilots.

Challenges and When Re-Ranking Breaks Down

Cross-encoder re-ranking isn’t a universal solution. It struggles in specific scenarios that enterprise teams encounter:

Multi-hop reasoning poses a challenge. If your query requires synthesizing information across five different documents, a cross-encoder only sees pairwise query-document relationships. It can’t reason about document-to-document connections. Advanced systems combine re-ranking with graph-based retrieval to handle this.

Domain-specific terminology can confuse even fine-tuned cross-encoders if training data doesn’t include your domain. A manufacturing company’s jargon around “line balancing” or “takt time” might confuse a general-purpose re-ranker trained on web text. This requires domain fine-tuning, adding 2-4 weeks of ML engineering work.

Cold-start problems emerge when you deploy a new knowledge base and lack labeled query-document pairs for fine-tuning. Off-the-shelf cross-encoders help, but you’re leaving accuracy on the table until you accumulate sufficient training data.

Beyond these technical challenges lies a resource constraint: implementing cross-encoder re-ranking requires either ML expertise in-house or outsourcing to a vendor. Not every organization has a team capable of fine-tuning and deploying ranking models. This is why managed RAG platforms are gaining traction—they abstract re-ranking complexity into a simple API.

The Optimization Frontier: Re-Ranking in 2025 and Beyond

The field is evolving rapidly. Recent research shows three promising directions:

Learned re-ranking with reinforcement learning trains re-rankers not just on relevance, but on downstream task performance. Instead of scoring “relevance,” you score “likelihood of enabling accurate LLM generation.” Early results show 5-10% additional accuracy gains over standard cross-encoders.

Sparse re-ranking is emerging as an alternative to cross-encoders. These models score documents based on attention patterns over query-document term interactions, offering comparable accuracy at 40% lower latency. They’re less mature but approaching production viability.

Adaptive re-ranking depth adjusts how many documents you re-rank based on query complexity and result confidence. Simple queries might use top-3 re-ranking; complex queries trigger full re-ranking of top-50. This optimizes latency without sacrificing accuracy.
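
One simple heuristic for choosing the depth is to look for a clear score gap after the top few first-stage results. The function name, the 0.15 margin, and the gap rule itself are assumptions for this sketch, not an established method; the depths 3 and 50 come from the example in the text.

```python
def choose_rerank_depth(
    first_stage_scores: list[float],
    min_depth: int = 3,
    max_depth: int = 50,
    margin: float = 0.15,
) -> int:
    """Pick how many candidates to re-rank, given scores sorted in descending order."""
    n = len(first_stage_scores)
    if n <= min_depth:
        return n
    # A large drop right after the top min_depth results suggests the first
    # stage is already confident, so a shallow re-rank is enough.
    gap = first_stage_scores[min_depth - 1] - first_stage_scores[min_depth]
    if gap >= margin:
        return min_depth
    return min(max_depth, n)


confident = choose_rerank_depth([0.90, 0.88, 0.87, 0.50, 0.48, 0.45])
uncertain = choose_rerank_depth([0.60, 0.59, 0.58, 0.57, 0.56])
```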

Most practically, enterprises are moving toward ensemble ranking—combining multiple re-ranking signals (cross-encoder scores, citation counts, temporal freshness, user feedback) into a single ranking score. This approach is more robust than any single model and handles edge cases better.
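
A minimal way to combine such signals is a weighted sum over min-max normalized values, sketched below. The signal names, the sample values, and the weights are invented for illustration; in practice the weights would be tuned against labeled data or learned.

```python
def min_max(values: list[float]) -> list[float]:
    # Rescale a signal to [0, 1] so signals with different units are comparable.
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def ensemble_rank(
    doc_ids: list[str],
    signals: dict[str, list[float]],
    weights: dict[str, float],
) -> list[tuple[str, float]]:
    """Combine several per-document signals into one ranking score."""
    normalized = {name: min_max(vals) for name, vals in signals.items()}
    combined = []
    for i, doc_id in enumerate(doc_ids):
        score = sum(weights[name] * normalized[name][i] for name in weights)
        combined.append((doc_id, score))
    return sorted(combined, key=lambda item: item[1], reverse=True)


# Hypothetical signals for three documents.
ranked = ensemble_rank(
    doc_ids=["a", "b", "c"],
    signals={
        "cross_encoder": [2.1, 1.9, -0.5],
        "citations": [3.0, 40.0, 1.0],
        "freshness": [0.2, 0.9, 0.5],
    },
    weights={"cross_encoder": 0.7, "citations": 0.2, "freshness": 0.1},
)
order = [doc_id for doc_id, _ in ranked]
```

Here document "b" overtakes "a" despite a slightly lower cross-encoder score, because it is far more cited and more recent.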

From Theory to Your Production System

If your RAG system is plateauing at 70-75% accuracy, the bottleneck is probably not your retriever or your LLM. It’s the ranking step between them. You’re feeding your model contaminated context.

The fix is implementable in weeks, not months. Most enterprises begin by integrating an open-source cross-encoder model (cross-encoder/ms-marco-MiniLM-L-12-v2 is a reasonable first choice) into their existing RAG pipeline. You’ll add 150-250ms to query latency and gain a 15-25% accuracy improvement before any domain fine-tuning. From there, you can fine-tune on your domain, experiment with re-ranking depth, and optimize latency through batching and GPU acceleration.

The infrastructure cost is minimal—a modest GPU handles re-ranking for thousands of queries daily. The returns are massive: production-grade RAG systems that actually ground LLM outputs in retrieved context instead of hallucinating plausible but false information.

Your retrieval pipeline is already working. Your LLM is already capable. The missing piece is the bridge between them—and that bridge is built with cross-encoder re-ranking. Deploy it, measure the accuracy gain, and watch your RAG system finally scale past the semantic ceiling that’s kept it locked at the pilot stage.

Ready to implement cross-encoder re-ranking in your system? Start by auditing your current retrieval accuracy with and without re-ranking on 100 representative queries from your domain. This 2-hour exercise will show you exactly how much accuracy you’re leaving on the table. Then, integrate your first cross-encoder model and measure again. The data will tell you whether re-ranking is worth the latency trade-off for your specific use case—and in most enterprise scenarios, it will be.
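
The audit described above can be run with a few lines of evaluation code. The sketch below computes precision@k and mean reciprocal rank (MRR) for a baseline run and a re-ranked run; the document IDs and relevance labels are toy data, and the function names are hypothetical.

```python
def precision_at_k(ranked_ids: list[str], relevant_ids: set[str], k: int) -> float:
    # Fraction of the top-k results that are labeled relevant.
    return sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids) / k


def reciprocal_rank(ranked_ids: list[str], relevant_ids: set[str]) -> float:
    # 1 / position of the first relevant result, or 0 if none was retrieved.
    for position, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / position
    return 0.0


def evaluate(runs: list[tuple[list[str], set[str]]], k: int = 5) -> dict[str, float]:
    """Average metrics over (ranked_ids, relevant_ids) pairs, one per query."""
    n = len(runs)
    return {
        "precision_at_k": sum(precision_at_k(r, rel, k) for r, rel in runs) / n,
        "mrr": sum(reciprocal_rank(r, rel) for r, rel in runs) / n,
    }


# Two toy queries; a real audit would use around 100 labeled queries.
baseline = [
    (["d3", "d1", "d2"], {"d1"}),
    (["d5", "d6", "d4"], {"d4"}),
]
reranked = [
    (["d1", "d3", "d2"], {"d1"}),
    (["d4", "d5", "d6"], {"d4"}),
]
before = evaluate(baseline, k=2)
after = evaluate(reranked, k=2)
```

Running the same labeled queries through both configurations keeps the comparison fair, since the only variable is the ranking step.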