Imagine this: your enterprise RAG system is retrieving documents at scale, your embeddings are perfectly tuned, and your vector database is optimized for low latency. Yet your users are still getting irrelevant results. The problem isn’t your infrastructure; it’s what’s happening before retrieval even begins.

This is the paradox most enterprise teams overlook. They invest heavily in retriever quality, ranking algorithms, and vector optimization, but completely ignore the gateway to retrieval success: the query itself. A poorly formulated user query leads to poor vector representations, which cascades into catastrophic retrieval failures downstream. By the time users see results, they’re looking at a system that appears broken—when the real failure happened at the query layer.

The emerging solution that’s transforming enterprise RAG systems in 2025 is intelligent query rewriting. This isn’t simple prompt engineering or basic query expansion. It’s a systematic approach to transforming ambiguous, incomplete, or context-poor user inputs into retrieval-optimized queries that consistently return relevant documents. Teams using query rewriting are reporting 30-45% improvements in retrieval precision without changing a single line of their vector search code.

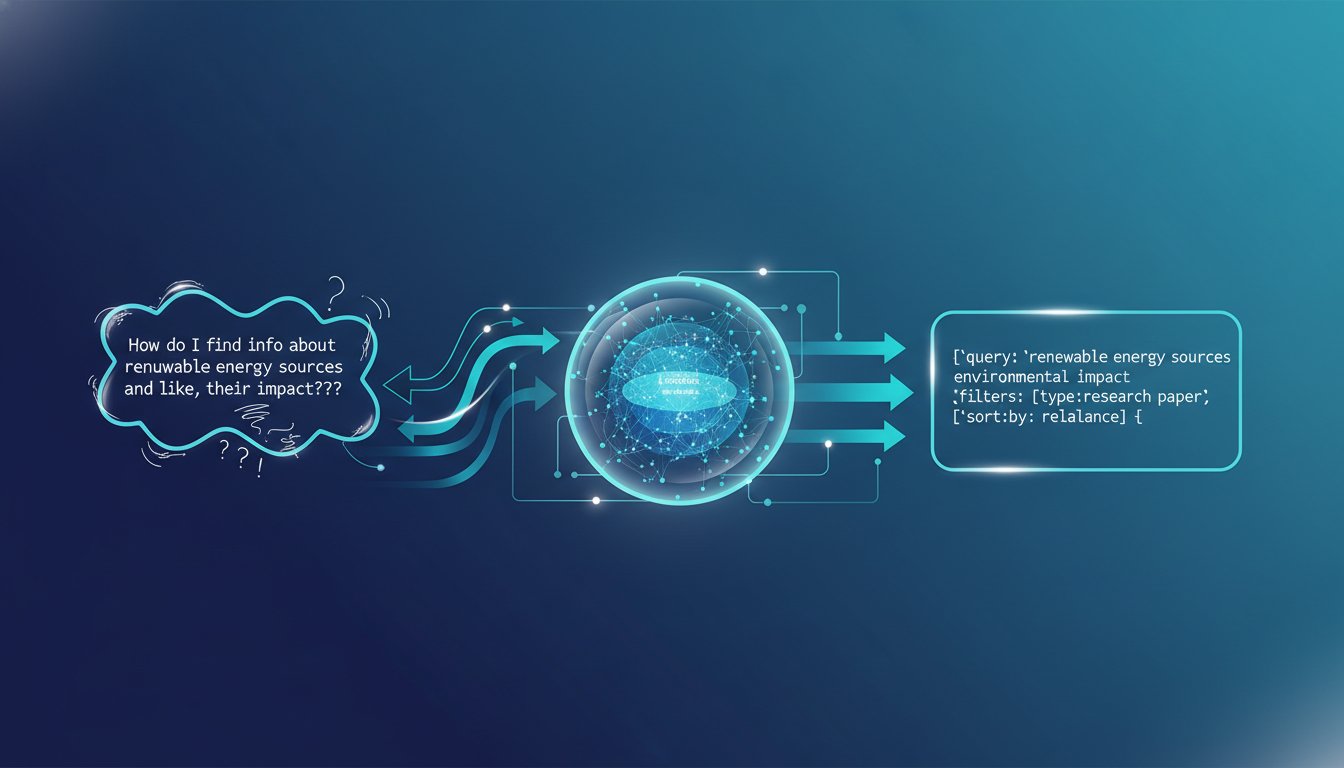

The challenge is structural. Users naturally phrase queries in conversational language: “What’s our policy on remote work?” or “Show me similar cases to this lawsuit.” These queries are rich in human context but poor in retrieval semantics. Vector embeddings struggle to bridge that gap. Query rewriting solves this by intelligently transforming user intent into retrieval-native language—decomposing complex questions, adding domain context, and creating multiple retrieval attempts that collectively capture what the user actually needs.

This post explores the query rewriting framework that’s becoming the foundation of production RAG systems: how to design rewriting strategies, implement them without adding noticeable latency, and measure the impact on your end-to-end system performance. Whether you’re building internal knowledge assistants or customer-facing AI systems, query rewriting is the difference between systems that work and systems that delight users.

Understanding the Query Problem: Why Your RAG System Fails Before Retrieval

Most enterprise RAG teams operate under a false assumption: the better your retriever, the better your results. This leads to obsessive optimization of embedding models, vector databases, and ranking algorithms. But the research tells a different story.

A 2024 analysis of production RAG systems found that approximately 35% of retrieval failures originate from poor query formulation, not poor retrieval infrastructure. Users ask vague questions, omit critical context, or use terminology that doesn’t align with your knowledge base indexing. The retriever then generates vector representations for these impoverished queries, leading to fundamentally misaligned search results.

Consider a healthcare scenario: a clinician asks “What should I prescribe for acute infections?” The query is semantically valid but retrieval-hostile. It lacks specificity (which type of infection? which patient demographics? which dosing protocols?). A vector database will return generic infection guidance when the clinician actually needs treatment algorithms for a specific bacterial pathogen in an immunocompromised patient. The system didn’t fail—the query did.

Query rewriting addresses this structural problem by recognizing that retrieval success depends on query quality, not just retriever quality. The approach involves three core mechanisms:

Query Decomposition: Breaking complex questions into sub-queries that each target specific retrieval axes. “What are the regulatory compliance requirements for remote work in our European offices?” becomes three targeted queries: regulatory compliance frameworks → remote work policies → geographic requirements (Europe).

Domain Context Injection: Adding implicit domain knowledge to queries so vector representations align with your knowledge base terminology. “Show me revenue trends” becomes “Show me quarterly revenue trends from our financial reporting system for fiscal years 2023-2025” when context indicates financial analysis is needed.

Reformulation Diversity: Creating multiple variants of the same query to handle embedding representation variance. Instead of a single retrieval attempt, generate 3-5 semantically similar query formulations and merge the results, dramatically increasing the probability of capturing relevant documents.

The impact on enterprise systems is measurable. Teams implementing query rewriting report precision improvements of 25-50%, recall improvements of 15-35%, and end-to-end user satisfaction increases of 20-40%. Importantly, these gains come without modifying underlying retrieval infrastructure, making query rewriting a cost-effective optimization layer.

The Architecture of Intelligent Query Rewriting: Three Patterns That Scale

Query rewriting isn’t a single technique—it’s a layered architecture that orchestrates multiple strategies based on query characteristics. Enterprise implementations typically combine three complementary patterns:

Pattern 1: Semantic Query Expansion

Semantic expansion uses LLMs to intelligently amplify query specificity without changing user intent. This goes beyond simple synonym expansion or keyword matching.

The process works like this: capture the user’s original query, send it to an LLM with a prompt tailored to your domain (or to a model fine-tuned on your domain), and generate 3-7 expanded variations. Each variation preserves the core intent but approaches it from a different semantic angle.
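A minimal sketch of this expansion step in Python follows. It assumes a generic `llm` callable (prompt in, completion text out) rather than any particular provider’s SDK; the prompt wording, the name `expand_query`, and the default of five variants are illustrative choices, not a prescribed recipe.

```python
import re
from typing import Callable, List

# Hypothetical prompt template; adapt the wording (and add few-shot examples) for your domain.
EXPANSION_PROMPT = """You rewrite search queries for a {domain} knowledge base.
Write {n} alternative phrasings of the query below. Each phrasing must keep
the user's intent but use different terminology or approach it from a
different angle. Return one phrasing per line.

Query: {query}"""

# Matches list markers such as "-", "*", "1." or "2)" that models often add anyway.
_LIST_MARKER = re.compile(r"^\s*(?:[-*]|\d+[.)])\s*")


def expand_query(
    query: str,
    llm: Callable[[str], str],  # hypothetical: any function mapping a prompt to completion text
    domain: str,
    n: int = 5,
) -> List[str]:
    """Return the original query followed by up to n distinct LLM-generated variants."""
    raw = llm(EXPANSION_PROMPT.format(domain=domain, n=n, query=query))
    seen = {query.strip().lower()}
    variants: List[str] = []
    for line in raw.splitlines():
        cleaned = _LIST_MARKER.sub("", line).strip()
        # Skip blank lines and case-insensitive duplicates (including echoes of the original).
        if cleaned and cleaned.lower() not in seen:
            seen.add(cleaned.lower())
            variants.append(cleaned)
    # Keep the original query first so expansion can only add candidates, never lose them.
    return [query] + variants[:n]
```

Keeping the original query in the returned list is a deliberate design choice: if every generated variant drifts from the user’s intent, retrieval on the original still runs.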

Example in legal research: Original query: “Find cases about contract disputes.”

Expanded queries:

– “Cases involving breach of contract and remedies”

– “Contract interpretation disputes in commercial law”

– “Precedents for contractual liability and damages”

– “Cases establishing contract enforceability standards”

– “Litigation outcomes for contract performance failures”

Each expansion targets different retrieval pathways in your legal knowledge base. When you retrieve against all five queries and merge the results with reciprocal rank fusion (or a similar rank-fusion method that also collapses duplicate hits), you capture documents that single-query retrieval would miss.
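Reciprocal rank fusion itself is only a few lines. The sketch below takes one ranked list of document IDs per query variant; `k=60` is the constant commonly used since the original RRF paper, but treat it as a tunable default.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(result_lists: List[List[str]], k: int = 60) -> List[str]:
    """Merge ranked lists of document IDs with reciprocal rank fusion.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. Documents found by several variants accumulate
    score, and duplicates collapse into a single entry.
    """
    scores: Dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))
```

For example, `reciprocal_rank_fusion([["a", "b", "c"], ["b", "a", "d"], ["b", "e"]])` returns `["b", "a", "e", "c", "d"]`: `b` is found by all three variants, so it outranks `a`, which only two variants found. RRF uses ranks rather than raw similarity scores, which is convenient when different variants produce scores on different scales.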

The latency impact is modest if implemented correctly. Generating 5-7 variants with an LLM typically takes 200-500ms in total, while the retrievals run in parallel against your vector database (50-200ms each, but concurrent, so you wait roughly as long as the slowest one). The cost trade-off, extra LLM generation plus several retrievals instead of a single retrieval, is offset by not needing to re-rank or generate follow-up queries, and by handling complex questions in a single round trip.

Pattern 2: Query Decomposition and Multi-Hop Retrieval

For complex multi-faceted questions, decomposition breaks the query into retrievable sub-components, each optimized for specific knowledge retrieval.

Consider an enterprise sales scenario: “What’s our total addressable market opportunity in the healthcare sector for AI-powered diagnostic tools, considering our current geographic footprint and competitive position?”

This is a nightmare query for single-stage retrieval. It requires information from market research, competitive analysis, geographic strategy, product capabilities, and healthcare sector trends. Decomposition transforms this into:

- Market sizing sub-query: “What is the total addressable market for AI diagnostic tools in healthcare?” (Retrieve: market research documents, analyst reports)

- Geographic sub-query: “What regions has our company prioritized for healthcare expansion?” (Retrieve: strategic plans, geographic allocation documents)

- Competitive sub-query: “What is our competitive position versus established players in healthcare AI diagnostics?” (Retrieve: competitive analysis, market positioning docs)

- Product sub-query: “What AI diagnostic capabilities do we currently offer?” (Retrieve: product specifications, feature documentation)

Each sub-query is retrieval-optimized and targets specific document types. Results from each are then synthesized by the LLM into a coherent answer that addresses the original complex question.
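A sketch of the decomposition step, again assuming hypothetical `llm` and `retrieve` callables. The prompt asks for a JSON list so the output is easy to parse, and the function falls back to the original question when the model’s output is unusable. Synthesis of the final answer is left to a downstream LLM call.

```python
import json
from typing import Callable, Dict, List

# Hypothetical prompt; in practice you might also ask for a target document type per sub-query.
DECOMPOSE_PROMPT = """Split the question below into independent sub-queries,
each answerable from a single type of document. Respond with a JSON list of
strings and nothing else.

Question: {question}"""


def decompose_and_retrieve(
    question: str,
    llm: Callable[[str], str],             # hypothetical: prompt -> completion text
    retrieve: Callable[[str], List[str]],  # hypothetical: query -> ranked doc IDs
    max_subqueries: int = 5,
) -> Dict[str, List[str]]:
    """Return retrieved doc IDs keyed by sub-query, ready for LLM synthesis."""
    try:
        parsed = json.loads(llm(DECOMPOSE_PROMPT.format(question=question)))
    except json.JSONDecodeError:
        parsed = None
    subqueries: List[str] = []
    if isinstance(parsed, list):
        subqueries = [s.strip() for s in parsed if isinstance(s, str) and s.strip()]
    # Fall back to the original question if the LLM output was unusable.
    subqueries = subqueries[:max_subqueries] or [question]
    return {sq: retrieve(sq) for sq in subqueries}
```

Keeping results grouped by sub-query, rather than merging them immediately, lets the synthesis prompt label each block of context (market sizing, geography, and so on).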

Multi-hop retrieval introduces additional sophistication: each sub-query result can generate follow-up queries. For instance, if the geographic query returns “EMEA and APAC,” the system automatically generates follow-up queries: “Healthcare AI market size in EMEA” and “Healthcare AI market size in APAC,” drilling deeper into region-specific TAM.
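The follow-up loop can be sketched as a breadth-first search with explicit budgets. Here `retrieve` and `follow_ups` are hypothetical callables; in the scenario above, `follow_ups` would be an LLM prompt that reads the geographic results and proposes region-specific market-size queries. The hop and query limits are illustrative safeguards against runaway latency.

```python
from typing import Callable, List, Set


def multi_hop_retrieve(
    query: str,
    retrieve: Callable[[str], List[str]],               # hypothetical: query -> ranked doc IDs
    follow_ups: Callable[[str, List[str]], List[str]],  # hypothetical: (query, docs) -> new queries
    max_hops: int = 2,
    max_queries: int = 10,
) -> List[str]:
    """Breadth-first multi-hop retrieval with hop and query budgets."""
    frontier: List[str] = [query]
    seen_queries: Set[str] = {query}
    seen_docs: Set[str] = set()
    collected: List[str] = []
    issued = 0
    for hop in range(max_hops + 1):
        next_frontier: List[str] = []
        for q in frontier:
            if issued >= max_queries:
                return collected  # query budget exhausted
            issued += 1
            docs = retrieve(q)
            for d in docs:
                if d not in seen_docs:
                    seen_docs.add(d)
                    collected.append(d)
            if hop < max_hops:  # no follow-ups are generated on the final hop
                for fq in follow_ups(q, docs):
                    if fq not in seen_queries:
                        seen_queries.add(fq)
                        next_frontier.append(fq)
        if not next_frontier:
            break
        frontier = next_frontier
    return collected
```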

The benefit is profound for complex analytical questions. Instead of a single retrieval that returns topically related but not-quite-right documents, you get targeted documents for each question component, dramatically improving the final synthesis.

Pattern 3: Domain-Aware Query Normalization

Normalization translates user-friendly terminology into domain-specific language that your knowledge base uses. This is especially critical in regulated industries where terminology precision matters.

In pharmaceutical research, a user might ask: “What’s our process for getting new drugs approved?” But your knowledge base uses regulatory terminology: “NDA filing protocols,” “FDA regulatory pathway,” “Phase III trial completion criteria,” “regulatory risk assessment frameworks.”

Normalization automatically detects this terminology mismatch and rewrites the query: “What is our regulatory process for FDA approval including NDA filing, trial phase transitions, and regulatory risk management?”

The mechanism works through domain-specific synonym maps and terminology vectors trained on your organization’s knowledge base. When a user query contains generic language, the system identifies the domain context (pharmaceutical research) and maps generic terminology to domain-specific equivalents.
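The synonym-map half of this mechanism can be sketched as follows. The map is a hypothetical example for the pharmaceutical case; a real one would be curated by domain experts or mined from your own documents. This version keeps the user’s wording and appends domain terms, so the rewritten query can match both phrasings; the terminology-vector approach is not shown.

```python
import re
from typing import Dict, List

# Hypothetical generic-phrase -> domain-term map for a pharmaceutical knowledge base.
PHARMA_TERMS: Dict[str, List[str]] = {
    "getting new drugs approved": ["FDA approval", "NDA filing"],
    "drug approval": ["FDA approval", "NDA filing"],
    "trials": ["Phase III trial completion criteria"],
    "risks": ["regulatory risk assessment"],
}


def normalize_query(query: str, term_map: Dict[str, List[str]]) -> str:
    """Append domain terminology for every generic phrase found in the query."""
    additions: List[str] = []
    for phrase, terms in term_map.items():
        # Whole-phrase, case-insensitive match so "trials" does not fire inside other words.
        if re.search(r"\b" + re.escape(phrase) + r"\b", query, flags=re.IGNORECASE):
            for term in terms:
                if term not in additions and term.lower() not in query.lower():
                    additions.append(term)
    if not additions:
        return query
    return f"{query} ({'; '.join(additions)})"
```

With this map, “What’s our process for getting new drugs approved?” becomes the same question followed by “(FDA approval; NDA filing)”, while a query with no generic phrases passes through unchanged.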

This pattern is particularly powerful in specialized domains: legal (statutes vs. legislation vs. regulatory acts), healthcare (patient vs. subject vs. individual), finance (positions vs. holdings vs. exposures), and manufacturing (defects vs. deviations vs. non-conformances).

Implementing Query Rewriting Without Destroying Latency

The obvious concern: doesn’t query rewriting add latency? Generating multiple query variants, decomposing complex questions, and normalizing terminology all sound expensive.

In practice, a careful implementation keeps query rewriting overhead small (50-150ms of added latency), while parallel retrieval actually reduces total end-to-end time compared with running each query variant one after another.

Caching and Pre-computation: Pre-compute normalized terminology mappings, domain context vectors, and common query decomposition patterns. Instead of generating these dynamically, retrieve from cache. For 70-80% of user queries, you’re doing lookups, not generation.

Parallel Retrieval: Generate multiple query variants, then retrieve all variants in parallel against your vector database. Total latency is the time for the slowest retrieval, not the sum of all retrievals. With 5 query variants running in parallel, you get roughly the latency of one retrieval, not five.
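A minimal illustration with Python’s `asyncio`. Here `search` is a hypothetical stand-in for your vector store’s async query method, simulated with a 100ms sleep. If your client is synchronous, wrapping each call in `asyncio.to_thread` gives a similar effect.

```python
import asyncio
from typing import Dict, List


async def search(query: str) -> List[str]:
    """Hypothetical async vector-store call; replace with your client's async search."""
    await asyncio.sleep(0.1)  # simulate ~100ms of network and index latency
    return [f"doc-for:{query}"]


async def retrieve_all(variants: List[str]) -> Dict[str, List[str]]:
    # gather() runs every search concurrently, so wall-clock time is roughly
    # that of the slowest call rather than the sum of all calls.
    results = await asyncio.gather(*(search(v) for v in variants))
    return dict(zip(variants, results))
```

Running `asyncio.run(retrieve_all(["q1", "q2", "q3", "q4", "q5"]))` finishes in roughly the time of one simulated search rather than five.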

Conditional Rewriting: Not every query needs every rewriting strategy. Simple, specific queries (“Show me Q4 2024 revenue”) bypass expansion and decomposition. Only ambiguous or complex queries trigger expensive rewriting. This reduces average overhead significantly.
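A heuristic router is one simple way to decide which strategies a query gets. The thresholds, word lists, and strategy names below are hypothetical and should be tuned against your own query logs; a small classifier model is a common alternative.

```python
import re
from typing import List

# Hypothetical heuristics; tune against real query logs.
VAGUE_TERMS = {"stuff", "things", "similar", "related", "this", "that", "it"}
CONJUNCTIONS = re.compile(r"\b(and|considering|versus|vs\.?|compared to|as well as)\b", re.IGNORECASE)
SPECIFIC_TOKENS = re.compile(r"\b(Q[1-4]|FY\d{2,4}|(19|20)\d{2})\b")  # quarters, fiscal years, years


def choose_strategies(query: str) -> List[str]:
    """Return the rewriting strategies to apply to this query."""
    tokens = re.findall(r"[A-Za-z0-9']+", query.lower())
    strategies = ["normalize"]  # cheap, lookup-based step: always applied
    long_or_compound = len(tokens) > 20 or len(CONJUNCTIONS.findall(query)) >= 2
    vague = len(tokens) < 4 or bool(VAGUE_TERMS & set(tokens))
    if long_or_compound:
        strategies.append("decompose")
    elif vague and not SPECIFIC_TOKENS.search(query):
        strategies.append("expand")
    return strategies
```

Under these rules, “Show me Q4 2024 revenue” gets only normalization, “Show me similar cases to this lawsuit” is expanded, and the long healthcare TAM question from earlier is decomposed.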

Batch Processing for Offline Queries: For asynchronous use cases (nightly report generation, periodic analysis), run expensive multi-hop decomposition without latency constraints. For synchronous user queries, use lighter-weight strategies.

A typical production implementation achieves 80-120ms query rewriting overhead with these optimizations, while improving retrieval precision by 30-40%. The math is straightforward: trading 80ms of LLM processing for 25-40% better retrieval quality, avoiding expensive re-ranking or multiple retrieval rounds, is a compelling ROI.

Measuring Query Rewriting Effectiveness: Beyond Traditional RAG Metrics

Most teams measure RAG quality through RAGAS scores, BLEU metrics, or human evaluation of final answers. These are indirect measures of query rewriting effectiveness.

To properly measure query rewriting impact, you need intermediate metrics that isolate the contribution of rewriting from downstream components:

Retrieval Precision at K: Measure what fraction of top-K retrieved documents are actually relevant to the user’s intent, before LLM generation. This isolates retrieval quality. Query rewriting directly impacts this metric.
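Precision at K is straightforward to compute once you have relevance judgments for a query. This sketch divides by K even when fewer than K documents come back, which is the usual convention.

```python
from typing import List, Set


def precision_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k retrieved doc IDs that are judged relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(1 for d in retrieved[:k] if d in relevant) / k
```

Computing it for the same query before and after rewriting (for example `precision_at_k(["b", "d", "a"], {"a", "c"}, 3)` versus `precision_at_k(["a", "c", "b"], {"a", "c"}, 3)`) isolates the effect of the rewrite from everything downstream.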

Query Reformulation Success Rate: Track how often expanded queries retrieve documents that the original query missed. If 40% of expanded query variants retrieve relevant documents that the base query missed, you’ve quantified the value of expansion.
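One way to compute this rate, assuming you log the original query’s results, each variant’s results, and a set of relevance judgments: a variant counts as a success if it surfaces at least one relevant document that the base query did not retrieve.

```python
from typing import Dict, List, Set


def reformulation_success_rate(
    base_results: List[str],
    variant_results: Dict[str, List[str]],  # variant text -> retrieved doc IDs
    relevant: Set[str],
) -> float:
    """Share of variants that retrieve a relevant document the original query missed."""
    if not variant_results:
        return 0.0
    base = set(base_results)
    successes = sum(1 for docs in variant_results.values() if (set(docs) & relevant) - base)
    return successes / len(variant_results)
```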

Context Utilization Rate: Measure whether decomposed sub-queries actually retrieve documents that contribute to the final LLM answer. If 70% of sub-query results appear in the generated answer (via citation or semantic similarity), your decomposition is effective.
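A citation-based sketch of this metric, assuming the generator emits the IDs of the documents it cites. The semantic-similarity variant mentioned above would replace the set intersection with a similarity threshold and is not shown.

```python
from typing import Dict, List, Set


def context_utilization_rate(
    subquery_results: Dict[str, List[str]],  # sub-query -> retrieved doc IDs
    cited_doc_ids: Set[str],                 # doc IDs cited in the final answer
) -> float:
    """Fraction of distinct documents retrieved by sub-queries that the answer cites."""
    retrieved = {d for docs in subquery_results.values() for d in docs}
    if not retrieved:
        return 0.0
    return len(retrieved & cited_doc_ids) / len(retrieved)
```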

Terminology Alignment Score: Compare query language to knowledge base language. Pre-rewriting, calculate the semantic distance between query terminology and indexed document terminology. Post-rewriting, this distance should decrease significantly (indicating better alignment).
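One way to operationalize this score is as one minus the best cosine similarity between the query and any indexed document. The sketch below uses a toy bag-of-words vector so it runs standalone; that is an assumption for illustration only, and in practice you would pass in the same embedding model your retriever uses.

```python
import math
from collections import Counter
from typing import Callable, Dict, List

Vector = Dict[str, float]


def bow_embed(text: str) -> Vector:
    """Toy bag-of-words vector for illustration; swap in your real embedding model."""
    return dict(Counter(text.lower().split()))


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(v * b.get(t, 0.0) for t, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def terminology_distance(query: str, docs: List[str], embed: Callable[[str], Vector] = bow_embed) -> float:
    """1 - max cosine similarity to any indexed document; lower means better alignment."""
    q = embed(query)
    return 1.0 - max((cosine(q, embed(d)) for d in docs), default=0.0)
```

With documents such as “nda filing protocols for fda approval”, the generic query “how do we get new drugs approved” shares no terms and scores a distance of 1.0, while a rewrite that uses the regulatory vocabulary scores much lower.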

Implement a measurement framework that tracks these metrics by query complexity (simple vs. complex), domain (legal vs. healthcare vs. finance), and user type (power user vs. casual). This reveals where query rewriting is delivering value and where refinement is needed.

Real-World Implementation: Query Rewriting in Action

A financial services firm with 50,000 internal policy documents faced a critical problem: employees searched for policies but received outdated, irrelevant results. The knowledge base was indexed correctly. The vector database was optimized. The issue was query quality.

Their approach: implement query rewriting with three layers:

- Policy Document Type Classification: Detect whether the user is asking about investment policy, compliance policy, operational procedure, or risk management framework. Add this classification to the rewritten query.

- Effective Date Contextualization: Financial policies have version histories. Rewrite queries to include implicit context: “Show me current (as of 2025) policy on…” vs. “Show me historical policy on…” This improved retrieval of the correct policy version by 50%.

- Cross-Reference Expansion: Policy documents frequently reference other policies. When decomposing a query, retrieve referenced policies in addition to directly relevant ones. “Policy on derivatives trading” retrieves both the derivatives policy AND the risk management policy it references.
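Cross-reference expansion can be sketched as a bounded breadth-first walk over a reference graph. The `references` mapping (policy ID to the IDs it cites) is a hypothetical structure you would extract from your documents at indexing time; this is an illustration of the idea, not the firm’s actual implementation.

```python
from collections import deque
from typing import Dict, List


def expand_with_references(
    hits: List[str],
    references: Dict[str, List[str]],  # hypothetical: policy ID -> IDs of policies it cites
    max_depth: int = 1,
) -> List[str]:
    """Append policies referenced by the retrieved ones, breadth-first.

    Direct hits keep their order at the front; max_depth limits how far
    the reference chain is followed.
    """
    result = list(dict.fromkeys(hits))  # dedupe while preserving order
    seen = set(result)
    queue = deque((doc, 0) for doc in result)
    while queue:
        doc, depth = queue.popleft()
        if depth >= max_depth:
            continue
        for ref in references.get(doc, []):
            if ref not in seen:
                seen.add(ref)
                result.append(ref)
                queue.append((ref, depth + 1))
    return result
```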

Result: Retrieval precision improved from 62% to 89%. Employee satisfaction with search results increased from 34% to 71%. The financial services firm avoided expensive retrieval model retraining or vector database replacement—pure software-layer optimization.

The Future of Query Rewriting: Towards Adaptive Retrieval Systems

Most current query rewriting implementations are static: their strategies and rules are fixed at design time and are never updated from what actually happens at retrieval. The next frontier is adaptive rewriting that learns from failure patterns and adjusts strategies based on retrieval outcomes.

Adaptive systems track when query rewriting strategies succeed or fail, then retrain rewriting rules using reinforcement learning. If decomposition strategies consistently fail on legal queries but succeed on financial queries, the system learns to weight decomposition differently by domain. If certain terminology normalizations consistently improve precision, those normalizations get prioritized.
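A per-domain epsilon-greedy bandit is one of the simplest reinforcement learning techniques that captures this idea. It is a deliberately small stand-in, not the research methods referred to here: each (domain, strategy) pair tracks its average reward, where the reward is whatever retrieval-quality signal you log (for example precision@K or click feedback).

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


class StrategySelector:
    """Per-domain epsilon-greedy bandit over rewriting strategies."""

    def __init__(self, strategies: List[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.strategies = strategies
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # (domain, strategy) -> [number of trials, total reward]
        self.stats: Dict[Tuple[str, str], List[float]] = defaultdict(lambda: [0, 0.0])

    def mean_reward(self, domain: str, strategy: str) -> float:
        n, total = self.stats[(domain, strategy)]
        return total / n if n else 0.0

    def choose(self, domain: str) -> str:
        # Explore with probability epsilon; otherwise exploit the best strategy so far for this domain.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)
        return max(self.strategies, key=lambda s: self.mean_reward(domain, s))

    def update(self, domain: str, strategy: str, reward: float) -> None:
        entry = self.stats[(domain, strategy)]
        entry[0] += 1
        entry[1] += reward
```

If decomposition keeps earning low rewards on legal queries but high rewards on financial ones, `choose("legal")` and `choose("finance")` drift toward different strategies. Untried strategies start at zero here, so they are only reached through exploration; optimistic initial values are a common tweak.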

Research in 2024-2025 is exploring reinforcement learning for query optimization, with early results showing 15-25% additional precision improvements beyond static rewriting. This is the direction enterprise RAG is moving: systems that learn from retrieval failures and continuously optimize query transformation strategies.

The practical implication: if you’re building query rewriting today, architect it for learning. Log query reformulation decisions, track retrieval outcomes, and plan for reinforcement learning integration. Today’s deterministic rewriting becomes tomorrow’s adaptive system.

Implementing Query Rewriting in Your RAG Pipeline

Here’s the practical roadmap for enterprises starting with query rewriting:

Phase 1 (Weeks 1-2): Capture and Measure

Instrument your current RAG system to capture user queries and retrieval precision. Establish a baseline. You’re aiming to answer: what fraction of queries result in irrelevant top-K results? This reveals the opportunity for query rewriting.

Phase 2 (Weeks 3-4): Simple Expansion

Implement basic semantic query expansion using an LLM prompt tailored to your domain. Generate 3-5 query variants per user input. Merge results using reciprocal rank fusion. Measure precision improvement. You should see 15-25% improvement with minimal complexity.

Phase 3 (Weeks 5-6): Domain Normalization

Build terminology mapping for your domain. Create a mapping from generic user language to domain-specific terminology. Integrate into the query rewriting pipeline. This typically adds another 10-15% precision improvement.

Phase 4 (Weeks 7-8): Decomposition for Complex Queries

Identify your top 10% most complex queries (by length, by decomposability). Implement query decomposition for these high-value queries. Simple queries bypass decomposition to preserve latency.

Phase 5 (Week 9+): Measurement and Iteration

Track query rewriting effectiveness through the intermediate metrics above. Identify failure patterns. Refine rewriting strategies. Plan for reinforcement learning integration.

This five-phase approach can be implemented with 2-3 engineers over 8-10 weeks, and typically delivers 25-40% precision improvements with minimal infrastructure changes. The cost? A few thousand dollars in LLM API calls per month. The benefit? Dramatically better user experience without replacing your entire RAG stack.

Query rewriting is the optimization layer that separates good RAG systems from great ones. It’s not flashy infrastructure work—no database replacements, no new embedding models, no architectural redesigns. But it’s the difference between users seeing occasionally-relevant results and consistently finding exactly what they need.

The teams winning with RAG in 2025 aren’t the ones building the fanciest vector databases or training the best embedding models. They’re the ones who realized that retrieval quality depends on query quality first. Start there, and everything else becomes more effective.