Your CTO walks into the boardroom with a $500K budget proposal: fine-tune your LLM on proprietary data to “lock in” company knowledge. It sounds strategic. It sounds permanent. It sounds like progress. But six weeks later, your legal team updates compliance policy, your product documentation ships with critical changes, and your expensively fine-tuned model is already stale—rendering that half-million-dollar investment obsolete before it solves a single production problem.

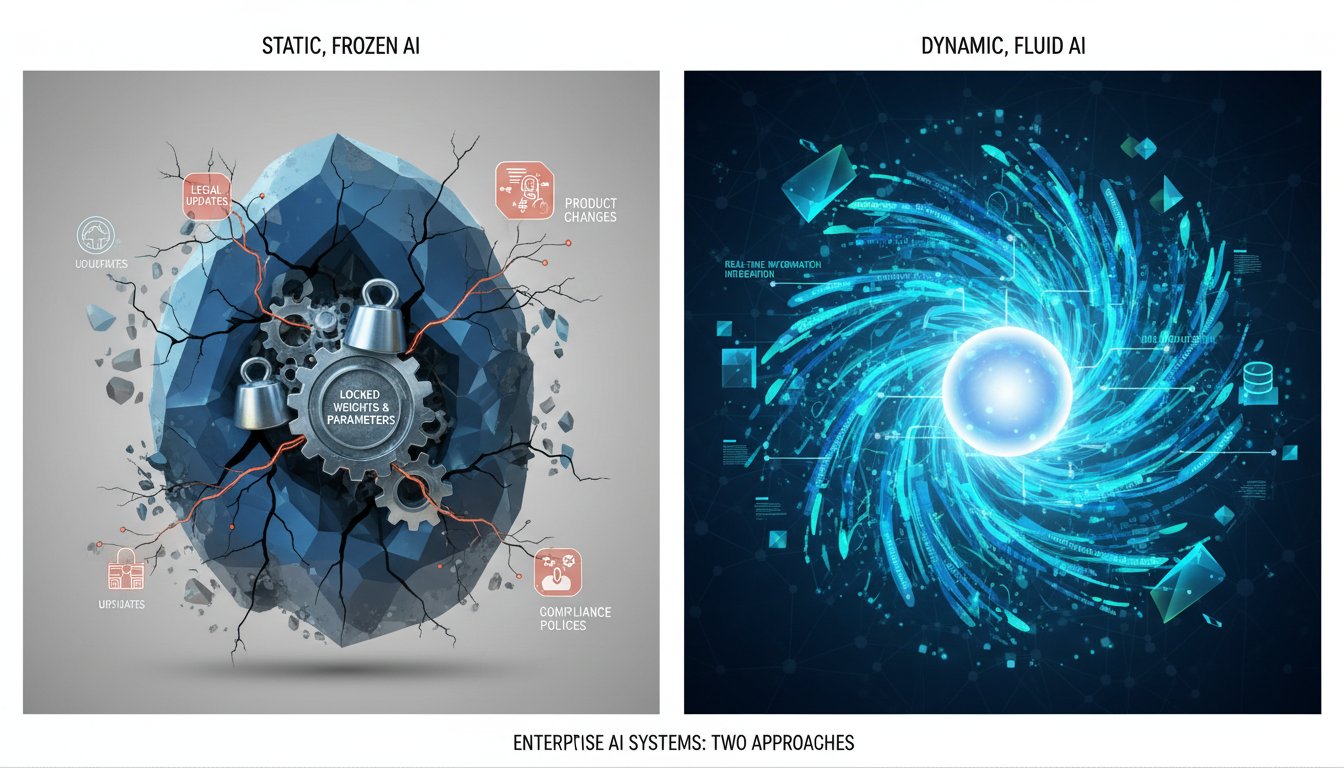

This is the fine-tuning illusion: the seductive belief that embedding knowledge directly into your model’s weights delivers competitive advantage. In reality, enterprises adopting this approach are discovering a painful truth: fine-tuning trades adaptability for permanence and scalability for control, and it pays a steep computational cost for both. Meanwhile, their competitors are shipping RAG systems that evolve in real time, scale without retraining, and cost a fraction as much to maintain.

The irony is that the data supporting this shift has been hiding in plain sight. Recent enterprise deployments across financial services, legal tech, and healthcare consistently show the same pattern: organizations that rushed into fine-tuning spend 3-4 times more on model maintenance than those investing in retrieval optimization. Yet most teams still frame the decision as binary, as though static models and dynamic retrieval were competing technologies rather than answers to fundamentally different business needs.

This post reveals why the enterprise market is quietly moving away from fine-tuning toward RAG, the specific metrics that make this shift inevitable, and the decision framework you need to avoid building the next generation of expensive, inflexible AI systems. More importantly, you’ll learn when fine-tuning does make sense—because it absolutely does in specific, high-control scenarios—and how to distinguish those from the 80% of enterprise AI projects where retrieval-augmented generation delivers dramatically better outcomes.

The Hidden Cost of Fine-Tuning: Why Your “Permanent” Knowledge Is Actually Expiring Daily

Fine-tuning feels like the right approach because it promises something seductive: permanent knowledge. You take your LLM, feed it thousands of company documents, adjust the model weights through gradient descent, and emerge with a model that “knows” your business. The training is done. The knowledge is locked in. What could go wrong?

Everything. And it starts the moment your first data point becomes outdated.

The Static Knowledge Problem

Consider a realistic scenario: A financial services firm fine-tunes a GPT-3.5 derivative on their 2024 compliance frameworks, market data, and risk policies. They invest $300K in infrastructure, annotation, and compute. The model ships to production in March 2025. By May, the SEC updates three critical regulations affecting their risk assessment workflows. Their compliance team publishes new internal guidance. A major client contract requires updated handling procedures.

Their fine-tuned model? Still operating on March’s snapshot of knowledge.

Now they face a choice: retrain the model (another $150K, 4-6 weeks of turnaround, and coordination across engineering and compliance teams), or keep serving the outdated model and accept the compliance risk. Most organizations choose to live with the stale model, because retraining is too expensive to do frequently.

In contrast, a RAG-based competitor makes the same changes by updating their knowledge base: a process that takes hours, not weeks, requires no retraining, and never touches the model’s weights.
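To make “updating the knowledge base” concrete, here is a minimal sketch. It assumes a toy in-memory store and bag-of-words cosine similarity in place of a real embedding model and vector database; `KnowledgeBase`, `upsert`, `search`, and the document IDs are hypothetical names for illustration only.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts (stand-in for a real embedding model)
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0


class KnowledgeBase:
    """Hypothetical in-memory store mapping doc_id -> (text, vector)."""

    def __init__(self) -> None:
        self.docs: dict[str, tuple[str, Counter]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # A knowledge update overwrites one entry; no model weights are touched
        self.docs[doc_id] = (text, embed(text))

    def search(self, query: str, k: int = 1) -> list[tuple[str, float]]:
        q = embed(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, (_, vec) in self.docs.items()]
        return sorted(scored, key=lambda hit: hit[1], reverse=True)[:k]


kb = KnowledgeBase()
kb.upsert("risk-policy", "Risk assessment follows the March compliance framework.")
kb.upsert("returns", "Customers can return hardware within 30 days.")

# May: new SEC rules and internal guidance land; the update is a single write
kb.upsert("risk-policy", "Risk assessment follows the May SEC rule updates and new internal guidance.")
print(kb.search("What changed in the May SEC rules?"))
```

The next query after the `upsert` already sees the May text; the equivalent change on the fine-tuned side is a full retraining cycle.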

The Retraining Tax

Research from enterprise AI platforms shows that maintaining a fine-tuned model in production costs approximately $8-12K per retraining cycle. This includes compute infrastructure, data annotation, validation, and deployment coordination. Most enterprises retrain quarterly at minimum (when business data changes seasonally) and often monthly in fast-moving sectors like finance or healthcare.

At a quarterly cadence, that’s $32-48K annually just to keep a single fine-tuned model from becoming completely obsolete. For organizations with multiple models (customer service, compliance, technical support), the number climbs to $200K+ per year, before counting the engineering time spent managing retraining pipelines.

Meanwhile, maintaining a RAG system’s knowledge base costs roughly $500-1,000 monthly for storage, retrieval infrastructure, and embedding updates, or approximately $6-12K annually. A single retraining cycle for a fine-tuned model costs about as much as a full year of RAG knowledge-base maintenance.
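The retraining tax is simple multiplication, which makes it easy to rerun with your own figures. A minimal sketch using the per-cycle and monthly figures quoted above; the three-model, monthly-cadence scenario is an illustrative assumption, not a figure from the text.

```python
def annual_retraining_cost(cost_per_cycle: int, cycles_per_year: int, models: int = 1) -> int:
    """Recurring retraining spend across a fleet of fine-tuned models."""
    return cost_per_cycle * cycles_per_year * models


# Figures from the text: $8-12K per cycle, retrained quarterly
low = annual_retraining_cost(8_000, 4)
high = annual_retraining_cost(12_000, 4)
print(f"One model, quarterly: ${low:,}-${high:,}")

# Assumed scenario: three models retrained monthly at the low-end cycle cost
monthly_fleet = annual_retraining_cost(8_000, 12, models=3)
print(f"Three models, monthly: ${monthly_fleet:,}")

# RAG knowledge-base upkeep at $500-1,000 per month
rag_low, rag_high = 500 * 12, 1_000 * 12
print(f"RAG upkeep: ${rag_low:,}-${rag_high:,}")
```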

The Knowledge Latency Problem

Here’s the hidden killer: even when organizations commit to frequent retraining, they still face knowledge latency—the delay between when information becomes true and when their model “knows” it.

Compare two scenarios:

Fine-tuned Model Timeline:

– 9:00 AM: New product feature launches; compliance team publishes guidance

– 9:30 AM: Your support team needs to answer customer questions about the new feature

– Your fine-tuned model has no knowledge of it. It was trained 6 weeks ago

– 2-week cycle: You schedule a retraining job, gather the new documentation, annotate training data, validate the model, deploy it to production

– 2:00 PM two weeks later: The model finally “knows” about the feature

– Customer frustration: Two weeks of responses that range from incomplete to dangerously incorrect

RAG-based Timeline:

– 9:00 AM: New product feature launches; documentation is published to your knowledge base

– 9:30 AM: A customer asks about the feature

– 0.5 seconds: The RAG system retrieves the relevant documentation and grounds the response in real-time knowledge

– Your answer is accurate and current

The latency difference isn’t theoretical—it’s the difference between a support system that builds customer trust and one that erodes it with every deflection.
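The “grounds the response” step in the RAG timeline is mostly prompt assembly: whatever retrieval returns at 9:30 AM, including a document published at 9:00 AM, is placed in front of the model with instructions to stay within it. A minimal sketch, assuming retrieval has already run; `Doc`, `build_grounded_prompt`, and the “Feature X” document are hypothetical, and the actual LLM call is omitted.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    published: str  # when the document entered the knowledge base
    text: str


def build_grounded_prompt(question: str, retrieved: list[Doc]) -> str:
    # Number each retrieved source so the model can cite it, and restrict it to those sources
    sources = "\n".join(
        f"[{i}] {d.doc_id} (published {d.published}): {d.text}"
        for i, d in enumerate(retrieved, start=1)
    )
    return (
        "Answer using only the sources below and cite them by number. "
        "If the sources do not cover the question, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


# Published at 9:00 AM, retrieved for a customer question at 9:30 AM
launch_doc = Doc("feature-x-launch", "09:00", "Feature X exports reports as PDF or CSV.")
print(build_grounded_prompt("Can Feature X export CSV?", [launch_doc]))
```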

When Fine-Tuning Actually Wins: The High-Control Scenarios

Before declaring fine-tuning dead, let’s acknowledge the truth: there are specific, high-value scenarios where fine-tuning delivers capabilities that RAG simply cannot match.

Scenario 1: Consistent Style and Tone Control

If your organization requires extremely consistent output formatting, tone, or compliance with specific communication guidelines, fine-tuning offers direct control over model behavior. Financial institutions that must maintain specific terminology (“equity” vs. “stock”), legal disclaimers, and communication tone across all outputs benefit from baking these patterns directly into model weights.

RAG can approximate this through prompt engineering, but fine-tuning provides tighter behavioral guarantees. The tradeoff: you lose adaptability for that consistency.
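Fine-tuning for style works by showing the model many examples of the required behavior. A minimal sketch of preparing such examples, assuming a chat-format JSONL layout (a `messages` list with `system`/`user`/`assistant` roles) similar to what OpenAI’s fine-tuning API documents; treat the exact schema as an assumption and check your provider’s docs. The system prompt and disclaimer text are hypothetical, and a real training set would need far more, and more varied, examples than two.

```python
import json

DISCLAIMER = "This is not investment advice."
SYSTEM = "You are the firm's client-communications assistant."


def style_example(question: str, answer: str) -> dict:
    # One chat-format training record; the assistant turn models the house style:
    # "equity" rather than "stock", and the disclaimer on every answer
    return {
        "messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": question},
            {"role": "assistant", "content": f"{answer} {DISCLAIMER}"},
        ]
    }


examples = [
    style_example("How risky are stocks?",
                  "Equity investments can fluctuate in value, including loss of principal."),
    style_example("Should I add more stock to my portfolio?",
                  "Adding equity exposure depends on your risk profile and time horizon."),
]

# JSONL: one JSON object per line
jsonl = "\n".join(json.dumps(ex) for ex in examples)
print(jsonl)
```

Note that these examples teach form, not facts: nothing in them goes stale when policies change.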

Scenario 2: Specialized Domain Reasoning with Constrained Data

In domains where reasoning patterns are highly specialized and external knowledge is limited or expensive to retrieve, fine-tuning can be superior. Example: assigning correct diagnostic codes quickly requires deep pattern recognition learned from thousands of medical records. The knowledge set is stable (diagnostic codes change slowly), the reasoning is domain-specific, and retrieval latency matters for throughput.

Even here, the winning approach is often a hybrid: fine-tuning on historical medical records, plus real-time RAG for updated code sets.

Scenario 3: Offline-First or Low-Latency Inference

If your deployment environment cannot support retrieval infrastructure—think edge devices, offline-first applications, or systems where sub-100ms latency is non-negotiable—fine-tuned models embedded in the deployment environment make sense. All knowledge is baked into weights; no external retrieval required.

But this scenario applies to fewer than 5% of enterprise AI deployments. Most organizations have sufficient network infrastructure for retrieval.

Scenario 4: Proprietary Reasoning Patterns You Cannot Disclose

If your competitive advantage depends on reasoning patterns you cannot expose (imagine a proprietary investment strategy or manufacturing optimization algorithm), fine-tuning that pattern into the model weights keeps it hidden. RAG-based approaches necessarily expose your retrieved documents in logs, audit trails, and debugging output, making proprietary knowledge visible.

This is a legitimate reason to fine-tune critical IP, but only if the other trade-offs (cost, knowledge latency, brittleness) are acceptable.

The RAG Advantage: Flexibility, Cost, and Scale

Now let’s examine why the market is shifting toward RAG for 80% of enterprise use cases.

Cost Comparison: Roughly 2x in Year 1

A typical enterprise scenario: building a customer support system that answers questions about products, policies, and procedures.

Fine-tuning Approach (Year 1):

– Initial model fine-tuning: $300K

– Quarterly retraining cycles (4x): $160K

– Model hosting and inference infrastructure: $120K

– Total Year 1: $580K

RAG Approach (Year 1):

– Vector database setup and hosting: $60K

– Embedding infrastructure: $40K

– Retrieval pipeline development and maintenance: $80K

– LLM inference API costs (using third-party provider): $120K

– Total Year 1: $300K
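The Year-1 totals can be checked directly from the line items. A minimal sketch using only the figures listed above; on these numbers, fine-tuning costs about 1.9x as much as RAG in Year 1.

```python
# Year-1 line items from the comparison above (USD)
fine_tuning = {
    "initial fine-tuning": 300_000,
    "quarterly retraining (4x)": 160_000,
    "hosting and inference": 120_000,
}
rag = {
    "vector database setup and hosting": 60_000,
    "embedding infrastructure": 40_000,
    "retrieval pipeline development and maintenance": 80_000,
    "LLM inference API": 120_000,
}

ft_total, rag_total = sum(fine_tuning.values()), sum(rag.values())
print(f"Fine-tuning: ${ft_total:,}  RAG: ${rag_total:,}")
print(f"Difference: ${ft_total - rag_total:,}  Ratio: {ft_total / rag_total:.2f}x")
```

Swapping in your own line items (for example, a monthly rather than quarterly retraining cadence) shows how quickly the gap moves.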

Year 2 and beyond amplify the advantage because RAG’s maintenance costs stay relatively flat, while fine-tuning organizations commit to quarterly retraining cycles indefinitely. By Year 3, the cumulative cost difference reaches $1M+.

Adaptability: Knowledge Updates in Hours, Not Weeks

When your product team ships a critical bug fix, your compliance team updates policy, or your market data needs refreshing, RAG systems adapt in hours. Fine-tuned models require scheduling a retraining cycle—weeks of delay.

For fast-moving industries (fintech, e-commerce, SaaS), this difference directly translates to competitive advantage. Your competitors’ models are answering questions based on yesterday’s information while yours reflects today’s reality.

Scale Without Retraining

As your data grows—from 1,000 documents to 100,000 documents—RAG systems scale by adding storage and retrieval capacity. Fine-tuned models eventually hit a ceiling. Training on massive datasets becomes prohibitively expensive, and managing model versions becomes operationally complex.

Enterprise customers deploying RAG have reported handling document repositories 100x larger than their fine-tuned model’s training data—with better performance and lower latency.
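“Adding storage and retrieval capacity” usually means sharding the index: new documents go into new shards, and a query fans out to every shard and merges the results. A minimal sketch, assuming a toy word-overlap score in place of vector similarity and plain dicts in place of index nodes; `ShardedIndex` and its methods are hypothetical names.

```python
import heapq


def score(query: str, text: str) -> int:
    # Toy relevance: count of shared lowercase words (stand-in for vector similarity)
    return len(set(query.lower().split()) & set(text.lower().split()))


class ShardedIndex:
    """Hypothetical sharded store: capacity grows by adding shards, not by retraining."""

    def __init__(self, shard_capacity: int) -> None:
        self.shard_capacity = shard_capacity
        self.shards: list[dict[str, str]] = [{}]

    def add(self, doc_id: str, text: str) -> None:
        if len(self.shards[-1]) >= self.shard_capacity:
            self.shards.append({})  # scale out: open a new shard when the last one is full
        self.shards[-1][doc_id] = text

    def search(self, query: str, k: int = 3) -> list[tuple[int, str]]:
        # Fan out: take the top-k from each shard, then merge into a global top-k
        candidates = []
        for shard in self.shards:
            candidates.extend(
                heapq.nlargest(k, ((score(query, text), doc_id) for doc_id, text in shard.items()))
            )
        return heapq.nlargest(k, candidates)


index = ShardedIndex(shard_capacity=2)
for i in range(5):
    index.add(f"doc-{i}", f"policy document number {i}")
index.add("refunds", "refund policy for enterprise customers")
print(len(index.shards), index.search("enterprise refund policy", k=1))
```

Growing from 1,000 to 100,000 documents changes the number of shards, not the model.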

Observability and Auditability

Regulatory bodies increasingly require organizations to explain AI decisions. “Because the model was trained on this data 6 weeks ago” doesn’t satisfy compliance officers. RAG systems provide an explicit, auditable trail: “The model retrieved these three documents, synthesized them, and generated this response.”

This auditability has become table stakes for regulated industries, giving RAG a structural advantage in compliance scenarios.
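That audit trail is only as good as what gets logged per answer. A minimal sketch of one audit-log entry, assuming each retrieved document carries an ID and version; the field names, the `aml-policy` document, and the record shape are hypothetical, not a regulatory standard.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(question: str, retrieved: list[dict], answer: str) -> dict:
    """Build one audit-log entry tying an answer to the exact documents it used."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "question": question,
        "sources": [
            {
                "doc_id": d["doc_id"],
                "version": d["version"],
                # Content hash lets an auditor verify the document was not edited afterwards
                "sha256": hashlib.sha256(d["text"].encode("utf-8")).hexdigest(),
            }
            for d in retrieved
        ],
        "answer": answer,
    }


docs = [
    {"doc_id": "aml-policy", "version": "2025-05", "text": "Flag transfers above the reporting threshold."},
]
entry = audit_record("When do we flag a transfer?", docs,
                     "Transfers above the reporting threshold are flagged [1].")
print(json.dumps(entry, indent=2))
```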

The Decision Framework: When to Choose Each Approach

Here’s the framework that separates winning decisions from costly mistakes:

Choose Fine-Tuning If:

- Knowledge domain is stable (changes <1x quarterly)

- Consistency and control matter more than adaptability

- Latency requirements are sub-100ms with no external retrieval infrastructure

- Proprietary reasoning patterns cannot be exposed in logs

- Cost of knowledge updates exceeds cost of model retraining

Choose RAG If:

- Knowledge domain is dynamic (changes monthly or more frequently)

- Adaptability and freshness are critical to business outcomes

- Latency requirements allow 500ms-2s retrieval overhead

- Auditability and explainability are regulatory requirements

- Data volume is growing faster than fine-tuning can accommodate

- Cost is a primary constraint
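The two checklists can be turned into a rough first-pass screen. A minimal sketch that counts how many criteria point each way; the thresholds mirror the lists above, but the tally-and-compare rule and the “rag + selective fine-tuning” outcome are assumptions (the latter follows the advice to start from RAG and layer fine-tuning in), not a scoring method the framework prescribes. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    knowledge_updates_per_year: int      # how often the underlying facts change
    consistency_over_adaptability: bool  # strict tone/format control outranks freshness
    latency_budget_ms: int               # end-to-end response budget
    proprietary_reasoning: bool          # reasoning that must not surface in logs
    update_cost_exceeds_retraining: bool
    audit_required: bool                 # regulators expect source-level explanations
    data_outgrowing_training: bool       # corpus grows faster than retraining can absorb
    cost_is_primary_constraint: bool


def recommend(uc: UseCase) -> str:
    fine_tuning = sum([
        uc.knowledge_updates_per_year < 4,     # stable: changes less than quarterly
        uc.consistency_over_adaptability,
        uc.latency_budget_ms < 100,            # sub-100ms leaves no room for retrieval
        uc.proprietary_reasoning,
        uc.update_cost_exceeds_retraining,
    ])
    rag = sum([
        uc.knowledge_updates_per_year >= 12,   # dynamic: monthly or more often
        not uc.consistency_over_adaptability,  # adaptability and freshness come first
        uc.latency_budget_ms >= 500,           # can absorb 500ms-2s of retrieval overhead
        uc.audit_required,
        uc.data_outgrowing_training,
        uc.cost_is_primary_constraint,
    ])
    if fine_tuning > rag:
        return "fine-tuning"
    # Otherwise RAG is the foundation; any fine-tuning signals suggest layering it in selectively
    return "rag + selective fine-tuning" if fine_tuning else "rag"


support_bot = UseCase(52, False, 1500, False, False, True, True, True)
edge_device = UseCase(1, True, 50, True, True, False, False, False)
trading_desk = UseCase(12, True, 800, True, False, True, False, False)
for name, uc in [("support", support_bot), ("edge", edge_device), ("trading", trading_desk)]:
    print(name, recommend(uc))
```

A tally like this is a conversation starter for the architecture review, not a substitute for it.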

The Hybrid Winner: Fine-tuning + RAG

The most sophisticated enterprises are running both:

– Fine-tune on stable, proprietary reasoning patterns (your competitive moat)

– Augment with RAG for dynamic knowledge, real-time data, and frequently updated information

This hybrid approach gives you fine-tuning’s reasoning consistency plus RAG’s knowledge freshness. Example: a financial services firm fine-tunes a model on historical trading patterns and proprietary risk algorithms, then augments it with RAG that pulls real-time market data, compliance updates, and client-specific information.
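The division of labor in the hybrid can be sketched as a request builder: the fine-tuned model supplies stable reasoning, and every request is assembled with freshly retrieved context. A minimal sketch under stated assumptions: the model identifier `ft-risk-model-v3`, the retriever names, and their placeholder outputs are all hypothetical, and the call to the model itself is omitted.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class HybridRequest:
    model: str     # fine-tuned model: stable reasoning patterns live in its weights
    question: str
    context: list[str] = field(default_factory=list)  # fresh facts, fetched per request


def build_request(question: str, model: str,
                  retrievers: dict[str, Callable[[str], str]]) -> HybridRequest:
    # Query every live source at request time, so dynamic knowledge is never baked in
    context = [f"[{name}] {fetch(question)}" for name, fetch in retrievers.items()]
    return HybridRequest(model=model, question=question, context=context)


# Placeholder retrievers; real ones would hit a market-data feed and a compliance index
retrievers = {
    "market-data": lambda q: "latest quotes for the instruments mentioned",
    "compliance": lambda q: "current position-limit guidance",
}
req = build_request("Can we increase exposure to this sector?", "ft-risk-model-v3", retrievers)
print(req)
```

When compliance guidance changes, only the `compliance` retriever’s source is updated; the fine-tuned model stays as it is.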

The Market Reality: Where Enterprise Adoption Is Heading

Market research indicates that 70% of enterprises now use RAG, with the market growing at a 49% CAGR. Meanwhile, fine-tuned model adoption is plateauing, as most organizations attempting it discover that the maintenance burden exceeds the benefit.

The trend is clear: enterprises are learning that dynamic knowledge environments demand dynamic retrieval, not static models. As business cycles accelerate and data freshness becomes a competitive necessity, the fine-tuning approach increasingly looks like a legacy technology: powerful for specific use cases, but economically unjustifiable for most modern AI deployments.

The organizations winning in 2025 are those that made this shift early and built their infrastructure around retrieval-augmented generation as the default approach, reserving fine-tuning for the rare, high-control scenarios where it genuinely adds value.

Taking Your Next Step

If your organization is considering a significant AI investment in 2025, resist the siren call of “permanent” knowledge embedded in model weights. The data overwhelmingly favors dynamic retrieval: lower costs, faster updates, better scale, and superior auditability.

Start with a RAG architecture as your foundation. If specific use cases later justify fine-tuning—because your reasoning patterns are truly proprietary or your latency constraints are genuinely prohibitive—layer it in selectively rather than making it your system’s core.

The enterprises that made this architectural choice early are shipping better products, maintaining lower infrastructure costs, and responding to market changes faster than their fine-tuning competitors. They’re also avoiding the painful realization (now hitting the fine-tuning crowd) that expensive permanence was never the point—adaptability was.

Ready to architect your first RAG system, or evaluating how to migrate away from fine-tuning? The decision framework above will guide you toward the approach that matches your actual business constraints, not the one that sounds most strategically permanent.