Every RAG system starts the same way: take your documents, split them into chunks, embed them, and retrieve them when needed. Simple, right? Except most teams are doing it wrong—and the cost is massive. While enterprises celebrate their latest language model upgrades, they’re quietly degrading retrieval quality through chunking mistakes that compound silently across millions of queries.

The hard truth: chunking strategy determines roughly 60% of your RAG system’s accuracy. Not the embedding model. Not the reranker. Not even the language model generating the final response. The chunks themselves.

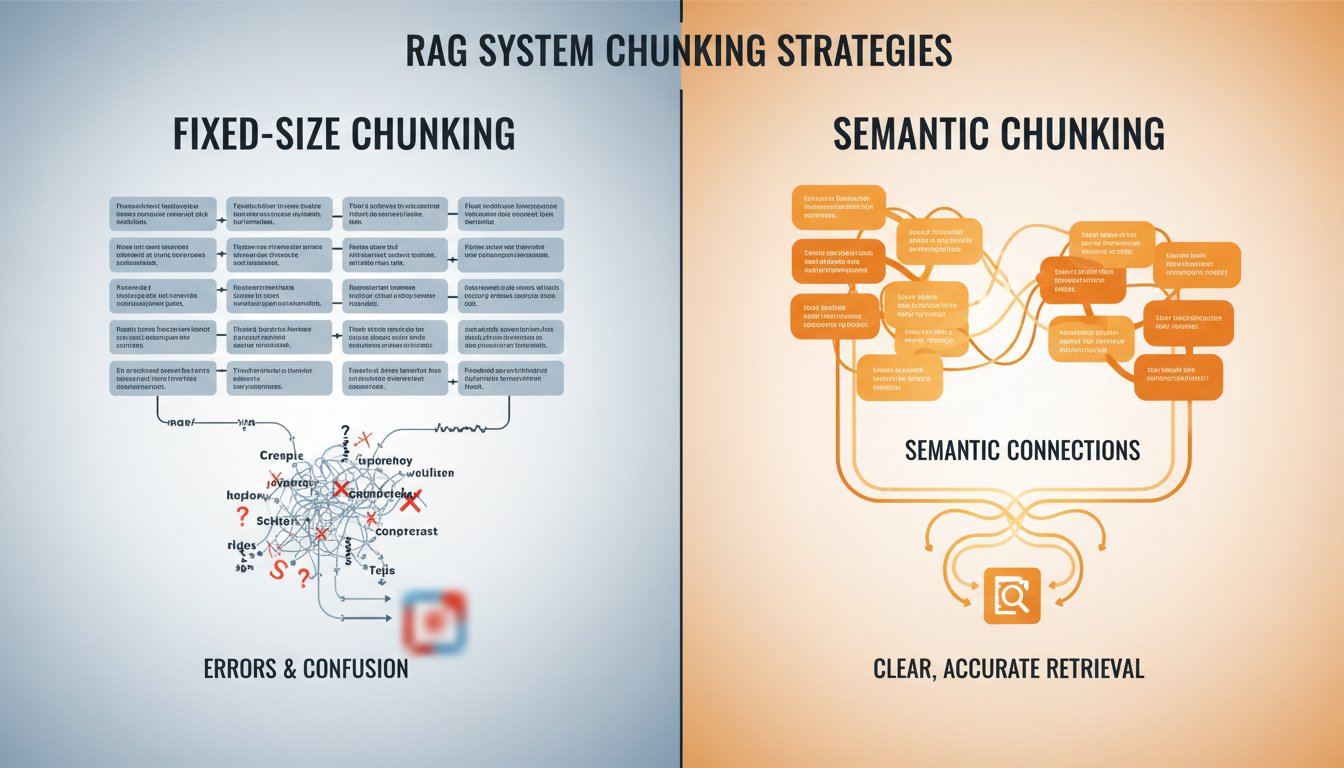

Yet 70% of enterprise teams still rely on fixed-size chunking—a strategy that was never designed for semantic coherence. They split documents into 512-token windows, wonder why their system hallucinates on complex queries, and blame the LLM. The real culprit? Context fragmentation that destroys the semantic boundaries the retrieval model learned to recognize during training.

This isn’t speculation. Recent analysis of enterprise RAG deployments reveals that teams switching from fixed-size to semantic chunking see measurable improvements: irrelevant context drops by 35%, retrieval precision climbs by 20-40%, and hallucination rates fall alongside. Meanwhile, teams deploying LongRAG architectures report a 35% reduction in context loss on legal and structured documents by processing entire document sections rather than fragmenting them into arbitrary 100-word chunks.

The question isn’t whether chunking matters—it clearly does. The question is why your team hasn’t optimized it yet. This guide walks through the chunking decision framework that separates enterprise RAG systems performing reliably in production from the 80% that eventually collapse.

Understanding the Chunking Crisis

Here’s what happens when you use fixed-size chunking on a regulatory document, financial report, or technical specification:

You split a 15-page legal contract into 512-token chunks. Chunk 47 contains the definition of “material breach.” Chunk 48 contains the penalty clause that references that definition. Your embedding model, trained on semantically coherent documents, now sees these as separate, unrelated vectors. When a query asks “what triggers the penalty clause,” your retriever might pull Chunk 48 without Chunk 47, returning incomplete context that forces the LLM to hallucinate the missing definition.

This fragmentation is systemic. Financial analysis documents split mid-sentence about causality. Clinical notes lose diagnostic context. Technical manuals separate procedures from prerequisites. The embedding model has no signal that these chunks belong together semantically. It encodes each chunk in isolation, so the fact that two chunks sat next to each other in the source document is lost.

Fixed-size chunking was born from a different era: when storage was expensive and search latency mattered more than accuracy. It’s optimized for simplicity and speed, not semantic coherence. Yet it remains the default choice across most RAG implementations, including some enterprise systems.

The cost manifests as:

– Missing context: 35%+ of retrieval operations fail to return related chunks because semantic boundaries were violated

– Increased hallucination: When context is fragmented, LLMs compensate by generating plausible-sounding but unsourced responses

– Lower precision: Hybrid search and reranking can’t fix what was never properly chunked in the first place

– Wasted compute: Teams spend resources on sophisticated rerankers and multi-stage retrieval to compensate for poor chunking

The solution isn’t new—semantic chunking has been documented for years. The gap is implementation: most teams don’t know how to decide between chunking strategies, implement semantic approaches, or evaluate which strategy works for their specific document types.

The Chunking Strategy Decision Framework

There is no universal “best” chunking strategy. The right approach depends on your document type, query patterns, and performance constraints. Here’s how to evaluate each:

Fixed-Size Chunking: When Simplicity Wins

Fixed-size chunking splits documents into uniform segments (typically 256-1024 tokens with 10-20% overlap between adjacent chunks).
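To make the mechanics concrete, here is a minimal sketch of a fixed-size splitter with overlap. The function name `fixed_size_chunks`, the whitespace tokenization, and the tiny window values in the demo are illustrative assumptions; a real pipeline would count tokens with the embedding model's own tokenizer.

```python
def fixed_size_chunks(tokens, chunk_size=512, overlap=64):
    """Split a token list into fixed-size windows sharing `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reached the end of the document
    return chunks


# Whitespace splitting stands in for the embedding model's tokenizer (assumption).
tokens = "a material breach triggers the penalty clause in section nine".split()
for chunk in fixed_size_chunks(tokens, chunk_size=4, overlap=1):
    print(" ".join(chunk))
```

Note that the windows are cut purely by position: the splitter has no idea whether a boundary lands in the middle of a clause.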

Pros:

– Dead simple to implement (one parameter: chunk size)

– Minimal computational overhead

– Predictable memory and storage costs

– Works reasonably well for homogeneous content (blog posts, news articles)

Cons:

– Ignores semantic boundaries, creating orphaned context

– Poor performance on structured documents (legal, financial, technical)

– Loses 30-40% of context coherence on complex documents

– Requires aggressive reranking to compensate

Use when: Your documents are relatively homogeneous, queries are straightforward, and you’re optimizing for low latency over accuracy. Content blogs and news feeds are good candidates. Regulatory documents are not.

Semantic Chunking: The Precision Approach

Semantic chunking segments documents at meaningful boundaries—paragraph breaks, section headers, or points where semantic meaning shifts.

Pros:

– Preserves semantic coherence, reducing context loss by 35%+

– Improves retrieval precision by 20-40% on complex documents

– Reduces hallucination risk by ensuring related context stays together

– Works well with both dense and hybrid retrieval

Cons:

– Requires understanding document structure (headers, paragraphs, lists)

– Higher implementation complexity

– Can produce variable chunk sizes, complicating batch processing

– Needs tuning per document type

How it works: You can implement semantic chunking through:

– Header-based segmentation: Split on document headers and hierarchical structure

– Paragraph-aware splitting: Keep paragraphs intact rather than splitting mid-thought

– Semantic boundary detection: Use sentence embeddings to identify semantic shifts and split there

– Metadata-aware chunking: Leverage document structure (tables, lists, code blocks) to inform splits
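The semantic boundary detection option above can be sketched as follows. This is a toy illustration, not a production recipe: `embed` is a bag-of-words stand-in for a real sentence-embedding model, and the `threshold` value of 0.2 is an arbitrary assumption you would tune per document type.

```python
import math
import re
from collections import Counter


def embed(sentence):
    """Toy bag-of-words vector; a stand-in for a real sentence-embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_chunks(sentences, threshold=0.2):
    """Start a new chunk wherever adjacent-sentence similarity drops below threshold."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    prev = embed(sentences[0])
    for sentence in sentences[1:]:
        cur = embed(sentence)
        if cosine(prev, cur) < threshold:
            chunks.append([])  # similarity dropped: treat this as a topic shift
        chunks[-1].append(sentence)
        prev = cur
    return [" ".join(chunk) for chunk in chunks]


sentences = [
    "The penalty clause applies after a material breach.",
    "A material breach means failure to pay the penalty.",
    "Quarterly revenue grew in Europe.",
]
for chunk in semantic_chunks(sentences):
    print(chunk)
```

In this example the two breach-related sentences stay together and the revenue sentence starts a new chunk. Swapping `embed` for a real embedding model changes the similarity values, so the threshold would need retuning.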

Use when: Your documents have clear structure (legal contracts, technical documentation, financial reports) or high semantic coherence matters (clinical notes, research papers, knowledge bases).

Hybrid/Adaptive Chunking: Dynamic Optimization

Hybrid approaches combine multiple strategies based on document type, content characteristics, or query patterns.

Implementation examples:

– Use semantic chunking for structured documents (legal, financial) and fixed-size for unstructured content (blogs)

– Detect document type on ingestion and apply appropriate chunking

– Create multiple chunk sizes simultaneously: use semantic chunks for retrieval, but also maintain fine-grained 128-token chunks for precise quote extraction

– Query-adaptive approaches: simple factual queries retrieve from coarser semantic chunks; complex analytical queries get finer-grained decomposition
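A minimal sketch of the "detect document type on ingestion" idea from the examples above. The heuristic in `detect_doc_type` (two or more numbered headings means structured) and all function names are hypothetical; real systems would more likely rely on source metadata or a classifier.

```python
import re


def split_on_headers(text):
    """Structured docs: start a new chunk at each numbered heading like '1. Definitions'."""
    parts = re.split(r"\n(?=\d+\.\s)", text.strip())
    return [p.strip() for p in parts if p.strip()]


def split_fixed(text, words_per_chunk=50):
    """Unstructured docs: plain fixed-size windows measured in words."""
    words = text.split()
    return [" ".join(words[i:i + words_per_chunk])
            for i in range(0, len(words), words_per_chunk)]


def detect_doc_type(text):
    """Hypothetical heuristic: two or more numbered headings means 'structured'."""
    headings = re.findall(r"^\d+\.\s", text, flags=re.MULTILINE)
    return "structured" if len(headings) >= 2 else "unstructured"


STRATEGIES = {"structured": split_on_headers, "unstructured": split_fixed}


def chunk_document(text):
    """Pick a chunking strategy per document and return (doc_type, chunks)."""
    doc_type = detect_doc_type(text)
    return doc_type, STRATEGIES[doc_type](text)


contract = ("1. Definitions\nMaterial breach means non-payment.\n"
            "2. Penalties\nA material breach triggers a fee.")
blog = "We shipped a new feature this week and users like it."
print(chunk_document(contract))
print(chunk_document(blog))
```

Keeping the strategies in a dictionary makes it cheap to add a new document type later without touching the ingestion path.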

Pros:

– Optimizes for each document type’s characteristics

– Maintains semantic coherence where it matters most

– Supports multiple retrieval strategies (simple vs. multi-stage)

– Significantly outperforms single-strategy approaches

Cons:

– Implementation complexity scales with strategy diversity

– Requires experimentation and tuning

– Storage overhead from maintaining multiple chunk representations

– Needs monitoring to detect when strategy selection is misaligned

Use when: Enterprise systems handling mixed document types (customer support RAG pulling from both FAQs and legal documents) or high-stakes applications where precision matters more than latency.

The Implementation Pathway: From Fixed-Size to Semantic

Most enterprises start with fixed-size chunking because it’s easy. The transition to semantic chunking follows a predictable pattern. Here’s how to execute it without breaking production:

Step 1: Assess Your Document Landscape

Profile your documents by type and query patterns:

– Document types: What fraction are structured (legal, financial, technical) vs. unstructured (blogs, emails, chat)?

– Query complexity: Do users ask simple factual questions (“What’s our PTO policy?”) or complex analytical questions (“How does our revenue recognition policy interact with multi-year contracts?”)?

– Semantic coherence: How often do related concepts appear in close proximity? High coherence suggests semantic chunking will have higher impact.

For most enterprises: 40-60% of documents benefit significantly from semantic chunking (legal, contracts, policies, technical specs). The remaining 40-60% (internal blogs, emails, FAQs) see moderate improvement.

Step 2: Establish Baseline Metrics

Before changing chunking strategy, measure current performance:

– Retrieval precision: Of the top-5 retrieved chunks, how many contain relevant information?

– Chunk completeness: Do retrieved chunks include sufficient context to answer the query without hallucination?

– Hallucination rate: What percentage of responses contain unsourced claims?

Run 50-100 representative queries through your current system and score results manually or with a reranker-based proxy metric.
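As a rough sketch of the baseline measurement, retrieval precision over a labeled query set can be computed like this. The data layout (a list of `(retrieved_ids, relevant_ids)` pairs) and the chunk IDs are assumptions for illustration; the relevance labels would come from your manual scoring or proxy metric.

```python
def precision_at_k(retrieved_ids, relevant_ids, k=5):
    """Fraction of the top-k retrieved chunks that are labeled relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for chunk_id in retrieved_ids[:k] if chunk_id in relevant_ids)
    return hits / k


def mean_precision(runs, k=5):
    """Average precision@k over (retrieved_ids, relevant_ids) pairs, one per query."""
    if not runs:
        return 0.0
    return sum(precision_at_k(r, rel, k) for r, rel in runs) / len(runs)


# Hypothetical labeled results for two queries.
runs = [
    (["c1", "c2", "c3", "c4", "c5"], {"c1", "c4"}),
    (["c7", "c8", "c9", "c10", "c11"], {"c7", "c8", "c9", "c10"}),
]
print(f"baseline precision@5: {mean_precision(runs):.2f}")
```

Storing the per-query scores, not just the mean, makes it easier to see later which query types improved after rechunking.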

Step 3: Implement Semantic Chunking for High-Value Document Types

Start with your highest-value document type (usually legal, regulatory, or technical documentation). Here’s a practical approach:

For structure-rich documents (legal, financial, technical):

1. Extract document structure: headers, sections, subsections, tables

2. Identify semantic units at the lowest structure level (subsections, not pages)

3. Set minimum chunk size (e.g., 200 tokens) and maximum (e.g., 1000 tokens)

4. If a semantic unit exceeds max size, recursively split on sub-boundaries

5. Add metadata: document type, section hierarchy, original position
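The five steps above can be sketched roughly as follows. Several things here are assumptions rather than requirements: sections are marked with markdown-style `#` headers, tokens are counted by whitespace, and the names `chunk_structured` and `split_oversized` are hypothetical. A piece with no remaining boundary to split on is left oversized.

```python
import re


def count_tokens(text):
    """Whitespace token count; stands in for a real tokenizer (assumption)."""
    return len(text.split())


def split_oversized(text, max_tokens, separators=("\n\n", "\n")):
    """Recursively split on progressively finer boundaries until pieces fit."""
    if count_tokens(text) <= max_tokens or not separators:
        return [text]
    head, rest = separators[0], separators[1:]
    pieces = [p for p in text.split(head) if p.strip()]
    out = []
    for piece in pieces:
        out.extend(split_oversized(piece, max_tokens, rest))
    return out


def chunk_structured(text, doc_type, min_tokens=200, max_tokens=1000):
    """Chunk by section, enforce size bounds, and attach metadata to each chunk."""
    sections = re.split(r"\n(?=#+ )", text.strip())  # one unit per header
    chunks = []
    for position, section in enumerate(sections):
        title = section.splitlines()[0].lstrip("#").strip()
        packed = []
        for piece in split_oversized(section, max_tokens):
            piece = piece.strip()
            # Top up the previous chunk if it is undersized and the result still fits.
            if (packed and count_tokens(packed[-1]) < min_tokens
                    and count_tokens(packed[-1]) + count_tokens(piece) <= max_tokens):
                packed[-1] += "\n" + piece
            else:
                packed.append(piece)
        for part in packed:
            chunks.append({
                "text": part,
                "metadata": {"doc_type": doc_type, "section": title,
                             "position": position},
            })
    return chunks


doc = ("# Definitions\nMaterial breach means non-payment within thirty days.\n"
       "# Penalties\nA breach triggers a fee.")
for chunk in chunk_structured(doc, "legal", min_tokens=3, max_tokens=8):
    print(chunk["metadata"]["section"], "|", repr(chunk["text"]))
```

The demo uses deliberately tiny limits so the recursive split is visible; with the 200 and 1000 token bounds from the steps, both sections would come through as single chunks.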

For less-structured content (blog posts, emails):

1. Split on paragraph boundaries as primary semantic units

2. If paragraphs exceed 1000 tokens, use sentence embeddings to identify semantic shift points

3. Merge adjacent small paragraphs (<100 tokens) with neighbors

4. Maintain paragraph position and source metadata
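For the less-structured path, here is a small sketch of steps 1, 3, and 4: split on blank lines, merge undersized paragraphs into a neighbor, and keep paragraph positions as metadata. It leaves out step 2 (embedding-based splitting of very long paragraphs). The function name and the whitespace token count are assumptions.

```python
def merge_small_paragraphs(text, min_tokens=100):
    """Split on blank lines, then merge paragraphs shorter than min_tokens."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks = []
    for position, para in enumerate(paragraphs):
        if chunks and len(chunks[-1]["text"].split()) < min_tokens:
            # The previous chunk is still undersized: extend it with this paragraph.
            chunks[-1]["text"] += "\n\n" + para
            chunks[-1]["paragraphs"].append(position)
        else:
            chunks.append({"text": para, "paragraphs": [position]})
    # A trailing undersized chunk has no successor, so fold it into its predecessor.
    if len(chunks) > 1 and len(chunks[-1]["text"].split()) < min_tokens:
        tail = chunks.pop()
        chunks[-1]["text"] += "\n\n" + tail["text"]
        chunks[-1]["paragraphs"].extend(tail["paragraphs"])
    return chunks


email = ("Hi team.\n\nQuick update on the launch.\n\n"
         "The rollout finished on Friday and metrics look stable so far.\n\nThanks.")
for chunk in merge_small_paragraphs(email, min_tokens=5):
    print(chunk["paragraphs"], repr(chunk["text"]))
```

The `paragraphs` list records which original paragraphs each chunk covers, which is enough to reconstruct position when citing a source.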

Tool ecosystem (2026):

– LangChain RecursiveCharacterTextSplitter: Splits on a hierarchy of separators ("\n\n", "\n", " ", "")

– LlamaIndex SemanticSplitterNodeParser: Embedding-based semantic splitting with a configurable breakpoint threshold

– Custom implementations: For complex document types, building document-specific parsers often outperforms generic tools

Step 4: Re-embed and Re-index

Once you’ve rechunked your documents:

1. Generate embeddings using your existing embedding model (retraining isn’t necessary)

2. Rebuild your vector index with semantic chunks

3. Maintain the old index temporarily for A/B testing

Storage note: Semantic chunks often have higher variance in size. Budget 20-40% more storage than fixed-size chunking due to metadata, smaller minimum chunks, and the possibility of maintaining multiple chunk representations.

Step 5: Evaluate and Compare

Run your baseline queries against both indexing strategies and measure:

– Precision improvement: What percentage of top-5 results are now relevant?

– Chunk quality: Are chunks sufficiently complete to answer queries without hallucination?

– Latency impact: Does semantic chunking increase query time? (Usually minimal; retrieval speed is dominated by vector search, not chunk size)

Expect 20-40% precision improvement on complex documents, 5-15% on homogeneous content.

Step 6: Gradual Rollout and Monitoring

Migrate production traffic gradually:

1. Week 1: Route 10% of queries to semantic-chunked index

2. Weeks 2-3: Scale to 50%, monitor for regressions

3. Week 4+: Full migration, deprecate old index
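One way to implement the percentage split is deterministic hash-based bucketing, sketched below. Routing by a user ID is an assumption (you could equally bucket by session or query ID), and `route_index` is a hypothetical helper, not part of any framework.

```python
import hashlib


def route_index(user_id, semantic_share):
    """Send a stable `semantic_share` percent of users to the semantic index."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in [0, 100)
    return "semantic" if bucket < semantic_share else "fixed"


# Week 1: roughly 10% of users hit the semantic-chunked index.
users = [f"user-{i}" for i in range(10_000)]
share = sum(route_index(u, 10) == "semantic" for u in users) / len(users)
print(f"share routed to semantic index: {share:.1%}")
```

Because the bucket depends only on the ID, a user who lands on the semantic index at 10% stays on it when the share rises to 50%, which keeps their experience consistent and makes regressions easier to attribute.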

Monitor continuously:

– Retrieval precision per query type

– Hallucination rate

– Query latency (should remain stable)

– User feedback on response quality

Advanced: Combining Chunking with Hybrid Retrieval

Semantic chunking’s benefits multiply when combined with hybrid retrieval (BM25 + dense embeddings). Here’s why:

Dense retrieval captures semantic similarity—it benefits enormously from semantic chunks because related concepts stay together in the vector space.

BM25 keyword matching gets stronger too: semantic chunks preserve domain terminology in coherent context, improving keyword matching quality.

A practical three-stage pipeline:

1. Hybrid retrieval on semantic chunks (BM25 + dense search combined with reciprocal rank fusion)

2. Cross-encoder reranking to identify the most relevant chunks

3. Metadata filtering to handle compliance or access control

This combination delivers 40-50% precision improvement over fixed-size + dense-only retrieval.
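The fusion step in stage 1 can be sketched with reciprocal rank fusion, which scores each chunk by summing 1 / (k + rank) across the input rankings. The constant k = 60 is a commonly used default, and the chunk IDs below are made up for illustration.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of chunk IDs into one list, best first."""
    scores = {}
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] = scores.get(chunk_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score; break ties by ID so the output is deterministic.
    return sorted(scores, key=lambda chunk_id: (-scores[chunk_id], chunk_id))


bm25_ranking = ["c2", "c1", "c3"]    # hypothetical keyword-search results
dense_ranking = ["c1", "c4", "c2"]   # hypothetical vector-search results
print(reciprocal_rank_fusion([bm25_ranking, dense_ranking]))
```

RRF only needs ranks, not raw scores, so it sidesteps the problem of BM25 and cosine similarity living on different scales.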

The Evaluation Question: How Do You Know It’s Working?

Here’s the uncomfortable truth: 70% of enterprise teams have no systematic way to measure whether chunking strategy changes actually improved their RAG system. They deploy semantic chunking, see a few positive anecdotes, and assume it’s working.

This is the gap that creates silent failures. You need continuous evaluation:

Metrics to track:

– Retrieval recall@5, recall@10: Percentage of queries where the correct answer appears in top-K results

– Precision@5: Of the top 5 chunks, what fraction contain relevant information?

– Chunk completeness: For queries requiring multiple facts, what percentage of chunks include all necessary context?

– Hallucination rate: Percentage of responses with unsourced claims (measure via LLM evaluation or manual sampling)
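Following the definition of recall@K given above (share of queries where a correct chunk appears in the top K), a minimal sketch looks like this. The `(retrieved_ids, relevant_ids)` layout and the sample data are assumptions.

```python
def recall_at_k(runs, k):
    """Share of queries with at least one ground-truth chunk in the top k."""
    if not runs:
        return 0.0
    hits = sum(
        1 for retrieved, relevant in runs
        if any(chunk_id in relevant for chunk_id in retrieved[:k])
    )
    return hits / len(runs)


# Hypothetical evaluation set: retrieved IDs plus ground-truth IDs per query.
eval_runs = [
    (["a", "b", "c"], {"c"}),
    (["d", "e", "f"], {"x"}),
    (["g", "h"], {"g"}),
]
for k in (1, 3):
    print(f"recall@{k}: {recall_at_k(eval_runs, k):.2f}")
```

Reporting recall at more than one K shows whether a relevant chunk is missing entirely or merely ranked too low, which points to different fixes (chunking versus reranking).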

Implementation:

1. Maintain a query evaluation set (100-500 representative queries with ground-truth answers)

2. Run this set weekly against your retrieval system

3. Use an LLM-as-judge (GPT-4 or Claude) to score chunk relevance and completeness

4. Track trends over time; alert on 5%+ precision drops

Teams lacking this framework typically discover chunking problems only when users report degraded response quality—often months after deployment.
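The alerting step in the implementation list can be sketched as a week-over-week comparison. This version interprets "5%+ precision drop" as a relative drop against the previous week, which is an assumption; an absolute threshold or a rolling baseline may suit your data better.

```python
def precision_alerts(weekly_precision, drop_threshold=0.05):
    """Return (week_index, previous, current) for each relative drop >= threshold."""
    alerts = []
    for week in range(1, len(weekly_precision)):
        previous, current = weekly_precision[week - 1], weekly_precision[week]
        if previous > 0 and (previous - current) / previous >= drop_threshold:
            alerts.append((week, previous, current))
    return alerts


# Hypothetical weekly precision@5 readings from the evaluation set.
history = [0.80, 0.81, 0.75, 0.74]
for week, previous, current in precision_alerts(history):
    print(f"week {week}: precision fell from {previous:.2f} to {current:.2f}")
```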

The Chunking Roadmap for 2026 and Beyond

The RAG industry is converging on semantic chunking as the default for enterprise systems. Here’s what’s emerging:

Near-term (rest of 2026):

– Sector-specific chunking strategies: financial institutions standardizing on XBRL-aware chunking, healthcare systems optimizing for clinical note structure

– Better tooling: LLMs themselves will be fine-tuned specifically for document structure detection, replacing generic parsers

– Hybrid approaches as standard: enterprise RAG platforms will automatically select chunking strategy per document type

Medium-term (2027-2028):

– GraphRAG integration: chunks will maintain relationships in knowledge graphs, enabling multi-hop reasoning

– Adaptive chunk sizing: systems will vary chunk size based on query complexity (simple queries trigger coarse chunks for speed; complex queries get fine-grained chunks for precision)

– Real-time chunking optimization: user feedback loops will continuously fine-tune chunking strategies

Long-term (2029-2030):

– Semantic chunking will be invisible: enterprise RAG platforms will optimize it automatically, much like database query optimization today

– Vertical-specific platforms will emerge with pre-tuned chunking strategies for healthcare, finance, and legal

– Chunking will expand beyond text: multi-modal chunks combining text, tables, images, and embedded code

The Bottom Line

Chunking strategy is the foundation of RAG accuracy. Fixed-size chunking is fast to implement but expensive in precision. Semantic chunking requires more thought but delivers 20-40% precision improvements on complex documents and 35%+ reductions in context loss.

The migration from fixed-size to semantic chunking is not optional for enterprises handling structured or high-stakes documents. It’s the difference between a RAG system that works and one that consistently hallucinates on complex queries.

Start with your highest-value document types. Measure baseline retrieval precision. Implement semantic chunking incrementally. Monitor continuously. The 20-40% precision improvement isn’t theoretical; it’s what enterprises see when they finally fix chunking.

Your language model is only as good as the context you give it. Fix the chunks first.