Enterprise AI teams are hitting a wall with traditional RAG systems. Despite heavy investment in vector databases and embedding models, retrieval accuracy often plateaus around 70-80%, leaving organizations frustrated with inconsistent answers and growing maintenance overhead. The fundamental issue isn’t any single component; it’s that conventional RAG architectures treat retrieval as a static process that cannot learn from its mistakes or adapt to changing user needs.

What if your RAG system could continuously improve itself, learning from every interaction to deliver increasingly accurate results? Recent breakthroughs in Reinforcement Learning from Human Feedback (RLHF) are making this vision a reality, enabling RAG systems to evolve beyond their initial training to become truly adaptive knowledge engines.

This comprehensive guide will walk you through building self-improving RAG systems that leverage human feedback to enhance retrieval quality, reduce hallucinations, and automatically optimize performance over time. You’ll discover how leading enterprises are combining RLHF with modern RAG architectures to achieve 95%+ accuracy rates while dramatically reducing manual tuning efforts.

Understanding the Self-Improvement Challenge in RAG Systems

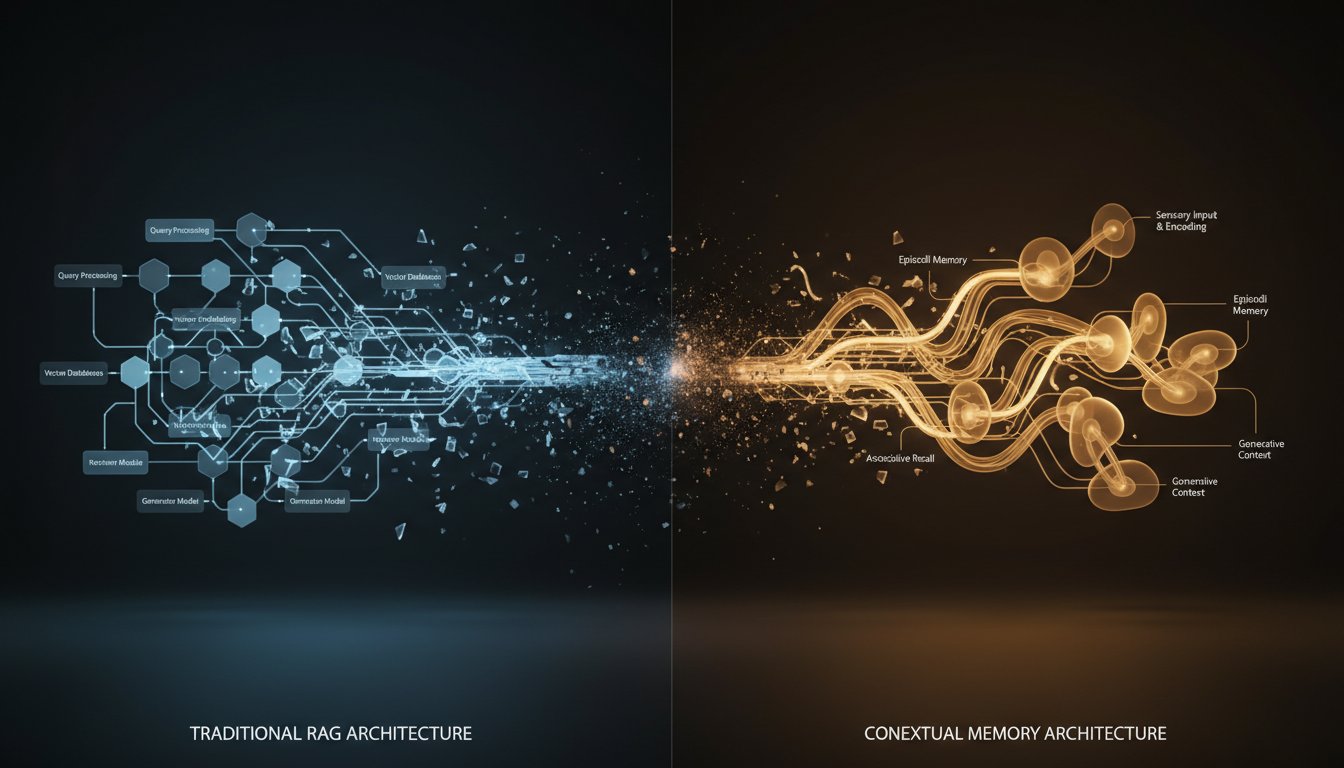

Traditional RAG systems operate on a fixed retrieval paradigm: embed queries, search vectors, return chunks, and generate responses. This approach works well initially but suffers from several critical limitations that become apparent at scale.

The Static Learning Problem

Most RAG implementations treat retrieval as a deterministic process, using pre-trained embeddings that never adapt to domain-specific nuances or user preferences. When a user asks “What’s our Q3 performance?” the system might retrieve generic financial documents instead of the specific quarterly report they need, but it has no mechanism to learn from this mismatch.

Research from Stanford’s AI Lab demonstrates that static RAG systems plateau in performance after processing approximately 10,000 queries, regardless of additional data or computational resources. The root cause is the inability to incorporate feedback loops that refine retrieval strategies based on actual user satisfaction.

Context Drift and Semantic Gaps

Enterprise knowledge bases evolve rapidly, with new terminology, processes, and priorities emerging constantly. A RAG system whose embeddings and index were built from last quarter’s documents may struggle with current initiatives, creating what researchers call “context drift”: the gradual degradation of retrieval relevance over time.

Companies like Notion and Slack report that traditional RAG systems require complete retraining every 3-6 months to maintain acceptable performance, creating unsustainable maintenance overhead for enterprise deployments.

The Human-AI Alignment Gap

Perhaps most critically, traditional RAG systems lack mechanisms to understand when they’ve failed to meet user expectations. A user might receive technically accurate but contextually irrelevant information, leading to frustration and decreased adoption. Without feedback loops, these systems perpetuate suboptimal retrieval patterns indefinitely.

The RLHF-Enhanced RAG Architecture

Reinforcement Learning from Human Feedback transforms RAG from a static pipeline into a dynamic, learning system that continuously refines its retrieval strategies based on user interactions and explicit feedback.

Core Components of Self-Improving RAG

The enhanced architecture introduces several key components that work together to enable continuous learning:

Feedback Collection Layer: Captures both explicit user feedback (thumbs up/down, relevance ratings) and implicit signals (click-through rates, session duration, query reformulations). It can also apply sentiment analysis to free-text comments and infer satisfaction from subtle behavioral cues, such as a user immediately rephrasing the same question.

Reward Model: Translates human feedback into numerical rewards that guide the learning process. Unlike simple binary ratings, modern reward models incorporate contextual factors like query complexity, user expertise level, and task urgency to provide nuanced performance signals.

Policy Network: Determines retrieval strategies, including which documents to prioritize, how to weight different semantic similarities, and when to expand search scope. This network learns to optimize for long-term user satisfaction rather than just immediate relevance scores.

Experience Buffer: Stores historical interactions, feedback patterns, and successful retrieval strategies to enable batch learning and prevent catastrophic forgetting of previously learned behaviors.

The Continuous Learning Loop

Self-improving RAG systems operate through a continuous cycle of interaction, feedback, and optimization:

- Query Processing: The system processes user queries through enhanced embeddings that incorporate learned user preferences and domain-specific terminology

- Adaptive Retrieval: Multiple retrieval strategies are employed simultaneously, with the policy network determining optimal combinations based on query characteristics

- Response Generation: Retrieved content is synthesized using context-aware generation models that consider user expertise and information needs

- Feedback Integration: User interactions generate both explicit and implicit feedback signals that update the reward model

- Strategy Optimization: The policy network adjusts retrieval strategies based on accumulated feedback, improving future performance
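The five steps above can be compressed into a single function. This is a minimal sketch, assuming retrieval strategies are plain callables that return documents (query processing is folded into them) and that feedback arrives as a reward in [-1, 1]. It uses an epsilon-greedy running average per strategy as a simple stand-in for the policy network; `LoopState` and `run_learning_step` are hypothetical names.

```python
import random
from dataclasses import dataclass, field


@dataclass
class LoopState:
    values: dict                  # running mean reward per strategy name
    counts: dict                  # how often each strategy has been used
    experience: list = field(default_factory=list)  # (query, strategy, reward) log


def run_learning_step(query, strategies, generate, get_feedback, state,
                      epsilon=0.1, rng=random):
    # Steps 1-2: process the query and choose a retrieval strategy,
    # exploring a random one with probability epsilon
    if rng.random() < epsilon:
        name = rng.choice(list(strategies))
    else:
        name = max(state.values, key=state.values.get)
    docs = strategies[name](query)
    # Step 3: generate a response from the retrieved documents
    response = generate(query, docs)
    # Step 4: turn the user's reaction into a scalar reward
    reward = get_feedback(query, response)
    state.experience.append((query, name, reward))
    # Step 5: update the chosen strategy's running mean reward
    state.counts[name] += 1
    state.values[name] += (reward - state.values[name]) / state.counts[name]
    return response
```

The experience log is kept so the same interactions can later feed batch training of a reward model or policy.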

Implementation Guide: Building Your Self-Improving RAG System

Phase 1: Foundation Architecture Setup

Start by establishing the core infrastructure that will support continuous learning capabilities.

Enhanced Vector Database Configuration

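```python
import chromadb

# Persistent local Chroma store. Chroma has no fixed metadata schema:
# fields such as "source", "timestamp", "relevance_score", and
# "user_feedback" are attached to each document as metadata when it is
# added or updated, and "timestamp" can serve as a simple version marker.
client = chromadb.PersistentClient(path="./rag_store")
vector_store = client.get_or_create_collection(
    name="adaptive_knowledge_base",
    embedding_function=AdaptiveEmbeddings(),  # hypothetical custom embedding function
)

# Enable feedback tracking (FeedbackTracker is a hypothetical component
# that persists feedback events to PostgreSQL)
feedback_tracker = FeedbackTracker(
    storage_backend="postgresql",
    metrics=["relevance", "completeness", "accuracy", "satisfaction"],
)
```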

Reward Model Initialization

Implement a reward model that can process multiple feedback types and convert them into actionable learning signals.

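```python
class RewardModel:
    def __init__(self):
        # Weights for each feedback signal; they sum to 1.0.
        # Each signal is assumed to be pre-normalized to the range [0, 1].
        self.feedback_weights = {
            "explicit_rating": 0.4,
            "click_through": 0.2,
            "session_duration": 0.2,
            "query_refinement": 0.2,
        }

    def calculate_reward(self, interaction_data):
        # Combine multiple feedback signals into one weighted score.
        # Query refinements mean the first answer missed, so that signal
        # is inverted before weighting (more refinement = lower reward).
        weighted_score = 0.0
        for signal, weight in self.feedback_weights.items():
            value = interaction_data[signal]
            if signal == "query_refinement":
                value = 1.0 - value
            weighted_score += weight * value
        return self.normalize_reward(weighted_score)

    def normalize_reward(self, score):
        # Clamp to [0, 1], then map to a zero-centered reward in [-1, 1]
        return 2.0 * min(max(score, 0.0), 1.0) - 1.0
```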
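The `RewardModel` above relies on hand-set weights. In RLHF proper, reward models are usually learned from pairwise human preferences using a Bradley-Terry objective: for a preferred answer `a` and a rejected answer `b`, minimize `-log(sigmoid(r(a) - r(b)))`. A minimal pure-Python sketch, assuming each candidate answer has already been reduced to a small numeric feature vector; the features and function names are hypothetical.

```python
import math


def train_pairwise_reward_model(pairs, dim, lr=0.1, epochs=200):
    """Fit a linear reward r(x) = w . x from (preferred, rejected) feature pairs.

    Per-pair loss: -log(sigmoid(r(preferred) - r(rejected))).
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = sum(wi * (c - r) for wi, c, r in zip(w, chosen, rejected))
            # d(loss)/d(margin) = -(1 - sigmoid(margin)); step against the gradient
            grad_scale = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            for i in range(dim):
                w[i] += lr * grad_scale * (chosen[i] - rejected[i])
    return w


def reward(w, features):
    # Score a single candidate answer with the learned weights
    return sum(wi * xi for wi, xi in zip(w, features))
```

Pairwise comparisons ("answer A was better than answer B") tend to be more consistent across raters than absolute scores, which is why this formulation is common for reward models.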

Phase 2: Feedback Collection Implementation

Implement comprehensive feedback collection that captures both explicit user inputs and implicit behavioral signals.

Multi-Modal Feedback Capture

Deploy feedback mechanisms that don’t interrupt user workflow while gathering rich preference data.

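```python
class FeedbackCollector:
    def __init__(self):
        # Hypothetical helpers that score each feedback channel
        self.implicit_signals = ImplicitSignalTracker()
        self.explicit_feedback = ExplicitFeedbackHandler()

    def track_interaction(self, query, results, user_behavior):
        # Capture implicit signals without interrupting the user
        implicit_score = self.implicit_signals.analyze({
            "time_on_result": user_behavior.time_spent,
            "scroll_depth": user_behavior.scroll_percentage,
            "follow_up_queries": user_behavior.refinements,
        })

        # Process explicit feedback only when the user supplied a rating
        explicit_score = (
            self.explicit_feedback.process(user_behavior.ratings)
            if user_behavior.ratings else None
        )

        return self.combine_feedback(implicit_score, explicit_score)

    def combine_feedback(self, implicit_score, explicit_score, explicit_weight=0.7):
        # Fall back to implicit signals when there is no explicit rating;
        # otherwise favor the explicit rating (0.7 is an assumed default)
        if explicit_score is None:
            return implicit_score
        return explicit_weight * explicit_score + (1 - explicit_weight) * implicit_score
```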

Contextual Feedback Processing

Implement intelligent feedback interpretation that considers query context, user expertise, and task complexity.
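One way to make feedback interpretation context-aware is to scale each raw score by how much it should be trusted in that context. The sketch below is illustrative only: the `FeedbackContext` fields are assumed to be computed elsewhere, and every coefficient is a hypothetical placeholder to tune against your own data.

```python
from dataclasses import dataclass


@dataclass
class FeedbackContext:
    query_complexity: float  # 0 (simple lookup) .. 1 (multi-step analysis)
    user_expertise: float    # 0 (novice) .. 1 (domain expert)
    task_urgency: float      # 0 (exploratory) .. 1 (time-critical)


def contextualize_feedback(raw_score, ctx):
    """Scale a raw feedback score in [-1, 1] by how informative it is in context."""
    # Expert judgments are treated as more reliable signals
    reliability = 0.5 + 0.5 * ctx.user_expertise
    # A miss on a simple lookup is a clearer retrieval failure than a miss
    # on an open-ended question, so negative feedback on easy queries counts more
    if raw_score < 0:
        reliability *= 1.0 + 0.5 * (1.0 - ctx.query_complexity)
    # Urgent tasks amplify the signal slightly
    reliability *= 1.0 + 0.2 * ctx.task_urgency
    # Keep the result in the same [-1, 1] range as the input
    return max(-1.0, min(1.0, raw_score * reliability))
```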

Phase 3: Policy Network Training

Develop the core learning component that optimizes retrieval strategies based on accumulated feedback.

Retrieval Strategy Optimization

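```python
import torch
import torch.nn as nn
from torch.distributions import Categorical


class RetrievalPolicyNetwork(nn.Module):
    def __init__(self, state_dim, action_dim):
        super().__init__()
        # Maps a query-state vector to a probability over retrieval strategies
        self.policy_net = nn.Sequential(
            nn.Linear(state_dim, 256),
            nn.ReLU(),
            nn.Linear(256, 128),
            nn.ReLU(),
            nn.Linear(128, action_dim),
            nn.Softmax(dim=-1),
        )

    def encode_query_context(self, query_context):
        # Assumes query_context is already a numeric feature vector
        # (e.g. query embedding plus metadata features) of length state_dim
        return torch.as_tensor(query_context, dtype=torch.float32)

    def select_retrieval_strategy(self, query_context, greedy=False):
        # Convert query context to state representation
        state = self.encode_query_context(query_context)
        # Generate retrieval strategy probabilities
        action_probs = self.policy_net(state)
        return self.sample_strategy(action_probs, greedy)

    def sample_strategy(self, action_probs, greedy=False):
        # Sample while learning (exploration) or take the argmax in production.
        # The log-probability is returned for later policy-gradient updates.
        dist = Categorical(probs=action_probs)
        action = torch.argmax(action_probs) if greedy else dist.sample()
        return action.item(), dist.log_prob(action)
```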
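The network above selects strategies but does not show how it learns. A common choice is the REINFORCE policy-gradient update, which raises the log-probability of strategies that earned above-average reward. This standalone sketch assumes PyTorch, a policy module that outputs strategy probabilities (like the `nn.Sequential` above), and rewards already produced by a reward model; `reinforce_update` is a hypothetical helper name.

```python
import torch
import torch.nn as nn
from torch.distributions import Categorical


def reinforce_update(policy, optimizer, states, actions, rewards, baseline=None):
    """One REINFORCE step over a batch of (state, chosen strategy, reward)."""
    states = torch.as_tensor(states, dtype=torch.float32)
    actions = torch.as_tensor(actions, dtype=torch.long)
    rewards = torch.as_tensor(rewards, dtype=torch.float32)
    if baseline is None:
        # Subtracting the batch mean reward reduces gradient variance
        baseline = rewards.mean()
    advantages = rewards - baseline
    dist = Categorical(probs=policy(states))
    # Minimizing this loss raises the probability of above-baseline strategies
    loss = -(dist.log_prob(actions) * advantages).mean()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()
```

In use, actions should be sampled from the current policy (under `torch.no_grad()`), shown to users, and the resulting rewards passed back in batches.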

Experience Replay Integration

Implement experience replay to enable efficient learning from historical interactions while preventing overfitting to recent feedback.
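A minimal replay buffer sketch, assuming interactions are stored as `(state, action, reward)` tuples; the class name and default capacity are hypothetical. Sampling uniformly from a bounded history is what keeps a burst of recent feedback from dominating each update.

```python
import random
from collections import deque


class ExperienceReplayBuffer:
    """Fixed-size store of past (state, action, reward) interactions."""

    def __init__(self, capacity=10_000, seed=None):
        # A deque with maxlen evicts the oldest interaction when full
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward):
        self.buffer.append((state, action, reward))

    def sample(self, batch_size):
        # Uniform sampling mixes old and new feedback in every batch
        k = min(batch_size, len(self.buffer))
        batch = self.rng.sample(list(self.buffer), k)
        states, actions, rewards = zip(*batch) if batch else ((), (), ())
        return list(states), list(actions), list(rewards)

    def __len__(self):
        return len(self.buffer)
```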

Phase 4: Advanced Optimization Techniques

Multi-Armed Bandit Integration

Combine RLHF with multi-armed bandit algorithms to balance exploration of new retrieval strategies with exploitation of proven approaches.

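```python
class AdaptiveBanditRAG:
    def __init__(self, strategies):
        # strategies: retrievers that expose .retrieve(query_context)
        self.strategies = strategies
        # ContextualBandit is a placeholder for any contextual bandit
        # exposing select_arm(context) and update(arm, reward, context)
        self.bandit = ContextualBandit(len(strategies))

    def select_strategy(self, query_context):
        # Get a strategy recommendation from the bandit and execute it
        strategy_idx = self.bandit.select_arm(query_context)
        results = self.strategies[strategy_idx].retrieve(query_context)
        # Return the index so feedback can be credited to this strategy later
        return strategy_idx, results

    def record_feedback(self, strategy_idx, reward, query_context):
        # User feedback arrives after the results are shown, so the bandit
        # is updated in a separate call rather than inside select_strategy
        self.bandit.update(strategy_idx, reward, query_context)
```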
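`ContextualBandit` is not defined above. One standard algorithm that fits its `select_arm`/`update` interface is disjoint LinUCB, which keeps a ridge-regression reward estimate per strategy and adds an upper-confidence bonus for exploration. This sketch assumes NumPy and that `query_context` is a fixed-length numeric feature vector, so unlike the call above it also takes `context_dim`; `LinUCBBandit` is a hypothetical name and `alpha` sets how aggressively it explores.

```python
import numpy as np


class LinUCBBandit:
    """Disjoint LinUCB: one linear reward model per retrieval strategy."""

    def __init__(self, n_arms, context_dim, alpha=1.0):
        self.alpha = alpha  # width of the confidence bonus
        self.A = [np.eye(context_dim) for _ in range(n_arms)]   # X^T X + I per arm
        self.b = [np.zeros(context_dim) for _ in range(n_arms)]  # X^T y per arm

    def select_arm(self, context):
        x = np.asarray(context, dtype=float)
        scores = []
        for A, b in zip(self.A, self.b):
            A_inv = np.linalg.inv(A)
            theta = A_inv @ b                             # estimated reward weights
            bonus = self.alpha * np.sqrt(x @ A_inv @ x)   # uncertainty bonus
            scores.append(theta @ x + bonus)
        return int(np.argmax(scores))

    def update(self, arm, reward, context):
        # Fold the observed (context, reward) pair into that arm's estimate
        x = np.asarray(context, dtype=float)
        self.A[arm] += np.outer(x, x)
        self.b[arm] += reward * x
```

Rarely tried strategies keep a large bonus, so they are revisited until their estimated reward is trustworthy; this is the exploration/exploitation balance described above.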

Curriculum Learning Implementation

Implement progressive learning strategies that start with simple retrieval tasks and gradually increase complexity as the system improves.
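A minimal curriculum scheduler sketch, assuming each training query can be given a sortable difficulty score (for example, the number of documents needed to answer it) and that an average reward is reported after each evaluation round. The class name, stage fractions, and promotion threshold are hypothetical defaults.

```python
class CurriculumScheduler:
    """Expose progressively harder training queries as the system improves."""

    def __init__(self, queries, difficulty, stages=(0.3, 0.6, 1.0), promote_at=0.8):
        # difficulty: callable returning a sortable difficulty score per query
        self.queries = sorted(queries, key=difficulty)
        self.stages = stages          # fraction of the sorted pool unlocked per stage
        self.promote_at = promote_at  # average reward needed to advance a stage
        self.stage = 0

    def current_pool(self):
        # Easiest queries first; later stages unlock the harder tail
        cutoff = max(1, int(len(self.queries) * self.stages[self.stage]))
        return self.queries[:cutoff]

    def report(self, avg_reward):
        # Advance once the current stage is mastered; never go past the last stage
        if avg_reward >= self.promote_at and self.stage < len(self.stages) - 1:
            self.stage += 1
        return self.stage
```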

Advanced RLHF Techniques for Enterprise RAG

Constitutional AI Integration

Apply the core idea of Constitutional AI, an explicit set of written principles, as runtime checks so that self-improving RAG systems maintain ethical boundaries and operational constraints while optimizing for user satisfaction.

Safety Constraint Implementation

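```python
class ConstitutionalRAG:
    def __init__(self):
        # Explicit, reviewable rules every response must satisfy
        self.constitution = {
            "accuracy_threshold": 0.85,
            "bias_detection": True,
            "privacy_protection": True,
            "factual_verification": True,
        }

    def validate_response(self, response, source_docs):
        # Apply constitutional constraints before the response reaches the user.
        # The scoring, fallback, and mitigation helpers are placeholders for
        # your own checks.
        threshold = self.constitution["accuracy_threshold"]
        if not self.meets_accuracy_threshold(response, source_docs, threshold):
            return self.apply_safety_fallback()

        if self.constitution["bias_detection"] and self.detect_bias(response):
            return self.bias_mitigation_strategy(response)

        return response
```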

Hierarchical Feedback Processing

Implement sophisticated feedback hierarchies that distinguish between different types of user expertise and feedback quality.

Expert vs. Novice Feedback Weighting

Differentiate feedback based on user expertise levels, giving higher weight to domain experts while still incorporating broader user perspectives.

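```python
class HierarchicalFeedback:
    # Relative trust in feedback by user expertise level
    EXPERTISE_WEIGHTS = {"expert": 1.0, "intermediate": 0.7, "novice": 0.4}

    def __init__(self):
        # Hypothetical model that maps a user ID to an expertise level
        self.user_expertise = UserExpertiseModel()

    def weight_feedback(self, feedback, user_id):
        expertise_level = self.user_expertise.get_level(user_id)
        # Unknown levels fall back to the most conservative (novice) weight
        return feedback * self.EXPERTISE_WEIGHTS.get(expertise_level, 0.4)
```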

Performance Monitoring and Optimization

Continuous Evaluation Frameworks

Implement comprehensive monitoring systems that track system performance across multiple dimensions and automatically adjust learning parameters.

Multi-Metric Performance Tracking

```python
class PerformanceMonitor:
    def __init__(self):
        # Hypothetical metric objects, each exposing calculate(time_window)
        self.metrics = {
            "retrieval_accuracy": RetrievalAccuracyMetric(),
            "user_satisfaction": SatisfactionMetric(),
            "response_quality": QualityMetric(),
            "learning_rate": LearningRateMetric(),
        }

    def evaluate_system_performance(self, time_window="7d"):
        # Compute every metric over the same window, then summarize
        results = {
            metric_name: metric.calculate(time_window)
            for metric_name, metric in self.metrics.items()
        }
        return self.generate_insights(results)
```
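The metric classes above are placeholders. As one concrete option for `retrieval_accuracy`, hit rate at k and mean reciprocal rank (MRR) can be computed from labelled relevance judgments, for example documents that users marked as helpful. A minimal sketch, assuming each query's retrieved document IDs are listed in ranked order:

```python
def hit_rate_at_k(ranked_ids, relevant_ids, k=5):
    """Fraction of queries with at least one relevant document in the top k."""
    hits = sum(
        1 for ranked, rel in zip(ranked_ids, relevant_ids)
        if set(ranked[:k]) & set(rel)
    )
    return hits / len(ranked_ids) if ranked_ids else 0.0


def mean_reciprocal_rank(ranked_ids, relevant_ids):
    """Average of 1/rank of the first relevant document (0 if none retrieved)."""
    total = 0.0
    for ranked, rel in zip(ranked_ids, relevant_ids):
        rel = set(rel)
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in rel:
                total += 1.0 / rank
                break
    return total / len(ranked_ids) if ranked_ids else 0.0
```

Hit rate answers "did we find anything useful?", while MRR also rewards placing the useful document near the top, which matters when only the first few chunks fit into the prompt.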

Automated Hyperparameter Optimization

Implement systems that automatically adjust learning rates, exploration parameters, and feedback weights based on observed performance patterns.
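As a simple illustration of automatic adjustment, the sketch below tunes an exploration rate (such as epsilon in an epsilon-greedy policy) from the reward trend: it exploits more while rewards improve and explores more when they stall, which can also be an early sign of context drift. The class name, window size, and multipliers are hypothetical.

```python
from collections import deque
from statistics import mean


class ExplorationTuner:
    """Adjust an exploration rate from the trend in observed rewards."""

    def __init__(self, epsilon=0.2, window=100, min_eps=0.01, max_eps=0.5):
        self.epsilon = epsilon
        self.window = window
        self.min_eps, self.max_eps = min_eps, max_eps
        self.rewards = deque(maxlen=2 * window)  # previous window + current window

    def observe(self, reward):
        self.rewards.append(reward)
        if len(self.rewards) == 2 * self.window:
            history = list(self.rewards)
            previous = mean(history[:self.window])
            current = mean(history[self.window:])
            if current > previous:
                # Still improving: exploit more
                self.epsilon = max(self.min_eps, self.epsilon * 0.9)
            else:
                # Flat or declining: explore more
                self.epsilon = min(self.max_eps, self.epsilon * 1.2)
            self.rewards.clear()  # start a fresh comparison period
        return self.epsilon
```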

A/B Testing Integration

Deploy sophisticated A/B testing frameworks that allow for safe experimentation with new learning strategies while maintaining system reliability.

Safe Strategy Experimentation

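```python
import hashlib


class SafeExperimentation:
    def __init__(self, stable_rag_system, experimental_rag_system, experiment_fraction=0.2):
        self.stable_rag_system = stable_rag_system
        self.experimental_rag_system = experimental_rag_system
        # 80% of users stay on the stable system, 20% see the experiment
        self.experiment_fraction = experiment_fraction

    def is_control_group(self, user_id):
        # Hash the user ID so each user is consistently assigned to one group
        digest = hashlib.sha256(str(user_id).encode()).hexdigest()
        bucket = int(digest[:8], 16) / 0xFFFFFFFF
        return bucket >= self.experiment_fraction

    def route_query(self, query, user_id):
        if self.is_control_group(user_id):
            return self.stable_rag_system.process(query)
        return self.experimental_rag_system.process(query)

    def evaluate_experiment(self):
        # get_*_performance and statistical_significance_test are left to the
        # surrounding metrics pipeline (hypothetical helpers)
        control_metrics = self.get_control_performance()
        experiment_metrics = self.get_experiment_performance()
        return self.statistical_significance_test(
            control_metrics, experiment_metrics
        )
```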
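`statistical_significance_test` is left undefined above. When the success metric is a rate, such as the share of responses rated helpful, a two-proportion z-test is one standard choice. A minimal standard-library sketch (the function name is hypothetical), where group A is the control and group B the experiment:

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference in success rates between two groups."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    # Pooled rate under the null hypothesis that both groups are the same
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all successes or all failures: no evidence of a difference
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution via erfc
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, 400 of 500 control responses rated helpful versus 460 of 500 experimental ones gives z of about 5.47 and a p-value far below 0.001. Continuous metrics such as session duration call for a different test, such as a t-test.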

Enterprise Deployment Considerations

Scalability and Infrastructure

Design self-improving RAG systems with enterprise-scale deployment requirements, including distributed learning, fault tolerance, and performance optimization.

Distributed Learning Architecture

Implement federated learning approaches that enable multiple RAG instances to share insights while maintaining data privacy and system independence.
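Federated averaging (FedAvg) is one common way to share what each instance has learned without pooling raw interaction logs: each RAG instance trains locally, and only its parameters are merged, weighted by how much feedback it saw. A minimal sketch, assuming parameters are flat dictionaries of floats (real models would use tensors) and that every instance reports the same parameter names; `federated_average` is a hypothetical helper.

```python
def federated_average(updates):
    """Merge per-instance parameters, weighting each by its sample count.

    updates: list of (params, n_samples), where params maps name -> float.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples to aggregate")
    merged = {}
    for params, n in updates:
        for name, value in params.items():
            # Instances that saw more feedback contribute proportionally more
            merged[name] = merged.get(name, 0.0) + value * n / total
    return merged
```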

Resource Management

Optimize computational resources by implementing intelligent caching, lazy loading, and selective model updates that minimize infrastructure costs while maintaining performance.
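Caching embeddings for repeated queries is one of the cheapest wins here. A minimal sketch using the standard library's `functools.lru_cache`, assuming `embed_fn` is any function that maps text to a list of floats; the wrapper name is hypothetical.

```python
from functools import lru_cache


def make_cached_embedder(embed_fn, maxsize=50_000):
    """Wrap an embedding function with an in-memory LRU cache keyed on the text."""

    @lru_cache(maxsize=maxsize)
    def cached(text):
        # Tuples are immutable, so callers cannot corrupt cached vectors
        return tuple(embed_fn(text))

    return cached
```

Call `cache_clear()` on the returned function whenever the embedding model itself is updated; otherwise cached vectors will no longer match newly indexed documents.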

Integration with Existing Systems

Ensure seamless integration with enterprise knowledge management platforms, user authentication systems, and existing AI infrastructure.

API-First Design

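```python
class EnterpriseRAGAPI:
    def __init__(self, rag_system):
        # The RAG system is injected so the API layer stays thin
        self.rag_system = rag_system
        self.auth_handler = EnterpriseAuth()
        self.rate_limiter = RateLimiter()
        self.audit_logger = AuditLogger()

    # rate_limit, authenticate, and audit_log are illustrative decorators
    # built on the handlers above, not part of a specific library
    @rate_limit(requests_per_minute=100)
    @authenticate
    @audit_log
    def query(self, request):
        # Process authenticated query with full audit trail
        return self.rag_system.process_query(
            request.query,
            user_context=request.user,
        )
```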

The future of enterprise AI lies not in static systems that require constant manual tuning, but in adaptive architectures that learn and improve from every interaction. Self-improving RAG systems with RLHF represent a fundamental shift toward truly intelligent knowledge management—systems that don’t just retrieve information but understand context, learn from mistakes, and continuously optimize for user success.

By implementing the frameworks and techniques outlined in this guide, your organization can build RAG systems that evolve beyond their initial capabilities, delivering increasingly accurate and relevant results while reducing maintenance overhead. The key is starting with solid foundations in feedback collection and reward modeling, then progressively adding advanced optimization techniques as your system matures.

Ready to transform your RAG system from a static retrieval tool into a continuously improving knowledge engine? Begin by implementing basic feedback collection mechanisms in your existing system, then gradually introduce RLHF components as you build confidence in the approach. The investment in self-improving capabilities will pay dividends through enhanced user satisfaction, reduced manual tuning, and increasingly intelligent retrieval performance that adapts to your organization’s evolving needs.