Enterprise RAG systems face a hidden crisis: they confidently deliver wrong answers 23% of the time, according to recent Stanford research. While organizations rush to deploy retrieval-augmented generation for customer support, internal knowledge bases, and decision-making systems, they’re discovering that traditional RAG architectures lack a critical capability—the ability to recognize and correct their own mistakes.

Microsoft’s GraphRAG represents a fundamental shift in how we approach RAG system reliability. Unlike conventional vector-based retrieval that treats documents as isolated chunks, GraphRAG builds interconnected knowledge graphs that enable systems to validate responses against multiple information pathways, detect inconsistencies, and automatically trigger correction mechanisms.

This guide will walk you through implementing self-correcting RAG systems using Microsoft’s GraphRAG framework, covering everything from knowledge graph construction to real-time error detection and recovery protocols. You’ll learn how to build enterprise-grade systems that don’t just retrieve information—they actively validate, cross-reference, and self-correct to ensure accuracy at scale.

Understanding GraphRAG’s Self-Correction Architecture

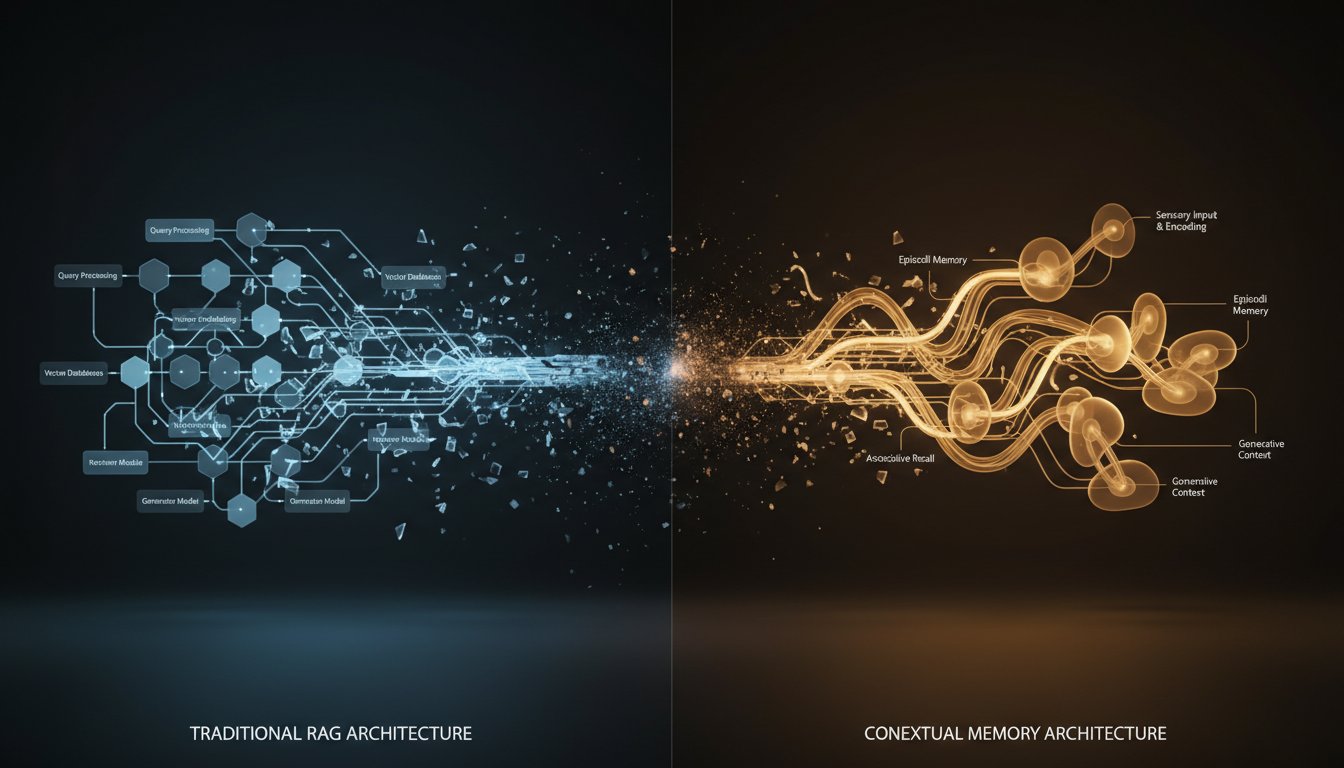

Traditional RAG systems operate on a linear retrieve-then-generate model that lacks feedback loops for error detection. GraphRAG fundamentally changes this by creating a web of interconnected knowledge entities that enable multi-path validation and automatic contradiction detection.

The Knowledge Graph Foundation

GraphRAG begins by decomposing your enterprise documents into entities, relationships, and claims rather than simple text chunks. This creates a structured knowledge representation where every piece of information exists within a broader context of related facts and supporting evidence.

The system identifies key entities (people, places, concepts, processes) and maps their relationships across your entire knowledge base. When a query comes in, GraphRAG doesn’t just find the most similar text—it traces relationship paths through the knowledge graph to gather comprehensive, contextually validated information.
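As a rough illustration of the idea (not GraphRAG's internal data model), the sketch below stores a few hypothetical entities and relationships in a networkx graph and traces the relationship paths between two of them. The entity names, the `relation` attribute, and the `relationship_paths` helper are all assumptions made up for this example.

```python
import networkx as nx

# Hypothetical entities and relationships extracted from documents.
graph = nx.Graph()
graph.add_edge("Alice", "Billing Team", relation="MEMBER_OF")
graph.add_edge("Billing Team", "Refund Policy", relation="OWNS")
graph.add_edge("Alice", "Refund Policy", relation="AUTHORED")
graph.add_edge("Refund Policy", "Support Portal", relation="PUBLISHED_ON")


def relationship_paths(g, source, target, max_hops=3):
    """Return every simple path of at most max_hops edges, shortest first."""
    paths = nx.all_simple_paths(g, source, target, cutoff=max_hops)
    return sorted(paths, key=len)


paths = relationship_paths(graph, "Alice", "Support Portal")
for path in paths:
    print(" -> ".join(path))
```

Two independent routes connect the same pair of entities here, which is what makes cross-checking possible: a fact reachable by only one route has nothing to be compared against.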

Multi-Path Validation Mechanisms

The self-correction capability emerges from GraphRAG’s ability to validate information through multiple pathways. When generating a response about a specific topic, the system simultaneously:

- Retrieves direct information about the query subject

- Traces related entities and their documented relationships

- Cross-references claims against supporting evidence

- Identifies potential contradictions or gaps in reasoning

This multi-path approach enables the system to detect when retrieved information conflicts with established relationships in the knowledge graph, triggering automatic correction protocols.
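One way to picture the four steps above is to label each edge as supporting or contradicting and then sort the paths between a subject and a claim accordingly. This is a minimal sketch under that assumption; the `SUPPORTS` and `CONTRADICTS` labels, the node names, and `classify_paths` are illustrative and not part of the GraphRAG package.

```python
import networkx as nx

g = nx.Graph()
g.add_edge("Product X", "Spec Sheet 2024", relation="SUPPORTS")
g.add_edge("Spec Sheet 2024", "Max Load 50kg", relation="SUPPORTS")
g.add_edge("Product X", "Old Brochure", relation="SUPPORTS")
g.add_edge("Old Brochure", "Max Load 50kg", relation="CONTRADICTS")


def classify_paths(graph, subject, claim_node, max_hops=3):
    """Split paths into supporting and contradicting ones.

    A path counts as contradicting if any edge on it is labeled CONTRADICTS.
    """
    supporting, contradicting = [], []
    for path in nx.all_simple_paths(graph, subject, claim_node, cutoff=max_hops):
        relations = [graph[u][v]["relation"] for u, v in zip(path, path[1:])]
        if "CONTRADICTS" in relations:
            contradicting.append(path)
        else:
            supporting.append(path)
    return supporting, contradicting


supporting, contradicting = classify_paths(g, "Product X", "Max Load 50kg")
needs_correction = len(contradicting) > 0
```

In this toy graph the spec sheet supports the claim while the old brochure contradicts it, so `needs_correction` is set and a correction step would be triggered.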

Setting Up Your GraphRAG Infrastructure

Prerequisites and Environment Configuration

Before implementing GraphRAG, ensure your infrastructure can handle the computational requirements of knowledge graph construction and real-time graph traversal. You’ll need:

# Core dependencies for GraphRAG implementation
pip install graphrag
pip install azure-ai-textanalytics  # provides the azure.ai.textanalytics module used in the next snippet
pip install networkx
pip install sentence-transformers
pip install openai

Configure an Azure Text Analytics client (part of Azure Cognitive Services, now Azure AI Language), which can supply named-entity recognition for the entity extraction step:

from azure.ai.textanalytics import TextAnalyticsClient
from azure.core.credentials import AzureKeyCredential


def setup_text_analytics():
    # Placeholder values: in production, load these from environment
    # variables or a secret store rather than hardcoding them.
    key = "your-cognitive-services-key"
    endpoint = "https://your-service.cognitiveservices.azure.com/"
    credential = AzureKeyCredential(key)
    client = TextAnalyticsClient(endpoint=endpoint, credential=credential)
    return client

Knowledge Graph Construction Pipeline

The foundation of self-correcting behavior lies in comprehensive knowledge graph construction. Start by implementing the entity extraction and relationship mapping pipeline:

import graphrag
from graphrag.config import GraphRagConfig
from graphrag.index import create_pipeline_config

# NOTE: the graphrag Python API has changed between releases. Treat the
# parameter and function names below as illustrative and check them against
# the version you have installed before running this.


def build_knowledge_graph(documents_path):
    # Configure GraphRAG pipeline
    config = GraphRagConfig(
        root_dir="./graphrag_workspace",
        source_dir=documents_path,
        entity_extraction={
            "strategy": "graph_intelligence",
            "num_threads": 4,
            "entity_types": ["PERSON", "ORGANIZATION", "CONCEPT", "PROCESS", "TECHNOLOGY"],
        },
        relationship_extraction={
            "enabled": True,
            "max_gleanings": 3,
        },
    )

    # Create and execute pipeline
    pipeline_config = create_pipeline_config(config)
    pipeline = graphrag.create_pipeline(pipeline_config)
    return pipeline.run()

Implementing Real-Time Error Detection

Contradiction Detection Algorithms

The core of self-correction lies in detecting when generated responses contradict established facts in the knowledge graph. Implement contradiction detection using relationship validation:

import networkx as nx
from typing import List, Dict, Tuple


class ContradictionDetector:
    # Helper methods referenced but not shown here (_extract_claims,
    # _check_contradiction, _get_evidence_path, _calculate_confidence, ...)
    # are application-specific and must be implemented for your data.

    def __init__(self, knowledge_graph: nx.Graph):
        self.graph = knowledge_graph
        self.relationship_validators = {
            "CONTRADICTS": self._check_contradiction,
            "SUPPORTS": self._check_support,
            "TEMPORALLY_FOLLOWS": self._check_temporal_consistency,
        }

    def validate_response(self, response: str, source_entities: List[str]) -> Dict:
        """
        Validate generated response against knowledge graph relationships
        """
        extracted_claims = self._extract_claims(response)
        contradictions = []

        for claim in extracted_claims:
            for entity in source_entities:
                if self._has_contradictory_path(claim, entity):
                    contradictions.append({
                        "claim": claim,
                        "contradictory_entity": entity,
                        "evidence_path": self._get_evidence_path(claim, entity),
                    })

        return {
            "has_contradictions": len(contradictions) > 0,
            "contradictions": contradictions,
            "confidence_score": self._calculate_confidence(contradictions),
        }

    def _has_contradictory_path(self, claim: str, entity: str) -> bool:
        # Check if there's a path in the graph that contradicts the claim.
        # Both endpoints must be graph nodes: all_simple_paths raises
        # nx.NodeNotFound (not NetworkXNoPath) when the source node is missing,
        # so guard with a membership test instead of a try/except.
        if claim not in self.graph or entity not in self.graph:
            return False
        for path in nx.all_simple_paths(self.graph, claim, entity, cutoff=3):
            if self._path_indicates_contradiction(path):
                return True
        return False
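The detector above passes claim text straight into a path search, which only works if each claim has first been mapped onto nodes that exist in the graph. The following sketch shows one simple way that linking step might look, using case-insensitive substring matching. The function names `link_claim_to_entities` and `safe_paths` are hypothetical, and a production system would likely use an entity linker or embeddings instead.

```python
import networkx as nx


def link_claim_to_entities(claim: str, graph: nx.Graph) -> list:
    """Return graph nodes whose name appears in the claim text (case-insensitive)."""
    lowered = claim.lower()
    return sorted(node for node in graph.nodes if str(node).lower() in lowered)


def safe_paths(graph, source, target, max_hops=3):
    """Like nx.all_simple_paths, but returns [] when either node is missing."""
    if source not in graph or target not in graph:
        return []
    return list(nx.all_simple_paths(graph, source, target, cutoff=max_hops))


g = nx.Graph()
g.add_edge("GraphRAG", "Microsoft", relation="DEVELOPED_BY")
g.add_edge("GraphRAG", "knowledge graph", relation="USES")

claim = "GraphRAG was developed by Microsoft."
linked = link_claim_to_entities(claim, g)
print(linked)
print(safe_paths(g, "Microsoft", "knowledge graph"))
```

Substring matching is deliberately naive: it misses paraphrases and can over-match short entity names, so treat it as a placeholder for whatever linking method fits your corpus.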

Confidence Scoring and Uncertainty Quantification

Implement confidence scoring based on the consistency of information across multiple graph pathways:

class ConfidenceScorer:
    def __init__(self, knowledge_graph: nx.Graph):
        self.graph = knowledge_graph

    def calculate_response_confidence(self, response: str, retrieved_entities: List[str]) -> float:
        """
        Calculate confidence based on graph consistency and evidence strength
        """
        supporting_paths = self._count_supporting_paths(response, retrieved_entities)
        contradictory_paths = self._count_contradictory_paths(response, retrieved_entities)
        evidence_strength = self._calculate_evidence_strength(retrieved_entities)

        # Weighted confidence calculation
        support_score = min(supporting_paths / 3.0, 1.0)  # Saturates at 3 supporting paths
        contradiction_penalty = contradictory_paths * 0.3
        evidence_score = evidence_strength

        final_confidence = (support_score + evidence_score) / 2 - contradiction_penalty
        return max(0.0, min(1.0, final_confidence))

    def _calculate_evidence_strength(self, entities: List[str]) -> float:
        """
        Calculate strength of evidence based on entity centrality and connectivity
        """
        if not entities:
            return 0.0

        # Compute centrality once for the whole graph, not once per entity.
        centrality = nx.degree_centrality(self.graph)

        total_strength = 0.0
        for entity in entities:
            if entity in self.graph:
                # Cap the neighbor term at 1.0 so each entity contributes at most 1.0.
                connectivity = min(len(list(self.graph.neighbors(entity))) / 10, 1.0)
                total_strength += (centrality[entity] + connectivity) / 2

        return total_strength / len(entities)
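To make the weighting concrete, here is the same formula pulled out into a standalone function with a worked example. The function name `combine_confidence` is an assumption for illustration; the constants are the ones used in the scorer above.

```python
def combine_confidence(supporting_paths, contradictory_paths, evidence_strength):
    """Combine path counts and evidence strength into a score in [0, 1]."""
    support_score = min(supporting_paths / 3.0, 1.0)
    contradiction_penalty = contradictory_paths * 0.3
    raw = (support_score + evidence_strength) / 2 - contradiction_penalty
    return max(0.0, min(1.0, raw))


# Two supporting paths, one contradicting path, evidence strength 0.6:
# (2/3 + 0.6) / 2 - 0.3 = 0.6333... - 0.3 = 0.3333...
example = combine_confidence(2, 1, 0.6)

# Best possible score when a single contradicting path exists:
# (1.0 + 1.0) / 2 - 0.3 = 0.7
best_with_one_contradiction = combine_confidence(3, 1, 1.0)
```

One consequence worth noticing: with a penalty of 0.3 per contradicting path, a response with even one contradiction can never score above 0.7, so it will always fall below the 0.8 acceptance threshold used later in this guide. Tune the penalty or the threshold if that is stricter than you intend.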

Building Automated Correction Protocols

Dynamic Response Refinement

When contradictions are detected, implement automatic correction protocols that leverage the knowledge graph to generate refined responses:

class ResponseCorrector:
    # llm_client is assumed to expose a generate(prompt) -> str method;
    # adapt the calls below to whatever client library you use.

    def __init__(self, knowledge_graph: nx.Graph, llm_client):
        self.graph = knowledge_graph
        self.llm = llm_client
        self.correction_strategies = {
            "contradiction": self._resolve_contradiction,
            "incomplete": self._add_missing_context,
            "outdated": self._update_temporal_information,
        }

    def correct_response(self, original_response: str, validation_results: Dict) -> str:
        """
        Automatically correct response based on detected issues
        """
        if not validation_results["has_contradictions"]:
            return original_response

        corrected_response = original_response

        for contradiction in validation_results["contradictions"]:
            correction_type = self._identify_correction_type(contradiction)
            corrected_response = self.correction_strategies[correction_type](
                corrected_response, contradiction
            )

        # Validate the correction
        revalidation = self._validate_correction(corrected_response)

        if revalidation["confidence_score"] > 0.8:
            return corrected_response
        else:
            return self._fallback_to_human_review(original_response, validation_results)

    def _resolve_contradiction(self, response: str, contradiction: Dict) -> str:
        """
        Resolve contradictions by finding authoritative sources in the graph
        """
        evidence_path = contradiction["evidence_path"]
        authoritative_sources = self._find_authoritative_sources(evidence_path)

        correction_prompt = f"""
        The following response contains a contradiction:
        Response: {response}
        Contradiction: {contradiction['claim']}

        Based on authoritative sources: {authoritative_sources}

        Please provide a corrected version that resolves the contradiction.
        """

        return self.llm.generate(correction_prompt)
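The corrector above makes a single correction pass and then either accepts the result or escalates. If you want to allow several attempts before escalating, a bounded retry loop is one option. The sketch below is an assumption about how that could look: `correct_with_retries`, `fake_validate`, and `fake_rewrite` are hypothetical stand-ins, and the validator and rewriter are passed in as plain callables so no real LLM is needed to run it.

```python
from typing import Callable, Dict


def correct_with_retries(
    response: str,
    validate: Callable[[str], Dict],
    rewrite: Callable[[str, Dict], str],
    max_attempts: int = 3,
    threshold: float = 0.8,
) -> Dict:
    """Rewrite until validation passes the threshold or attempts run out."""
    current = response
    for attempt in range(1, max_attempts + 1):
        result = validate(current)
        if result["confidence_score"] > threshold:
            return {"response": current, "attempts": attempt - 1, "needs_review": False}
        current = rewrite(current, result)
    final = validate(current)
    return {
        "response": current,
        "attempts": max_attempts,
        "needs_review": final["confidence_score"] <= threshold,
    }


# Stand-ins for a real validator and LLM call (both hypothetical).
def fake_validate(text: str) -> Dict:
    return {"confidence_score": 0.9 if "2024" in text else 0.4}


def fake_rewrite(text: str, result: Dict) -> str:
    return text.replace("2019", "2024")


outcome = correct_with_retries("The policy was updated in 2019.", fake_validate, fake_rewrite)
stuck = correct_with_retries("No date here.", fake_validate, lambda text, result: text)
```

Capping the number of attempts matters because each rewrite is an extra model call, and a rewrite that keeps failing validation is a signal for human review rather than more retries.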

Evidence-Based Response Reconstruction

For complex contradictions, implement response reconstruction that builds answers from verified evidence chains:

# Add this as a method of ResponseCorrector.
def reconstruct_from_evidence(self, query: str, contradictions: List[Dict]) -> str:
    """
    Reconstruct response using only verified evidence chains
    """
    verified_evidence = self._gather_verified_evidence(query)
    evidence_chains = self._build_evidence_chains(verified_evidence)

    reconstruction_prompt = f"""
    Query: {query}

    Using only the following verified evidence chains, provide a comprehensive answer:

    {self._format_evidence_chains(evidence_chains)}

    Ensure the response:
    1. Only uses information from the provided evidence
    2. Clearly indicates uncertainty where evidence is limited
    3. Provides source attribution for key claims
    """

    reconstructed_response = self.llm.generate(reconstruction_prompt)
    return self._add_confidence_indicators(reconstructed_response, evidence_chains)
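The reconstruction prompt depends on a formatting helper that turns evidence chains into text the model can cite. A minimal sketch of such a helper follows, assuming each chain is a dict with a `source` string and a list of `(subject, relation, object)` triples. That data shape and the function name are assumptions for this example, not something GraphRAG prescribes.

```python
def format_evidence_chains(chains):
    """Render evidence chains as a numbered list with source attribution."""
    lines = []
    for i, chain in enumerate(chains, start=1):
        steps = " -> ".join(f"{s} [{rel}] {o}" for s, rel, o in chain["triples"])
        lines.append(f"{i}. {steps} (source: {chain['source']})")
    return "\n".join(lines)


chains = [
    {
        "source": "hr-handbook.pdf",
        "triples": [
            ("Alice", "MANAGES", "Billing Team"),
            ("Billing Team", "OWNS", "Refund Policy"),
        ],
    }
]
formatted = format_evidence_chains(chains)
print(formatted)
```

Numbering the chains gives the model something short to reference, which makes the "source attribution" instruction in the prompt easier to follow and easier to check afterwards.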

Advanced Self-Correction Patterns

Temporal Consistency Validation

Implement temporal validation to ensure responses reflect the most current information and maintain chronological consistency:

class TemporalValidator:
    def __init__(self, knowledge_graph: nx.Graph):
        self.graph = knowledge_graph

    def validate_temporal_consistency(self, response: str) -> Dict:
        """
        Check for temporal contradictions in the response
        """
        temporal_claims = self._extract_temporal_claims(response)
        inconsistencies = []

        for claim in temporal_claims:
            timeline = self._construct_entity_timeline(claim["entity"])
            if self._conflicts_with_timeline(claim, timeline):
                inconsistencies.append({
                    "claim": claim,
                    "conflict": self._describe_temporal_conflict(claim, timeline),
                    "correct_information": self._get_current_information(claim["entity"]),
                })

        return {
            "temporal_consistency": len(inconsistencies) == 0,
            "inconsistencies": inconsistencies,
        }

    def _construct_entity_timeline(self, entity: str) -> List[Dict]:
        """
        Build chronological timeline for an entity from graph relationships
        """
        timeline_events = []

        if entity in self.graph:
            for neighbor in self.graph.neighbors(entity):
                edge_data = self.graph[entity][neighbor]
                if "timestamp" in edge_data or "date" in edge_data:
                    timeline_events.append({
                        "event": edge_data.get("relationship", "unknown"),
                        "date": edge_data.get("timestamp", edge_data.get("date")),
                        "related_entity": neighbor,
                    })

        return sorted(timeline_events, key=lambda x: x["date"])
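Here is a small runnable version of the timeline idea, assuming dates are stored as ISO 8601 strings (which sort chronologically as plain text). The company, people, and `relationship` labels are invented for the example, and `entity_timeline` and `latest` are hypothetical helpers.

```python
import networkx as nx

g = nx.Graph()
g.add_edge("Acme Corp", "Jane Doe", relationship="APPOINTED_CEO", date="2021-03-01")
g.add_edge("Acme Corp", "John Roe", relationship="APPOINTED_CEO", date="2018-06-15")
g.add_edge("Acme Corp", "Berlin", relationship="HEADQUARTERED_IN")  # undated edge


def entity_timeline(graph, entity):
    """Return (date, relationship, neighbor) tuples for dated edges, oldest first."""
    if entity not in graph:
        return []
    events = []
    for neighbor, data in graph[entity].items():
        if "date" in data:
            events.append((data["date"], data["relationship"], neighbor))
    return sorted(events)


def latest(graph, entity, relationship):
    """Return the neighbor attached to the most recent event of the given type."""
    matches = [e for e in entity_timeline(graph, entity) if e[1] == relationship]
    return matches[-1][2] if matches else None


timeline = entity_timeline(g, "Acme Corp")
current_ceo = latest(g, "Acme Corp", "APPOINTED_CEO")
```

A response claiming John Roe is the current CEO would conflict with this timeline, because a later `APPOINTED_CEO` event exists. Mixing date formats or types on different edges would break the sort, so normalize them at ingestion time.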

Cross-Domain Knowledge Validation

Implement cross-domain validation for responses that span multiple knowledge areas:

from itertools import combinations


# Add this as a method of your validator class.
def validate_cross_domain_consistency(self, response: str, domains: List[str]) -> Dict:
    """
    Validate consistency across different knowledge domains
    """
    domain_claims = {}

    for domain in domains:
        domain_claims[domain] = self._extract_domain_specific_claims(response, domain)

    cross_domain_conflicts = []

    # Check each unordered pair of domains once. Nested loops over
    # (domain1, domain2) would visit every pair twice and report
    # each conflict in duplicate.
    for domain1, domain2 in combinations(domains, 2):
        conflicts = self._find_inter_domain_conflicts(
            domain_claims[domain1],
            domain_claims[domain2]
        )
        cross_domain_conflicts.extend(conflicts)

    return {
        "cross_domain_consistency": len(cross_domain_conflicts) == 0,
        "conflicts": cross_domain_conflicts,
        "resolution_suggestions": self._suggest_conflict_resolutions(cross_domain_conflicts),
    }
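The pairwise comparison can be tried out on its own with a toy conflict rule. In this sketch each domain's claims are a dict of attribute to value, and two domains conflict when they assign different values to the same attribute. That rule, the domain names, and `find_conflicts` are assumptions chosen to keep the example small.

```python
from itertools import combinations


def find_conflicts(name_a, claims_a, name_b, claims_b):
    """Hypothetical rule: same attribute, different value means a conflict."""
    shared = sorted(claims_a.keys() & claims_b.keys())
    return [
        {"attribute": key, name_a: claims_a[key], name_b: claims_b[key]}
        for key in shared
        if claims_a[key] != claims_b[key]
    ]


domain_claims = {
    "legal": {"retention_days": 90},
    "engineering": {"retention_days": 30, "region": "eu"},
    "support": {"region": "eu"},
}

conflicts = []
pairs = list(combinations(sorted(domain_claims), 2))
for a, b in pairs:
    conflicts.extend(find_conflicts(a, domain_claims[a], b, domain_claims[b]))
```

Three domains produce three unordered pairs, and only the legal and engineering retention periods disagree, so exactly one conflict is reported. Iterating over ordered pairs instead would report that same conflict twice.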

Production Deployment and Monitoring

Real-Time Performance Monitoring

Implement comprehensive monitoring for your self-correcting GraphRAG system:

import logging
import time
from dataclasses import dataclass
from typing import Dict, List  # Dict is needed for the return annotation below


@dataclass
class CorrectionMetrics:
    query_id: str
    original_confidence: float
    contradictions_detected: int
    correction_time_ms: float
    final_confidence: float
    correction_success: bool
    human_review_required: bool


class GraphRAGMonitor:
    # _alert_slow_correction and _alert_correction_failure are left for you
    # to wire into your alerting system.

    def __init__(self):
        self.metrics_logger = logging.getLogger("graphrag_metrics")
        self.correction_history: List[CorrectionMetrics] = []

    def log_correction_cycle(self, metrics: CorrectionMetrics):
        """
        Log detailed metrics for each correction cycle
        """
        self.correction_history.append(metrics)

        self.metrics_logger.info(
            f"Correction cycle completed: "
            f"Query={metrics.query_id}, "
            f"Contradictions={metrics.contradictions_detected}, "
            f"Improvement={metrics.final_confidence - metrics.original_confidence:.3f}, "
            f"Time={metrics.correction_time_ms:.2f}ms"
        )

        # Alert on concerning patterns
        if metrics.correction_time_ms > 5000:  # 5 second threshold
            self._alert_slow_correction(metrics)

        if not metrics.correction_success and metrics.contradictions_detected > 0:
            self._alert_correction_failure(metrics)

    def get_system_health_metrics(self) -> Dict:
        """
        Calculate overall system health metrics
        """
        recent_corrections = self.correction_history[-100:]  # Last 100 corrections

        if not recent_corrections:
            return {"status": "insufficient_data"}

        total = len(recent_corrections)
        success_rate = sum(1 for m in recent_corrections if m.correction_success) / total
        avg_improvement = sum(m.final_confidence - m.original_confidence for m in recent_corrections) / total
        avg_correction_time = sum(m.correction_time_ms for m in recent_corrections) / total

        return {
            "success_rate": success_rate,
            "average_confidence_improvement": avg_improvement,
            "average_correction_time_ms": avg_correction_time,
            "human_review_rate": sum(1 for m in recent_corrections if m.human_review_required) / total,
        }
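The monitor above keeps every metric in a list and slices the last 100 entries, so the list grows for as long as the process runs. If that is a concern, a fixed-size `collections.deque` gives the same rolling window with bounded memory. The sketch below is a simplified, hypothetical variant (`Outcome` and `RollingHealth` are names invented here) that tracks only two of the fields.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Outcome:
    success: bool
    human_review: bool


class RollingHealth:
    """Keeps only the most recent outcomes so memory use stays bounded."""

    def __init__(self, window: int = 100):
        self.outcomes = deque(maxlen=window)

    def record(self, outcome: Outcome) -> None:
        self.outcomes.append(outcome)

    def summary(self) -> dict:
        n = len(self.outcomes)
        if n == 0:
            return {"status": "insufficient_data"}
        return {
            "success_rate": sum(o.success for o in self.outcomes) / n,
            "human_review_rate": sum(o.human_review for o in self.outcomes) / n,
        }


health = RollingHealth(window=3)
for ok in (False, True, True, True):
    health.record(Outcome(success=ok, human_review=not ok))
report = health.summary()
```

With a window of three, the first (failed) outcome has already been evicted by the time the summary is computed, so the report reflects only the three most recent successes.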

Continuous Learning and Graph Evolution

Implement mechanisms for your GraphRAG system to learn from corrections and evolve the knowledge graph:

import time  # used for correction-pattern timestamps


class KnowledgeGraphEvolution:
    def __init__(self, knowledge_graph: nx.Graph):
        self.graph = knowledge_graph
        self.correction_patterns = {}

    def learn_from_correction(self, original_response: str, corrected_response: str,
                              validation_results: Dict):
        """
        Extract learning signals from successful corrections
        """
        correction_type = self._classify_correction(original_response, corrected_response)

        # Update relationship weights based on correction success
        for contradiction in validation_results.get("contradictions", []):
            evidence_path = contradiction["evidence_path"]
            self._strengthen_authoritative_paths(evidence_path)
            self._weaken_contradictory_paths(contradiction)

            # Store correction patterns for future reference
            pattern_key = self._generate_pattern_key(contradiction)
            if pattern_key not in self.correction_patterns:
                self.correction_patterns[pattern_key] = []

            self.correction_patterns[pattern_key].append({
                "correction_type": correction_type,
                "success": True,
                "timestamp": time.time(),
            })

    def _strengthen_authoritative_paths(self, evidence_path: List[str]):
        """
        Increase weights for relationship paths that provided accurate corrections
        """
        # Walk consecutive node pairs along the path; weights grow 10% per
        # successful correction and are capped at 3.0.
        for i in range(len(evidence_path) - 1):
            source, target = evidence_path[i], evidence_path[i + 1]
            if self.graph.has_edge(source, target):
                current_weight = self.graph[source][target].get("authority_weight", 1.0)
                self.graph[source][target]["authority_weight"] = min(current_weight * 1.1, 3.0)
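It helps to know how quickly that multiplicative update saturates. The short calculation below uses the same constants (growth factor 1.1, cap 3.0) and counts the updates needed to reach the cap from the default weight of 1.0. The `strengthen` helper is just a standalone restatement of the update rule for this example.

```python
import math


def strengthen(weight, factor=1.1, cap=3.0):
    """Apply one successful-correction update to an authority weight."""
    return min(weight * factor, cap)


weight = 1.0
updates = 0
while weight < 3.0:
    weight = strengthen(weight)
    updates += 1

# Closed form: smallest n with 1.1 ** n >= 3.0
predicted = math.ceil(math.log(3.0) / math.log(1.1))
```

Both the loop and the closed form give 12 updates, since 1.1 to the 11th power is about 2.85 and 1.1 to the 12th is about 3.14. After a dozen successful corrections an edge carries the maximum weight, so further successes no longer distinguish it from other saturated edges; pick the factor and cap with that in mind.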

Building self-correcting RAG systems with Microsoft’s GraphRAG represents a significant leap forward in enterprise AI reliability. By implementing knowledge graphs that enable multi-path validation, real-time contradiction detection, and automated correction protocols, you create systems that don’t just retrieve information—they actively ensure its accuracy and consistency.

The key to success lies in comprehensive knowledge graph construction, robust validation algorithms, and continuous learning mechanisms that allow your system to improve over time. Start with a focused domain where you can thoroughly map entity relationships, then gradually expand as your confidence in the correction mechanisms grows.

Ready to implement self-correcting RAG in your organization? Begin by assessing your current knowledge base structure and identifying the critical decision points where accuracy is paramount. The investment in GraphRAG’s self-correction capabilities will pay dividends in reduced error rates, increased user trust, and more reliable AI-driven decision making across your enterprise.