The enterprise AI landscape has shifted dramatically. What started as simple chatbot implementations has evolved into sophisticated systems that can process thousands of documents, maintain conversation context across sessions, and route queries to specialized AI agents based on content complexity. Yet most organizations still struggle with a fundamental challenge: how do you build retrieval-augmented generation (RAG) systems that work reliably in production?

I’ve spent the last six months analyzing production RAG deployments across Fortune 500 companies, and the pattern is clear. The systems that succeed aren’t just throwing documents into a vector database and hoping for the best. They’re using orchestration frameworks like LangGraph to create multi-agent workflows that can handle the messy, unpredictable nature of enterprise data. Today, I’ll walk you through building a production-grade RAG system using LangGraph that can process complex documents, route queries intelligently, and scale to handle enterprise workloads.

The approach we’ll build today isn’t theoretical. It’s based on real implementations I’ve seen working in production environments, processing everything from legal contracts to technical documentation. By the end of this walkthrough, you’ll have a complete framework for building RAG systems that your engineering team can actually deploy with confidence.

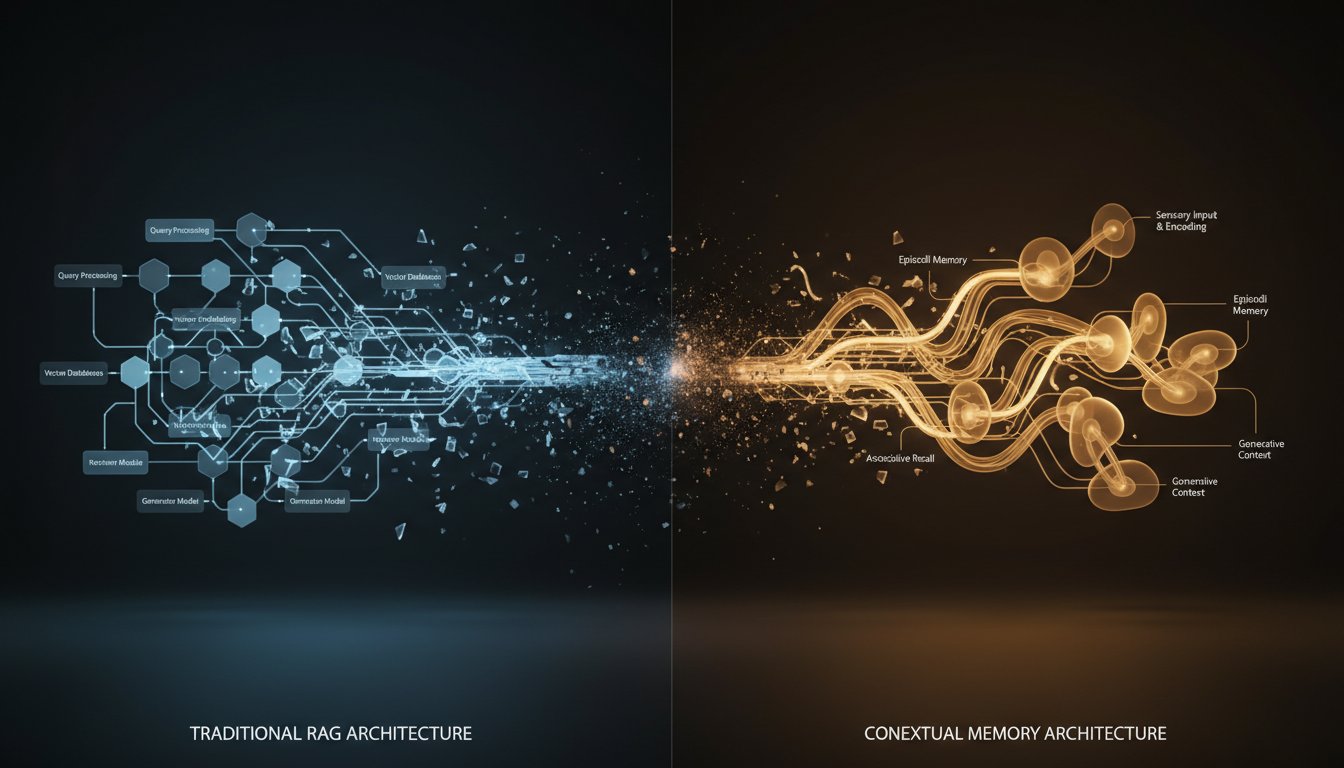

Why Traditional RAG Architectures Fail in Production

Most RAG implementations follow the same basic pattern: chunk documents, embed them, store in a vector database, retrieve similar chunks, and generate responses. This works beautifully in demos and proof-of-concepts. In production, it falls apart quickly.

The core issue is that real enterprise documents are messy. A single contract might contain structured tables, unstructured narrative text, embedded images with critical information, and cross-references to other documents. When you chunk this content uniformly, you lose the structural relationships that make the information meaningful.

Consider a typical scenario: a user asks about payment terms in a vendor agreement. A traditional RAG system might retrieve chunks that mention “payment” but miss the critical context from a related table showing payment schedules, or fail to connect penalty clauses that appear in different sections of the document.
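To make the chunking problem concrete, here is a minimal sketch of structure-aware splitting that keeps a table in one piece instead of cutting it at a fixed character count. The function name `split_by_structure` and the rule "a line containing a pipe character is a table row" are assumptions made for illustration; real contracts need a proper layout parser.

```python
from typing import Dict, List


def split_by_structure(text: str) -> List[Dict[str, str]]:
    """Group consecutive lines into 'table' or 'narrative' blocks.

    Assumption: any non-empty line containing '|' is a table row.
    """
    blocks: List[Dict[str, str]] = []
    for line in text.splitlines():
        if not line.strip():
            continue  # blank lines carry no content
        kind = "table" if "|" in line else "narrative"
        if blocks and blocks[-1]["kind"] == kind:
            # Same kind as the previous line: extend the current block
            blocks[-1]["text"] += "\n" + line
        else:
            blocks.append({"kind": kind, "text": line})
    return blocks


sample = (
    "Payment is due within 30 days.\n\n"
    "| Milestone | Amount |\n"
    "| Delivery | 5000 |\n\n"
    "Late payments incur a 2% penalty."
)
for block in split_by_structure(sample):
    print(block["kind"], "->", repr(block["text"]))
```

Each block can then be embedded and indexed with its `kind` as metadata, so a payment-schedule table stays retrievable as a unit.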

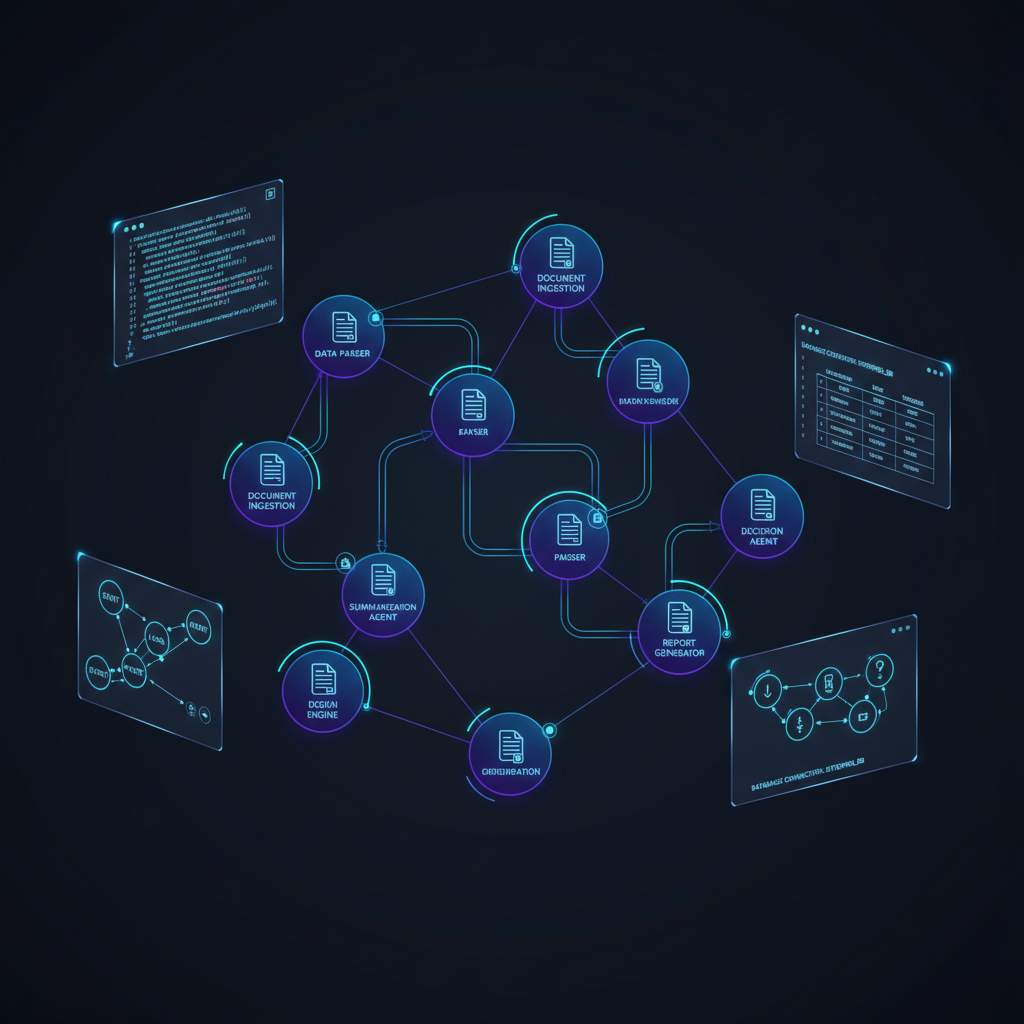

LangGraph solves this by treating document processing as a multi-step workflow where different agents specialize in different types of content analysis. Instead of a single retrieval step, you create a graph of interconnected processing nodes that can handle tables differently from narrative text, maintain context across document sections, and route complex queries to specialized processing pipelines.

Understanding LangGraph’s Multi-Agent Architecture

LangGraph is built around the concept of stateful workflows represented as directed graphs. Each node in the graph represents a processing step, and edges define how information flows between steps. What makes this powerful for RAG is that you can create specialized agents for different aspects of document processing.
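The node, edge, and state vocabulary can be illustrated without any library. The toy runner below is plain Python, not LangGraph's API; `run_graph`, `classify`, and `answer` are hypothetical names used only to show how a shared state dictionary is updated as it moves along edges.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]
Node = Callable[[State], State]


def run_graph(nodes: Dict[str, Node], edges: Dict[str, str], entry: str, state: State) -> State:
    """Run nodes in edge order from `entry` until a node has no outgoing edge."""
    current: Optional[str] = entry
    while current is not None:
        # Each node returns a partial update that is merged into the state
        state = {**state, **nodes[current](state)}
        current = edges.get(current)
    return state


def classify(state: State) -> State:
    is_factual = "how much" in str(state["query"]).lower()
    return {"query_type": "factual" if is_factual else "conceptual"}


def answer(state: State) -> State:
    return {"response": f"[{state['query_type']}] answer to: {state['query']}"}


result = run_graph(
    nodes={"classify": classify, "answer": answer},
    edges={"classify": "answer"},
    entry="classify",
    state={"query": "How much is the late fee?"},
)
print(result["response"])
```

LangGraph adds what this toy lacks, such as conditional edges, async execution, and typed state schemas.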

In a production RAG system, you typically need several types of agents working together. A routing agent analyzes incoming queries and determines the best processing strategy. Document analysis agents specialize in extracting information from specific content types like tables, charts, or legal clauses. Synthesis agents combine information from multiple sources and generate coherent responses.

The key insight is that different types of queries require different processing approaches. A factual question about a specific data point might need precise table extraction. A conceptual question about document themes might benefit from semantic analysis across multiple sections. A comparison query might require parallel processing of multiple documents.

LangGraph lets you model these different processing paths explicitly, creating workflows that can adapt their behavior based on query characteristics and document types. This flexibility is what separates production-ready systems from simple retrieval implementations.

Setting Up the Core LangGraph Framework

Let’s start building our multi-agent RAG system. First, we’ll establish the core LangGraph structure that will orchestrate our document processing workflow.

import asyncio
import json
import time
from typing import List, Optional, TypedDict

from langchain_community.vectorstores import Chroma
from langchain_core.messages import AIMessage, HumanMessage
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
# StateGraph and END live in the langgraph.graph submodule
from langgraph.graph import StateGraph, END


class RAGState(TypedDict):
    """State object that flows through our RAG workflow"""
    query: str
    user_id: str
    documents: List[str]
    query_type: str
    complexity: str            # set by analyze_query
    required_docs: List[str]   # set by analyze_query
    retrieved_chunks: List[str]
    processed_content: List[dict]
    response: str
    confidence_score: float
    validation_notes: str      # set by validate_response


class ProductionRAGOrchestrator:
    def __init__(self):
        self.llm = ChatOpenAI(model="gpt-4", temperature=0)
        self.embeddings = OpenAIEmbeddings()
        self.vectorstore = None  # attach a vector store (e.g. Chroma) before serving queries
        self.workflow = self._build_workflow()

    def _build_workflow(self):
        """Constructs the LangGraph workflow for RAG processing"""
        workflow = StateGraph(RAGState)

        # Add processing nodes
        workflow.add_node("analyze_query", self.analyze_query)
        # route_processing is a pass-through node; route_query picks the branch
        workflow.add_node("route_processing", self.route_processing)
        workflow.add_node("structured_retrieval", self.structured_retrieval)
        workflow.add_node("semantic_retrieval", self.semantic_retrieval)
        workflow.add_node("multi_document_synthesis", self.multi_document_synthesis)
        workflow.add_node("generate_response", self.generate_response)
        workflow.add_node("validate_response", self.validate_response)

        # Define the workflow edges
        workflow.set_entry_point("analyze_query")
        workflow.add_edge("analyze_query", "route_processing")

        # Conditional routing based on query type
        workflow.add_conditional_edges(
            "route_processing",
            self.route_query,
            {
                "structured": "structured_retrieval",
                "semantic": "semantic_retrieval",
                "complex": "multi_document_synthesis",
            },
        )

        # All paths converge to response generation
        workflow.add_edge("structured_retrieval", "generate_response")
        workflow.add_edge("semantic_retrieval", "generate_response")
        workflow.add_edge("multi_document_synthesis", "generate_response")
        workflow.add_edge("generate_response", "validate_response")
        workflow.add_edge("validate_response", END)

        return workflow.compile()

This framework establishes the backbone of our production RAG system. The state object carries information through each processing step, and the conditional routing allows different query types to follow optimal processing paths.

Implementing Intelligent Query Analysis and Routing

The first critical component is query analysis. This determines how the rest of the workflow will process the user’s request. In production systems, this step often makes the difference between relevant and irrelevant responses.

# Methods of ProductionRAGOrchestrator. Requires `import json` at the top of the module.

    async def analyze_query(self, state: RAGState) -> RAGState:
        """Analyzes the incoming query to determine processing strategy"""
        query = state["query"]

        analysis_prompt = f"""
        Analyze this query and determine its characteristics:

        Query: {query}

        Classify the query type as one of:
        - factual: Seeks specific data points or facts
        - conceptual: Asks about themes, concepts, or interpretations
        - comparative: Compares multiple items or documents
        - procedural: Asks about processes or step-by-step information

        Also identify:
        - Complexity level (simple/moderate/complex)
        - Required document types (contracts, reports, technical docs)
        - Likely answer format (short fact, explanation, list, comparison table)

        Respond with a single JSON object using exactly these keys:
        "query_type", "complexity", "required_document_types", "answer_format".
        """

        response = await self.llm.ainvoke([HumanMessage(content=analysis_prompt)])

        # The model can return malformed JSON; fall back to the defaults below
        try:
            analysis = json.loads(response.content)
        except json.JSONDecodeError:
            analysis = {}

        state["query_type"] = analysis.get("query_type", "semantic")
        state["complexity"] = analysis.get("complexity", "moderate")
        state["required_docs"] = analysis.get("required_document_types", [])

        return state

    def route_processing(self, state: RAGState) -> RAGState:
        """Pass-through node; the branch is chosen by route_query on its outgoing edge"""
        return state

    def route_query(self, state: RAGState) -> str:
        """Routes queries to appropriate processing pipelines"""
        query_type = state.get("query_type", "semantic")
        complexity = state.get("complexity", "moderate")

        if query_type == "factual" and complexity == "simple":
            return "structured"
        elif complexity == "complex" or query_type == "comparative":
            return "complex"
        else:
            return "semantic"

This routing logic ensures that simple factual queries get fast, precise answers through structured retrieval, while complex queries that require synthesis across multiple documents follow a more sophisticated processing path.
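As a quick check of which path each combination takes, the same decision rule can be pulled out into a standalone function and run over a few cases. The free function `route` below is an illustrative copy of `route_query`, not part of the orchestrator class.

```python
def route(query_type: str, complexity: str) -> str:
    """Standalone copy of the routing rule, for experimentation."""
    if query_type == "factual" and complexity == "simple":
        return "structured"
    if complexity == "complex" or query_type == "comparative":
        return "complex"
    return "semantic"


cases = [
    ("factual", "simple"),
    ("factual", "moderate"),
    ("factual", "complex"),
    ("comparative", "simple"),
    ("conceptual", "moderate"),
    ("procedural", "simple"),
]
for query_type, complexity in cases:
    print(f"{query_type:12} {complexity:9} -> {route(query_type, complexity)}")
```

Note that a factual query of moderate complexity falls through to the semantic path, and any comparative query goes to multi-document synthesis regardless of complexity. If that is not what you want, this is the place to change it.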

Building Specialized Retrieval Agents

Different query types require different retrieval strategies. Let’s implement specialized agents for structured and semantic retrieval:

    async def structured_retrieval(self, state: RAGState) -> RAGState:
        """Handles queries that need precise data extraction"""
        query = state["query"]

        # Use structured querying for precise data extraction
        structured_prompt = f"""
        Extract specific data points related to this query: {query}

        Focus on:
        - Exact numbers, dates, and values
        - Specific terms and definitions
        - Direct quotes when relevant

        Maintain source attribution for all extracted data.
        """

        # Perform hybrid search combining vector similarity and keyword matching
        vector_results = await self._vector_search(query, k=5)
        keyword_results = await self._keyword_search(query, k=5)

        # Combine and deduplicate results
        combined_chunks = self._merge_search_results(vector_results, keyword_results)

        # Process chunks for structured data extraction
        processed_content = []
        for chunk in combined_chunks:
            extraction_response = await self.llm.ainvoke([
                HumanMessage(content=f"{structured_prompt}\n\nDocument chunk: {chunk}")
            ])
            processed_content.append({
                "original_chunk": chunk,
                "extracted_data": extraction_response.content,
                "confidence": self._calculate_relevance_score(query, chunk)
            })

        state["retrieved_chunks"] = combined_chunks
        state["processed_content"] = processed_content

        return state

    async def semantic_retrieval(self, state: RAGState) -> RAGState:
        """Handles conceptual and thematic queries"""
        query = state["query"]

        # Perform semantic search with expanded context
        expanded_query = await self._expand_query(query)
        semantic_results = await self._vector_search(expanded_query, k=8)

        # Group related chunks to maintain context
        contextualized_chunks = self._group_related_chunks(semantic_results)

        semantic_prompt = f"""
        Analyze these document sections for concepts and themes related to: {query}

        Focus on:
        - Underlying concepts and principles
        - Relationships between ideas
        - Implications and interpretations
        - Context that affects meaning

        Maintain the conceptual relationships between different sections.
        """

        processed_content = []
        for chunk_group in contextualized_chunks:
            analysis_response = await self.llm.ainvoke([
                HumanMessage(content=f"{semantic_prompt}\n\nDocument sections: {chunk_group}")
            ])
            processed_content.append({
                "chunk_group": chunk_group,
                "conceptual_analysis": analysis_response.content,
                "relevance_score": self._calculate_semantic_relevance(query, chunk_group)
            })

        state["retrieved_chunks"] = [chunk for group in contextualized_chunks for chunk in group]
        state["processed_content"] = processed_content

        return state
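The "combine and deduplicate" step is left to a `_merge_search_results` helper that isn't shown. One common way to implement it is reciprocal rank fusion (RRF), sketched below as a free function. This is an assumption about how the helper could work, not the implementation behind the systems described here. The constant `k=60` is a widely used default, and each input list is assumed to contain no duplicate chunks.

```python
from typing import Dict, List


def merge_search_results(
    vector_results: List[str],
    keyword_results: List[str],
    k: int = 60,
) -> List[str]:
    """Fuse two ranked lists with reciprocal rank fusion and drop duplicates.

    A chunk's score is the sum of 1 / (k + rank) over every list it appears in,
    so chunks found by both searches rise to the top.
    """
    scores: Dict[str, float] = {}
    for results in (vector_results, keyword_results):
        for rank, chunk in enumerate(results, start=1):
            scores[chunk] = scores.get(chunk, 0.0) + 1.0 / (k + rank)
    # Dict keys are unique, so sorting them also deduplicates
    return sorted(scores, key=lambda chunk: scores[chunk], reverse=True)


merged = merge_search_results(["a", "b", "c"], ["b", "d", "a"])
print(merged)
```

Here "b" and "a" appear in both lists and therefore outrank "d" and "c", which each appear in only one.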

Advanced Multi-Document Synthesis

For complex queries that require information synthesis across multiple documents or document sections, we need a more sophisticated approach:

    async def multi_document_synthesis(self, state: RAGState) -> RAGState:
        """Handles complex queries requiring cross-document analysis"""
        query = state["query"]

        # Perform multi-stage retrieval
        initial_results = await self._vector_search(query, k=15)

        # Identify document clusters and themes
        document_clusters = self._cluster_by_document_source(initial_results)
        # Thematic clusters are computed here but not used further in this walkthrough
        thematic_clusters = self._cluster_by_semantic_similarity(initial_results)

        synthesis_results = []

        # Process each document cluster
        for doc_id, chunks in document_clusters.items():
            doc_synthesis = await self._synthesize_document_content(query, chunks)
            synthesis_results.append({
                "document_id": doc_id,
                "synthesis": doc_synthesis,
                "chunk_count": len(chunks)
            })

        # Cross-document comparison and synthesis
        if len(synthesis_results) > 1:
            cross_doc_analysis = await self._cross_document_synthesis(query, synthesis_results)
            synthesis_results.append({
                "document_id": "cross_analysis",
                "synthesis": cross_doc_analysis,
                # Total is taken over the per-document entries, before this one is appended
                "chunk_count": sum(r["chunk_count"] for r in synthesis_results)
            })

        state["retrieved_chunks"] = initial_results
        state["processed_content"] = synthesis_results

        return state

    async def _synthesize_document_content(self, query: str, chunks: List[str]) -> str:
        """Synthesizes information within a single document"""
        synthesis_prompt = f"""
        Synthesize information from these document sections to answer: {query}

        Document sections:
        {chr(10).join(f"Section {i+1}: {chunk}" for i, chunk in enumerate(chunks))}

        Provide a comprehensive synthesis that:
        1. Identifies key themes and patterns
        2. Reconciles any apparent contradictions
        3. Highlights the most relevant information
        4. Maintains logical flow and coherence

        Focus on creating a unified understanding rather than just summarizing sections.
        """

        response = await self.llm.ainvoke([HumanMessage(content=synthesis_prompt)])
        return response.content
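Grouping by source only works if each retrieved chunk carries its document identifier. The sketch below shows one way `_cluster_by_document_source` might look, assuming each search result is a dictionary with hypothetical `source` and `text` keys rather than the bare strings used in `RAGState`.

```python
from collections import defaultdict
from typing import Dict, List


def cluster_by_document_source(results: List[dict]) -> Dict[str, List[str]]:
    """Group chunk texts by the document they came from.

    Assumption: each result looks like {"source": "<doc id>", "text": "<chunk>"}.
    Results without a source are collected under "unknown".
    """
    clusters: Dict[str, List[str]] = defaultdict(list)
    for item in results:
        clusters[item.get("source", "unknown")].append(item["text"])
    return dict(clusters)


results = [
    {"source": "vendor_agreement.pdf", "text": "Payment is due within 30 days."},
    {"source": "msa.pdf", "text": "Invoices are issued monthly."},
    {"source": "vendor_agreement.pdf", "text": "Late payments incur a 2% penalty."},
    {"text": "Orphan chunk without metadata."},
]
clusters = cluster_by_document_source(results)
for doc_id, chunks in clusters.items():
    print(doc_id, len(chunks))
```

With LangChain vector stores, the equivalent information usually lives in each document's metadata, so it is worth storing the source identifier at indexing time.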

Response Generation and Validation

The final steps involve generating a coherent response and validating its quality:

# Methods of ProductionRAGOrchestrator. Requires `import json` at the top of the module.

    async def generate_response(self, state: RAGState) -> RAGState:
        """Generates the final response based on processed content"""
        query = state["query"]
        processed_content = state["processed_content"]
        query_type = state.get("query_type", "semantic")

        # Adapt response style based on query type
        if query_type == "factual":
            response_style = "precise and data-focused"
        elif query_type == "comparative":
            response_style = "structured comparison with clear distinctions"
        else:
            response_style = "comprehensive explanation with context"

        generation_prompt = f"""
        Generate a {response_style} response to this query: {query}

        Based on this processed information:
        {self._format_processed_content(processed_content)}

        Requirements:
        - Directly address the user's question
        - Include specific supporting details from the documents
        - Maintain accuracy and avoid speculation
        - Provide source attribution where appropriate
        - Use clear, professional language

        If the available information is insufficient, clearly state what's missing.
        """

        response = await self.llm.ainvoke([HumanMessage(content=generation_prompt)])
        state["response"] = response.content

        return state

    async def validate_response(self, state: RAGState) -> RAGState:
        """Validates response quality and completeness"""
        query = state["query"]
        response = state["response"]
        processed_content = state["processed_content"]

        # The source material is included so accuracy can be judged against it
        validation_prompt = f"""
        Evaluate this response for quality and accuracy:

        Original Query: {query}
        Generated Response: {response}

        Source material:
        {self._format_processed_content(processed_content)}

        Rate on a scale of 1-10 for:
        1. Relevance to the query
        2. Accuracy based on source material
        3. Completeness of the answer
        4. Clarity and coherence

        Return a single JSON object with these keys:
        "scores" (the four ratings with brief explanations),
        "overall_confidence" (a number between 0 and 1),
        "notes" (any issues you identified).
        """

        validation_response = await self.llm.ainvoke([HumanMessage(content=validation_prompt)])

        # Fall back to the default confidence if the model returns malformed JSON
        try:
            validation_data = json.loads(validation_response.content)
        except json.JSONDecodeError:
            validation_data = {}

        state["confidence_score"] = float(validation_data.get("overall_confidence", 0.7))
        state["validation_notes"] = validation_data.get("notes", "")

        return state
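Both `analyze_query` and `validate_response` parse raw model output as JSON, and chat models often wrap JSON in a code fence or add a sentence around it. A small defensive parser like the one below can absorb those cases. The name `parse_llm_json` and its fallback behavior are assumptions for illustration. If your model provider supports structured output, that is usually the sturdier option.

```python
import json
import re
from typing import Any, Dict


def parse_llm_json(raw: str, default: Dict[str, Any]) -> Dict[str, Any]:
    """Extract the first {...} span from model output and parse it.

    Returns a copy of `default` when nothing parseable is found; otherwise
    returns `default` overlaid with the parsed keys.
    """
    match = re.search(r"\{.*\}", raw, flags=re.DOTALL)
    if match is None:
        return dict(default)
    try:
        parsed = json.loads(match.group(0))
    except json.JSONDecodeError:
        return dict(default)
    if not isinstance(parsed, dict):
        return dict(default)
    return {**default, **parsed}


defaults = {"overall_confidence": 0.0, "notes": ""}
fenced = 'Here is my evaluation:\n```json\n{"overall_confidence": 0.9}\n```'
print(parse_llm_json(fenced, defaults))
print(parse_llm_json("Sorry, I cannot evaluate this.", defaults))
```

Defaulting the confidence to 0.0 instead of an optimistic value means a response whose validation could not be parsed fails the quality threshold rather than passing it silently.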

Production Deployment and Scaling Considerations

Building the RAG workflow is only half the battle. Production deployment requires careful attention to performance, reliability, and monitoring.

Async Processing and Concurrency

For enterprise workloads, you need to handle multiple concurrent requests efficiently:

import asyncio
import time


class ProductionRAGService:
    # MetricsCollector and _extract_sources are not defined in this walkthrough;
    # supply your own implementations.
    def __init__(self, max_concurrent_requests: int = 10):
        self.orchestrator = ProductionRAGOrchestrator()
        # Caps the number of workflows running at once
        self.semaphore = asyncio.Semaphore(max_concurrent_requests)
        self.request_queue = asyncio.Queue()
        self.metrics_collector = MetricsCollector()

    async def process_query(self, query: str, user_id: str) -> dict:
        """Processes a query with proper concurrency control"""
        async with self.semaphore:
            # perf_counter is monotonic, so it is safer than time.time() for durations
            start_time = time.perf_counter()

            try:
                initial_state = {
                    "query": query,
                    "user_id": user_id,
                    "documents": [],
                    "query_type": "",
                    "retrieved_chunks": [],
                    "processed_content": [],
                    "response": "",
                    "confidence_score": 0.0
                }

                result = await self.orchestrator.workflow.ainvoke(initial_state)

                processing_time = time.perf_counter() - start_time
                self.metrics_collector.record_request(
                    user_id=user_id,
                    processing_time=processing_time,
                    confidence_score=result["confidence_score"],
                    query_type=result.get("query_type", "unknown")
                )

                return {
                    "response": result["response"],
                    "confidence": result["confidence_score"],
                    "processing_time": processing_time,
                    "sources": self._extract_sources(result["processed_content"])
                }

            except Exception as e:
                # Record the failure, then re-raise so the caller can handle it
                self.metrics_collector.record_error(user_id, str(e))
                raise
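To see what the semaphore buys you, the sketch below runs a batch of fake requests and reports the peak number in flight. `run_with_limit` is a hypothetical helper, and `asyncio.sleep` stands in for the workflow call.

```python
import asyncio


async def run_with_limit(n_requests: int, max_concurrent: int) -> int:
    """Run n fake requests under a semaphore and return the peak number in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)
    in_flight = 0
    peak = 0

    async def handle(_request_id: int) -> None:
        nonlocal in_flight, peak
        async with semaphore:
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(0.01)  # stand-in for workflow.ainvoke(...)
            in_flight -= 1

    await asyncio.gather(*(handle(i) for i in range(n_requests)))
    return peak


print(asyncio.run(run_with_limit(n_requests=20, max_concurrent=3)))
```

All 20 coroutines are scheduled immediately, but only three ever hold the semaphore at once. The rest wait without consuming LLM or vector store capacity.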

Monitoring and Quality Assurance

Production RAG systems need comprehensive monitoring to ensure consistent quality:

class RAGQualityMonitor:
    def __init__(self):
        self.quality_thresholds = {
            "min_confidence": 0.6,
            "max_response_time": 30.0,
            "min_source_coverage": 0.8
        }
        self.alert_manager = AlertManager()

    def evaluate_response_quality(self, result: dict) -> dict:
        """Evaluates response quality against production thresholds"""
        quality_score = {
            "confidence_check": result["confidence"] >= self.quality_thresholds["min_confidence"],
            "response_time_check": result["processing_time"] <= self.quality_thresholds["max_response_time"],
            "source_coverage_check": self._check_source_coverage(result["sources"]),
            "coherence_check": self._check_response_coherence(result["response"])
        }

        overall_quality = all(quality_score.values())

        if not overall_quality:
            self.alert_manager.send_quality_alert(result, quality_score)

        return {
            "passed_quality_checks": overall_quality,
            "quality_breakdown": quality_score,
            "overall_score": sum(quality_score.values()) / len(quality_score)
        }
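The service class records into a `MetricsCollector` that this walkthrough never defines. A minimal in-memory stand-in might look like the sketch below. The `record_request` and `record_error` signatures mirror the calls made in `process_query`, while the `summary` method and its nearest-rank p95 calculation are assumptions. A real deployment would more likely export these numbers to a metrics backend.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class MetricsCollector:
    """In-memory metrics store; a placeholder for a real metrics backend."""
    latencies: List[float] = field(default_factory=list)
    confidences: List[float] = field(default_factory=list)
    errors: List[str] = field(default_factory=list)

    def record_request(self, user_id: str, processing_time: float,
                       confidence_score: float, query_type: str) -> None:
        self.latencies.append(processing_time)
        self.confidences.append(confidence_score)

    def record_error(self, user_id: str, error: str) -> None:
        self.errors.append(error)

    def summary(self) -> Dict[str, float]:
        """Return request count, error rate, p95 latency, and mean confidence."""
        succeeded = len(self.latencies)
        total = succeeded + len(self.errors)
        if succeeded == 0:
            return {"requests": total, "error_rate": 1.0 if total else 0.0,
                    "p95_latency": 0.0, "mean_confidence": 0.0}
        ordered = sorted(self.latencies)
        # Nearest-rank percentile: smallest value with at least 95% of samples at or below it
        p95 = ordered[math.ceil(0.95 * succeeded) - 1]
        return {
            "requests": total,
            "error_rate": len(self.errors) / total,
            "p95_latency": p95,
            "mean_confidence": sum(self.confidences) / succeeded,
        }


collector = MetricsCollector()
for latency, confidence in [(1.0, 0.5), (2.0, 0.75), (3.0, 1.0), (4.0, 0.75)]:
    collector.record_request("user-1", latency, confidence, "factual")
collector.record_error("user-2", "timeout")
print(collector.summary())
```

Feeding `summary()` into the quality monitor's thresholds lets you alert on trends, such as a rising p95 latency, instead of on individual slow responses.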

Building production-ready RAG systems with LangGraph transforms enterprise AI from experimental chatbots into reliable knowledge processing platforms. The multi-agent architecture we’ve built here handles the complexity of real-world documents while maintaining the flexibility to adapt to different query types and content formats.

The key insight is that production RAG isn’t just about better retrieval; it’s about orchestrating multiple specialized agents that can handle the nuanced requirements of enterprise knowledge work. When you combine intelligent routing, specialized processing agents, and comprehensive validation, you create systems that organizations can trust with their most critical information needs.

This framework provides the foundation for RAG systems that actually work in production. Start with this architecture, adapt the agents to your specific document types and use cases, and you’ll have a robust platform for enterprise AI that goes far beyond simple question-answering to become a true knowledge amplification system. The companies implementing this approach today are the ones building sustainable competitive advantages through AI, while others are still struggling with basic retrieval challenges.