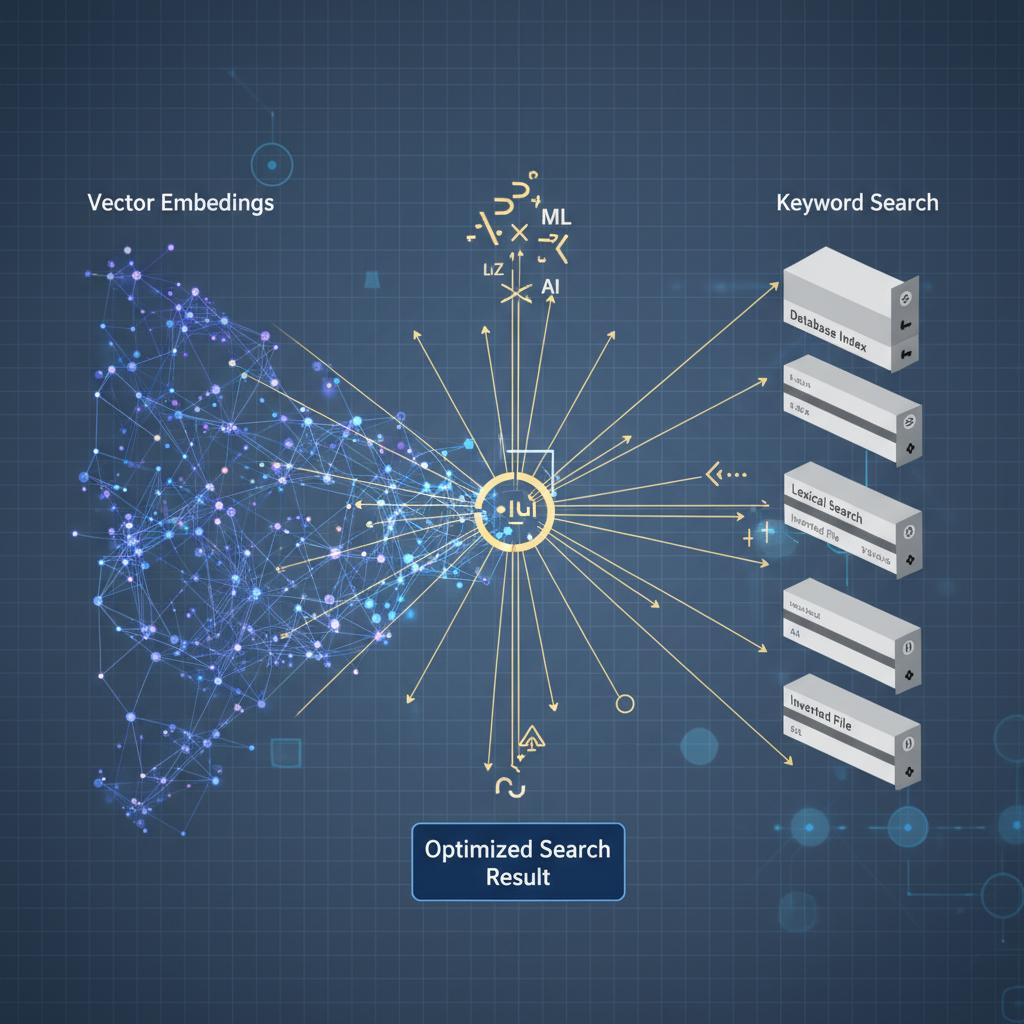

Enterprise AI systems face a fundamental challenge: how to retrieve the most relevant information from vast knowledge bases when user queries can be semantic, keyword-specific, or a combination of both. Vector search excels at understanding meaning and context, while keyword search is stronger at finding exact matches and specific terminology. The problem? Many RAG implementations rely on only one approach, leaving performance gaps that can erode user trust and system effectiveness.

Hybrid search RAG systems solve this dilemma by combining vector embeddings with traditional keyword search, creating a retrieval mechanism that captures both semantic meaning and lexical precision. This approach has become a common choice for enterprise implementations, with platforms such as Elasticsearch, Pinecone, and Weaviate all offering hybrid capabilities. In this guide, we’ll explore how to architect, implement, and optimize hybrid search RAG systems that improve accuracy and user satisfaction.

You’ll learn the technical foundations of hybrid search, practical implementation strategies across different vector databases, advanced ranking algorithms, and real-world optimization techniques that separate production-ready systems from proof-of-concept experiments.

Understanding Hybrid Search Architecture

Hybrid search combines the strengths of two complementary retrieval methods. Vector search uses dense embeddings to capture semantic relationships, excelling at understanding synonyms, concepts, and contextual meaning. When a user asks “How do I improve customer satisfaction?”, vector search can retrieve documents about “enhancing client happiness” or “boosting user experience” even without exact keyword matches.

Keyword search, powered by traditional inverted indexes, excels at finding exact terms, acronyms, product names, and specific technical terminology. When someone searches for “API authentication JWT token”, keyword search ensures precise matches for these critical technical terms that vector embeddings might blur or miss.

The magic happens in the fusion layer, where results from both approaches are combined using sophisticated ranking algorithms. Popular fusion methods include Reciprocal Rank Fusion (RRF), which assigns scores based on ranking positions, and learned fusion models that use machine learning to optimize combination weights based on query characteristics.

Modern hybrid systems also incorporate query analysis to dynamically adjust the balance between vector and keyword search. Queries with specific technical terms might weight keyword results higher, while conceptual questions favor vector search. This adaptive approach ensures optimal retrieval across diverse query types.

Implementation Strategies Across Vector Databases

Elasticsearch Hybrid Implementation

Elasticsearch offers native hybrid search through its combined query structure. The implementation leverages both dense_vector fields for semantic search and traditional text fields for keyword matching:

    {
      "query": {
        "bool": {
          "should": [
            {
              "knn": {
                "field": "content_embedding",
                "query_vector": [0.1, 0.2, ...],
                "k": 50,
                "boost": 0.7
              }
            },
            {
              "multi_match": {
                "query": "user query",
                "fields": ["title^2", "content"],
                "boost": 0.3
              }
            }
          ]
        }
      }
    }

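The boost parameters control the relative importance of each search method. Because kNN similarity and BM25 scores are on different scales, the boosts are not a literal 70-30 split of influence; treat 0.7 and 0.3 as a starting point favoring vector search, then tune against your own evaluation metrics. Using knn as a clause inside a bool query requires a recent Elasticsearch 8.x release, and the accepted parameters (such as k and num_candidates) vary by version.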
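
When fusion is done in application code rather than inside the engine, one way to make a weight behave like a real proportion is to normalize each score list before combining. The sketch below assumes each retriever returns a mapping of document ID to raw score; the function names and sample scores are illustrative and not part of any Elasticsearch API.

```python
def min_max_normalize(scores):
    """Rescale a {doc_id: score} mapping to the [0, 1] range."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # All scores equal: treat every document as equally relevant.
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in scores.items()}


def weighted_score_fusion(vector_scores, keyword_scores, alpha=0.7):
    """Combine two score maps; alpha is the weight given to the vector side."""
    v = min_max_normalize(vector_scores)
    k = min_max_normalize(keyword_scores)
    fused = {
        doc_id: alpha * v.get(doc_id, 0.0) + (1 - alpha) * k.get(doc_id, 0.0)
        for doc_id in set(v) | set(k)
    }
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


# Illustrative scores: cosine similarities vs. unbounded BM25 scores.
vector_scores = {"doc_a": 0.92, "doc_b": 0.85, "doc_c": 0.60}
keyword_scores = {"doc_b": 14.2, "doc_c": 9.1, "doc_d": 3.0}
ranking = weighted_score_fusion(vector_scores, keyword_scores, alpha=0.7)
```

Here doc_b ranks first because it scores well in both lists, even though doc_a has the highest vector similarity. Min-max normalization is sensitive to outliers, so it is one option among several rather than a recommendation.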

Pinecone Hybrid Approach

Pinecone’s hybrid search combines vector similarity with sparse vector representations for keyword matching. The sparse vectors capture term frequency information while dense vectors handle semantic understanding:

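    # Pinecone's query() takes no alpha argument, so the weighting is applied
    # client-side: scale the dense vector by alpha and the sparse values by
    # (1 - alpha) before querying.
    alpha = 0.7  # 1.0 = dense only, 0.0 = sparse only

    results = index.query(
        vector=[v * alpha for v in dense_embedding],
        sparse_vector={
            'indices': term_indices,
            'values': [w * (1 - alpha) for w in term_weights]
        },
        top_k=20
    )

The alpha value controls the balance, with values closer to 1.0 favoring semantic search and values near 0.0 emphasizing keyword matching. Sparse-dense queries require an index that uses the dotproduct metric. Values between 0.6 and 0.8 are a reasonable starting range, but the best setting depends on your data and should be chosen by evaluation.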
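
The term_indices and term_weights in the query above have to come from a sparse encoder. As a rough illustration of the shape of that data, the sketch below hashes tokens into a fixed index space and uses raw term counts as weights. This is an assumption-laden stand-in: production systems typically use a BM25 or learned sparse encoder, and the function name and dimension are hypothetical.

```python
import re
import zlib
from collections import Counter


def hashed_sparse_vector(text, dim=2**20):
    """Encode text as {'indices': [...], 'values': [...]} using term counts.

    Illustrative only: real deployments usually rely on a BM25 or learned
    sparse encoder rather than raw hashed term frequencies.
    """
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    counts = Counter()
    for token in tokens:
        # crc32 is stable across processes, unlike the built-in hash().
        counts[zlib.crc32(token.encode("utf-8")) % dim] += 1
    indices = sorted(counts)
    return {"indices": indices, "values": [float(counts[i]) for i in indices]}


sparse = hashed_sparse_vector("JWT token authentication: refresh the JWT token")
term_indices, term_weights = sparse["indices"], sparse["values"]
```

The same encoder must be used at index time and query time, otherwise the indices will not line up.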

Weaviate Hybrid Configuration

Weaviate’s hybrid search uses the BM25 algorithm for keyword search combined with vector similarity. The fusion happens at the database level, providing clean API access:

    result = (
        client.query
        .get("Document", ["title", "content"])
        .with_hybrid(
            query="user query",
            alpha=0.75,  # Balance between vector (1) and keyword (0)
            vector=query_embedding
        )
        .with_limit(20)
        .do()
    )

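Weaviate applies the alpha you pass with each query and lets you choose how the two result sets are fused (ranked fusion or relative score fusion). Adjusting alpha per query based on query characteristics is something you implement in application code before calling the API.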

Advanced Fusion Algorithms

Reciprocal Rank Fusion (RRF)

RRF remains the most popular fusion method due to its simplicity and effectiveness. The algorithm assigns scores based on ranking positions rather than raw similarity scores, making it robust across different search modalities:

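    def reciprocal_rank_fusion(vector_results, keyword_results, k=60):
        """Fuse two ranked result lists by summing 1 / (k + rank) per document."""
        scores = {}
        # enumerate() is 0-based, so rank + 1 is the 1-based position
        for rank, doc in enumerate(vector_results):
            scores[doc.id] = scores.get(doc.id, 0) + 1 / (k + rank + 1)
        for rank, doc in enumerate(keyword_results):
            scores[doc.id] = scores.get(doc.id, 0) + 1 / (k + rank + 1)
        # Highest fused score first, as (doc_id, score) pairs
        return sorted(scores.items(), key=lambda x: x[1], reverse=True)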

The k parameter (typically 60) is a smoothing constant. Lower values give more weight to top-ranked results, while higher values flatten the differences between ranks, so appearing in both lists counts for more than a single high placement.
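
To make the arithmetic concrete, here is a small self-contained variant that works on plain lists of document IDs instead of result objects. The function name and the sample rankings are made up for illustration; the scoring formula is the same 1 / (k + rank) with 1-based ranks.

```python
def rrf_scores(rankings, k=60):
    """Fuse several ranked lists of document IDs with Reciprocal Rank Fusion."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


vector_ranking = ["doc_a", "doc_b", "doc_c"]
keyword_ranking = ["doc_b", "doc_d", "doc_a"]
fused = rrf_scores([vector_ranking, keyword_ranking])
# doc_b: 1/62 + 1/61, doc_a: 1/61 + 1/63, doc_d: 1/62, doc_c: 1/63
fused_order = [doc_id for doc_id, _ in fused]
```

The fused order is doc_b, doc_a, doc_d, doc_c: documents found by both retrievers rise above those found by only one, regardless of how the raw scores were scaled.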

Learned Fusion Models

Advanced implementations use machine learning models to optimize fusion weights based on query characteristics. These models analyze query features like length, term specificity, and semantic complexity to predict optimal balance ratios:

    def adaptive_fusion_weight(query_text, query_embedding):
        features = extract_query_features(query_text, query_embedding)
        predicted_weight = fusion_model.predict(features)
        return min(max(predicted_weight, 0.1), 0.9)  # Clamp between 0.1-0.9

Training data comes from user interactions, click-through rates, and explicit relevance feedback. Companies like Microsoft and Google use similar approaches in their search systems.
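
The feature extraction step is left abstract above. As one possible starting point, the sketch below derives a few cheap text-only signals (length, acronym density, digits, quoted phrases, question form). The feature set is an assumption chosen for illustration, and this version omits the embedding argument used in the snippet above.

```python
import re


def extract_query_features(query_text):
    """Return a small numeric feature dict describing a query (hypothetical set)."""
    tokens = [t.strip(".,?!:;\"'") for t in query_text.split()]
    tokens = [t for t in tokens if t]
    n = len(tokens)
    # Upper-case tokens such as "JWT" or "SSL" hint at lexical intent.
    acronyms = sum(1 for t in tokens if re.fullmatch(r"[A-Z0-9]{2,}", t))
    return {
        "num_tokens": n,
        "acronym_ratio": acronyms / n if n else 0.0,
        "has_digits": float(any(ch.isdigit() for ch in query_text)),
        "has_quoted_phrase": float(bool(re.search(r'"[^"]+"', query_text))),
        "is_question": float(query_text.strip().endswith("?")),
    }


technical = extract_query_features("API authentication JWT token")
conceptual = extract_query_features("How can I improve team collaboration?")
```

A model trained on such features would be expected to push the first query toward keyword search and the second toward vector search, though which features matter in practice has to be established from relevance data.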

Query Analysis and Adaptive Weighting

Intelligent hybrid systems analyze incoming queries to optimize search strategy. Query classification models identify different query types and adjust fusion parameters accordingly:

Factual Queries: “What is the capital of France?” – Favor keyword search for precise factual retrieval.

Conceptual Queries: “How can I improve team collaboration?” – Weight vector search higher for semantic understanding.

Technical Queries: “Configure SSL certificate nginx” – Balance both approaches to capture technical terms and procedural knowledge.

Conversational Queries: “I’m having trouble with my login process” – Emphasize vector search for intent understanding.

Implementation involves training a lightweight classification model on labeled query data:

    def classify_query_intent(query_text):
        features = extract_linguistic_features(query_text)
        intent = intent_classifier.predict(features)

        # Vector weight per intent (1.0 = vector only, 0.0 = keyword only)
        weight_mapping = {
            'factual': 0.3,         # Favor keyword search
            'conceptual': 0.8,      # Favor vector search
            'technical': 0.5,       # Balanced approach
            'conversational': 0.7   # Slight vector preference
        }
        return weight_mapping.get(intent, 0.6)  # Default: mild vector preference

Performance Optimization Techniques

Index Optimization

Efficient hybrid search requires optimized indexes for both vector and keyword components. For vector indexes, consider approximate nearest neighbor algorithms like HNSW or IVF that balance speed and accuracy. Keyword indexes benefit from proper tokenization, stemming, and stop word handling.

Index sharding strategies should account for both search methods. Co-locate vector and keyword data for the same documents to minimize cross-shard operations during hybrid queries.

Caching Strategies

Implement multi-level caching for hybrid search systems:

Query-level caching: Cache complete hybrid results for identical queries.

Component-level caching: Cache vector and keyword results separately, enabling reuse when only fusion parameters change.

Embedding caching: Store computed embeddings for frequently accessed queries and documents.

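    from cachetools import LRUCache  # third-party: pip install cachetools

    class HybridSearchCache:
        def __init__(self):
            self.query_cache = LRUCache(maxsize=1000)      # fused results
            self.embedding_cache = LRUCache(maxsize=5000)  # query/document embeddings
            self.keyword_cache = LRUCache(maxsize=2000)    # raw keyword results

        def get_results(self, query, vector_weight):
            # Key on the query text itself: hash() can collide and is salted per process
            cache_key = (query, round(vector_weight, 2))
            return self.query_cache.get(cache_key)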

Parallel Processing

Execute vector and keyword searches in parallel to minimize latency. Retrieval time is then bounded by the slower of the two searches rather than their sum, which can approach a 50% reduction when both take similar time:

    import asyncio

    async def hybrid_search(query, embedding):
        vector_task = asyncio.create_task(vector_search(embedding))
        keyword_task = asyncio.create_task(keyword_search(query))

        vector_results, keyword_results = await asyncio.gather(
            vector_task, keyword_task
        )
        return fusion_algorithm(vector_results, keyword_results)

Evaluation and Quality Metrics

Comprehensive Evaluation Framework

Evaluate hybrid search systems across multiple dimensions:

Relevance Metrics: Use traditional IR metrics like NDCG, MAP, and MRR, but compute them separately for different query types to identify performance patterns.

Latency Analysis: Measure end-to-end response times, including fusion overhead. Target sub-200ms response times for interactive applications.

Coverage Analysis: Ensure hybrid systems don’t create coverage gaps where neither search method performs well.

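    def evaluate_hybrid_system(test_queries, ground_truth):
        metrics = {
            'overall_ndcg': [],
            'vector_only_ndcg': [],
            'keyword_only_ndcg': [],
            'latency_p95': [],
            'coverage_rate': []
        }

        # Assumes synchronous search helpers that embed the query internally
        for query in test_queries:
            hybrid_results = hybrid_search(query)
            vector_results = vector_search_only(query)
            keyword_results = keyword_search_only(query)
            relevant = ground_truth[query.id]

            # Score all three on the same query so they can be compared directly
            metrics['overall_ndcg'].append(calculate_ndcg(hybrid_results, relevant))
            metrics['vector_only_ndcg'].append(calculate_ndcg(vector_results, relevant))
            metrics['keyword_only_ndcg'].append(calculate_ndcg(keyword_results, relevant))
            # Add other metric calculations...

        return metrics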
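
The calculate_ndcg helper is not defined above. A minimal sketch of NDCG@k and reciprocal rank follows, assuming results are ranked lists of document IDs and ground truth is a mapping of document ID to a graded relevance label. It uses the linear-gain form of DCG; some evaluations use an exponential gain (2^rel - 1) instead, so treat the choice as an assumption.

```python
import math


def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the first k graded relevance values."""
    return sum(rel / math.log2(i + 2) for i, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_ids, relevance_by_id, k=10):
    """NDCG@k for one query; relevance_by_id maps doc ID to a graded label."""
    gains = [relevance_by_id.get(doc_id, 0) for doc_id in ranked_ids]
    ideal = sorted(relevance_by_id.values(), reverse=True)
    ideal_dcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


def reciprocal_rank(ranked_ids, relevance_by_id):
    """1 / rank of the first relevant document, or 0.0 if none is retrieved."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if relevance_by_id.get(doc_id, 0) > 0:
            return 1.0 / rank
    return 0.0


judgments = {"doc_a": 3, "doc_b": 1}
perfect = ndcg_at_k(["doc_a", "doc_b", "doc_c"], judgments)
swapped = ndcg_at_k(["doc_c", "doc_b", "doc_a"], judgments)
rr = reciprocal_rank(["doc_c", "doc_b", "doc_a"], judgments)
```

Averaging reciprocal_rank over all test queries gives MRR. Computing these per query type, as suggested above, only requires grouping the per-query values before averaging.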

A/B Testing Strategies

Implement controlled experiments to optimize fusion parameters:

Parameter Sweeps: Test different alpha values across query types to find optimal balance points.

Fusion Algorithm Comparison: Compare RRF against learned fusion models to quantify improvement potential.

User Satisfaction Metrics: Track click-through rates, session success rates, and explicit user feedback across different hybrid configurations.

Real-World Implementation Challenges

Data Quality Considerations

Hybrid search amplifies data quality issues. Poor quality embeddings will degrade vector search performance, while inconsistent text preprocessing affects keyword search. Implement comprehensive data validation:

- Embedding quality checks using cosine similarity distributions

- Keyword index validation through term frequency analysis

- Cross-modal consistency checks to ensure vector and text representations align
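
As a rough sketch of the first check, the code below flags all-zero or NaN vectors and pairs whose cosine similarity is suspiciously close to 1. The function names and the 0.999 threshold are assumptions; the pairwise comparison is quadratic, so it is meant for a sample of the collection rather than the full index.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def embedding_health_report(embeddings, duplicate_threshold=0.999):
    """Flag degenerate vectors and near-duplicate pairs in {doc_id: vector}."""
    degenerate = [
        doc_id for doc_id, vec in embeddings.items()
        if not any(vec) or any(math.isnan(x) for x in vec)
    ]
    ids = [d for d in embeddings if d not in degenerate]
    near_duplicates = [
        (a, b)
        for i, a in enumerate(ids)
        for b in ids[i + 1:]
        if cosine(embeddings[a], embeddings[b]) >= duplicate_threshold
    ]
    return {"degenerate": degenerate, "near_duplicates": near_duplicates}


report = embedding_health_report({
    "doc_a": [0.1, 0.9, 0.2],
    "doc_b": [0.1, 0.9, 0.2],  # exact copy of doc_a
    "doc_c": [0.0, 0.0, 0.0],  # failed embedding
    "doc_d": [0.8, 0.1, 0.3],
})
```

A large share of near-duplicate pairs among unrelated documents can indicate a collapsed or misconfigured embedding model, which is worth investigating before tuning fusion weights.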

Scaling Considerations

As document collections grow, hybrid search faces unique scaling challenges:

Index Synchronization: Ensure vector and keyword indexes remain synchronized during updates. Implement atomic update operations or use eventual consistency patterns with proper conflict resolution.

Resource Allocation: Vector search typically requires more computational resources than keyword search. Plan infrastructure allocation accordingly, potentially using different hardware optimizations for each component.

Cost Optimization: Vector storage and computation costs can be significant. Implement strategies like embedding quantization and selective indexing for less critical content.
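
Embedding quantization can be illustrated with symmetric int8 scalar quantization, which stores one byte per dimension instead of four for float32. This is a minimal sketch in pure Python with hypothetical function names; a real system would keep the codes in a compact int8 array and usually relies on the quantization built into the vector database.

```python
def quantize_int8(vector):
    """Symmetric scalar quantization of a float vector to the int8 range."""
    max_abs = max((abs(x) for x in vector), default=0.0)
    scale = max_abs / 127.0 if max_abs else 1.0
    return [round(x / scale) for x in vector], scale


def dequantize_int8(codes, scale):
    """Approximate reconstruction of the original float vector."""
    return [c * scale for c in codes]


original = [0.12, -0.50, 0.33, 0.0]
codes, scale = quantize_int8(original)
restored = dequantize_int8(codes, scale)
max_error = max(abs(a - b) for a, b in zip(original, restored))
```

The reconstruction error per dimension is bounded by half the scale, so the trade-off between storage and recall can be checked directly on an evaluation set before rolling it out.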

Production Deployment Best Practices

Monitoring and Observability

Implement comprehensive monitoring for hybrid search systems:

    class HybridSearchMonitor:
        def track_query_performance(self, query, results, latency):
            metrics = {
                'query_type': classify_query_type(query),
                'result_count': len(results),
                'vector_contribution': calculate_vector_contribution(results),
                'keyword_contribution': calculate_keyword_contribution(results),
                'fusion_latency': latency.fusion_time,
                'total_latency': latency.total_time
            }
            self.log_metrics(metrics)
            self.update_dashboards(metrics)

Gradual Rollout Strategy

Deploy hybrid search systems gradually:

- Shadow Mode: Run hybrid search alongside existing systems without serving results to users

- Limited Rollout: Serve hybrid results to a small percentage of users

- Query-Type Rollout: Enable hybrid search for specific query types first

- Full Deployment: Complete migration after validating performance and user satisfaction
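
For the limited rollout stage, users are commonly assigned to the new path with a stable hash so that the same user always sees the same behavior and raising the percentage only ever adds users. The sketch below assumes a string user ID; the salt value and function names are hypothetical.

```python
import hashlib


def in_rollout(user_id, rollout_percent, salt="hybrid-search-v1"):
    """Deterministically assign a user to the rollout based on a stable hash."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in [0, 99]
    return bucket < rollout_percent


def choose_backend(user_id, rollout_percent):
    """Route to 'hybrid' for users inside the rollout, else 'legacy'."""
    return "hybrid" if in_rollout(user_id, rollout_percent) else "legacy"


backend = choose_backend("user-42", rollout_percent=10)
```

Changing the salt reshuffles the buckets, which is useful when a later experiment should not reuse the same early-adopter group.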

Fallback Mechanisms

Implement robust fallback strategies for production resilience:

    import logging

    logger = logging.getLogger(__name__)

    # VectorSearchException and KeywordSearchException are application-defined
    def resilient_hybrid_search(query, embedding):
        try:
            return hybrid_search(query, embedding)
        except VectorSearchException:
            logger.warning("Vector search failed, falling back to keyword")
            return keyword_search_only(query)
        except KeywordSearchException:
            logger.warning("Keyword search failed, falling back to vector")
            return vector_search_only(embedding)
        except Exception:
            # logger.exception records the traceback along with the message
            logger.exception("Hybrid search completely failed")
            return cached_popular_results()
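
When the two searches run concurrently, an alternative is to let each branch fail independently and fuse whatever succeeded, which avoids re-running the surviving search. The sketch below uses asyncio.gather with return_exceptions=True; the retriever functions are stand-ins written for the demonstration, and the function name and argument order are assumptions.

```python
import asyncio


async def degraded_hybrid_search(vector_search, keyword_search, fuse, query, embedding):
    """Run both retrievers concurrently and fuse whatever succeeded."""
    vector_res, keyword_res = await asyncio.gather(
        vector_search(embedding),
        keyword_search(query),
        return_exceptions=True,  # collect failures instead of raising
    )
    vector_ok = not isinstance(vector_res, BaseException)
    keyword_ok = not isinstance(keyword_res, BaseException)
    if vector_ok and keyword_ok:
        return fuse(vector_res, keyword_res)
    if keyword_ok:
        return keyword_res
    if vector_ok:
        return vector_res
    return []  # both failed; the caller decides on a last-resort response


# Stand-in retrievers for demonstration only.
async def failing_vector_search(embedding):
    raise TimeoutError("vector backend unavailable")


async def working_keyword_search(query):
    return ["doc_b", "doc_d"]


results = asyncio.run(
    degraded_hybrid_search(
        failing_vector_search, working_keyword_search,
        lambda v, k: v + k, "reset password", [0.1, 0.2],
    )
)
```

In this run the vector branch fails, so the keyword results are returned unchanged. Logging which branch failed, as in the snippet above, remains important so that silent degradation does not go unnoticed.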

Hybrid search RAG systems represent the evolution of enterprise information retrieval, combining the precision of keyword search with the intelligence of semantic understanding. By implementing the strategies outlined in this guide, you’ll build RAG systems that deliver superior accuracy, user satisfaction, and business value. The key lies in thoughtful architecture, careful optimization, and continuous evaluation based on real user needs and behaviors.

Ready to implement hybrid search in your RAG system? Start by evaluating your current retrieval performance across different query types, then choose the vector database and fusion approach that best fits your technical requirements and scale. The future of enterprise AI depends on systems that can understand both what users say and what they mean – hybrid search RAG systems deliver exactly that capability.