Vector-only RAG systems hit a wall on complex, multi-layered queries that require both semantic understanding and explicit relationship mapping. Vector databases excel at finding semantically similar content, but they struggle with queries that depend on hierarchical structures, temporal sequences, or intricate entity relationships.

Consider a financial services company trying to answer: “Show me all high-risk transactions from clients who have defaulted on loans in the past year, but also identify which of their business partners might be affected.” A pure vector search might find documents about high-risk transactions and loan defaults, but it would miss the relationships between clients and their business networks. This is the gap that hybrid RAG architectures are designed to close.

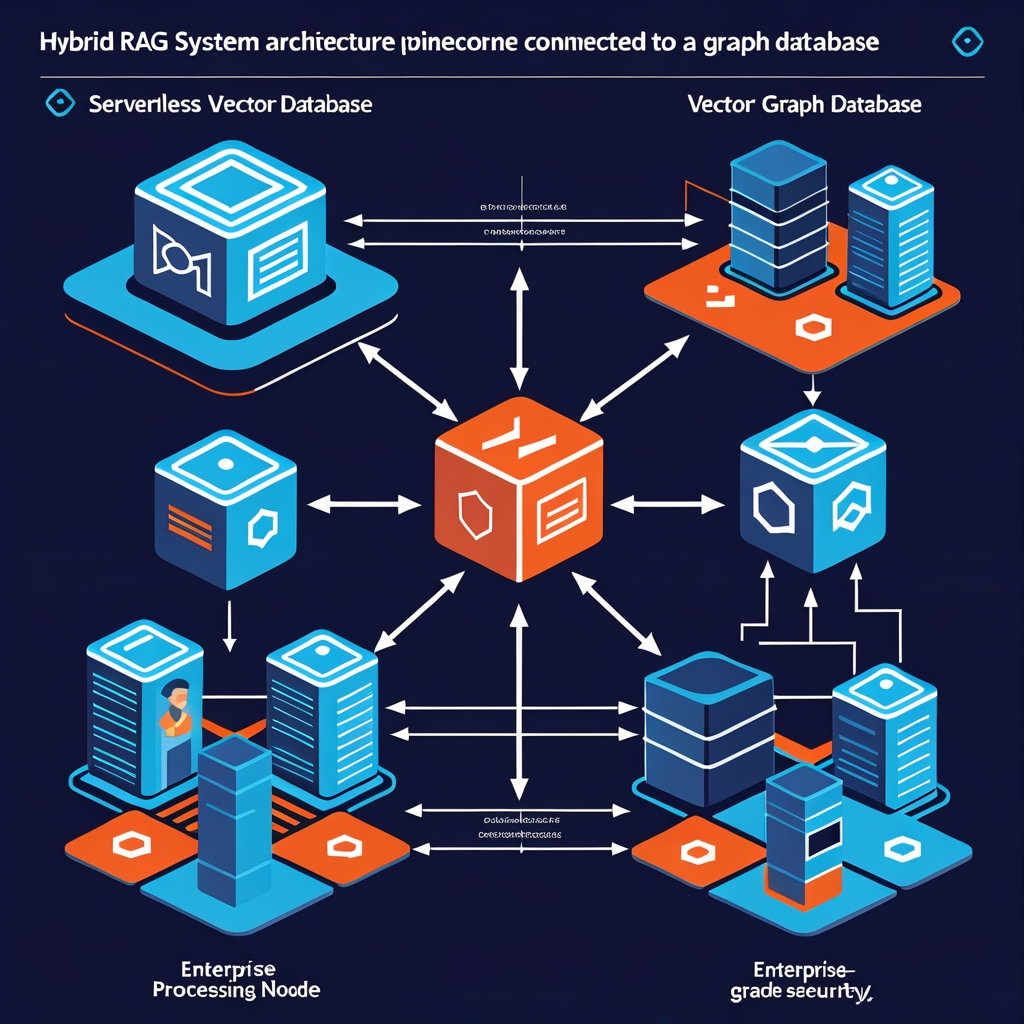

Pairing Pinecone Serverless with a graph database bridges this gap. By combining vector similarity search with graph traversal, enterprises can build RAG systems that capture both semantic meaning and explicit relationships. A hybrid system retrieves relevant documents and also follows how those documents connect, which yields more complete, context-rich responses.

In this comprehensive guide, we’ll walk through building a production-ready hybrid RAG system that leverages Pinecone Serverless for vector operations and integrates graph database functionality for relationship mapping. You’ll learn how to architect a system that handles both semantic queries and complex relationship traversals, all while maintaining the scalability and cost-effectiveness that serverless architectures provide.

Understanding Hybrid RAG Architecture Fundamentals

Hybrid RAG systems represent the next evolution in enterprise knowledge retrieval, combining the semantic understanding of vector databases with the relationship mapping capabilities of graph databases. Unlike traditional RAG systems that rely solely on embedding similarity, hybrid architectures maintain two complementary knowledge representations.

The vector component handles semantic similarity through high-dimensional embeddings, making it excellent for finding conceptually related content even when exact keywords don’t match. The graph component maintains explicit relationships between entities, enabling traversal of complex connection patterns that pure semantic search cannot uncover.

Pinecone Serverless brings practical advantages to this architecture. Traditional vector databases require infrastructure management, capacity planning, and scaling decisions. Pinecone Serverless offloads most of that work by scaling automatically with usage while aiming to keep query latency low. This particularly benefits hybrid systems, where query patterns can be unpredictable: some queries require extensive vector searches, others focus on graph traversal, and many need both.

The integration points between vector and graph components become critical architectural decisions. Successful hybrid systems typically employ one of three strategies: parallel querying where both systems are queried simultaneously and results are merged, sequential querying where one system’s results inform the other’s search parameters, or federated querying where a query router determines which system or combination to use based on query analysis.
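To make the first of these strategies concrete, here is a minimal sketch of parallel querying. The two search functions are hypothetical stand-ins (not Pinecone or Neo4j calls) that return canned hits; the point is only the shape of the pattern: issue both lookups concurrently, then merge by identifier.

```python
import asyncio
from typing import Dict, List


async def vector_search(query: str) -> List[Dict]:
    # Hypothetical stand-in for a vector index query.
    await asyncio.sleep(0)
    return [{"id": "doc-1", "score": 0.92}, {"id": "doc-2", "score": 0.81}]


async def graph_search(query: str) -> List[Dict]:
    # Hypothetical stand-in for a graph traversal.
    await asyncio.sleep(0)
    return [{"id": "doc-2", "distance": 1}, {"id": "doc-3", "distance": 2}]


async def parallel_query(query: str) -> List[str]:
    # Run both lookups concurrently, then merge ids (vector hits first, no duplicates).
    vector_hits, graph_hits = await asyncio.gather(
        vector_search(query), graph_search(query)
    )
    merged: List[str] = []
    for hit in vector_hits + graph_hits:
        if hit["id"] not in merged:
            merged.append(hit["id"])
    return merged


merged_ids = asyncio.run(parallel_query("partners of defaulted clients"))
print(merged_ids)
```

Sequential querying would instead await the first search and pass its results into the second; federated querying adds a routing step in front of both.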

Setting Up Pinecone Serverless for Hybrid Operations

Setting up Pinecone Serverless for hybrid RAG requires careful consideration of index configuration, embedding strategies, and integration patterns. The serverless model simplifies many operational aspects while introducing new considerations around cold starts and query routing.

First, configure your Pinecone Serverless index with the dimensionality of your embedding model. Enterprise deployments commonly use 1536-dimension embeddings from OpenAI’s text-embedding-3-small (text-embedding-3-large defaults to 3072 dimensions) or 768-dimension embeddings from sentence-transformers models. The choice affects both storage costs and query performance, and the index dimension must match the model’s output exactly.

    from pinecone import Pinecone, ServerlessSpec

    # Initialize the Pinecone client
    pc = Pinecone(api_key="your-api-key")

    # Create a serverless index for hybrid operations
    index_name = "hybrid-rag-enterprise"

    pc.create_index(
        name=index_name,
        dimension=1536,  # must match the embedding model's output size
        metric="cosine",
        spec=ServerlessSpec(
            cloud="aws",
            region="us-east-1"
        )
    )

    index = pc.Index(index_name)

Metadata design becomes crucial in hybrid systems. Your vector entries need metadata that enables efficient filtering and provides connection points to your graph database. Include entity identifiers, relationship types, temporal markers, and hierarchical categories.

    # Metadata structure for hybrid operations
    # (values describe each field; real metadata must be flat: strings,
    # numbers, booleans, or lists of strings)
    metadata_schema = {
        "entity_id": "unique identifier linking to graph",
        "entity_type": "person|organization|document|transaction",
        "relationships": ["list of connected entity IDs"],
        "timestamp": "Unix epoch seconds (numeric, so range filters work)",
        "department": "organizational hierarchy marker",
        "security_level": "access control classification",
        "source_system": "origin system identifier"
    }
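Because Pinecone metadata values are limited to strings, numbers, booleans, and lists of strings, it can help to check records before upserting. The following is a small sketch of such a check; the function name `invalid_metadata_keys` and the sample record are illustrative assumptions, not part of any library.

```python
from typing import Any, Dict, List


def invalid_metadata_keys(metadata: Dict[str, Any]) -> List[str]:
    """Return keys whose values are not str, number, bool, or list of str."""
    bad = []
    for key, value in metadata.items():
        if isinstance(value, (str, int, float, bool)):
            continue
        if isinstance(value, list) and all(isinstance(v, str) for v in value):
            continue
        bad.append(key)
    return bad


record = {
    "entity_id": "client-042",
    "entity_type": "organization",
    "relationships": ["client-017", "client-108"],
    "timestamp": 1717200000,
    "owner": {"name": "nested dicts are not allowed"},
    "closed_at": None,
}
bad_keys = invalid_metadata_keys(record)
print(bad_keys)
```

Nested structures such as `owner` are usually better stored in the graph database and referenced from the vector metadata by `entity_id`.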

Implement batch uploading strategies that optimize for Pinecone Serverless’s cost model. Serverless pricing includes both storage and compute components, making efficient batching important for cost management.

    def batch_upsert_optimized(documents, batch_size=100):
        """Optimized batch upsert for serverless cost efficiency"""
        vectors = []

        for i, doc in enumerate(documents):
            # generate_embedding is assumed to wrap your embedding model
            embedding = generate_embedding(doc['content'])

            vector = {
                "id": doc['id'],
                "values": embedding,
                "metadata": doc['metadata']
            }
            vectors.append(vector)

            # Flush when the batch is full or the last document is reached
            if len(vectors) >= batch_size or i == len(documents) - 1:
                index.upsert(vectors=vectors)
                vectors = []

        return True

Integrating Graph Database Capabilities

The graph component of your hybrid RAG system handles explicit relationships that vector similarity cannot capture. Popular choices include Neo4j for complex graph analytics, Amazon Neptune for AWS-native deployments, or ArangoDB for multi-model flexibility.

Neo4j integration provides powerful graph query capabilities through Cypher queries. Establish your graph schema to mirror the entity types and relationships present in your vector metadata.

    from neo4j import GraphDatabase

    class GraphRAGConnector:
        def __init__(self, uri, user, password):
            self.driver = GraphDatabase.driver(uri, auth=(user, password))

        def create_entity_relationships(self, entities):
            """Bulk create entities and relationships"""
            with self.driver.session() as session:
                # First pass: create every node so relationship targets exist
                for entity in entities:
                    session.run(
                        """
                        MERGE (e:Entity {id: $id})
                        SET e.type = $type
                        SET e += $properties
                        """,
                        id=entity['id'],
                        type=entity['type'],
                        # Must be a flat map of primitive values; Neo4j cannot
                        # store a nested map as a single property
                        properties=entity['properties']
                    )

                # Second pass: create relationships
                for entity in entities:
                    for rel in entity.get('relationships', []):
                        session.run(
                            """
                            MATCH (a:Entity {id: $from_id})
                            MATCH (b:Entity {id: $to_id})
                            MERGE (a)-[r:RELATES_TO {type: $rel_type}]->(b)
                            """,
                            from_id=entity['id'],
                            to_id=rel['target_id'],
                            rel_type=rel['type']
                        )

Design your graph schema to support common enterprise query patterns. Financial services might emphasize transaction flows and risk relationships, while healthcare systems focus on patient-provider-treatment connections.

    # Method of GraphRAGConnector
        def find_related_entities(self, entity_id, relationship_types, max_depth=3):
            """Find entities connected through specified relationship types"""
            # Cypher does not accept a parameter as a variable-length bound,
            # so the validated integer is interpolated into the query text.
            max_depth = int(max_depth)

            with self.driver.session() as session:
                result = session.run(
                    f"""
                    MATCH path = (start:Entity {{id: $entity_id}})
                        -[:RELATES_TO*1..{max_depth}]->
                        (connected:Entity)
                    WHERE ALL(rel in relationships(path) WHERE rel.type IN $rel_types)
                    RETURN connected.id as entity_id,
                           connected.type as entity_type,
                           length(path) as distance,
                           [rel in relationships(path) | rel.type] as path_types
                    ORDER BY distance
                    """,
                    entity_id=entity_id,
                    rel_types=relationship_types
                )
                return [record.data() for record in result]
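To see what a depth-limited, type-filtered traversal does without a running database, here is an in-memory sketch using breadth-first search over a plain adjacency list. The graph data and the name `related_within` are made up for illustration. Unlike the Cypher query, which returns one row per matching path, this version reports each entity once at its shortest distance.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# Adjacency list: source id -> list of (relationship type, target id).
Graph = Dict[str, List[Tuple[str, str]]]


def related_within(graph: Graph, start: str, allowed: Set[str], max_depth: int) -> Dict[str, int]:
    """Breadth-first traversal returning {entity_id: shortest distance}."""
    distances: Dict[str, int] = {}
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand beyond the depth limit
        for rel_type, target in graph.get(node, []):
            if rel_type in allowed and target not in seen:
                seen.add(target)
                distances[target] = depth + 1
                queue.append((target, depth + 1))
    return distances


sample_graph: Graph = {
    "client-A": [("PARTNER_OF", "firm-B"), ("AUDITED_BY", "firm-X")],
    "firm-B": [("PARTNER_OF", "firm-C")],
    "firm-C": [("PARTNER_OF", "firm-D")],
}
found = related_within(sample_graph, "client-A", {"PARTNER_OF"}, max_depth=2)
print(found)
```

Here `firm-X` is excluded by the relationship filter and `firm-D` by the depth limit, which is the same pruning the `max_depth` and `rel_types` arguments perform in the graph query.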

Building the Query Processing Engine

The query processing engine determines how to route queries between vector and graph components, when to use each system, and how to merge results. Sophisticated hybrid systems employ machine learning models to classify query intent and route accordingly; the analyzer in this section uses simple regular-expression heuristics as a starting point.

Implement a query analyzer that classifies incoming queries into categories: semantic-only, relationship-only, or hybrid queries requiring both systems.

    import re
    from typing import Any, Dict, List

    class QueryAnalyzer:
        def __init__(self):
            self.relationship_patterns = [
                r'\b(connected|related|linked)\s+to\b',
                r'\b(partners?|colleagues?|associates?)\b',
                r'\b(reports?\s+to|manages?|supervises?)\b',
                r'\b(depends?\s+on|requires?|needs?)\b'
            ]

            self.temporal_patterns = [
                r'\b(before|after|during|since|until)\b',
                r'\b(last|previous|next|following)\s+\w+\b',
                r'\d{4}-\d{2}-\d{2}|\d{1,2}/\d{1,2}/\d{4}'
            ]

        def analyze_query(self, query: str) -> Dict[str, Any]:
            """Analyze query to determine processing strategy"""
            query_lower = query.lower()

            # Check for relationship indicators
            has_relationships = any(
                re.search(pattern, query_lower)
                for pattern in self.relationship_patterns
            )

            # Check for temporal indicators
            has_temporal = any(
                re.search(pattern, query_lower)
                for pattern in self.temporal_patterns
            )

            # Determine query strategy
            if has_relationships and has_temporal:
                strategy = "hybrid_complex"
            elif has_relationships:
                strategy = "graph_focused"
            elif has_temporal:
                strategy = "vector_temporal"
            else:
                strategy = "vector_semantic"

            return {
                "strategy": strategy,
                "has_relationships": has_relationships,
                "has_temporal": has_temporal,
                # extract_entities is assumed to be implemented elsewhere
                # (for example, an NER step or a lookup against known entities)
                "entities": self.extract_entities(query)
            }
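The analyzer calls `extract_entities`, which the code above does not define. One simple way to fill that gap, sketched here as an assumption rather than the intended implementation, is a lookup against a dictionary of known entity names. The extra `known_entities` parameter and the sample names are hypothetical; a production system would more likely use a named-entity recognition model.

```python
import re
from typing import Dict, List


def extract_entities(query: str, known_entities: Dict[str, str]) -> List[Dict[str, str]]:
    """Return known entities mentioned in the query, in order of appearance."""
    hits = []
    for name, entity_id in known_entities.items():
        # Whole-word, case-insensitive match on the entity's display name
        match = re.search(r"\b" + re.escape(name) + r"\b", query, flags=re.IGNORECASE)
        if match:
            hits.append((match.start(), {"name": name, "entity_id": entity_id}))
    return [entity for _, entity in sorted(hits, key=lambda h: h[0])]


known = {"Acme Corp": "org-1", "Jane Doe": "person-7", "Globex": "org-2"}
entities = extract_entities("Which partners are connected to Jane Doe at Acme Corp?", known)
print(entities)
```

The returned `entity_id` values are what link a query to nodes in the graph and to the `entity_id` metadata field on vectors.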

Implement the hybrid query processor that coordinates between Pinecone and your graph database based on the query analysis results.

    class HybridQueryProcessor:
        def __init__(self, pinecone_index, graph_connector):
            self.vector_index = pinecone_index
            self.graph = graph_connector
            self.analyzer = QueryAnalyzer()

        async def process_query(self, query: str, top_k: int = 10) -> List[Dict]:
            """Process query using hybrid approach"""
            analysis = self.analyzer.analyze_query(query)
            strategy = analysis['strategy']

            if strategy == "vector_semantic":
                return await self.vector_only_search(query, top_k)
            elif strategy == "graph_focused":
                return await self.graph_focused_search(query, analysis)
            elif strategy == "hybrid_complex":
                return await self.hybrid_search(query, analysis, top_k)
            else:
                return await self.vector_temporal_search(query, analysis, top_k)

        async def hybrid_search(self, query: str, analysis: Dict, top_k: int) -> List[Dict]:
            """Execute hybrid search combining vector and graph results"""
            # Generate query embedding
            query_embedding = generate_embedding(query)

            # Sequential strategy: the vector search runs first and its matches
            # seed the graph expansion. Both client calls below are blocking;
            # run them in a thread pool if the event loop must stay responsive.
            vector_results = self.vector_index.query(
                vector=query_embedding,
                top_k=top_k * 2,  # Get more candidates for filtering
                include_metadata=True
            )

            # Extract entities from vector results for graph expansion
            entity_ids = [
                match['metadata']['entity_id']
                for match in vector_results['matches']
                if 'entity_id' in match['metadata']
            ]

            # Find related entities through graph traversal
            related_entities = []
            for entity_id in entity_ids[:5]:  # Limit to prevent explosion
                related = self.graph.find_related_entities(
                    entity_id,
                    relationship_types=['RELATES_TO', 'CONNECTED_TO'],
                    max_depth=2
                )
                related_entities.extend(related)

            # Merge and rank results
            return self.merge_results(vector_results, related_entities, top_k)
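The `merge_results` step is left undefined above. One possible approach, offered as a sketch rather than the method the processor is meant to use, is to blend each entity's vector similarity with a bonus that shrinks with graph distance. The `graph_weight` parameter and the scoring formula are assumptions, and this version takes the list of matches directly instead of the full Pinecone response.

```python
from typing import Dict, List


def merge_results(vector_matches: List[Dict], related_entities: List[Dict],
                  top_k: int, graph_weight: float = 0.5) -> List[Dict]:
    """Blend vector similarity with a graph-proximity bonus and return the top_k."""
    scores: Dict[str, float] = {}
    for match in vector_matches:
        entity_id = match["metadata"]["entity_id"]
        scores[entity_id] = max(scores.get(entity_id, 0.0), match["score"])
    for rel in related_entities:
        # distance is at least 1, so closer entities receive a larger bonus
        bonus = graph_weight / rel["distance"]
        scores[rel["entity_id"]] = scores.get(rel["entity_id"], 0.0) + bonus
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [{"entity_id": eid, "score": score} for eid, score in ranked[:top_k]]


vector_matches = [
    {"score": 0.75, "metadata": {"entity_id": "client-A"}},
    {"score": 0.5, "metadata": {"entity_id": "firm-B"}},
]
related = [
    {"entity_id": "firm-B", "distance": 1},
    {"entity_id": "firm-C", "distance": 2},
]
top = merge_results(vector_matches, related, top_k=2)
print(top)
```

In this example `firm-B` overtakes `client-A` because it is both semantically relevant and directly connected, which is the behaviour a hybrid ranking is meant to reward. The weight would need tuning against real relevance judgments.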

Implementing Advanced Retrieval Strategies

Advanced hybrid RAG systems employ sophisticated retrieval strategies that go beyond simple vector similarity and graph traversal. These include multi-hop reasoning, temporal-aware retrieval, and context-preserving result merging.

Multi-hop reasoning enables the system to answer complex queries that require connecting information across multiple documents and relationships. This is particularly valuable for enterprise scenarios involving supply chain analysis, organizational impact assessment, or regulatory compliance tracking.

    class MultiHopRetriever:
        def __init__(self, vector_index, graph_connector):
            self.vector_index = vector_index
            self.graph = graph_connector
            self.max_hops = 3

        def multi_hop_search(self, query: str, evidence_threshold: float = 0.7) -> List[Dict]:
            """Perform multi-hop reasoning across vector and graph data"""
            hop_results = []
            visited = set()  # entities already expanded, to avoid cycles

            # Initial vector search (helper assumed to return matches with metadata)
            initial_results = self.vector_search_with_entities(query)
            hop_results.append({
                "hop": 0,
                "results": initial_results,
                "reasoning": "Initial semantic search"
            })

            # Extract entities for next hop
            current_entities = {
                result['metadata']['entity_id']
                for result in initial_results
                if result['score'] > evidence_threshold
                and 'entity_id' in result.get('metadata', {})
            }

            # Iterative hop expansion
            for hop in range(1, self.max_hops + 1):
                if not current_entities:
                    break
                visited.update(current_entities)

                # Find connected entities
                connected_entities = set()
                for entity in current_entities:
                    related = self.graph.find_related_entities(
                        entity,
                        relationship_types=['RELATES_TO', 'INFLUENCES', 'DEPENDS_ON'],
                        max_depth=1
                    )
                    connected_entities.update(r['entity_id'] for r in related)

                # Skip entities expanded in earlier hops
                connected_entities -= visited

                # Vector search within connected entity context
                if connected_entities:
                    hop_results.append(self.contextual_vector_search(
                        query, connected_entities, hop
                    ))

                # Update entities for next hop
                current_entities = connected_entities

            return self.synthesize_multi_hop_results(hop_results)
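The final synthesis step is not defined in the retriever. A simple option, shown here as an assumption, is to keep each document once and discount its similarity score by how many hops away it was found. The `decay` parameter and the assumption that every hop entry has the shape `{"hop": int, "results": [{"id", "score"}]}` are illustrative choices.

```python
from typing import Dict, List


def synthesize_multi_hop_results(hop_results: List[Dict], decay: float = 0.5) -> List[Dict]:
    """Keep each document once, scored by similarity * decay**hop, best first."""
    best: Dict[str, Dict] = {}
    for hop_entry in hop_results:
        weight = decay ** hop_entry["hop"]
        for result in hop_entry["results"]:
            adjusted = result["score"] * weight
            current = best.get(result["id"])
            if current is None or adjusted > current["score"]:
                best[result["id"]] = {
                    "id": result["id"],
                    "score": adjusted,
                    "hop": hop_entry["hop"],
                }
    return sorted(best.values(), key=lambda r: r["score"], reverse=True)


hops = [
    {"hop": 0, "results": [{"id": "doc-1", "score": 0.75}]},
    {"hop": 1, "results": [{"id": "doc-2", "score": 1.0}, {"id": "doc-1", "score": 0.5}]},
]
ranked = synthesize_multi_hop_results(hops)
print([(r["id"], r["hop"]) for r in ranked])
```

Discounting by hop keeps distant evidence from outranking direct matches unless it is substantially more similar to the query.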

Temporal-aware retrieval handles time-sensitive queries by incorporating temporal context into both vector and graph searches. This enables answering questions about how relationships and information have evolved over time.

    import time

    # Method of a retriever class that holds self.vector_index and self.graph
    def temporal_aware_search(self, query: str, time_context: Dict) -> List[Dict]:
        """Search with temporal awareness"""
        # Pinecone range operators ($gte, $lte) compare numbers only, so
        # timestamps are assumed to be stored as Unix epoch seconds.
        start_time = time_context.get('start_time', 0)
        end_time = time_context.get('end_time', time.time())

        # Temporal filter for vector search
        temporal_filter = {
            "timestamp": {
                "$gte": start_time,
                "$lte": end_time
            }
        }

        # Vector search with temporal constraints
        query_embedding = generate_embedding(query)
        temporal_vector_results = self.vector_index.query(
            vector=query_embedding,
            filter=temporal_filter,
            top_k=20,
            include_metadata=True
        )

        # Graph search with temporal constraints (helper assumed to exist
        # on the graph connector)
        temporal_graph_results = self.graph.temporal_relationship_search(
            query, start_time, end_time
        )

        return self.merge_temporal_results(
            temporal_vector_results,
            temporal_graph_results
        )
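Since Pinecone's range operators work on numeric metadata, ISO 8601 timestamps need converting before they can be used in a filter. The helper below is a small sketch of that conversion; the names `to_epoch` and `build_time_filter` are illustrative, and naive timestamps are assumed to be UTC.

```python
from datetime import datetime, timezone
from typing import Dict, Optional


def to_epoch(iso_timestamp: str) -> int:
    """Convert an ISO 8601 timestamp to Unix epoch seconds (naive values treated as UTC)."""
    parsed = datetime.fromisoformat(iso_timestamp)
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)
    return int(parsed.timestamp())


def build_time_filter(start_iso: Optional[str], end_iso: Optional[str]) -> Dict:
    """Build a metadata filter on a numeric 'timestamp' field; omit missing bounds."""
    bounds = {}
    if start_iso:
        bounds["$gte"] = to_epoch(start_iso)
    if end_iso:
        bounds["$lte"] = to_epoch(end_iso)
    return {"timestamp": bounds} if bounds else {}


year_filter = build_time_filter("2024-01-01T00:00:00", "2024-12-31T23:59:59+00:00")
print(year_filter)
```

The same conversion should be applied at ingestion time so that stored values and query bounds use the same unit.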

Production Deployment and Monitoring

Deploying hybrid RAG systems in production requires robust monitoring, performance optimization, and cost management strategies. Pinecone Serverless simplifies many operational aspects but introduces new considerations around cold starts, query routing efficiency, and cross-system latency management.

Implement comprehensive monitoring that tracks both individual component performance and end-to-end query latency. Set up alerts for unusual patterns that might indicate data quality issues or system performance degradation.

    import logging
    import time
    import uuid
    from dataclasses import dataclass
    from typing import Dict, Optional

    @dataclass
    class QueryMetrics:
        query_id: str
        strategy: str
        vector_latency: Optional[float]
        graph_latency: Optional[float]
        total_latency: float
        result_count: int
        cost_estimate: float

    class ProductionHybridRAG:
        def __init__(self, pinecone_index, graph_connector):
            self.vector_index = pinecone_index
            self.graph = graph_connector
            self.analyzer = QueryAnalyzer()
            self.metrics_logger = logging.getLogger('hybrid_rag_metrics')

        async def production_query(self, query: str, user_context: Dict) -> Dict:
            """Production-ready query with monitoring and optimization"""
            start_time = time.perf_counter()
            query_id = str(uuid.uuid4())

            try:
                # Query analysis and routing (select_optimal_strategy and the
                # optimized_* helpers are assumed to be implemented elsewhere)
                analysis = self.analyzer.analyze_query(query)
                strategy = self.select_optimal_strategy(analysis, user_context)

                # Execute query with strategy-specific optimization
                if strategy == "vector_optimized":
                    results = await self.optimized_vector_search(query)
                    vector_latency = time.perf_counter() - start_time
                    graph_latency = None
                elif strategy == "graph_optimized":
                    results = await self.optimized_graph_search(query, analysis)
                    vector_latency = None
                    graph_latency = time.perf_counter() - start_time
                else:
                    results = await self.optimized_hybrid_search(query, analysis)
                    vector_latency = results['vector_latency']
                    graph_latency = results['graph_latency']

                # Calculate metrics
                total_latency = time.perf_counter() - start_time

                metrics = QueryMetrics(
                    query_id=query_id,
                    strategy=strategy,
                    vector_latency=vector_latency,
                    graph_latency=graph_latency,
                    total_latency=total_latency,
                    result_count=len(results.get('matches', [])),
                    cost_estimate=self.estimate_query_cost(strategy, results)
                )

                # Log metrics
                self.metrics_logger.info(f"Query metrics: {metrics}")

                return {
                    "results": results,
                    "metrics": metrics,
                    "query_id": query_id
                }

            except Exception as e:
                self.metrics_logger.error(f"Query {query_id} failed: {str(e)}")
                raise
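The per-query metrics logged above can also feed a simple alerting check. The following sketch keeps a rolling window of `total_latency` values and flags when the 95th percentile exceeds a threshold. The class name, window size, and threshold are illustrative assumptions; a production deployment would more likely export these values to an existing monitoring system.

```python
from collections import deque
from statistics import quantiles
from typing import Deque, Optional


class LatencyMonitor:
    """Rolling-window p95 latency check (window and threshold are illustrative)."""

    def __init__(self, window: int = 100, p95_threshold: float = 0.5):
        self.samples: Deque[float] = deque(maxlen=window)
        self.p95_threshold = p95_threshold

    def record(self, total_latency: float) -> None:
        self.samples.append(total_latency)

    def p95(self) -> Optional[float]:
        # quantiles() needs at least two data points
        if len(self.samples) < 2:
            return None
        # n=20 yields 19 cut points; the last one is the 95th percentile
        return quantiles(self.samples, n=20)[-1]

    def should_alert(self) -> bool:
        value = self.p95()
        return value is not None and value > self.p95_threshold


monitor = LatencyMonitor(window=100, p95_threshold=0.5)
for _ in range(20):
    monitor.record(0.1)       # healthy queries
healthy = monitor.should_alert()
for _ in range(5):
    monitor.record(2.0)       # a burst of slow queries
degraded = monitor.should_alert()
print(healthy, degraded)
```

Calling `monitor.record(metrics.total_latency)` after each query would be enough to wire this into the production path.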

Implement cost optimization strategies specific to serverless architectures. Pinecone Serverless charges based on read/write operations and storage, making query efficiency crucial for cost management.

    # Method of ProductionHybridRAG
        def estimate_query_cost(self, strategy: str, results: Dict) -> float:
            """Estimate query cost for budgeting and optimization"""
            # Illustrative placeholder rates, not actual pricing; replace them
            # with figures from your own billing data
            base_vector_cost = 0.0001  # Per query
            base_graph_cost = 0.0002  # Per query

            if strategy == "vector_optimized":
                return base_vector_cost
            elif strategy == "graph_optimized":
                return base_graph_cost
            else:
                # Hybrid queries touch both systems, so both costs apply
                vector_ops = results.get('vector_operations', 1)
                graph_ops = results.get('graph_operations', 1)
                return (base_vector_cost * vector_ops) + (base_graph_cost * graph_ops)

Conclusion

Hybrid RAG systems represent the cutting edge of enterprise knowledge retrieval, combining semantic understanding with explicit relationship mapping to handle complex, multi-dimensional queries that traditional RAG systems cannot address. By leveraging Pinecone Serverless alongside graph database capabilities, organizations can build systems that scale automatically while maintaining the performance and accuracy needed for critical business operations.

The architecture patterns and implementation strategies outlined in this guide provide a foundation for building production-ready hybrid RAG systems. From query analysis and routing to multi-hop reasoning and temporal awareness, each component contributes to a system that understands not just what information exists, but how that information connects to create comprehensive, actionable insights.

The serverless approach offered by Pinecone eliminates many traditional infrastructure concerns while introducing new opportunities for cost optimization and performance tuning. By combining this with robust graph database integration and sophisticated query processing, enterprises can deploy RAG systems that adapt to diverse query patterns while maintaining predictable performance and costs.

Ready to transform your enterprise knowledge retrieval capabilities? Start by implementing the foundational vector-graph integration patterns shown here, then gradually add advanced features like multi-hop reasoning and temporal awareness as your use cases evolve. The hybrid RAG architecture will position your organization to handle increasingly complex information needs while maintaining the scalability and reliability that enterprise applications demand.