Enterprise AI systems are drowning in their own data. Companies invest heavily in building vast knowledge repositories, yet their AI assistants still provide irrelevant answers, cite outdated information, and frustrate users with generic responses. The problem isn’t the volume of data; it’s the inability to retrieve the right information at the right time based on context.

Traditional RAG systems operate like enthusiastic librarians who bring you every book that mentions your keyword, regardless of whether you’re writing a research paper or looking for a quick definition. This spray-and-pray approach to information retrieval creates noise, reduces accuracy, and undermines user trust in AI systems.

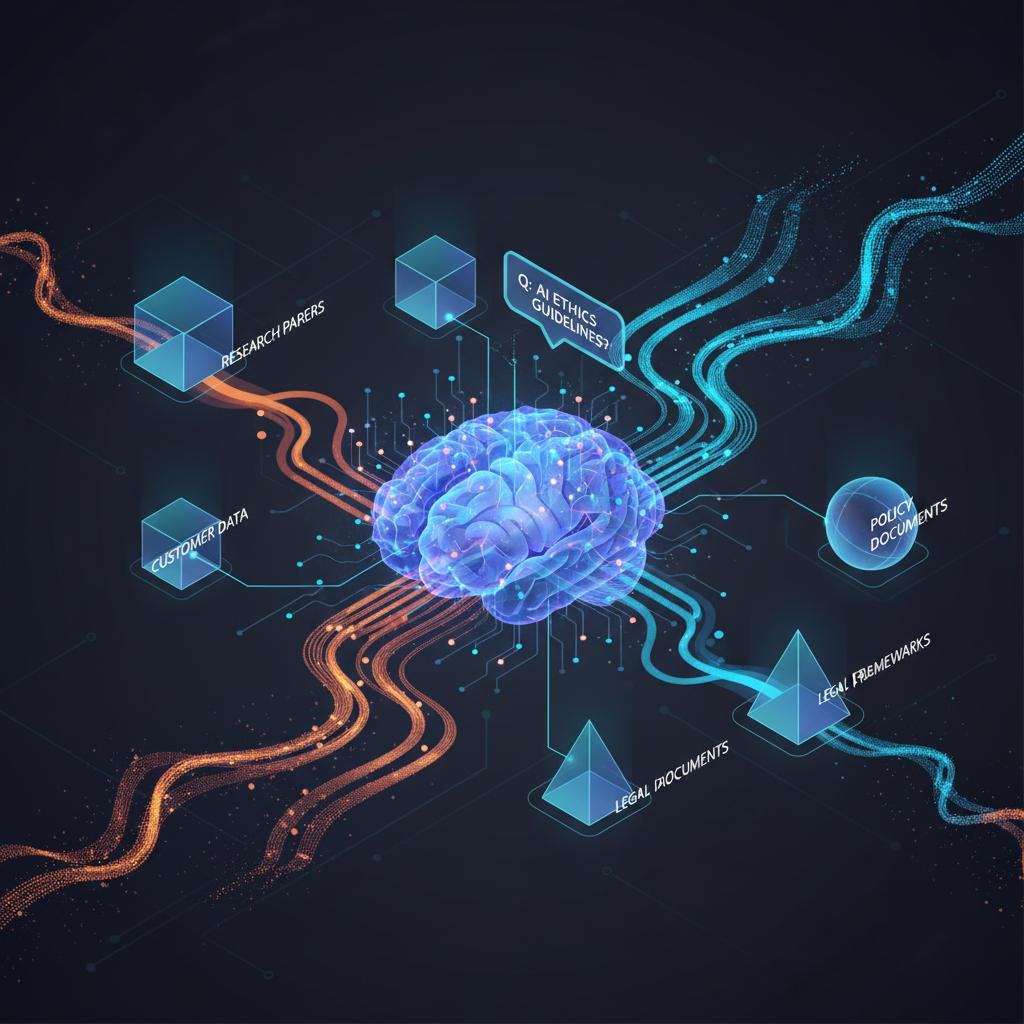

Contextual RAG represents a fundamental shift in how we approach knowledge retrieval. Instead of matching each query in isolation, whether by keyword or by embedding similarity, contextual RAG systems infer the user’s intent, consider conversation history, analyze the current task, and dynamically adjust retrieval strategies to surface the most relevant information. This isn’t just an incremental improvement; it’s the difference between a smart search engine and an intelligent knowledge assistant.

In this comprehensive guide, we’ll explore how to build contextual RAG systems that adapt to user needs, maintain conversation coherence, and deliver precise, relevant responses. You’ll learn the core architecture patterns, implementation strategies, and optimization techniques that separate enterprise-grade contextual RAG from basic retrieval systems.

Understanding Contextual RAG Architecture

Contextual RAG systems differ fundamentally from traditional implementations through their multi-layered approach to understanding and retrieval. While standard RAG systems follow a linear pattern of query → retrieve → generate, contextual RAG introduces dynamic decision-making at each step.

The core architecture consists of four interconnected components: the Context Engine, Dynamic Retrieval Router, Multi-Strategy Retriever, and Adaptive Generator. The Context Engine maintains state across conversations, tracking user preferences, conversation history, and task progression. This component transforms raw queries into rich contextual representations that inform downstream processes.

The Dynamic Retrieval Router acts as the system’s decision-making hub, analyzing contextual signals to determine the optimal retrieval strategy. Unlike static systems that apply the same approach to every query, the router selects from multiple retrieval methods based on query complexity, user intent, and available context.
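To make the router's role concrete, here is a minimal rule-based sketch. The `QuerySignals` fields, the strategy names, and the thresholds are all illustrative assumptions rather than part of any particular framework; a production router would more likely use a trained classifier.

```python
from dataclasses import dataclass


@dataclass
class QuerySignals:
    """Hypothetical contextual signals handed to the router."""
    query: str
    intent: str          # assumed labels: "exploratory", "troubleshooting", "lookup"
    history_turns: int   # number of earlier turns in the conversation


def route(signals: QuerySignals) -> list[str]:
    """Return the retrieval strategies to run, in priority order."""
    words = signals.query.split()
    # Acronyms and tokens containing digits (error codes, versions) favor exact matching.
    has_exact_term = any(
        (len(w) > 1 and w.isupper()) or any(c.isdigit() for c in w) for w in words
    )
    strategies = []
    if has_exact_term or signals.intent == "lookup":
        strategies.append("sparse")
    if signals.intent in ("exploratory", "troubleshooting") or len(words) > 8:
        strategies.append("dense")
    # Only follow entity relationships once there is conversation context to anchor them.
    if signals.intent == "exploratory" and signals.history_turns > 0:
        strategies.append("graph")
    return strategies or ["dense"]  # fall back to semantic search
```

Keeping the router as a pure function of its signals makes it easy to unit test and to swap for a learned model later.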

Multi-Strategy Retrieval Implementation

Contextual RAG systems employ multiple retrieval strategies simultaneously, each optimized for different information types and user needs. Dense retrieval excels at semantic similarity matching, while sparse retrieval captures exact keyword matches and technical terminology. Hybrid approaches combine both methods, weighted dynamically based on query characteristics.

Graph-based retrieval adds another dimension by leveraging relationships between concepts, enabling the system to find information connected through entity relationships rather than just textual similarity. This proves particularly valuable for complex queries requiring multi-hop reasoning across different knowledge domains.
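As a rough illustration of multi-hop lookup, the sketch below walks a small in-memory entity graph breadth-first and records how many hops away each related entity is. The adjacency-dict representation and the entity names are assumptions made for the example; a real deployment would typically query a graph database instead.

```python
from collections import deque


def related_entities(graph: dict[str, list[str]], start: str, max_hops: int = 2) -> dict[str, int]:
    """Return entities reachable from `start` within `max_hops`, mapped to hop distance."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                queue.append(neighbor)
    del distance[start]  # the starting entity is not a result
    return distance


# Hypothetical knowledge graph: edges point from an entity to related entities.
graph = {
    "invoice-service": ["payments-db", "auth-service"],
    "payments-db": ["backup-policy"],
    "backup-policy": ["retention-rules"],
}
hops = related_entities(graph, "invoice-service", max_hops=2)
```

Documents attached to closer entities can then be ranked ahead of those attached to more distant ones.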

Implementing multi-strategy retrieval requires careful orchestration of different retrieval methods. The system must execute multiple retrieval approaches in parallel, then intelligently merge and rank results based on contextual relevance scores. This fusion process considers not just individual result quality but how well different pieces of information complement each other.
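One common way to merge ranked lists from several retrievers is reciprocal rank fusion (RRF). The article does not prescribe a specific fusion method, so treat the following as one reasonable option: the per-strategy `weights` argument is an assumed extension to let context shift the balance, and `k = 60` is a conventional default rather than a tuned value.

```python
from collections import defaultdict
from typing import Optional


def reciprocal_rank_fusion(
    rankings: dict[str, list[str]],
    weights: Optional[dict[str, float]] = None,
    k: int = 60,
) -> list[str]:
    """Fuse ranked doc-id lists; each hit contributes weight / (k + rank)."""
    weights = weights or {}
    scores: dict[str, float] = defaultdict(float)
    for strategy, doc_ids in rankings.items():
        w = weights.get(strategy, 1.0)
        for rank, doc_id in enumerate(doc_ids, start=1):
            scores[doc_id] += w / (k + rank)
    # Highest fused score first; ties broken by doc id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


rankings = {"dense": ["a", "b", "c"], "sparse": ["b", "d"]}
fused = reciprocal_rank_fusion(rankings)
keyword_heavy = reciprocal_rank_fusion(rankings, weights={"sparse": 2.0})
```

Because RRF works on ranks rather than raw scores, it avoids having to normalize similarity scores that live on different scales across retrievers.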

Building the Context Engine

The Context Engine serves as the memory and intelligence layer of contextual RAG systems. It maintains multiple types of context: conversational context tracking dialogue history and user intent evolution, task context understanding the current workflow or objective, and user context capturing preferences, expertise level, and interaction patterns.

Conversational context extends beyond simple chat history to include semantic understanding of topic evolution, entity tracking across turns, and intent progression analysis. The engine identifies when conversations shift topics, maintains entity resolution across references, and recognizes when users need different types of information support.

Task context requires understanding the broader workflow in which the RAG system operates. Whether users are conducting research, troubleshooting problems, or exploring new concepts, the Context Engine adapts retrieval strategies to support the current task phase. This might mean prioritizing comprehensive background information for exploration phases or focusing on specific, actionable solutions during problem-solving.
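The three context types can be held in a small state object. The sketch below is one possible shape, with all class and field names invented for illustration; entity extraction is assumed to happen upstream and is passed in per turn.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    role: str                                   # "user" or "assistant"
    text: str
    entities: list[str] = field(default_factory=list)


@dataclass
class ContextState:
    turns: list[Turn] = field(default_factory=list)          # conversational context
    task_phase: str = "exploration"                           # task context
    expertise: str = "intermediate"                           # user context
    preferences: dict[str, str] = field(default_factory=dict)

    def add_turn(self, turn: Turn) -> None:
        self.turns.append(turn)

    def active_entities(self, last_n: int = 3) -> list[str]:
        """Entities from the most recent turns, newest first, without duplicates."""
        seen: list[str] = []
        for turn in reversed(self.turns[-last_n:]):
            for entity in turn.entities:
                if entity not in seen:
                    seen.append(entity)
        return seen

    def enrich(self, query: str) -> dict:
        """Turn a raw query into a contextual representation for downstream steps."""
        return {
            "query": query,
            "entities": self.active_entities(),
            "task_phase": self.task_phase,
            "expertise": self.expertise,
        }


state = ContextState(task_phase="problem-solving")
state.add_turn(Turn("user", "How do I set up Postgres?", ["Postgres"]))
state.add_turn(Turn("user", "What about replication?", ["replication", "Postgres"]))
enriched = state.enrich("Is it synchronous by default?")
```

The enriched dictionary gives the router and retrievers the referent ("replication" in "Postgres") that the raw follow-up question leaves implicit.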

Implementing Dynamic Context Weighting

Effective Context Engines don’t treat all contextual signals equally. They implement dynamic weighting systems that adjust the importance of different context types based on query characteristics and user behavior patterns. Recent conversation turns might receive higher weight for follow-up questions, while user preferences become more important for ambiguous queries.
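A simple way to express this is a function that boosts individual context types and then normalizes the result. The boost amounts below are placeholder assumptions, and `ambiguity` is assumed to be a score between 0 and 1 produced elsewhere.

```python
def context_weights(is_follow_up: bool, ambiguity: float) -> dict[str, float]:
    """Return normalized weights for conversation, task, and user context."""
    raw = {
        # Follow-up questions lean on the most recent turns.
        "conversation": 1.0 + (1.0 if is_follow_up else 0.0),
        "task": 1.0,
        # Ambiguous queries lean on stored user preferences.
        "user": 1.0 + ambiguity,
    }
    total = sum(raw.values())
    return {name: value / total for name, value in raw.items()}
```

Normalizing keeps the weights comparable across queries, so downstream scoring does not need to know how many boosts were applied.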

The weighting system learns from user interactions, tracking which contextual signals lead to successful information retrieval and user satisfaction. This creates a feedback loop that continuously improves context interpretation and application over time.

Context decay mechanisms ensure that older, less relevant information doesn’t overwhelm current needs. The engine implements time-based and interaction-based decay functions that gradually reduce the influence of outdated context while preserving important persistent preferences and learned patterns.
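The decay described above can be sketched as the product of a time-based half-life and a per-turn factor, with persistent items exempt. The 30-minute half-life and the 0.8 per-turn factor are arbitrary starting points chosen for the example, not recommended values.

```python
def decayed_weight(
    age_seconds: float,
    turns_since: int,
    half_life_seconds: float = 1800.0,
    per_turn_factor: float = 0.8,
    persistent: bool = False,
) -> float:
    """Influence of a context item, between 0 and 1."""
    if persistent:
        return 1.0  # long-lived preferences are exempt from decay
    time_decay = 0.5 ** (age_seconds / half_life_seconds)   # halves every half-life
    turn_decay = per_turn_factor ** turns_since             # shrinks with each newer turn
    return time_decay * turn_decay
```

Combining both signals means an item fades whether the user walks away for an hour or quickly moves through several unrelated turns.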

Advanced Retrieval Strategies

Contextual RAG systems excel through sophisticated retrieval strategies that adapt to different information needs and query types. Semantic clustering groups related information together, enabling the system to retrieve comprehensive information sets rather than isolated fragments.

Intent-based retrieval recognizes that query types differ: exploratory questions need broad, educational content, while specific problem-solving queries require targeted, actionable information. The system maintains intent classification models that analyze query patterns and conversation context to determine optimal retrieval approaches.
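As a stand-in for a trained intent model, the sketch below uses keyword patterns and falls back to the previous turn's intent for short follow-ups. The intent labels and patterns are assumptions for illustration only; real systems usually train a classifier on labeled queries.

```python
import re
from typing import Optional

# Checked in insertion order, so troubleshooting cues win over exploratory ones.
INTENT_PATTERNS = {
    "troubleshooting": re.compile(
        r"\b(error|fail(s|ed|ing)?|broken|fix|crash(es|ed)?|not working)\b", re.I
    ),
    "exploratory": re.compile(r"\b(what is|overview|explain|how does|why)\b", re.I),
}


def classify_intent(query: str, previous_intent: Optional[str] = None) -> str:
    for intent, pattern in INTENT_PATTERNS.items():
        if pattern.search(query):
            return intent
    # Short follow-ups ("and on Linux?") inherit the intent of the previous turn.
    if previous_intent and len(query.split()) <= 4:
        return previous_intent
    return "lookup"
```

The `previous_intent` fallback is the part that uses conversation context: a terse follow-up carries little signal on its own but usually continues the task already under way.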

Hierarchical retrieval strategies enable the system to navigate information at different levels of detail. Initial retrieval might focus on high-level concepts and summaries, with subsequent passes diving deeper into specific aspects based on user engagement and follow-up questions.

Implementing Contextual Re-ranking

Raw retrieval results require intelligent re-ranking that considers contextual relevance beyond semantic similarity scores. Contextual re-ranking algorithms evaluate how well retrieved information fits the current conversation context, user expertise level, and task requirements.

The re-ranking process considers multiple factors: semantic relevance to the immediate query, contextual fit with conversation history, information freshness and accuracy, source authority and reliability, and complementarity with other retrieved results. These factors are weighted dynamically based on context analysis.
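A minimal version of this scoring is a weighted sum over per-candidate factors. In the sketch below the `Candidate` fields and default weights are assumptions, every factor is assumed to be pre-computed and scaled to the range 0 to 1, and complementarity between results is left out to keep the example short.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    doc_id: str
    semantic: float      # similarity to the immediate query
    context_fit: float   # overlap with conversation history
    freshness: float     # 1.0 = recently updated, 0.0 = stale
    authority: float     # source reliability

# Illustrative defaults; a context-aware system would adjust these per query.
DEFAULT_WEIGHTS = {"semantic": 0.4, "context_fit": 0.3, "freshness": 0.15, "authority": 0.15}


def rerank(candidates: list[Candidate], weights: Optional[dict[str, float]] = None) -> list[Candidate]:
    """Order candidates by a weighted combination of relevance factors."""
    w = weights or DEFAULT_WEIGHTS

    def score(c: Candidate) -> float:
        return (
            w["semantic"] * c.semantic
            + w["context_fit"] * c.context_fit
            + w["freshness"] * c.freshness
            + w["authority"] * c.authority
        )

    return sorted(candidates, key=score, reverse=True)


candidates = [
    Candidate("a", semantic=0.9, context_fit=0.1, freshness=0.2, authority=0.5),
    Candidate("b", semantic=0.7, context_fit=0.9, freshness=0.9, authority=0.8),
]
ranked = rerank(candidates)
```

Here document "b" outranks "a" despite lower semantic similarity, because it fits the conversation and is fresher.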

Machine learning models trained on user interaction data can learn sophisticated re-ranking patterns that optimize for user satisfaction rather than just similarity scores. These models consider implicit feedback signals like time spent reading responses, follow-up question patterns, and explicit user feedback.

Context-Aware Generation

The generation phase in contextual RAG systems goes beyond inserting retrieved passages into a fixed prompt. It produces responses that acknowledge context, maintain conversation coherence, and adapt to user needs. Context-aware generators consider conversation history, user expertise level, and current task requirements when formulating responses.

Response personalization ensures that technical explanations match user expertise levels, terminology aligns with user preferences, and information depth suits the current task phase. The generator maintains user models that capture communication preferences, technical background, and learning patterns.
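One lightweight way to apply personalization is at prompt-assembly time. The sketch below builds a prompt string from the question, retrieved passages, expertise level, and recent turns; the style instructions and prompt layout are illustrative assumptions, and the call to an actual language model is deliberately left out.

```python
from typing import Sequence

# Hypothetical style instructions keyed by expertise level.
STYLE_BY_EXPERTISE = {
    "beginner": "Explain in plain language and define any technical term you use.",
    "intermediate": "Be concise and assume working knowledge of the basics.",
    "expert": "Be terse and precise; skip introductory explanations.",
}


def build_prompt(
    question: str,
    passages: Sequence[str],
    expertise: str = "intermediate",
    recent_turns: Sequence[str] = (),
) -> str:
    """Assemble a generation prompt that reflects user expertise and history."""
    style = STYLE_BY_EXPERTISE.get(expertise, STYLE_BY_EXPERTISE["intermediate"])
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    history = "\n".join(recent_turns) if recent_turns else "(no earlier turns)"
    return (
        f"Instructions: {style} Cite sources by their bracketed number.\n\n"
        f"Conversation so far:\n{history}\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


prompt = build_prompt(
    "How do I rotate keys?",
    ["Keys rotate every 90 days."],
    expertise="beginner",
)
```

Including recent turns in the prompt also gives the model what it needs to resolve pronouns and build on earlier explanations.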

Coherence maintenance across conversation turns requires sophisticated state tracking and reference resolution. The generator must understand when to use pronouns versus full entity names, how to build upon previous explanations, and when to provide reminders or summaries of earlier discussion points.

Adaptive Response Formatting

Contextual RAG systems adapt response formatting based on user needs and information types. Exploratory queries might receive structured overviews with clear section breaks, while troubleshooting questions get step-by-step procedures with clear action items.
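A small formatter can illustrate the idea. The intent labels and the `compact` flag (standing in for a constrained delivery context such as a small screen) are assumptions for this sketch.

```python
def format_response(points: list[str], intent: str, compact: bool = False) -> str:
    """Render answer points as numbered steps or as a bulleted overview."""
    if compact:
        points = points[:3]  # keep only the first few items on constrained displays
    if intent == "troubleshooting":
        # Ordered steps signal that sequence matters.
        return "\n".join(f"{i}. {p}" for i, p in enumerate(points, start=1))
    return "\n".join(f"- {p}" for p in points)


steps = format_response(["Check the logs", "Restart the worker"], "troubleshooting")
```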

The formatting engine considers information complexity, user preferences, and delivery context when structuring responses. Mobile users might receive more concise, action-oriented responses, while desktop users can handle more detailed explanations with rich formatting.

Response adaptation extends to citing sources and providing evidence. The system learns user preferences for citation styles, evidence depth, and source types, adapting its referencing approach accordingly while maintaining transparency and trustworthiness.

Implementation Best Practices

Building production-ready contextual RAG systems requires careful attention to performance, scalability, and maintainability. Context management can become computationally expensive, requiring efficient data structures and caching strategies to maintain responsiveness.

Implement context pruning mechanisms that maintain relevant information while discarding outdated or irrelevant context data. This prevents context windows from growing indefinitely while preserving important conversational and user preference information.
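A pruning pass might look like the following sketch, which always keeps pinned items (such as stored preferences), drops anything below a weight floor, and fills the remaining budget with the highest-weight items. `ContextItem`, the weight floor, and the budget are assumed names and values for illustration.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    text: str
    weight: float          # current relevance, e.g. after decay
    pinned: bool = False   # persistent preferences are never pruned


def prune_context(items: list[ContextItem], max_items: int, min_weight: float = 0.05) -> list[ContextItem]:
    """Keep pinned items plus the highest-weight remainder, up to `max_items`."""
    pinned = [it for it in items if it.pinned]
    candidates = [it for it in items if not it.pinned and it.weight >= min_weight]
    candidates.sort(key=lambda it: it.weight, reverse=True)
    budget = max(0, max_items - len(pinned))
    return pinned + candidates[:budget]


items = [
    ContextItem("prefers Python examples", 0.3, pinned=True),
    ContextItem("asked about S3 last week", 0.01),
    ContextItem("debugging a timeout", 0.9),
    ContextItem("mentioned staging cluster", 0.4),
    ContextItem("greeting", 0.2),
]
kept = prune_context(items, max_items=3)
```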

Modular architecture design enables independent optimization of different system components. The Context Engine, retrieval strategies, and generation components should be loosely coupled, allowing for iterative improvement and component replacement without system-wide changes.

Performance Optimization Strategies

Contextual RAG systems must balance sophistication with performance requirements. Implement caching strategies for frequently accessed context patterns and retrieval results. Context fingerprinting can identify similar conversational patterns, enabling reuse of previous analysis and retrieval decisions.
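As a starting point, a context fingerprint can be a hash over normalized context fields, used as a cache key for retrieval results. Note the limitation: the sketch below only matches contexts that are identical after normalization, whereas recognizing merely similar patterns would need something like embedding-based lookup. The function and class names are assumptions.

```python
import hashlib
from typing import Callable


def context_fingerprint(intent: str, entities: list[str], query: str) -> str:
    """Stable hash of the normalized intent, entity set, and query text."""
    normalized_query = " ".join(query.lower().split())
    normalized_entities = ",".join(sorted({e.lower() for e in entities}))
    key = f"{intent}|{normalized_entities}|{normalized_query}"
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


class RetrievalCache:
    """In-memory cache keyed by context fingerprint."""

    def __init__(self) -> None:
        self._store: dict[str, list[str]] = {}
        self.hits = 0

    def get_or_compute(self, fingerprint: str, compute: Callable[[], list[str]]) -> list[str]:
        if fingerprint in self._store:
            self.hits += 1
            return self._store[fingerprint]
        result = compute()  # run the expensive retrieval only on a miss
        self._store[fingerprint] = result
        return result
```

In production the dictionary would typically be replaced by a shared cache with expiry, so that stale retrieval results do not outlive the documents they point to.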

Asynchronous processing pipelines allow expensive context analysis and multi-strategy retrieval to occur in parallel, reducing user-perceived latency. Implement intelligent batching for retrieval operations and context updates to maximize throughput while maintaining responsiveness.
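With Python's `asyncio`, running retrievers concurrently and tolerating a slow or failing one can be sketched as follows. The two search coroutines are stubs that simulate I/O with `asyncio.sleep`; their names and return values are placeholders for real retriever clients.

```python
import asyncio


async def dense_search(query: str) -> list[str]:
    await asyncio.sleep(0.05)   # stand-in for a vector-store call
    return ["dense-1", "dense-2"]


async def sparse_search(query: str) -> list[str]:
    await asyncio.sleep(0.05)   # stand-in for a keyword-index call
    return ["sparse-1"]


async def retrieve_all(query: str, timeout: float = 1.0) -> dict[str, list[str]]:
    """Run all retrievers concurrently; a failed or slow one yields an empty list."""
    searches = {"dense": dense_search(query), "sparse": sparse_search(query)}
    results = await asyncio.gather(
        *(asyncio.wait_for(coro, timeout) for coro in searches.values()),
        return_exceptions=True,  # collect errors instead of cancelling the others
    )
    return {
        name: ([] if isinstance(result, Exception) else result)
        for name, result in zip(searches, results)
    }


results = asyncio.run(retrieve_all("how do I rotate keys?"))
```

Total latency is then bounded by the slowest retriever (or the timeout) rather than the sum of all retrievers.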

Monitoring and metrics collection should focus on contextual effectiveness rather than just traditional performance metrics. Track context utilization rates, contextual relevance scores, and user satisfaction with context-aware responses to guide system optimization efforts.

Contextual RAG represents the evolution from simple information retrieval to intelligent knowledge assistance. By understanding user intent, maintaining conversation context, and adapting retrieval strategies dynamically, these systems deliver the personalized, relevant experiences that enterprise users demand. The implementation requires sophisticated engineering and careful attention to context management, but the payoff is answers that are more relevant, more coherent across turns, and easier for users to trust.

Ready to build contextual RAG systems that truly understand your users? Start by implementing the Context Engine architecture outlined here, then gradually add multi-strategy retrieval and adaptive generation capabilities. Your enterprise AI assistant will transform from a keyword-matching tool into an intelligent knowledge partner.