- Introduction to JIT Hybrid Graph RAG

- Setting Up Your Development Environment

- Prerequisites

- Installing LangChain

- Setting Up Neo4j

- Installing Additional Libraries

- Configuring Your Environment

- Verifying the Setup

- Summary

- Installing LangChain and Dependencies

- Prerequisites

- Installing LangChain

- Setting Up Neo4j

- Verifying the Setup

- Summary

- Setting Up NebulaGraph

- Prerequisites

- Installing NebulaGraph

- Accessing NebulaGraph

- Configuring NebulaGraph

- Integrating NebulaGraph with Your RAG System

- Configuring Neo4j for Hybrid RAG

- Setting Up Neo4j

- Configuring Neo4j for Optimal Performance

- Integrating Neo4j with LangChain

- Connecting to Neo4j

- Querying Neo4j

- Enhancing Retrieval with Rank Fusion

- Monitoring and Maintenance

- Summary

- Building the Knowledge Graph

- Step 1: Define Objectives

- Step 2: Gather and Categorize Data

- Step 3: Choose a Platform

- Step 4: Build an Initial Framework

- Step 5: Populate the Knowledge Graph

- Step 6: Define Relationships

- Step 7: Integrate with Other Systems

- Step 8: Implement Advanced Retrieval Techniques

- Step 9: Monitor and Maintain

- Example: Enterprise Knowledge Graph

- Conclusion

- Extracting Triples from Text Data

- Understanding Triples

- Methods for Extracting Triples

- Tools and Libraries

- Example Workflow

- Challenges and Best Practices

- Conclusion

- Loading Existing Knowledge Graphs

- Identifying and Preparing Data Sources

- Data Transformation and Cleaning

- Importing Data into Neo4j

- Optimizing Data for Performance

- Verifying Data Integrity

- Example: Importing DBpedia into Neo4j

- Conclusion

- Integrating Knowledge Graph with LangChain

- Setting Up LangChain

- Connecting to Neo4j

- Integrating LangChain with Neo4j

- Implementing GraphCypherQAChain

- Enhancing Retrieval with Vector Index

- Example Workflow

- Monitoring and Maintenance

- Conclusion

- Creating a Storage Context

- Understanding the Storage Requirements

- Choosing the Right Storage Solutions

- Setting Up Neo4j for Knowledge Graph Storage

- Configuring FAISS for Vector Storage

- Setting Up Elasticsearch for Keyword Search

- Integrating Storage Solutions

- Example Workflow

- Monitoring and Maintenance

- Conclusion

- Configuring the RetrieverQueryEngine

- Understanding the Role of the RetrieverQueryEngine

- Setting Up the RetrieverQueryEngine

- Configuring Vector Search

- Configuring Keyword Search

- Configuring Knowledge Graph Retrieval

- Implementing Result Fusion

- Example Workflow

- Monitoring and Maintenance

- Implementing the JIT Hybrid Retrieval

- Understanding JIT Hybrid Retrieval

- Setting Up the Development Environment

- Configuring the Retrieval Components

- Implementing Result Fusion

- Example Workflow

- Monitoring and Maintenance

- Combining Vector and Keyword Searches

- Understanding Vector and Keyword Searches

- Benefits of Combining Vector and Keyword Searches

- Implementing the Hybrid Search

- Example Workflow

- Monitoring and Maintenance

- Leveraging Graph Retrieval

- Testing and Optimizing Your JIT Hybrid Graph RAG

- Systematic Evaluation

- Testing Specific Stages of the RAG Pipeline

- Fine-Tuning and Optimization

- Monitoring and Maintenance

- Example: Evaluating RAG Performance

- Conclusion

- Running Initial Tests

- Setting Up the Test Environment

- Testing the Retrieval Component

- Testing the Generation Component

- Fine-Tuning Parameters

- Monitoring System Performance

- Example: Evaluating Initial Performance

- Summary

- Performance Optimization Techniques

- Memory Management

- Query Execution Optimization

- Data Indexing and Partitioning

- Load Balancing and Scalability

- Monitoring and Maintenance

- Example: Optimizing a JIT Hybrid Graph RAG System

- Conclusion and Future Directions

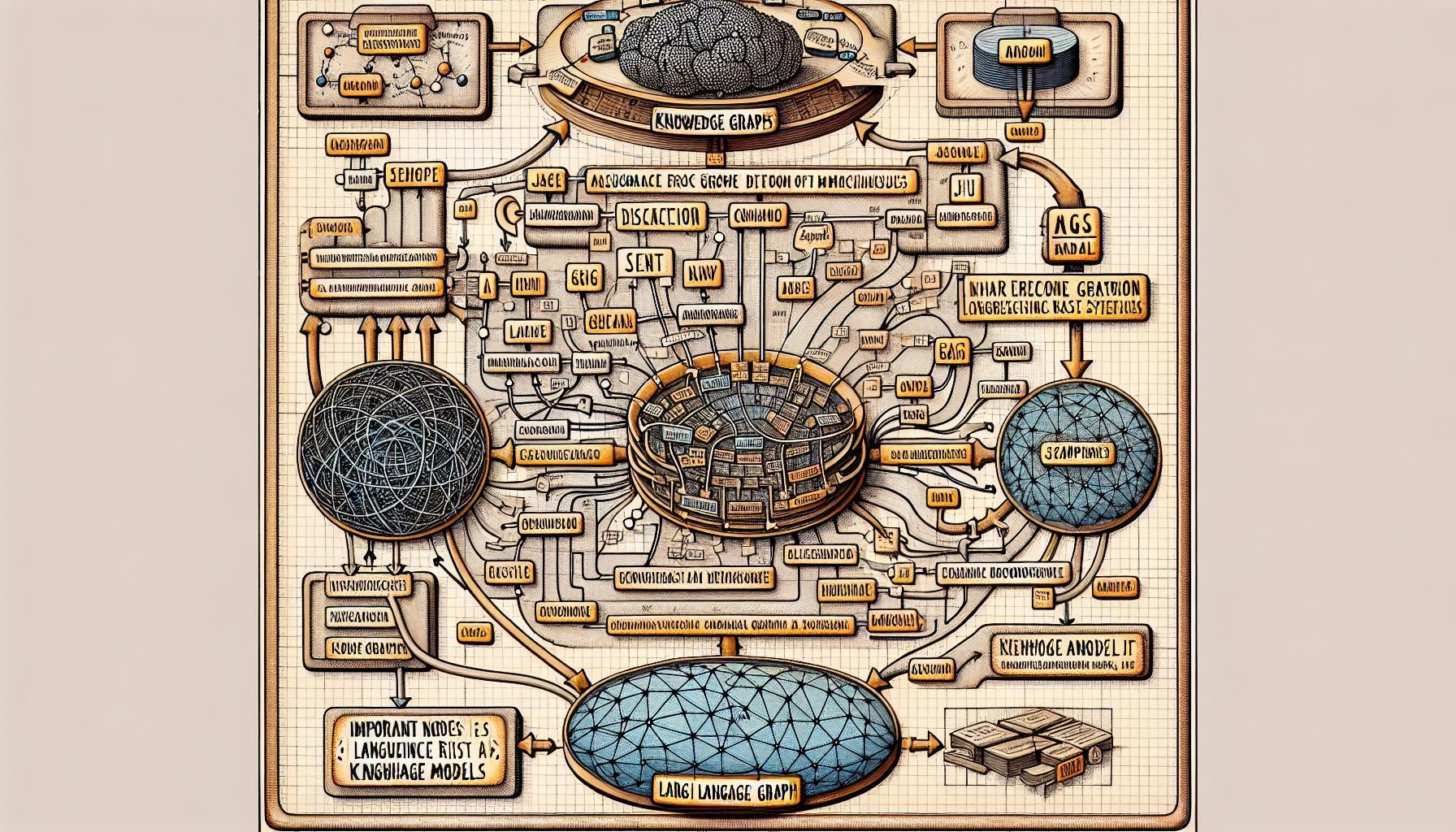

Introduction to JIT Hybrid Graph RAG

In the rapidly evolving landscape of AI and data-driven technologies, the demand for more accurate, context-aware, and efficient search mechanisms has never been higher. Traditional search engines often fall short when dealing with complex queries or specialized domains. This is where the concept of Just-In-Time (JIT) Hybrid Graph Retrieval-Augmented Generation (RAG) comes into play, offering a transformative approach to intelligent search and information retrieval.

JIT Hybrid Graph RAG combines the strengths of vector search, keyword search, and knowledge graph retrieval to deliver highly relevant and contextually rich responses. This hybrid approach leverages the structured nature of knowledge graphs to organize data as nodes and relationships, enabling more efficient and accurate retrieval of information. The integration of these diverse retrieval methods ensures that the system can handle a wide range of queries, from simple keyword searches to complex, context-dependent questions.

The architecture of a JIT Hybrid Graph RAG system typically begins with a user submitting a query. This query is then processed through a hybrid retrieval pipeline that integrates results from unstructured data searches (using vector and keyword indexes) and structured data searches (using knowledge graphs). The retrieval process can be further enhanced through reranking or rank fusion techniques, which prioritize the most relevant results. The combined results are then fed into a large language model (LLM) to generate a comprehensive and contextually accurate response.
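The flow above (several retrievers, a fusion step, then a prompt for the LLM) can be sketched in a few lines. This is a minimal illustration, not a reference implementation. It uses Reciprocal Rank Fusion (RRF), one common rank-fusion method, with its usual constant k=60. The retriever outputs, passage texts, and helper names are hypothetical placeholders. The LLM call itself is left out.

```python
from collections import defaultdict

def reciprocal_rank_fusion(ranked_lists, k=60):
    """Merge several ranked lists of document IDs with Reciprocal Rank Fusion (RRF).

    A document's fused score is the sum of 1 / (k + rank) over every list it
    appears in (ranks start at 1), so items ranked highly by several
    retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the order is deterministic
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

def build_prompt(query, fused_ids, passages, top_n=3):
    """Assemble an LLM prompt from the top-ranked passages."""
    context = "\n".join(f"- {passages[doc_id]}" for doc_id in fused_ids[:top_n])
    return f"Answer using only the context below.\n{context}\n\nQuestion: {query}"

# Hypothetical results from the three retrievers for one query
vector_hits = ["doc2", "doc1", "doc3"]   # semantic similarity
keyword_hits = ["doc1", "doc4"]          # exact term matches
graph_hits = ["doc1", "doc2"]            # documents linked to entities in the query

passages = {
    "doc1": "John Doe authored the indexing guide.",
    "doc2": "The indexing guide covers vector and keyword indexes.",
    "doc3": "Quarterly budget summary.",
    "doc4": "Indexing guide revision history.",
}

fused = reciprocal_rank_fusion([vector_hits, keyword_hits, graph_hits])
prompt = build_prompt("Who wrote the indexing guide?", fused, passages)
print(fused)  # → ['doc1', 'doc2', 'doc4', 'doc3']
```

RRF only uses ranks, so it needs no score normalization across retrievers whose scores live on different scales. The resulting prompt string would then be sent to whichever LLM the system uses.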

One of the key advantages of JIT Hybrid Graph RAG is its ability to provide deeper insights and more accurate answers by leveraging the rich contextual information stored in knowledge graphs. Unlike traditional RAG systems that rely solely on textual chunks, JIT Hybrid Graph RAG can provide structured entity information, combining textual descriptions with properties and relationships. This structured approach enhances the LLM’s understanding of specific terminology and domain-specific knowledge, resulting in more precise and contextually appropriate responses.
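To make "structured entity information" concrete, here is one way a graph entity could be turned into text for the LLM. It combines the entity's properties with its relationships. This is a sketch under assumptions: the entity data is hypothetical and would normally come from a graph query. The Cypher-like arrow notation is one formatting choice among many; plain sentences would work as well.

```python
def entity_to_context(name, properties, relationships):
    """Render one knowledge-graph entity as plain text for an LLM prompt.

    properties:    dict of property name -> value
    relationships: list of (relationship_type, target_name) pairs,
                   treated as outgoing edges from `name`
    """
    lines = [f"Entity: {name}"]
    for key, value in sorted(properties.items()):
        lines.append(f"  {key}: {value}")
    for rel_type, target in relationships:
        lines.append(f"  ({name})-[:{rel_type}]->({target})")
    return "\n".join(lines)

# Hypothetical entity data, as it might come back from a graph query
context = entity_to_context(
    "John Doe",
    {"title": "Data Engineer", "department": "Search"},
    [("WORKS_ON", "Project Atlas"), ("AUTHORED", "Indexing Guide")],
)
print(context)
```

A text chunk might only mention "John Doe" in passing. This block instead states his role and his links to other entities explicitly, which is the extra context a graph contributes.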

For instance, in enterprise search applications, JIT Hybrid Graph RAG can improve the accuracy and relevance of search results by integrating internal knowledge graphs with external data sources. Similarly, in document retrieval and knowledge discovery use cases, the hybrid approach helps users receive relevant, contextually rich information even when dealing with complex or specialized queries.

The implementation of JIT Hybrid Graph RAG requires careful planning and adherence to best practices. Key strategies include integrating diverse data sources, optimizing retrieval algorithms, and ensuring seamless interaction between the LLM and the knowledge graph. Additionally, leveraging tools and platforms that support hybrid retrieval and knowledge graph integration, such as Neo4j or Ontotext GraphDB, can streamline the development process and enhance the system’s overall performance.

In summary, JIT Hybrid Graph RAG represents a significant advancement in the field of intelligent search and information retrieval. By combining the strengths of vector search, keyword search, and knowledge graph retrieval, this approach delivers highly accurate, context-aware, and efficient search results. As organizations continue to grapple with increasing volumes of data and the demand for intelligent search capabilities, JIT Hybrid Graph RAG offers a powerful solution that can unlock new insights, drive innovation, and provide a competitive edge.

Setting Up Your Development Environment

To set up your development environment for building a JIT Hybrid Graph RAG system, you need to ensure that you have the right tools and libraries installed. This section will guide you through the necessary steps to get your environment ready, focusing on the essential components such as LangChain, Neo4j, and other supporting libraries.

Prerequisites

Before diving into the setup, make sure you have the following prerequisites:

- Python 3.9 or higher: Ensure that Python is installed on your system. You can download it from the official Python website. Recent LangChain releases no longer support Python 3.8.

- Jupyter Notebook: This is highly recommended for interactive development and debugging. Install it using pip:

```bash
pip install notebook
```

Installing LangChain

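LangChain is a framework for building LLM applications, including RAG systems. To install LangChain, run the following command:

```bash
pip install langchain
```

In recent releases, integrations ship as separate packages. For the Neo4j integration, also install langchain-neo4j (on older versions, the integration lives in langchain-community). For more detailed installation instructions, refer to the LangChain documentation.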

Setting Up Neo4j

Neo4j is a powerful graph database that will serve as the backbone for your knowledge graph. Follow these steps to set up Neo4j:

- Download and Install Neo4j: You can download the latest version of Neo4j from the official website. Follow the installation instructions for your operating system.

- Start Neo4j: Once installed, start the Neo4j server. You can do this via the Neo4j Desktop application or by running the following command in your terminal:

```bash
neo4j start
```

- Access Neo4j Browser: Open your web browser and navigate to http://localhost:7474. Log in with the default credentials (neo4j/neo4j) and change the password when prompted.

Installing Additional Libraries

To ensure seamless integration and functionality, you will need a few additional Python libraries:

- FAISS: This library is used for efficient similarity search and clustering of dense vectors. Install it using:

```bash
pip install faiss-cpu
```

- Streamlit: For building a user-friendly interface to interact with your RAG system, install Streamlit:

```bash
pip install streamlit
```

- Neo4j Python Driver: This driver allows your Python application to communicate with the Neo4j database:

```bash
pip install neo4j
```

Configuring Your Environment

After installing the necessary libraries, configure your environment to ensure everything works together smoothly:

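- Environment Variables: Set up environment variables for Neo4j and the other services you use. For example, you can add the following lines to your .bashrc or .zshrc file:

```bash
export NEO4J_URI='bolt://localhost:7687'
export NEO4J_USERNAME='neo4j'
export NEO4J_PASSWORD='your_password'
# Only needed for LangSmith tracing; LangChain itself does not require an API key
export LANGCHAIN_API_KEY='your_langchain_api_key'
```

LangChain's Neo4j integration reads NEO4J_URI, NEO4J_USERNAME, and NEO4J_PASSWORD by default, which is why the username variable uses that name. You will also need the API key for whichever LLM provider you use.

- Configuration Files: Create configuration files for your project. For instance, you might have a config.py file that stores your database connection settings and other configurations.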
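A minimal config.py along these lines might look like the sketch below. Settings and load_settings are hypothetical names. The sketch reads NEO4J_USERNAME and falls back to NEO4J_USER, so either spelling of the username variable works. It also raises immediately when the password is missing, rather than failing later at connection time.

```python
# config.py: a minimal sketch that reads connection settings from the environment
import os
from dataclasses import dataclass

@dataclass(frozen=True)
class Settings:
    neo4j_uri: str
    neo4j_username: str
    neo4j_password: str

def load_settings():
    """Build Settings from environment variables, failing fast on a missing password."""
    password = os.environ.get("NEO4J_PASSWORD")
    if not password:
        raise RuntimeError("NEO4J_PASSWORD is not set")
    return Settings(
        neo4j_uri=os.environ.get("NEO4J_URI", "bolt://localhost:7687"),
        # Accept either spelling of the username variable
        neo4j_username=os.environ.get("NEO4J_USERNAME") or os.environ.get("NEO4J_USER", "neo4j"),
        neo4j_password=password,
    )
```

Other modules can then call load_settings() once and pass settings.neo4j_uri and (settings.neo4j_username, settings.neo4j_password) to GraphDatabase.driver, instead of hard-coding credentials.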

Verifying the Setup

To verify that your environment is correctly set up, run a simple script that connects to Neo4j and performs a basic query. Here’s an example:

```python
from neo4j import GraphDatabase

uri = "bolt://localhost:7687"
user = "neo4j"
password = "your_password"

def test_connection():
    # The with-block closes the driver and its connection pool when done
    with GraphDatabase.driver(uri, auth=(user, password)) as driver:
        # Raises an exception if the server is unreachable or the credentials are wrong
        driver.verify_connectivity()
        with driver.session() as session:
            # RETURN 1 works even on an empty database, unlike MATCH (n) RETURN n
            record = session.run("RETURN 1 AS ok").single()
            print(record["ok"])

test_connection()
```

If the script prints 1 without raising an exception, Neo4j is reachable and your credentials work, so your environment is set up correctly.

Summary

Setting up your development environment for a JIT Hybrid Graph RAG system involves installing and configuring several key components, including Python, LangChain, Neo4j, and additional libraries like FAISS and Streamlit. By following the steps outlined above, you will be well-prepared to start building and experimenting with your RAG system, leveraging the powerful capabilities of hybrid retrieval and knowledge graph integration.


Setting Up NebulaGraph

To set up NebulaGraph for your JIT Hybrid Graph RAG system, follow these steps to ensure a smooth and efficient installation process. NebulaGraph is a highly performant graph database designed for handling large-scale graph data, making it an excellent choice for integrating with your RAG system.

Prerequisites

Before you begin, ensure that your system meets the following prerequisites:

- Operating System: The NebulaGraph server runs natively on Linux, which is also the recommended platform for performance. On macOS and Windows, run it through Docker as described below.

- Docker: While NebulaGraph can be installed directly, using Docker simplifies the setup process. Ensure Docker is installed on your system. You can download it from the official Docker website.

Installing NebulaGraph

NebulaGraph can be installed using Docker, which provides a containerized environment for easy deployment and management. Follow these steps to install NebulaGraph using Docker:

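- Pull the NebulaGraph Docker Images: A NebulaGraph cluster consists of three services (metad, storaged, and graphd), and each is published as its own image. Open your terminal and pull them. Docker Compose will also pull them automatically on first start:

```bash
docker pull vesoft/nebula-metad:latest
docker pull vesoft/nebula-storaged:latest
docker pull vesoft/nebula-graphd:latest
```

- Start NebulaGraph Services: Use Docker Compose to start the NebulaGraph services. Create a docker-compose.yml file with the following content. It is a minimal single-node layout in which every service is pointed at the meta service through --meta_server_addrs. For anything beyond local experiments, the official nebula-docker-compose repository is a better starting point:

```yaml
version: '3.4'
services:
  metad0:
    image: vesoft/nebula-metad:latest
    environment:
      USER: root
      TZ: UTC
    command:
      - --meta_server_addrs=metad0:9559
      - --local_ip=metad0
      - --ws_ip=metad0
      - --port=9559
      - --data_path=/data/meta
    volumes:
      - ./data/meta0:/data/meta
    networks:
      - nebula-net

  storaged0:
    image: vesoft/nebula-storaged:latest
    environment:
      USER: root
      TZ: UTC
    command:
      - --meta_server_addrs=metad0:9559
      - --local_ip=storaged0
      - --ws_ip=storaged0
      - --port=9779
      - --data_path=/data/storage
    depends_on:
      - metad0
    volumes:
      - ./data/storage0:/data/storage
    networks:
      - nebula-net

  graphd:
    image: vesoft/nebula-graphd:latest
    environment:
      USER: root
      TZ: UTC
    command:
      - --meta_server_addrs=metad0:9559
      - --local_ip=graphd
      - --ws_ip=graphd
      - --port=9669
    ports:
      - 9669:9669
    depends_on:
      - metad0
      - storaged0
    networks:
      - nebula-net

networks:
  nebula-net:
```

Run the following command to start the services:

```bash
docker-compose up -d
```

- Verify Installation: Ensure that the NebulaGraph services are running correctly by checking the Docker containers:

```bash
docker ps
```

You should see the metad0, storaged0, and graphd services running.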

Accessing NebulaGraph

Once the services are up and running, you can access NebulaGraph using the Nebula Console, a command-line tool for interacting with the database.

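- Download Nebula Console: Download the Nebula Console binary for your platform from the official NebulaGraph GitHub repository (vesoft-inc/nebula-console). Choose a release that matches your server's major version.

- Connect to NebulaGraph: Use the Nebula Console to connect to your NebulaGraph instance. Run the following command, replacing 127.0.0.1 with your server's IP address if necessary:

```bash
./nebula-console -addr 127.0.0.1 -port 9669 -u root -p nebula
```

Authentication is disabled by default, so the built-in root user is accepted with any password.

Configuring NebulaGraph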

To ensure optimal performance and integration with your JIT Hybrid Graph RAG system, configure NebulaGraph according to your specific requirements.

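- Register the Storage Host: In NebulaGraph 3.x, storage services must be added to the cluster before you can create a graph space. Run this once, then check that the host is listed as ONLINE:

```
ADD HOSTS "storaged0":9779;
SHOW HOSTS;
```

- Create a Graph Space: A graph space in NebulaGraph is a logical namespace for your graph data. In NebulaGraph 3.x, the vertex ID type must be declared when the space is created:

```
CREATE SPACE my_space(partition_num=10, replica_factor=1, vid_type=FIXED_STRING(32));
```

- Use the Graph Space: Space and schema changes are applied asynchronously. Wait for one heartbeat cycle (10 seconds by default) before switching to the newly created graph space:

```
USE my_space;
```

- Define Schema: Define the schema for your graph data, including vertex tags and edge types. For example:

```
CREATE TAG person(name string, age int);
CREATE EDGE knows(start_date string);
```

Integrating NebulaGraph with Your RAG System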

To integrate NebulaGraph with your JIT Hybrid Graph RAG system, use the NebulaGraph Python client to interact with the database from your Python application.

- Install NebulaGraph Python Client: Install the client using pip. Use nebula3-python for NebulaGraph 3.x servers, because the older nebula2-python package only works with 2.x:

```bash
pip install nebula3-python
```

- Connect to NebulaGraph: Use the following code to connect to NebulaGraph and perform basic operations:

```python
from nebula3.gclient.net import ConnectionPool
from nebula3.Config import Config

config = Config()
config.max_connection_pool_size = 10

connection_pool = ConnectionPool()
# init() returns False if the graphd address cannot be reached
assert connection_pool.init([("127.0.0.1", 9669)], config)

# root is the default user; any password works while authentication is disabled
session = connection_pool.get_session("root", "nebula")
try:
    result = session.execute("USE my_space;")
    print(result.is_succeeded(), result.error_msg())
finally:
    # Return the session and shut down the pool's connections
    session.release()
    connection_pool.close()
```

By following these steps, you will have a working NebulaGraph setup that your JIT Hybrid Graph RAG system can query from Python, giving it scalable storage for graph data.
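With a session open, you can load data for the person tag and the knows edge type defined above using nGQL INSERT statements. The sketch below only builds those statements from Python data, so you can inspect them without a running server. The function names and sample data are hypothetical. Vertex IDs are quoted as strings, on the assumption that the space uses a FIXED_STRING vid_type.

```python
def ngql_string(value):
    """Return value as a double-quoted nGQL string literal with backslashes and quotes escaped."""
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'

def person_graph_to_ngql(people, knows):
    """Build INSERT statements for the person tag and knows edge type.

    people: dict mapping vertex ID -> (name, age)
    knows:  list of (src_id, dst_id, start_date) tuples
    """
    statements = []
    for vid, (name, age) in people.items():
        statements.append(
            f"INSERT VERTEX person(name, age) VALUES {ngql_string(vid)}:({ngql_string(name)}, {int(age)});"
        )
    for src, dst, start_date in knows:
        statements.append(
            f"INSERT EDGE knows(start_date) VALUES {ngql_string(src)}->{ngql_string(dst)}:({ngql_string(start_date)});"
        )
    return statements

# Hypothetical sample data
statements = person_graph_to_ngql(
    {"p1": ("Alice", 30), "p2": ("Bob", 25)},
    [("p1", "p2", "2020-01-01")],
)
for stmt in statements:
    print(stmt)  # with a live session: result = session.execute(stmt)
```

Escaping quotes matters as soon as names come from extracted text rather than hand-written samples. For large loads, many rows can be combined into one INSERT statement to reduce round trips.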

Configuring Neo4j for Hybrid RAG

Configuring Neo4j for a JIT Hybrid Graph RAG system is a critical step to ensure efficient and accurate information retrieval. Neo4j, a robust graph database, provides the necessary infrastructure to manage and query complex relationships within your data. This section will guide you through the essential configurations and best practices to optimize Neo4j for your hybrid retrieval-augmented generation system.

Setting Up Neo4j

To begin, ensure that Neo4j is properly installed and running on your system. Follow these steps to set up Neo4j:

- Download and Install Neo4j: Obtain the latest version of Neo4j from the official website. Follow the installation instructions specific to your operating system.

- Start Neo4j: After installation, start the Neo4j server. This can be done via the Neo4j Desktop application or by executing the following command in your terminal:

```bash
neo4j start
```

- Access Neo4j Browser: Open your web browser and navigate to http://localhost:7474. Log in with the default credentials (neo4j/neo4j) and change the password when prompted.

Configuring Neo4j for Optimal Performance

To ensure Neo4j operates efficiently within your JIT Hybrid Graph RAG system, consider the following configurations:

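- Memory Configuration: Adjust the memory settings in the neo4j.conf file to allocate sufficient resources for your database. For example, in Neo4j 5:

```
server.memory.heap.initial_size=2G
server.memory.heap.max_size=4G
server.memory.pagecache.size=2G
```

These settings allocate 2GB of initial heap memory, 4GB of maximum heap memory, and 2GB for the page cache. Neo4j 4.x uses the older names dbms.memory.heap.initial_size, dbms.memory.heap.max_size, and dbms.memory.pagecache.size. In Neo4j 5, the neo4j-admin server memory-recommendation command suggests values for your machine.

- Indexing: Create indexes on frequently queried properties to speed up search operations. For instance:

```cypher
CREATE INDEX employee_name IF NOT EXISTS FOR (e:Employee) ON (e.name);
CREATE INDEX document_title IF NOT EXISTS FOR (d:Document) ON (d.title);
```

Indexes improve query performance because Neo4j can look up matching nodes directly instead of scanning every node with the label. The older CREATE INDEX ON :Label(property) syntax was removed in Neo4j 5.

- Schema Design: Design your schema to reflect the relationships and entities relevant to your domain. For example, in an enterprise search application, you might have nodes representing Employee, Project, and Document, with relationships like WORKS_ON and AUTHORED.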
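You can write the schema above from Python with a single parameterized query. The sketch assumes the 5.x Neo4j Python driver, whose Driver.execute_query() accepts parameters_ and database_ keyword arguments. The function name, the row format, and the default database name "neo4j" are illustrative choices. Passing rows as the $rows parameter, rather than formatting values into the Cypher text, avoids injection problems and lets Neo4j reuse the query plan.

```python
# Parameterized Cypher: values travel as $rows and are never concatenated into the query text
AUTHORED_QUERY = """
UNWIND $rows AS row
MERGE (e:Employee {name: row.employee})
MERGE (d:Document {title: row.title})
MERGE (e)-[:AUTHORED]->(d)
"""

def load_authored(driver, rows, database="neo4j"):
    """Create Employee and Document nodes plus AUTHORED edges from a list of dicts.

    `driver` is assumed to be a neo4j.Driver (5.x API). Each row needs
    "employee" and "title" keys. Returns the number of rows sent.
    """
    driver.execute_query(AUTHORED_QUERY, parameters_={"rows": rows}, database_=database)
    return len(rows)

# Usage with a real driver (requires a running Neo4j instance):
# with GraphDatabase.driver(uri, auth=(user, password)) as driver:
#     load_authored(driver, [{"employee": "John Doe", "title": "Indexing Guide"}])
```

WORKS_ON edges between Employee and Project nodes can be loaded with the same UNWIND ... MERGE pattern. The indexes created above keep the MERGE lookups fast.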

Integrating Neo4j with LangChain

LangChain is a crucial library for building RAG systems, and integrating it with Neo4j enhances the hybrid retrieval capabilities. Follow these steps to integrate Neo4j with LangChain:

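- Install LangChain: Use pip to install LangChain together with its Neo4j integration package. On older LangChain versions, the integration lives in langchain-community instead:

```bash
pip install langchain langchain-neo4j
```

- Install Neo4j Python Driver: This driver allows your Python application to communicate with the Neo4j database:

```bash
pip install neo4j
```

Connecting to Neo4j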

Establish a connection to Neo4j from your Python application using the Neo4j Python driver. Here’s an example script to test the connection:

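```python
from neo4j import GraphDatabase

uri = "bolt://localhost:7687"
user = "neo4j"
password = "your_password"

def test_connection():
    # The with-block closes the driver and its connection pool when done
    with GraphDatabase.driver(uri, auth=(user, password)) as driver:
        # Raises an exception if the server is unreachable or the credentials are wrong
        driver.verify_connectivity()
        with driver.session() as session:
            # RETURN 1 works even on an empty database
            record = session.run("RETURN 1 AS ok").single()
            print(record["ok"])

test_connection()
```

Querying Neo4j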

To leverage Neo4j’s capabilities within your JIT Hybrid Graph RAG system, you need queries that return structured context to complement the unstructured results from vector and keyword search. Here’s an example of a Cypher query that retrieves documents authored by a specific employee:

```cypher
MATCH (e:Employee {name: 'John Doe'})-[:AUTHORED]->(d:Document)
RETURN d.title, d.content
```

Enhancing Retrieval with Rank Fusion

Rank fusion techniques can be employed to combine results from Neo4j with those from vector and keyword searches. This involves assigning weights to different retrieval methods and merging the results to prioritize the most relevant information. For example, you might use a weighted sum approach to combine scores from Neo4j and vector search results.
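A weighted sum only makes sense once each retriever's scores are on a comparable scale. The sketch below therefore min-max normalizes each retriever's scores to [0, 1] before applying the weights. The normalization method, the weights, and the sample scores are all assumptions chosen for illustration.

```python
def min_max(scores):
    """Rescale one retriever's {doc_id: score} dict into [0, 1]."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # All scores equal: treat every hit as fully relevant for this retriever
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in scores.items()}

def weighted_sum_fusion(results, weights):
    """Fuse scores as sum(weight * normalized score) across retrievers.

    results: {retriever_name: {doc_id: raw_score}}
    weights: {retriever_name: weight}
    A document a retriever did not return contributes 0 for that retriever.
    Returns (doc_id, fused_score) pairs, best first.
    """
    fused = {}
    for name, scores in results.items():
        for doc_id, score in min_max(scores).items():
            fused[doc_id] = fused.get(doc_id, 0.0) + weights.get(name, 0.0) * score
    return sorted(fused.items(), key=lambda item: (-item[1], item[0]))

# Hypothetical raw scores: graph scores on an arbitrary scale, vector scores as cosine similarity
results = {
    "graph": {"docA": 3.0, "docB": 1.0},
    "vector": {"docB": 0.92, "docC": 0.80, "docA": 0.75},
}
weights = {"graph": 0.4, "vector": 0.6}
print(weighted_sum_fusion(results, weights))
```

Min-max scaling maps each retriever's weakest hit to 0. In this example, docA gets no credit from the vector retriever even though that retriever returned it. The weights and normalization are therefore worth tuning against real queries, and rank-based fusion is an alternative when scores are hard to compare.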

Monitoring and Maintenance

Regular monitoring and maintenance are essential to ensure the ongoing performance and reliability of your Neo4j database. Utilize Neo4j’s built-in monitoring tools and consider setting up alerts for critical metrics such as memory usage, query performance, and disk space.

Summary

Configuring Neo4j for a JIT Hybrid Graph RAG system involves setting up the database, optimizing performance through memory and indexing configurations, and integrating it with LangChain for hybrid retrieval. By following these best practices, you can ensure that your Neo4j database operates efficiently, providing accurate and contextually rich responses to complex queries. This setup not only enhances the performance of your RAG system but also unlocks deeper insights and more precise information retrieval, driving innovation and providing a competitive edge in your domain.

Building the Knowledge Graph

Building a knowledge graph is a multifaceted process that requires careful planning, structured methodologies, and the right tools. This section will guide you through the essential steps to build a robust and efficient knowledge graph, leveraging best practices and advanced technologies to ensure optimal performance and accuracy.

Step 1: Define Objectives

The first step in building a knowledge graph is to clearly define your objectives. Understanding the specific goals and use cases for your knowledge graph will guide the entire development process. Whether you aim to improve internal search capabilities, enhance customer support, or drive data-driven decision-making, having well-defined objectives is crucial.

Step 2: Gather and Categorize Data

Data is the backbone of any knowledge graph. Start by identifying all the relevant data sources within your organization. This can include databases, documents, spreadsheets, and even unstructured data from emails or social media. Categorize this data into broad classes, such as people, events, places, resources, and documents. This categorization will help in structuring the knowledge graph and defining relationships between entities.

Step 3: Choose a Platform

Selecting the right platform is critical for building and managing your knowledge graph. Most knowledge graph platforms are built over a Graph Database Management System (DBMS). Popular choices include Neo4j, Stardog, and Ontotext GraphDB. These platforms offer robust features for data modeling, querying, and visualization, making them ideal for complex knowledge graph projects.

Step 4: Build an Initial Framework

Creating an initial framework or scaffold is the next step. This framework can range from a simple tree of labels to a fully-fledged ontology. Leverage existing domain ontologies and taxonomies where possible to inform the creation of concepts in your knowledge graph. For example, Princeton University’s WordNet can provide a comprehensive framework of concepts that can be customized to fit your specific needs.

Step 5: Populate the Knowledge Graph

Once the framework is in place, begin populating the knowledge graph with data. This involves bulk loading of concepts and entities, which typically requires the expertise of a software developer. Use your knowledge graph platform’s SDK or API to automate this process. Ensure that you have access to existing databases and knowledge bases for seamless data extraction.
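Bulk loading is usually done in batches. One transaction per entity is slow, while a single transaction for millions of entities can exhaust memory. Here is a minimal, platform-neutral sketch. write_batch is a hypothetical callable standing in for whatever your platform's SDK or driver uses to write a list of rows (for Neo4j, typically an UNWIND $rows ... MERGE query). The batch size of 1000 is only a starting point to tune.

```python
from itertools import islice

def batched(rows, size):
    """Yield lists of at most `size` rows from any iterable."""
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch

def bulk_load(write_batch, rows, batch_size=1000):
    """Send rows to the knowledge graph in fixed-size batches.

    write_batch: callable that writes one list of rows in a single transaction
    Returns the number of batches written.
    """
    count = 0
    for batch in batched(rows, batch_size):
        write_batch(batch)
        count += 1
    return count
```

Because rows can be any iterable, including a generator reading from a database cursor, the full dataset never has to fit in memory at once.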

Step 6: Define Relationships

Defining relationships between entities is a crucial step in building a knowledge graph. Relationships (or edges) represent the links between nodes (entities) and are stored in the database with a name and direction. For example, in a corporate knowledge graph, you might have relationships like “WORKS_ON” between an employee and a project or “AUTHORED” between an employee and a document.
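Because relationships are stored with a name and a direction, creating one from code means choosing both explicitly. The sketch below builds a Cypher MERGE for a directed relationship. The whitelist contents and the id property used to match nodes are hypothetical. The relationship type is checked against a whitelist instead of being passed as a parameter, because many Neo4j versions do not accept relationship types as query parameters.

```python
# Hypothetical set of relationship types for the corporate knowledge graph
ALLOWED_REL_TYPES = {"WORKS_ON", "AUTHORED", "BELONGS_TO"}

def merge_relationship_query(rel_type):
    """Return a Cypher MERGE that creates one directed, named relationship.

    Node keys travel as parameters ($src, $dst); the relationship type is
    validated before it is placed in the query text.
    """
    if rel_type not in ALLOWED_REL_TYPES:
        raise ValueError(f"unsupported relationship type: {rel_type!r}")
    return (
        "MATCH (a {id: $src}), (b {id: $dst}) "
        f"MERGE (a)-[r:{rel_type}]->(b) "
        "RETURN type(r) AS rel"
    )

print(merge_relationship_query("AUTHORED"))
```

The direction is fixed by the arrow in the pattern, so (employee)-[:AUTHORED]->(document) and the reverse are different relationships. The whitelist keeps the relationship types consistent across everyone who writes to the graph.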

Step 7: Integrate with Other Systems

To maximize the utility of your knowledge graph, integrate it with other systems and data sources. This can include internal databases, external APIs, and even other knowledge graphs. Integration ensures that your knowledge graph remains up-to-date and provides a comprehensive view of the data landscape.

Step 8: Implement Advanced Retrieval Techniques

Enhance the retrieval capabilities of your knowledge graph by implementing advanced techniques such as rank fusion and reranking. These techniques prioritize the most relevant results by combining scores from different retrieval methods, such as vector search, keyword search, and knowledge graph retrieval. This hybrid approach ensures that users receive the most accurate and contextually rich responses.

Step 9: Monitor and Maintain

Regular monitoring and maintenance are essential to ensure the ongoing performance and reliability of your knowledge graph. Utilize built-in monitoring tools provided by your knowledge graph platform and set up alerts for critical metrics such as memory usage, query performance, and data integrity. Regularly update the knowledge graph to incorporate new data and refine existing relationships.

Example: Enterprise Knowledge Graph

Consider an enterprise knowledge graph designed to improve internal search capabilities. The graph might include nodes representing employees, projects, documents, and departments, with relationships like “WORKS_ON,” “AUTHORED,” and “BELONGS_TO.” By integrating internal databases and external data sources, the knowledge graph can provide a comprehensive view of the organization’s data, enabling more accurate and efficient search results.
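A toy, in-memory version of this enterprise graph shows the kind of multi-hop question it answers: "which documents were written by people on a given project?" The people, projects, and documents below are hypothetical. A real deployment would run the equivalent Cypher (shown in a comment) against the graph database.

```python
from collections import defaultdict

# Hypothetical triples from the enterprise graph described above
triples = [
    ("Alice", "WORKS_ON", "Project Atlas"),
    ("Bob", "WORKS_ON", "Project Atlas"),
    ("Carol", "WORKS_ON", "Project Zephyr"),
    ("Alice", "AUTHORED", "Indexing Guide"),
    ("Bob", "AUTHORED", "Ranking Notes"),
    ("Carol", "AUTHORED", "Budget Plan"),
    ("Alice", "BELONGS_TO", "Search Team"),
]

# Index outgoing edges by (subject, relationship) and incoming edges by (relationship, object)
out_edges = defaultdict(set)
in_edges = defaultdict(set)
for s, p, o in triples:
    out_edges[(s, p)].add(o)
    in_edges[(p, o)].add(s)

def documents_for_project(project):
    """Two-hop traversal: project <-WORKS_ON- employee -AUTHORED-> document.

    Cypher equivalent:
    MATCH (:Project {name: $p})<-[:WORKS_ON]-(:Employee)-[:AUTHORED]->(d:Document)
    RETURN DISTINCT d.title
    """
    docs = set()
    for employee in in_edges[("WORKS_ON", project)]:
        docs |= out_edges[(employee, "AUTHORED")]
    return sorted(docs)

print(documents_for_project("Project Atlas"))  # → ['Indexing Guide', 'Ranking Notes']
```

A keyword search for "Project Atlas" would miss both documents unless they mention the project by name. Following the relationships finds them regardless of wording.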

Conclusion

Building a knowledge graph is a complex but rewarding endeavor that can significantly enhance information retrieval and data-driven decision-making within an organization. By following the steps outlined above and leveraging advanced technologies and best practices, you can create a robust and efficient knowledge graph that meets your specific objectives and drives innovation.

Extracting Triples from Text Data

Extracting triples from text data is a fundamental process in building a knowledge graph, as it transforms unstructured text into structured data that can be easily queried and analyzed. Triples, which consist of a subject, predicate, and object, form the basic building blocks of a knowledge graph. This section will guide you through the methodologies and tools for extracting triples from text data, ensuring accuracy and efficiency in your knowledge graph construction.

Understanding Triples

A triple is a data structure that represents a fact in the form of a subject-predicate-object expression. For example, in the sentence “John Doe authored the document,” the triple would be (John Doe, authored, document). This simple yet powerful structure allows for the representation of complex relationships and hierarchies within a knowledge graph.

Methods for Extracting Triples

There are several methods for extracting triples from text data, each with its own advantages and use cases. These methods can be broadly categorized into rule-based approaches, machine learning-based approaches, and hybrid approaches.

Rule-Based Approaches

Rule-based approaches rely on predefined linguistic rules and patterns to identify and extract triples from text. These methods are highly precise but can be limited by the complexity and variability of natural language. Common techniques include:

- Regular Expressions: Use regular expressions to identify specific patterns in the text. For example, a regular expression can be designed to capture sentences that follow a “subject-verb-object” structure.

- Dependency Parsing: Analyze the grammatical structure of a sentence to identify relationships between words. Dependency parsers like SpaCy can be used to extract triples based on syntactic dependencies.
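A deliberately narrow regular-expression extractor illustrates the trade-off described above. The pattern is a hypothetical example. It assumes sentences of the form "<Capitalized Name> <regular past-tense verb> <object>." Every match is a clean triple, but passive voice, irregular verbs such as "wrote", and lowercase subjects are simply missed.

```python
import re

# Narrow pattern: "<Capitalized Name> <verb ending in -ed> <object>."
SVO_PATTERN = re.compile(
    r"(?P<subject>[A-Z][a-z]+(?: [A-Z][a-z]+)*) "  # one or more capitalized words
    r"(?P<predicate>\w+ed) "                         # a regular past-tense verb
    r"(?P<object>[\w ]+?)\."                         # everything up to the period
)

def extract_triples(text):
    """Return (subject, predicate, object) tuples for every sentence the pattern matches."""
    return [
        (m["subject"], m["predicate"], m["object"])
        for m in SVO_PATTERN.finditer(text)
    ]

print(extract_triples("John Doe authored the document. Mary Smith reviewed a draft."))
# → [('John Doe', 'authored', 'the document'), ('Mary Smith', 'reviewed', 'a draft')]
```

Dependency parsing removes much of this brittleness, because it relies on grammatical roles rather than surface word order.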

Machine Learning-Based Approaches

Machine learning-based approaches leverage algorithms and models to automatically learn patterns and relationships from annotated training data. These methods are more flexible and can handle a wider range of linguistic variations. Key techniques include:

- Named Entity Recognition (NER): Identify and classify entities in the text, such as people, organizations, and locations. NER models can be trained using libraries like NLTK or Stanford NER.

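- Relation Extraction: Use supervised or unsupervised learning to identify relationships between entities. Transformer encoders such as BERT, fine-tuned for relation classification, and toolkits such as OpenNRE can be used to extract triples. Accuracy depends heavily on how closely the training data matches your domain.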

Hybrid Approaches

Hybrid approaches combine rule-based and machine learning-based methods to leverage the strengths of both. These approaches can achieve higher accuracy and robustness by using rules to handle straightforward cases and machine learning models to address more complex scenarios.
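Here is a minimal sketch of that division of labor. A high-precision rule handles the sentences it recognizes, and everything else goes to a learned model. The rule shown and the model_extract placeholder are hypothetical. In practice, model_extract would call a trained relation-extraction model.

```python
import re

# High-precision rule: only "<Name> authored <object>." sentences
AUTHORED_RULE = re.compile(r"^(?P<subject>[A-Z][\w ]*?) authored (?P<object>.+?)\.$")

def rule_extract(sentence):
    """Return a triple when the rule fires, else an empty list."""
    m = AUTHORED_RULE.match(sentence)
    return [(m["subject"], "authored", m["object"])] if m else []

def model_extract(sentence):
    """Hypothetical placeholder for a trained relation-extraction model."""
    return []  # replace with a call to your model

def hybrid_extract(sentence, rules=rule_extract, model=model_extract):
    """Apply the rules first; only sentences they cannot handle go to the model."""
    triples = rules(sentence)
    if triples:
        return triples, "rules"
    return model(sentence), "model"
```

Returning which path produced each triple makes it easy to measure precision separately for rules and model, and to decide where a new rule would pay off.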

Tools and Libraries

Several tools and libraries are available to facilitate the extraction of triples from text data. These tools offer various functionalities, from basic text processing to advanced machine learning models.

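- SpaCy: A popular NLP library that provides pre-trained pipelines for dependency parsing and NER. It does not ship a pre-trained relation extractor, but its rule-based matchers and trainable custom components let you build one on top of the parse. SpaCy’s intuitive API makes it easy to integrate into your knowledge graph pipeline.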

- Stanford CoreNLP: A comprehensive suite of NLP tools that includes dependency parsing, NER, and relation extraction. CoreNLP is highly customizable and supports multiple languages.

- OpenNRE: An open-source toolkit for relation extraction that supports various neural network models. OpenNRE is designed for ease of use and can be integrated with other NLP libraries.

Example Workflow

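To illustrate the process of extracting triples from text data, consider the following example workflow using SpaCy. It assumes the small English model has been installed with python -m spacy download en_core_web_sm.

- Load the Text Data: Load the text data from your source, such as a document or database.

```python
import spacy

nlp = spacy.load("en_core_web_sm")
text = "John Doe authored the document."
doc = nlp(text)
```

- Perform Dependency Parsing: Use SpaCy to parse the text and identify dependencies.

```python
for token in doc:
    print(f"{token.text} -> {token.dep_} -> {token.head.text}")
```

- Extract Entities and Relationships: Identify entities and their relationships based on dependency labels. The subject token is typically only the head word (for "John Doe", the nsubj token is "Doe"). The code therefore expands each head token to the phrase it governs, and it checks that all three parts were found before building the triple.

```python
def span_text(token):
    # Expand a head token to its full phrase, e.g. "Doe" -> "John Doe"
    return token.doc[token.left_edge.i : token.right_edge.i + 1].text

subjects = [t for t in doc if t.dep_ == "nsubj"]
predicates = [t for t in doc if t.dep_ == "ROOT"]
objects = [t for t in doc if t.dep_ == "dobj"]

if subjects and predicates and objects:
    triple = (span_text(subjects[0]), predicates[0].text, span_text(objects[0]))
    print(triple)
else:
    print("No subject-verb-object pattern found")
```

Challenges and Best Practices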

Extracting triples from text data presents several challenges, including handling ambiguous language, managing large volumes of data, and ensuring accuracy. To address these challenges, consider the following best practices:

- Data Preprocessing: Clean and preprocess your text data to remove noise and standardize formats. This can significantly improve the accuracy of your extraction methods.

- Model Training: Train your machine learning models on domain-specific data to enhance their performance. Use transfer learning techniques to leverage pre-trained models and fine-tune them for your specific use case.

- Evaluation and Validation: Regularly evaluate and validate your extraction methods using annotated datasets. Measure precision, recall, and F1-score to ensure the quality of your extracted triples.
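For the evaluation step, here is a minimal sketch of precision, recall, and F1 for extracted triples. It assumes exact matching of whole (subject, relation, object) tuples against a gold set. The example data is made up. Lenient schemes that give credit for partial matches are also common.

```python
def evaluate_triples(predicted, gold):
    """Exact-match precision, recall and F1 between predicted and gold triples."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


gold = {("John Doe", "AUTHORED", "Report A"), ("Jane Roe", "WORKS_ON", "Project X")}
predicted = {("John Doe", "AUTHORED", "Report A"), ("Jane Roe", "AUTHORED", "Report A")}
print(evaluate_triples(predicted, gold))  # (0.5, 0.5, 0.5)
```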

Conclusion

Extracting triples from text data is a critical step in building a knowledge graph, because it turns unstructured text into structured, queryable data. You can achieve accurate and efficient triple extraction by combining rule-based, machine learning-based, and hybrid approaches. Tools such as spaCy, Stanford CoreNLP, and OpenNRE support this work, and validation against annotated data keeps it honest. The quality of the extracted triples directly determines the quality of the knowledge graph and, in turn, the precision of information retrieval.

Loading Existing Knowledge Graphs

Loading existing knowledge graphs into your JIT Hybrid Graph RAG system lets it draw on pre-existing structured data instead of building everything from scratch. The process involves importing data from various sources, ensuring compatibility with your graph database, and optimizing the data for efficient retrieval and query performance. The steps are outlined below.

Identifying and Preparing Data Sources

The first step in loading existing knowledge graphs is to identify the data sources you intend to import. These sources can include publicly available knowledge graphs and proprietary datasets within your organization. Public examples include DBpedia, Wikidata, and Freebase (shut down in 2016, though its data dumps remain available). Ensure that the data is in a format your import tooling supports, such as RDF, CSV, or JSON, and that it can be mapped onto the schema of your graph database.

Data Transformation and Cleaning

Before importing the data, it’s essential to transform and clean it to ensure consistency and accuracy. This involves:

- Schema Mapping: Align the schema of the existing knowledge graph with your target graph database schema. This may involve renaming properties, converting data types, and restructuring relationships.

- Data Cleaning: Remove duplicates, correct errors, and standardize formats. Tools like OpenRefine can be invaluable for this task.

- Normalization: Normalize the data to eliminate redundancy and ensure that each entity and relationship is uniquely represented.
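Here is a small sketch of schema mapping, cleaning, and de-duplication in plain Python, as a scripted alternative to interactive tools like OpenRefine. `PROPERTY_MAP` and the sample records are hypothetical. The cleaning step only collapses whitespace and applies Unicode NFC normalization, and de-duplication keeps the first record for each `id`.

```python
import unicodedata

# Hypothetical mapping from source property names to the target graph schema
PROPERTY_MAP = {"label": "name", "category": "type", "identifier": "id"}


def clean_record(record):
    """Rename properties, collapse whitespace and apply Unicode NFC normalization."""
    cleaned = {}
    for key, value in record.items():
        target_key = PROPERTY_MAP.get(key, key)
        if isinstance(value, str):
            value = unicodedata.normalize("NFC", " ".join(value.split()))
        cleaned[target_key] = value
    return cleaned


def deduplicate(records, key="id"):
    """Keep the first record seen for each key value."""
    seen, unique = set(), []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


raw = [
    {"identifier": "e1", "label": "  Acme   Corp ", "category": "Organization"},
    {"identifier": "e1", "label": "Acme Corp", "category": "Organization"},
    {"identifier": "e2", "label": "John Doe", "category": "Person"},
]
rows = deduplicate([clean_record(r) for r in raw])
print(rows)
```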

Importing Data into Neo4j

Neo4j, a popular graph database, provides several methods for importing data, including the use of Cypher queries, the Neo4j Import Tool, and the APOC library. Here’s a step-by-step guide to importing data using these methods:

Using Cypher Queries

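Cypher, Neo4j’s query language, supports flexible data import through its LOAD CSV clause. Here’s an example of how to import data from a CSV file:

```cypher
LOAD CSV WITH HEADERS FROM 'file:///path/to/your/data.csv' AS row
MERGE (n:Entity {id: row.id})
SET n.name = row.name, n.type = row.type;
```

This query reads each row of the CSV file and creates one `Entity` node per `id`, setting its `name` and `type` properties. Using `MERGE` on the `id` instead of `CREATE` keeps the import idempotent, so re-running it does not duplicate nodes. By default, Neo4j resolves `file:///` URLs relative to its import directory, so place the file there.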

Using the Neo4j Import Tool

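The Neo4j import tool (`neo4j-admin import`) is designed for bulk data import and is highly efficient for large datasets. To use this tool, prepare your data in CSV format and run the command for your Neo4j version:

```bash
# Neo4j 4.x
neo4j-admin import --nodes=path/to/nodes.csv --relationships=path/to/relationships.csv

# Neo4j 5.x
neo4j-admin database import full --nodes=path/to/nodes.csv --relationships=path/to/relationships.csv neo4j
```

This command imports nodes and relationships from the specified CSV files into your Neo4j database. The tool builds the database offline, so it is intended for initial loads into an empty database. The CSV headers must also follow its conventions. Node files need an `:ID` column and can add an optional `:LABEL` column. Relationship files need `:START_ID`, `:END_ID`, and `:TYPE` columns.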
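To show what the import tool expects, the sketch below renders node and relationship CSVs using the header conventions above. The entity and triple inputs are hypothetical. For illustration it writes to in-memory strings, whereas in practice you would write to files such as `path/to/nodes.csv`.

```python
import csv
import io


def write_import_csvs(entities, triples):
    """Render node and relationship CSV text using neo4j-admin import headers."""
    nodes = io.StringIO()
    node_writer = csv.writer(nodes, lineterminator="\n")
    node_writer.writerow(["id:ID", "name", ":LABEL"])
    for entity_id, name, label in entities:
        node_writer.writerow([entity_id, name, label])

    rels = io.StringIO()
    rel_writer = csv.writer(rels, lineterminator="\n")
    rel_writer.writerow([":START_ID", ":END_ID", ":TYPE"])
    for start_id, rel_type, end_id in triples:
        # Triples are (subject, relation, object); the CSV column order differs
        rel_writer.writerow([start_id, end_id, rel_type])
    return nodes.getvalue(), rels.getvalue()


nodes_csv, rels_csv = write_import_csvs(
    [("p1", "John Doe", "Employee"), ("d1", "AI in 2023", "Document")],
    [("p1", "AUTHORED", "d1")],
)
print(nodes_csv)
print(rels_csv)
```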

Using the APOC Library

The APOC (Awesome Procedures on Cypher) library extends Neo4j’s capabilities with a wide range of procedures, including data import. To import JSON with APOC, follow these steps:

1. Install the plugin.
2. Enable file imports by setting `apoc.import.file.enabled=true` in `apoc.conf` (or `neo4j.conf` on older versions).
3. Run the following Cypher query:

```cypher
CALL apoc.load.json('file:///path/to/your/data.json') YIELD value
MERGE (n:Entity {id: value.id})
SET n.name = value.name, n.type = value.type;
```

This query reads the specified JSON file and creates or updates one node per `id` with the given properties.

Optimizing Data for Performance

After importing the data, optimize it for efficient retrieval and query performance. This involves:

- Indexing: Create indexes on frequently queried properties to speed up lookups. The older `CREATE INDEX ON :Entity(id)` syntax was removed in Neo4j 5, so use the form below:

```cypher
CREATE INDEX entity_id IF NOT EXISTS FOR (n:Entity) ON (n.id);
CREATE INDEX entity_name IF NOT EXISTS FOR (n:Entity) ON (n.name);
```

- Relationship Management: Ensure that relationships are correctly defined. If your queries filter on relationship properties, index them with `CREATE INDEX ... FOR ()-[r:TYPE]-() ON (r.property)`.

- Data Partitioning: For very large datasets, consider partitioning the data to distribute the load and improve query performance.

Verifying Data Integrity

Once the data is imported and optimized, verify its integrity to ensure that it accurately represents the original knowledge graph. This involves:

- Consistency Checks: Run queries to check for inconsistencies, such as missing relationships or duplicate nodes.

- Sample Queries: Execute sample queries to validate that the data is correctly structured and that the retrieval performance meets your requirements.

- Automated Tests: Implement automated tests to regularly check the integrity and performance of the knowledge graph.
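You can express the consistency checks in Cypher. For instance, `MATCH (n:Entity) WITH n.id AS id, count(*) AS c WHERE c > 1 RETURN id, c` finds duplicate ids. The sketch below runs the same kinds of check in Python over exported node IDs and relationships, which makes it easy to include in an automated test suite. The function name and input shapes are assumptions.

```python
from collections import Counter


def integrity_report(node_ids, relationships):
    """Find duplicate node IDs and relationships whose endpoints do not exist."""
    counts = Counter(node_ids)
    duplicates = sorted(node_id for node_id, n in counts.items() if n > 1)
    known = set(node_ids)
    dangling = [
        (start, rel_type, end)
        for start, rel_type, end in relationships
        if start not in known or end not in known
    ]
    return {"duplicate_ids": duplicates, "dangling_relationships": dangling}


report = integrity_report(
    ["p1", "d1", "d1"],
    [("p1", "AUTHORED", "d1"), ("p1", "AUTHORED", "d2")],
)
print(report)
```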

Example: Importing DBpedia into Neo4j

To illustrate the process, consider importing data from DBpedia, a large-scale, multilingual knowledge graph derived from Wikipedia. Follow these steps:

- Download Data: Obtain the DBpedia dataset in RDF format from the DBpedia downloads page.

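- Convert RDF to CSV: Convert the RDF triples into node and relationship CSV files with a conversion tool or script, such as RDF2CSV. Alternatively, the neosemantics (n10s) plugin can import RDF into Neo4j directly without a CSV step.

- Import Data: Use the Neo4j import tool to load the CSV data into your Neo4j database. On Neo4j 5.x, the command is `neo4j-admin database import full`.

```bash
neo4j-admin import --nodes=path/to/dbpedia_nodes.csv --relationships=path/to/dbpedia_relationships.csv
```

- Optimize and Verify: Create indexes, run consistency checks, and execute sample queries to ensure the data is correctly imported and optimized.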
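DBpedia dumps are distributed in line-based RDF serializations such as N-Triples and Turtle. As a rough sketch of the conversion step, the parser below splits simple N-Triples lines into two groups. Lines with IRI objects become relationships, and lines with literal objects become properties; either group could then be written to the node and relationship CSVs. The parser is deliberately simplified: it skips blank nodes and leaves escape sequences inside literals unprocessed. A full RDF library such as rdflib is safer for real dumps.

```python
import re

# Simplified N-Triples pattern: IRI subject, IRI predicate, then an IRI or a literal
# (optionally tagged with @lang or ^^<datatype>). Blank nodes are not matched.
TRIPLE_RE = re.compile(
    r'^<([^>]*)>\s+<([^>]*)>\s+'
    r'(?:<([^>]*)>|"((?:[^"\\]|\\.)*)"(?:@[\w-]+|\^\^<[^>]*>)?)'
    r'\s*\.\s*$'
)


def parse_ntriples(lines):
    """Split N-Triples lines into relationship triples and literal property triples."""
    relationships, properties = [], []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = TRIPLE_RE.match(line)
        if match is None:
            continue  # skip anything this simplified pattern does not handle
        subj, pred, obj_iri, literal = match.groups()
        if obj_iri is not None:
            relationships.append((subj, pred, obj_iri))
        else:
            properties.append((subj, pred, literal))
    return relationships, properties


sample = [
    "<http://dbpedia.org/resource/Berlin> <http://dbpedia.org/ontology/country> "
    "<http://dbpedia.org/resource/Germany> .",
    '<http://dbpedia.org/resource/Berlin> <http://www.w3.org/2000/01/rdf-schema#label> "Berlin"@en .',
]
rels, props = parse_ntriples(sample)
print(rels)
print(props)
```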

Conclusion

Loading existing knowledge graphs into your JIT Hybrid Graph RAG system is a multi-step process that requires careful planning, data transformation, and optimization. Neo4j’s import options (LOAD CSV, `neo4j-admin import`, and APOC) let you import and manage large-scale knowledge graphs efficiently. The result is a structured source of facts that improves the accuracy and relevance of your system’s search results.

Integrating Knowledge Graph with LangChain

Integrating a knowledge graph with LangChain is a key step in building a JIT Hybrid Graph RAG system. The integration uses the structured nature of knowledge graphs to ground the retrieval-augmented generation process. Responses can then draw on explicit entities and relationships rather than on unstructured text alone. The steps are described below.

Setting Up LangChain

LangChain is the core library for building the RAG pipeline. Install it together with the community integrations and the Neo4j integration package:

```bash
pip install langchain langchain-community langchain-neo4j
```

For more detailed installation instructions, refer to the LangChain documentation.

Connecting to Neo4j

Neo4j, a powerful graph database, will serve as the backbone for your knowledge graph. Establish a connection to Neo4j from your Python application using the Neo4j Python driver. Here’s an example script to test the connection:

```python
from neo4j import GraphDatabase

uri = "bolt://localhost:7687"
user = "neo4j"
password = "your_password"

driver = GraphDatabase.driver(uri, auth=(user, password))


def test_connection():
    # Raises an exception if the server is unreachable or the credentials are wrong
    driver.verify_connectivity()
    with driver.session() as session:
        result = session.run("MATCH (n) RETURN n LIMIT 1")
        for record in result:
            print(record)


test_connection()
driver.close()
```

Integrating LangChain with Neo4j

LangChain can use Neo4j as a knowledge graph source for information retrieval. Follow these steps to integrate LangChain with Neo4j:

- Install Neo4j Python Driver: This driver allows your Python application to communicate with the Neo4j database:

```bash
pip install neo4j
```

- Define the Graph Schema: Design your schema to reflect the entities and relationships relevant to your domain. For example, an enterprise search application might have nodes labeled `Employee`, `Project`, and `Document`, with relationships such as `WORKS_ON` and `AUTHORED`.

- Create Indexes: Create indexes on frequently queried properties to speed up lookups. The example below uses the index syntax of Neo4j 4.x and 5.x; Neo4j 5 removed the older `CREATE INDEX ON :Label(property)` form.

```cypher
CREATE INDEX employee_name IF NOT EXISTS FOR (e:Employee) ON (e.name);
CREATE INDEX document_title IF NOT EXISTS FOR (d:Document) ON (d.title);
```

Implementing GraphCypherQAChain

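The GraphCypherQAChain class in LangChain answers natural-language questions over a graph database in three stages:

1. An LLM generates a Cypher query from the input question.
2. The chain executes that query against a Neo4j database.
3. The LLM phrases an answer from the query results.

Here’s how to implement it:

- Import Necessary Libraries: The chain and the `Neo4jGraph` wrapper ship in the `langchain-neo4j` package; older releases exposed them from `langchain_community`. The chain also needs an LLM. The example assumes OpenAI via the `langchain-openai` package.

```python
from langchain_neo4j import GraphCypherQAChain, Neo4jGraph
from langchain_openai import ChatOpenAI
```

- Set Up the GraphCypherQAChain: The chain takes a `Neo4jGraph` object, not a raw driver, plus an LLM. `Neo4jGraph` reads the database schema when it connects, which typically requires the APOC plugin.

```python
graph = Neo4jGraph(url="bolt://localhost:7687", username="neo4j", password="your_password")
llm = ChatOpenAI(model="gpt-4o-mini", temperature=0)  # assumption: any chat model works

graph_chain = GraphCypherQAChain.from_llm(
    llm,
    graph=graph,
    verbose=True,
    # Required opt-in: the chain executes LLM-generated Cypher against your database
    allow_dangerous_requests=True,
)
```

- Query the Graph:

```python
question = "Who authored the document titled 'AI in 2023'?"
response = graph_chain.invoke({"query": question})
print(response["result"])
```

Enhancing Retrieval with Vector Index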

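To further enhance retrieval, add a vector index for similarity search: create embeddings for your text data and store them in a vector store. GraphCypherQAChain has no built-in hook for a vector index. Instead, query the two separately and combine their results. Here’s how to set it up:

- Install FAISS and the LangChain integrations:

```bash
pip install faiss-cpu langchain-community langchain-openai
```

- Create Embeddings and the Vector Index: `FAISS.from_texts` embeds the texts with the supplied embedding model and builds the index in one step.

```python
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAIEmbeddings

documents = ["Document 1 content", "Document 2 content"]
embeddings = OpenAIEmbeddings()  # assumption: any LangChain Embeddings implementation works
vector_index = FAISS.from_texts(documents, embeddings)
```

- Combine with GraphCypherQAChain: Retrieve similar passages alongside the graph answer and pass both on as context.

```python
question = "Who authored the document titled 'AI in 2023'?"
graph_answer = graph_chain.invoke({"query": question})["result"]
similar_docs = vector_index.similarity_search(question, k=2)
context = [graph_answer] + [doc.page_content for doc in similar_docs]
```

Example Workflow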

To illustrate the integration, consider the following example workflow:

- Load Data into Neo4j:

```cypher
LOAD CSV WITH HEADERS FROM 'file:///path/to/your/data.csv' AS row
MERGE (d:Document {title: row.title})
SET d.content = row.content;
```

- Create Embeddings and Vector Index:

```python
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAIEmbeddings

documents = ["Document 1 content", "Document 2 content"]
vector_index = FAISS.from_texts(documents, OpenAIEmbeddings())
```

- Query GraphCypherQAChain and the Vector Index: Using the `graph_chain` set up in the GraphCypherQAChain section:

```python
question = "What is the content of the document titled 'AI in 2023'?"
graph_answer = graph_chain.invoke({"query": question})["result"]
similar_docs = vector_index.similarity_search(question, k=2)

print(graph_answer)
for doc in similar_docs:
    print(doc.page_content)
```

Monitoring and Maintenance

Regular monitoring and maintenance are essential to ensure the ongoing performance and reliability of your integrated system. Utilize Neo4j’s built-in monitoring tools and set up alerts for critical metrics such as memory usage, query performance, and disk space.

Conclusion

Integrating a knowledge graph with LangChain adds structured retrieval to a JIT Hybrid Graph RAG system. GraphCypherQAChain provides natural-language access to the graph, and vector similarity search covers open-ended semantic queries. Together they let the system answer both relationship-centric questions and semantic ones.

Creating a Storage Context

Creating a storage context is a pivotal step in building a JIT Hybrid Graph RAG system. It means setting up storage that can handle the different data types and retrieval methods the system relies on. A well-designed storage context keeps the components integrated, performant, and scalable enough for complex queries and large datasets. The following sections describe how to create one.

Understanding the Storage Requirements

Before diving into the setup, it’s essential to understand the storage requirements of a JIT Hybrid Graph RAG system. This system combines vector search, keyword search, and knowledge graph retrieval, each with its own storage needs:

- Vector Search: Requires storage for dense vector embeddings, which are used for similarity search.

- Keyword Search: Involves storing indexed text data for efficient keyword-based retrieval.

- Knowledge Graph Retrieval: Needs a graph database to store entities and relationships in a structured format.

Choosing the Right Storage Solutions

Selecting the appropriate storage solutions is critical for meeting the diverse requirements of the system. Here are some recommended technologies:

- Neo4j: A powerful graph database that excels in managing and querying complex relationships. It’s ideal for storing the knowledge graph component.

- FAISS: An efficient library for similarity search and clustering of dense vectors. It’s suitable for handling the vector search component.

- Elasticsearch: A highly scalable search engine with full-text search, which makes it well suited to the keyword search component.

Setting Up Neo4j for Knowledge Graph Storage

Neo4j serves as the backbone for the knowledge graph, providing a robust infrastructure for managing entities and relationships. Follow these steps to set up Neo4j:

- Download and Install Neo4j: Obtain the latest version from the official website. Follow the installation instructions specific to your operating system.

- Start Neo4j: After installation, start the Neo4j server using the Neo4j Desktop application or by running the following command in your terminal:

```bash
neo4j start
```

- Access Neo4j Browser: Open your web browser and navigate to http://localhost:7474. Log in with the default credentials (neo4j/neo4j) and change the password when prompted.

Configuring FAISS for Vector Storage

FAISS is used for storing and retrieving dense vector embeddings. Here’s how to set it up:

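- Install FAISS: Use pip to install FAISS:

```bash
pip install faiss-cpu
```

- Create Embeddings: Generate embeddings for your text data using a suitable model. For example, assuming OpenAI embeddings via the `langchain-openai` package:

```python
from langchain_openai import OpenAIEmbeddings

documents = ["Document 1 content", "Document 2 content"]
embedding_model = OpenAIEmbeddings()  # assumption: any LangChain Embeddings class works here
embeddings = embedding_model.embed_documents(documents)
```

- Store Embeddings in FAISS: Create a FAISS index and add the embeddings:

```python
import faiss
import numpy as np

# FAISS expects a 2-D float32 array of shape (n_documents, dimension)
vectors = np.array(embeddings, dtype="float32")
dimension = vectors.shape[1]
index = faiss.IndexFlatL2(dimension)
index.add(vectors)
```

Setting Up Elasticsearch for Keyword Search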

Elasticsearch provides the infrastructure for efficient keyword-based retrieval. Follow these steps to set it up:

- Download and Install Elasticsearch: Obtain the latest version from the official website. Follow the installation instructions for your operating system.

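- Start Elasticsearch: Run the following command from the installation directory to start the Elasticsearch server. Elasticsearch 8.x enables security (TLS and authentication) by default. The plain http://localhost:9200 URLs in this guide therefore assume you have disabled security for local development; otherwise, use https and the credentials printed on first start.

```bash
./bin/elasticsearch
```

- Index Data: Use the Elasticsearch REST API to index your text data. For example:

```python
import requests

document = {"title": "Document 1", "content": "Document 1 content"}
response = requests.post("http://localhost:9200/documents/_doc", json=document)
response.raise_for_status()  # surface HTTP errors instead of failing silently
```

Integrating Storage Solutions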

Integrating these storage solutions ensures that the JIT Hybrid Graph RAG system can leverage the strengths of each component. Here’s how to achieve seamless integration:

- Define a Unified Schema: Use shared identifiers so that a document or entity can be traced across all three stores: Neo4j (node properties), FAISS (vector positions), and Elasticsearch (document `_id`s). This keeps the stores consistent and lets you join results from one store with another.

- Implement Data Pipelines: Develop data pipelines that automate ingestion, transformation, and storage across the different components. Tools such as Apache Kafka or Apache NiFi can serve this purpose.

- Establish Communication Protocols: Decide how the application talks to each component:
  - Neo4j: the Bolt protocol via the Neo4j driver. Neo4j also offers an HTTP API.
  - Elasticsearch: the REST API.
  - FAISS: direct in-process method calls, because FAISS is a library rather than a server.
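A flat FAISS index identifies vectors only by their insertion position (0, 1, 2, ...), so you have to track the shared identifiers yourself. Below is a minimal sketch of such a registry. `DocumentRegistry` is a hypothetical helper, not part of any of the three products. FAISS also offers `IndexIDMap` for attaching your own integer IDs to vectors.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DocumentRegistry:
    """Hypothetical helper mapping FAISS row positions to shared document IDs."""

    doc_ids: List[str] = field(default_factory=list)  # FAISS row i -> doc_ids[i]
    texts: Dict[str, str] = field(default_factory=dict)

    def add(self, doc_id: str, text: str) -> int:
        """Register a document and return the FAISS row its vector must occupy."""
        if doc_id in self.texts:
            raise ValueError(f"duplicate document id: {doc_id}")
        self.doc_ids.append(doc_id)
        self.texts[doc_id] = text
        return len(self.doc_ids) - 1

    def from_faiss_row(self, row: int) -> str:
        """Translate a FAISS search result position back into the shared ID."""
        return self.doc_ids[row]


registry = DocumentRegistry()
row = registry.add("doc-1", "Document 1 content")
# Assumed convention: "doc-1" is also the Elasticsearch _id and a property on the
# matching Neo4j node, e.g. (:Document {doc_id: "doc-1"})
print(row, registry.from_faiss_row(row))  # 0 doc-1
```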

Example Workflow

To illustrate the process, consider the following example workflow:

- Ingest Data: Load data into Neo4j, FAISS, and Elasticsearch using the respective APIs and tools.

- Create Embeddings: Generate embeddings for the text data and store them in FAISS.

- Index Data: Index the text data in Elasticsearch for keyword search.

- Query the System: Use a unified query interface to retrieve data from Neo4j, FAISS, and Elasticsearch, combining the results to provide a comprehensive response.
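A unified query interface can start as a function that sends the query to each store concurrently and collects the results by name. In this sketch, the retrievers are hypothetical stubs; in a real system they would wrap the FAISS, Elasticsearch, and Neo4j query functions. A failing store yields an empty result instead of failing the whole query. This is one possible design choice.

```python
from concurrent.futures import ThreadPoolExecutor


def query_all(query, retrievers):
    """Run every retriever on the same query concurrently and collect results by name.

    A retriever that raises contributes an empty list, so one unavailable
    store does not fail the whole query.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max(1, len(retrievers))) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in retrievers.items()}
        for name, future in futures.items():
            try:
                results[name] = future.result()
            except Exception:
                results[name] = []
    return results


# Hypothetical stubs standing in for the FAISS, Elasticsearch and Neo4j queries
def vector_stub(query):
    return ["doc-1", "doc-2"]


def keyword_stub(query):
    return ["doc-2"]


def graph_stub(query):
    raise ConnectionError("Neo4j unavailable")


print(query_all("Find documents authored by John Doe",
                {"vector": vector_stub, "keyword": keyword_stub, "graph": graph_stub}))
```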

Monitoring and Maintenance

Regular monitoring and maintenance keep the storage context performant and reliable. Use the monitoring built into Neo4j and Elasticsearch, such as Elasticsearch’s cluster health and node stats APIs. FAISS is an in-process library without monitoring of its own, so instrument the application code that calls it. Set up alerts for critical metrics such as memory usage, query performance, and disk space.

Conclusion

Creating a storage context for a JIT Hybrid Graph RAG system involves setting up and integrating multiple storage solutions for different data types and retrieval methods. Neo4j stores the knowledge graph, FAISS holds the vector embeddings, and Elasticsearch serves keyword search. Tied together with shared identifiers and data pipelines, they give the RAG system a scalable base for accurate, context-rich retrieval.

Configuring the RetrieverQueryEngine

Configuring the RetrieverQueryEngine is a critical step in tuning the performance and accuracy of a JIT Hybrid Graph RAG system. This component orchestrates retrieval. It combines results from vector search, keyword search, and knowledge graph retrieval into a single ranked set of context for response generation. The following sections describe how to configure it.

Understanding the Role of the RetrieverQueryEngine

The RetrieverQueryEngine acts as the central hub for query processing in a JIT Hybrid Graph RAG system. It manages the flow of queries through various retrieval methods, combines the results, and ensures that the most relevant information is presented to the user. This involves:

- Query Parsing: Interpreting the user’s query to determine the appropriate retrieval methods.

- Hybrid Retrieval: Executing the query across vector search, keyword search, and knowledge graph retrieval.

- Result Fusion: Combining and reranking the results from different retrieval methods to prioritize relevance and context.
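The query-parsing step can be as simple as a few heuristics that decide which retrievers to run. The cue words, the four-word threshold, and the `plan_retrieval` function are all hypothetical illustrations. Production systems often use an LLM or a trained classifier for this routing instead.

```python
import re

# Hypothetical cue words suggesting the query is about entities and relationships
GRAPH_CUES = re.compile(r"\b(authored by|works on|related to|who)\b", re.IGNORECASE)
QUOTED_PHRASE = re.compile(r'"([^"]+)"')


def plan_retrieval(query):
    """Return a hypothetical retrieval plan: which methods to run for a query."""
    phrases = QUOTED_PHRASE.findall(query)
    return {
        "vector": True,  # semantic search is always attempted
        # Quoted phrases or short, keyword-style queries favour exact term matching
        "keyword": bool(phrases) or len(query.split()) <= 4,
        "graph": bool(GRAPH_CUES.search(query)),
        "exact_phrases": phrases,
    }


print(plan_retrieval('Find documents authored by John Doe about "vector search"'))
```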

Setting Up the RetrieverQueryEngine

To set up the RetrieverQueryEngine, follow these steps:

- Install Necessary Libraries: Ensure that all required libraries for vector search, keyword search, and knowledge graph retrieval are installed. These include LangChain, FAISS, the Elasticsearch client, and the Neo4j Python driver. The examples below also use `langchain-openai` and `requests`.

```bash
pip install langchain langchain-openai faiss-cpu elasticsearch neo4j requests
```

- Define the Retrieval Pipeline: Create a pipeline that integrates the different retrieval methods. This involves setting up connections to Neo4j, FAISS, and Elasticsearch, and defining the logic for query execution and result fusion.

Configuring Vector Search

Vector search is essential for handling queries that require similarity matching. Configure FAISS for efficient vector search:

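- Create Embeddings: Generate embeddings for your text data using a suitable model. The example assumes OpenAI embeddings through `langchain-openai`; any LangChain embeddings class offers the same `embed_documents` and `embed_query` methods.

```python
from langchain_openai import OpenAIEmbeddings

documents = ["Document 1 content", "Document 2 content"]
embedding_model = OpenAIEmbeddings()  # assumption: requires an OpenAI API key
embeddings = embedding_model.embed_documents(documents)
```

- Store Embeddings in FAISS: Create a FAISS index and add the embeddings.

```python
import faiss
import numpy as np

# FAISS expects a 2-D float32 array of shape (n_documents, dimension)
vectors = np.array(embeddings, dtype="float32")
index = faiss.IndexFlatL2(vectors.shape[1])
index.add(vectors)
```

- Query FAISS: Implement a function to query the FAISS index and retrieve similar documents.

```python
def query_faiss(query_embedding, top_k=5):
    query = np.array([query_embedding], dtype="float32")
    distances, indices = index.search(query, top_k)
    # Negate the L2 distance so a higher score means more similar;
    # FAISS pads with -1 when the index holds fewer than top_k vectors
    return [{"id": int(i), "score": -float(d)}
            for i, d in zip(indices[0], distances[0]) if i != -1]
```

Configuring Keyword Search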

Keyword search is crucial for handling queries that involve specific terms or phrases. Configure Elasticsearch for efficient keyword search:

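- Index Data: Use the Elasticsearch REST API to index your text data.

```python
import requests

document = {"title": "Document 1", "content": "Document 1 content"}
response = requests.post("http://localhost:9200/documents/_doc", json=document)
response.raise_for_status()
```

- Query Elasticsearch: Implement a function to query Elasticsearch and retrieve matching documents.

```python
def query_elasticsearch(query, top_k=5):
    # Passing the query via params lets requests URL-encode spaces and special characters
    response = requests.get("http://localhost:9200/documents/_search",
                            params={"q": query, "size": top_k})
    response.raise_for_status()
    hits = response.json()["hits"]["hits"]
    return [{"id": hit["_id"], "score": hit["_score"]} for hit in hits]
```

Configuring Knowledge Graph Retrieval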

Knowledge graph retrieval leverages the structured nature of knowledge graphs to provide contextually rich responses. Configure Neo4j for efficient knowledge graph retrieval:

- Connect to Neo4j: Establish a connection to the Neo4j database.

```python
from neo4j import GraphDatabase

uri = "bolt://localhost:7687"
user = "neo4j"
password = "your_password"

driver = GraphDatabase.driver(uri, auth=(user, password))
```

- Query Neo4j: Implement a function to query the Neo4j database and retrieve relevant entities and relationships.

```python
def query_neo4j(cypher_query, parameters=None):
    with driver.session() as session:
        result = session.run(cypher_query, parameters or {})
        # Consume the result inside the session and convert records to plain dicts
        return [record.data() for record in result]
```

Implementing Result Fusion

Result fusion combines the results from vector search, keyword search, and knowledge graph retrieval to prioritize the most relevant information. Implement a function to merge and rerank the results:

- Combine Results: Merge the results from different retrieval methods.

```python
def combine_results(vector_results, keyword_results, graph_results):
    return vector_results + keyword_results + graph_results
```

- Rerank Results: Apply a reranking algorithm to prioritize the most relevant results.

```python
def rerank_results(combined_results):
    # Sorts by each result's "score" key. Raw FAISS, Elasticsearch and graph scores
    # are on different scales, so normalize them (or use a rank-based method such
    # as Reciprocal Rank Fusion) before relying on this ordering.
    return sorted(combined_results, key=lambda x: x.get("score", 0.0), reverse=True)
```

Example Workflow

To illustrate the configuration, consider the following example workflow:

- Parse Query: Interpret the user’s query to determine the appropriate retrieval methods.

```python
query = "Find documents authored by John Doe"
```

- Execute Hybrid Retrieval: Execute the query across vector search, keyword search, and knowledge graph retrieval.

```python
vector_results = query_faiss(embedding_model.embed_query(query))
keyword_results = query_elasticsearch(query)
# Graph matches are exact, so each gets a fixed score of 1.0 (an assumption)
graph_results = query_neo4j(
    "MATCH (e:Employee {name: $name})-[:AUTHORED]->(d:Document) "
    "RETURN d.title AS title, 1.0 AS score",
    {"name": "John Doe"},
)
```

- Combine and Rerank Results: Merge and rerank the results to prioritize relevance and context.

```python
combined_results = combine_results(vector_results, keyword_results, graph_results)
final_results = rerank_results(combined_results)
```

Monitoring and Maintenance

Regular monitoring and maintenance keep the RetrieverQueryEngine performant and reliable. Use the monitoring built into Elasticsearch, such as its cluster health and node stats APIs, and into Neo4j. FAISS is an in-process library without monitoring of its own, so instrument the application code that calls it. Set up alerts for critical metrics such as query latency, result accuracy, and system load.
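Because FAISS is a library, you measure its latency (and the other retrievers’) in your own code. Here is a minimal sketch, assuming a hypothetical `LatencyMonitor` helper and a 95th-percentile alert threshold chosen only for illustration:

```python
import statistics
import time
from functools import wraps


class LatencyMonitor:
    """Record call latencies (ms) and flag when the 95th percentile exceeds a threshold."""

    def __init__(self, threshold_ms):
        self.threshold_ms = threshold_ms
        self.samples_ms = []

    def track(self, fn):
        """Decorator that times every call to fn, including calls that raise."""
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                self.samples_ms.append((time.perf_counter() - start) * 1000)
        return wrapper

    def p95(self):
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
        return statistics.quantiles(self.samples_ms, n=20)[-1]

    def should_alert(self):
        # statistics.quantiles needs at least two samples
        return len(self.samples_ms) >= 2 and self.p95() > self.threshold_ms


monitor = LatencyMonitor(threshold_ms=200)


@monitor.track
def search_stub(query):
    # Hypothetical stand-in for query_faiss / query_elasticsearch / query_neo4j
    return ["doc-1"]


for _ in range(10):
    search_stub("test")
print(len(monitor.samples_ms), monitor.should_alert())
```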

Configuring the RetrieverQueryEngine involves setting up and integrating multiple retrieval methods so that diverse queries are handled efficiently. Each method contributes something different:

- Vector search contributes semantic matches.
- Keyword search contributes exact term matches.
- Knowledge graph retrieval contributes explicit relationships.

Fusing the three gives the JIT Hybrid Graph RAG system more relevant context for each response.

Implementing the JIT Hybrid Retrieval

Implementing the JIT Hybrid Retrieval system is a multifaceted process that integrates various retrieval methodologies to enhance the accuracy and relevance of search outcomes. This section will guide you through the essential steps to build a robust JIT Hybrid Retrieval system, leveraging the strengths of vector search, keyword search, and knowledge graph retrieval.

Understanding JIT Hybrid Retrieval

Just-In-Time (JIT) Hybrid Retrieval combines multiple search algorithms to deliver highly relevant and contextually rich responses. This approach leverages the structured nature of knowledge graphs, the semantic matching capabilities of vector search, and the precision of keyword search. The integration of these diverse retrieval methods ensures that the system can handle a wide range of queries, from simple keyword searches to complex, context-dependent questions.

Setting Up the Development Environment

To begin, ensure that your development environment is properly configured. This involves installing essential tools and libraries such as Python, LangChain, Neo4j, FAISS, and Elasticsearch.

- Python 3.9 or higher: Ensure Python is installed on your system. Recent LangChain releases no longer support Python 3.8.

- Jupyter Notebook: Install for interactive development and debugging.

```bash
pip install notebook
```

- LangChain: Install using pip.

```bash
pip install langchain
```

- Neo4j: Download and install from the official website. Start the Neo4j server and access it via http://localhost:7474.

- FAISS: Install for efficient similarity search.

```bash
pip install faiss-cpu
```

- Elasticsearch: Download and install from the official website. Start the Elasticsearch server.

Configuring the Retrieval Components

Vector Search with FAISS

Vector search is essential for handling queries that require semantic matching. Configure FAISS for efficient vector search:

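- Create Embeddings: Generate embeddings for your text data. The example assumes OpenAI embeddings via `langchain-openai`.

```python
from langchain_openai import OpenAIEmbeddings

documents = ["Document 1 content", "Document 2 content"]
embedding_model = OpenAIEmbeddings()  # assumption: any LangChain Embeddings class works
embeddings = embedding_model.embed_documents(documents)
```

- Store Embeddings in FAISS: Create a FAISS index and add the embeddings.

```python
import faiss
import numpy as np

# FAISS expects a 2-D float32 array of shape (n_documents, dimension)
vectors = np.array(embeddings, dtype="float32")
index = faiss.IndexFlatL2(vectors.shape[1])
index.add(vectors)
```

- Query FAISS: Implement a function to query the FAISS index.

```python
def query_faiss(query_embedding, top_k=5):
    query = np.array([query_embedding], dtype="float32")
    distances, indices = index.search(query, top_k)
    # Negate L2 distance so higher means more similar; skip -1 padding entries
    return [{"id": int(i), "score": -float(d)}
            for i, d in zip(indices[0], distances[0]) if i != -1]
```

Keyword Search with Elasticsearch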

Keyword search is crucial for handling queries that involve specific terms or phrases. Configure Elasticsearch for efficient keyword search:

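- Index Data: Use the Elasticsearch REST API to index your text data.

```python
import requests

document = {"title": "Document 1", "content": "Document 1 content"}
response = requests.post("http://localhost:9200/documents/_doc", json=document)
response.raise_for_status()
```

- Query Elasticsearch: Implement a function to query Elasticsearch.

```python
def query_elasticsearch(query, top_k=5):
    # params lets requests URL-encode the query string safely
    response = requests.get("http://localhost:9200/documents/_search",
                            params={"q": query, "size": top_k})
    response.raise_for_status()
    hits = response.json()["hits"]["hits"]
    return [{"id": hit["_id"], "score": hit["_score"]} for hit in hits]
```

Knowledge Graph Retrieval with Neo4j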

Knowledge graph retrieval leverages the structured nature of knowledge graphs to provide contextually rich responses. Configure Neo4j for efficient knowledge graph retrieval:

- Connect to Neo4j: Establish a connection to the Neo4j database.

```python
from neo4j import GraphDatabase

uri = "bolt://localhost:7687"
user = "neo4j"
password = "your_password"

driver = GraphDatabase.driver(uri, auth=(user, password))
```

- Query Neo4j: Implement a function to query the Neo4j database.

```python
def query_neo4j(cypher_query, parameters=None):
    with driver.session() as session:
        result = session.run(cypher_query, parameters or {})
        # Materialize records as dicts before the session closes
        return [record.data() for record in result]
```

Implementing Result Fusion

Result fusion combines the results from vector search, keyword search, and knowledge graph retrieval to prioritize the most relevant information. Implement a function to merge and rerank the results:

- Combine Results: Merge the results from different retrieval methods.

```python
def combine_results(vector_results, keyword_results, graph_results):
    return vector_results + keyword_results + graph_results
```

- Rerank Results: Apply a reranking algorithm to prioritize the most relevant results.

```python
def rerank_results(combined_results):
    # Assumes every result carries a comparable "score"; normalize per source first,
    # since FAISS, Elasticsearch and graph scores use different scales
    return sorted(combined_results, key=lambda x: x.get("score", 0.0), reverse=True)
```

Example Workflow

To illustrate the implementation, consider the following example workflow:

- Parse Query: Interpret the user’s query to determine the appropriate retrieval methods.

```python
query = "Find documents authored by John Doe"
```

- Execute Hybrid Retrieval: Execute the query across vector search, keyword search, and knowledge graph retrieval.

```python
vector_results = query_faiss(embedding_model.embed_query(query))
keyword_results = query_elasticsearch(query)
# Graph matches are exact, so each gets a fixed score of 1.0 (an assumption)
graph_results = query_neo4j(
    "MATCH (e:Employee {name: $name})-[:AUTHORED]->(d:Document) "
    "RETURN d.title AS title, 1.0 AS score",
    {"name": "John Doe"},
)
```

- Combine and Rerank Results: Merge and rerank the results to prioritize relevance and context.

```python
combined_results = combine_results(vector_results, keyword_results, graph_results)
final_results = rerank_results(combined_results)
```

Monitoring and Maintenance

Regular monitoring and maintenance keep the JIT Hybrid Retrieval system performant and reliable. Use the monitoring built into Elasticsearch and Neo4j, and instrument the application code around FAISS, an in-process library with no monitoring of its own. Set up alerts for critical metrics such as query latency, result accuracy, and system load.

Implementing the JIT Hybrid Retrieval system comes down to three tasks: set up each retrieval method, run them together for every query, and fuse their results. Vector search, keyword search, and knowledge graph retrieval each fail on different kinds of queries. Combining them therefore gives the JIT Hybrid Graph RAG system more complete and relevant context than any single method.

Combining Vector and Keyword Searches

Combining vector and keyword searches is a powerful approach that leverages the strengths of both methodologies to deliver highly relevant and contextually rich search results. This hybrid strategy is particularly effective in handling a wide range of queries, from simple keyword-based searches to complex, context-dependent questions. By integrating vector search and keyword search, you can enhance the accuracy, relevance, and efficiency of your information retrieval system.

Understanding Vector and Keyword Searches

Vector search relies on dense vector embeddings to capture the semantic meaning of text. This method excels at finding similar documents based on the context and meaning of the content, rather than just matching specific keywords. It uses advanced machine learning models to generate embeddings, which are then stored in a vector index for efficient similarity search.
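To make the idea concrete, here is an exhaustive nearest-neighbour search in plain Python. It computes the same kind of result as an exact FAISS index such as `IndexFlatL2`, which also returns squared L2 distances, though FAISS does so in optimized native code. The three-dimensional vectors are toy values standing in for real embeddings.

```python
from typing import List, Sequence, Tuple


def l2_search(vectors: Sequence[Sequence[float]], query: Sequence[float],
              top_k: int = 2) -> List[Tuple[int, float]]:
    """Exhaustive nearest-neighbour search by squared L2 distance (lower = closer)."""
    scored = [
        (i, sum((a - b) ** 2 for a, b in zip(vec, query)))
        for i, vec in enumerate(vectors)
    ]
    scored.sort(key=lambda pair: pair[1])
    return scored[:top_k]


# Toy 3-dimensional "embeddings"; real models produce hundreds of dimensions
docs = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 0.0, 1.0]]
print(l2_search(docs, [1.0, 0.0, 0.0]))
```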

Keyword search, on the other hand, focuses on matching specific terms or phrases within the text. It uses inverted indexes to quickly locate documents containing the queried keywords. This method is highly precise and is particularly effective for queries that involve exact matches or specific terms.
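To make the contrast concrete, here is a minimal, dependency-free Python sketch of both mechanisms: a toy inverted index with boolean AND matching, and cosine similarity over hand-written three-dimensional vectors. The function names and data are illustrative assumptions; a production system would use Elasticsearch (which ranks matches with BM25 rather than plain boolean AND) and learned embeddings with hundreds of dimensions.

```python
import math
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercase token to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            index[token].add(doc_id)
    return index


def keyword_search(index, query):
    """Return ids of documents containing every query token (boolean AND)."""
    postings = [index.get(tok, set()) for tok in query.lower().split()]
    return set.intersection(*postings) if postings else set()


def cosine_similarity(a, b):
    """Cosine of the angle between two dense vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


docs = {"d1": "quarterly revenue report", "d2": "annual revenue forecast", "d3": "team offsite agenda"}
idx = build_inverted_index(docs)
print(sorted(keyword_search(idx, "revenue report")))  # ['d1']

# Toy 3-d "embeddings"; a real system would get these from an embedding model
vectors = {"d1": [0.9, 0.1, 0.0], "d2": [0.6, 0.6, 0.2], "d3": [0.0, 0.2, 0.9]}
query_vec = [0.85, 0.2, 0.05]
ranked = sorted(vectors, key=lambda d: cosine_similarity(query_vec, vectors[d]), reverse=True)
print(ranked)  # ['d1', 'd2', 'd3']
```

The keyword side only finds documents that literally contain the query terms, while the vector side ranks every document by closeness in meaning; that difference is exactly what the hybrid approach exploits.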

Benefits of Combining Vector and Keyword Searches

Combining vector and keyword searches offers several advantages:

- Enhanced Relevance: By leveraging the semantic understanding of vector search and the precision of keyword search, you can deliver more relevant results that accurately match the user’s intent.

- Improved Coverage: The hybrid approach ensures that both contextually similar documents and those containing specific keywords are retrieved, providing comprehensive coverage of the search space.

- Contextual Richness: Vector search captures the broader context and meaning of the query, while keyword search ensures that specific terms are matched, resulting in contextually rich responses.

- Robustness: The combination of both methods makes the retrieval system more robust, capable of handling a diverse range of queries with varying levels of complexity.

Implementing the Hybrid Search

To implement a hybrid search system, follow these steps:

- Generate Embeddings for Vector Search: Use a suitable model to generate embeddings for your text data. Store these embeddings in a vector index, such as FAISS, for efficient similarity search.

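```python
import numpy as np
import faiss
# Requires the langchain-openai package and OPENAI_API_KEY; any LangChain Embeddings class works the same way
from langchain_openai import OpenAIEmbeddings

documents = ["Document 1 content", "Document 2 content"]

# Embed all documents in one call; FAISS needs a float32 matrix of shape (n, dimension)
embedding_model = OpenAIEmbeddings()
embeddings = np.array(embedding_model.embed_documents(documents), dtype="float32")

# Exact (brute-force) L2 index; row i of the index corresponds to documents[i]
dimension = embeddings.shape[1]
index = faiss.IndexFlatL2(dimension)
index.add(embeddings)
```

- Index Data for Keyword Search: Use Elasticsearch to index your text data, enabling efficient keyword-based retrieval.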

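```python
import requests

# Assumes a local Elasticsearch node with security disabled (plain HTTP, no credentials).
# Each document gets an explicit id equal to its row in the FAISS index so results can be merged by id;
# refresh=true makes the document searchable immediately.
for doc_id, content in enumerate(documents):
    document = {"title": f"Document {doc_id + 1}", "content": content}
    response = requests.put(f"http://localhost:9200/documents/_doc/{doc_id}",
                            params={"refresh": "true"}, json=document)
    response.raise_for_status()
```

- Query Both Systems: Implement functions to query both the vector index and the keyword index.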

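```python
import numpy as np
import requests


def query_faiss(query_embedding, top_k=5):
    # FAISS expects a 2-D float32 array with one row per query
    query = np.array([query_embedding], dtype="float32")
    distances, indices = index.search(query, top_k)
    # Turn L2 distances (lower is better) into similarities (higher is better); -1 marks empty slots
    return [{"id": str(int(i)), "score": 1.0 / (1.0 + float(d))}
            for d, i in zip(distances[0], indices[0]) if i != -1]


def query_elasticsearch(query, top_k=5):
    # Pass the query as params so requests URL-encodes it
    response = requests.get("http://localhost:9200/documents/_search",
                            params={"q": query, "size": top_k})
    response.raise_for_status()
    return [{"id": hit["_id"], "score": hit["_score"]}
            for hit in response.json()["hits"]["hits"]]
```

- Combine and Rerank Results: Merge the results from both searches and apply a reranking algorithm to prioritize the most relevant information.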

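```python
def combine_results(vector_results, keyword_results):
    # FAISS similarities and BM25 scores use different scales, so normalize each list by its top score
    def normalize(hits):
        top = max((hit["score"] for hit in hits), default=1.0) or 1.0
        return [{**hit, "score": hit["score"] / top} for hit in hits]
    return normalize(vector_results) + normalize(keyword_results)


def rerank_results(combined_results):
    # Keep the best normalized score for each document id, then sort highest first
    best = {}
    for hit in combined_results:
        if hit["id"] not in best or hit["score"] > best[hit["id"]]["score"]:
            best[hit["id"]] = hit
    return sorted(best.values(), key=lambda x: x["score"], reverse=True)
```

Example Workflow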

Consider the following example workflow to illustrate the hybrid search implementation:

- Parse Query: Interpret the user’s query to determine the appropriate retrieval methods.

```python
query = "Find documents authored by John Doe"
```

- Execute Hybrid Retrieval: Execute the query across both vector search and keyword search.

```python
from langchain_openai import OpenAIEmbeddings

# Embed the query with the same model used for the documents
embedding_model = OpenAIEmbeddings()
vector_results = query_faiss(embedding_model.embed_query(query))
keyword_results = query_elasticsearch(query)
```

- Combine and Rerank Results: Merge and rerank the results to prioritize relevance and context.

```python
combined_results = combine_results(vector_results, keyword_results)
final_results = rerank_results(combined_results)
```

Monitoring and Maintenance

Regular monitoring keeps the hybrid search system healthy. Elasticsearch provides monitoring APIs such as cluster health and node statistics; FAISS runs inside your application and has no monitoring service of its own, so instrument vector-search latency in your code. Set up alerts for query latency and system load, and track result accuracy with periodic evaluation runs.

Combining vector and keyword searches in a hybrid retrieval system can improve both the accuracy and the relevance of search results: vector search finds documents that match the meaning of a query even when the wording differs, while keyword search ensures that documents containing exact terms, names, and identifiers are not missed.

Leveraging Graph Retrieval

Graph retrieval is a core component of a JIT Hybrid Graph RAG system. It exploits the structured nature of knowledge graphs: because data is organized as nodes and relationships, the system can answer complex, context-dependent queries by following explicit connections between entities instead of relying on textual similarity alone.

Graph retrieval begins with the construction of a robust knowledge graph, which involves defining entities, relationships, and properties. This structured representation of data allows for sophisticated querying capabilities that go beyond simple keyword matching. For instance, in an enterprise search application, a knowledge graph can represent employees, projects, documents, and their interconnections, enabling queries like “Find all documents authored by employees who worked on Project X.”
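The Project X query is a two-hop traversal: first find employees connected to the project by WORKED_ON, then follow their AUTHORED relationships to documents. The sketch below shows that traversal over a small in-memory graph so it runs without a database; the triples, node names, and documents_by_project_members function are hypothetical, and in Neo4j the same pattern would be a single Cypher MATCH over the WORKED_ON and AUTHORED relationships.

```python
from collections import defaultdict

# Hypothetical toy graph stored as (source, relationship, target) triples
triples = [
    ("alice", "WORKED_ON", "project_x"),
    ("bob", "WORKED_ON", "project_y"),
    ("carol", "WORKED_ON", "project_x"),
    ("alice", "AUTHORED", "design_doc"),
    ("bob", "AUTHORED", "budget_sheet"),
    ("carol", "AUTHORED", "test_plan"),
]

# Index edges in both directions so each hop is a dictionary lookup
outgoing = defaultdict(set)  # (node, relationship) -> target nodes
incoming = defaultdict(set)  # (node, relationship) -> source nodes
for src, rel, dst in triples:
    outgoing[(src, rel)].add(dst)
    incoming[(dst, rel)].add(src)


def documents_by_project_members(project):
    """Hop 1: employees who WORKED_ON the project. Hop 2: documents those employees AUTHORED."""
    employees = incoming.get((project, "WORKED_ON"), set())
    docs = set()
    for employee in employees:
        docs |= outgoing.get((employee, "AUTHORED"), set())
    return docs


print(sorted(documents_by_project_members("project_x")))  # ['design_doc', 'test_plan']
```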

One of the key advantages of graph retrieval is its ability to leverage the rich contextual information stored in knowledge graphs. Unlike traditional retrieval methods that rely solely on textual chunks, graph retrieval can provide structured entity information, combining textual descriptions with properties and relationships. This structured approach enhances the understanding of specific terminology and domain-specific knowledge, resulting in more precise and contextually appropriate responses.
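One simple way to hand structured entity information to an LLM is to render an entity's properties and relationships as plain text alongside the retrieved chunks. The entity_context function and the example entity below are illustrative assumptions, not a fixed format any library requires.

```python
def entity_context(name, properties, relationships):
    """Render one entity's properties and relationships as plain text for an LLM prompt.

    properties: dict of property name -> value
    relationships: list of (relationship_type, target_name) tuples
    """
    lines = [f"Entity: {name}"]
    for key in sorted(properties):  # sorted for a stable, reproducible prompt
        lines.append(f"- {key}: {properties[key]}")
    for rel_type, target in relationships:
        lines.append(f"- {rel_type} -> {target}")
    return "\n".join(lines)


context = entity_context(
    "Project X",
    {"status": "active", "started": 2023},
    [("OWNED_BY", "Platform Team"), ("USES", "Neo4j")],
)
print(context)
```

Keeping the output deterministic (sorted properties, one fact per line) makes prompts reproducible, which helps when you later compare evaluation runs.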

Consider a scenario where a user queries, “Who are the key contributors to the AI project in 2023?” A knowledge graph can efficiently traverse the relationships between employees, projects, and contributions to provide a comprehensive answer. Cypher, Neo4j’s query language, expresses this kind of traversal as pattern matching over nodes and relationships.

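For example, a Cypher query to find key contributors might look like this:

```cypher
MATCH (e:Employee)-[:WORKED_ON]->(p:Project {name: 'AI Project 2023'})-[:HAS_CONTRIBUTION]->(c:Contribution)
RETURN e.name, c.details
```

This query matches employees who worked on the AI project and returns their names together with the contributions recorded on that project. Because contributions are attached to the project rather than to individual employees in this schema, each employee is paired with every project contribution; attributing a contribution to a specific person would require a relationship between each Contribution and the Employee who made it.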

Integrating graph retrieval with other retrieval methods, such as vector and keyword searches, further enhances the system’s capabilities. By combining the strengths of these diverse methods, a JIT Hybrid Graph RAG system can handle a wide range of queries with varying levels of complexity. For instance, vector search can identify semantically similar documents, keyword search can pinpoint specific terms, and graph retrieval can provide the contextual relationships between entities.

To implement graph retrieval effectively, it is crucial to optimize the performance of the knowledge graph. This involves creating indexes on frequently queried properties, designing an efficient schema, and ensuring that relationships are correctly defined and indexed. For example, creating indexes on properties like name and title can significantly speed up search operations:

```cypher
CREATE INDEX employee_name IF NOT EXISTS FOR (e:Employee) ON (e.name);
CREATE INDEX document_title IF NOT EXISTS FOR (d:Document) ON (d.title);
```

Regular monitoring and maintenance are also essential to ensure the ongoing performance and reliability of the knowledge graph. Use Neo4j’s built-in monitoring tools and set up alerts for critical metrics such as memory usage, query performance, and disk space.

In summary, graph retrieval lets a JIT Hybrid Graph RAG system answer questions that depend on relationships between entities, returning precise, contextually rich results. Whether for enterprise search, document retrieval, or knowledge discovery, it extends what intelligent search systems can answer beyond what text matching alone can reach.

Testing and Optimizing Your JIT Hybrid Graph RAG

Testing and optimizing your JIT Hybrid Graph RAG system is crucial for ensuring its performance, accuracy, and reliability. This process involves systematic evaluation, fine-tuning, and continuous monitoring to address potential issues and enhance the system’s capabilities. Here’s a comprehensive guide to testing and optimizing your JIT Hybrid Graph RAG system, tailored for a software engineering audience.

Systematic Evaluation

To evaluate the performance of your JIT Hybrid Graph RAG system, follow a structured approach that includes synthetic dataset creation, critique agents, and judge LLMs.

Synthetic Dataset Creation

Generate a synthetic evaluation dataset using large language models (LLMs). The dataset should include a variety of queries with reference answers so that it exercises different aspects of the system. For example, you can prompt an open model such as Mixtral to generate question-answer pairs from your documents, then filter the questions with critique agents that rate each one for groundedness, relevance, and whether it stands alone without extra context.

Critique Agents and Judge LLMs

Critique agents play a vital role in evaluating the quality of the generated questions. They rate questions based on specific criteria, ensuring that only high-quality queries are included in the evaluation dataset. Judge LLMs, on the other hand, assess the system’s responses, providing insights into its accuracy and relevance.
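The filtering step can be sketched as a threshold over critique scores. This assumes each critique agent returns an integer score from 1 to 5 per criterion and that a question must score at least 4 on all three criteria; the scale, the threshold, and filter_questions are illustrative assumptions, and the ratings below are hand-written rather than produced by a real LLM.

```python
CRITERIA = ("groundedness", "relevance", "standalone")


def filter_questions(rated_questions, threshold=4):
    """Keep questions whose critique score meets the threshold on every criterion.

    rated_questions: list of dicts with a 'question' key plus one 1-5 score per criterion,
    as a (hypothetical) set of critique-agent calls might return them.
    """
    return [q for q in rated_questions if all(q[c] >= threshold for c in CRITERIA)]


rated = [
    {"question": "Which team owns Project X?", "groundedness": 5, "relevance": 4, "standalone": 5},
    {"question": "What did they decide there?", "groundedness": 4, "relevance": 4, "standalone": 1},
    {"question": "What is the capital of France?", "groundedness": 1, "relevance": 2, "standalone": 5},
]
kept = filter_questions(rated)
print([q["question"] for q in kept])  # ['Which team owns Project X?']
```

Requiring every criterion to pass, rather than averaging, drops questions like the second one that are grounded but unanswerable without extra context.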

Testing Specific Stages of the RAG Pipeline

To identify where failures occur, test specific stages of the RAG pipeline. This involves collecting outputs from each step and setting up individual evaluations.

Retrieval Step

For the retrieval step, use context precision and recall evaluations to measure the performance of the retrieval system. Tools like RAGAS can help assess the relevance and completeness of the retrieved information.
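If your evaluation set records which chunk IDs are relevant to each question, a simple set-based precision and recall already shows whether retrieval is missing context or returning noise. This is a minimal stand-in assuming labeled chunk IDs; RAGAS computes its own versions of these metrics differently, using an LLM to judge relevance, so treat the numbers as comparable only with each other.

```python
def context_precision_recall(retrieved_ids, relevant_ids):
    """Set-based precision and recall of retrieved chunks against a labeled relevant set."""
    retrieved, relevant = set(retrieved_ids), set(relevant_ids)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0  # share of retrieved chunks that are relevant
    recall = hits / len(relevant) if relevant else 0.0       # share of relevant chunks that were retrieved
    return precision, recall


p, r = context_precision_recall(["c1", "c4", "c7", "c9"], ["c1", "c2", "c7"])
print(p, r)  # 0.5 0.6666666666666666
```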

Generation Step

Evaluate the generation step by analyzing the LLM’s responses. Focus on metrics such as factual accuracy, coherence, and contextual relevance. Use judge LLMs to provide detailed feedback on the generated responses.
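A judge LLM is easier to use at scale when its reply follows a fixed format that code can parse. The sketch below assumes a prompt that asks for "Score: n" on a 1 to 5 scale; the template wording, build_judge_prompt, and parse_judge_score are hypothetical, and the reply is canned instead of coming from a real model.

```python
import re

JUDGE_TEMPLATE = (
    "You are grading an answer produced by a RAG system.\n"
    "Question: {question}\nReference answer: {reference}\nSystem answer: {answer}\n"
    "Rate factual accuracy from 1 to 5 and reply in the form 'Score: <n>' followed by a short reason."
)


def build_judge_prompt(question, reference, answer):
    return JUDGE_TEMPLATE.format(question=question, reference=reference, answer=answer)


def parse_judge_score(reply):
    """Extract the 1-5 score from a judge reply; return None if the format was not followed."""
    match = re.search(r"Score:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


prompt = build_judge_prompt("Who owns Project X?", "The Platform Team", "Platform Team owns it.")
# In practice, send `prompt` to your judge LLM; a canned reply stands in here
print(parse_judge_score("Score: 4. Correct owner, slightly informal."))  # 4
```

Returning None for malformed replies, instead of guessing, lets you count and re-query them rather than silently skewing the average.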

Fine-Tuning and Optimization

Fine-tuning the system involves adjusting various parameters and configurations to enhance performance. Experiment with different configurations and analyze the results to identify the most effective settings.

Chunk Size and Embeddings

Tuning the chunk size and embeddings can significantly impact the system’s performance. Conduct tests with different chunk sizes and embedding configurations to determine the optimal settings. Analyze the results to identify the configurations that yield the highest accuracy and relevance.
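Chunk size experiments need a chunker whose size and overlap are explicit parameters. The sketch below splits on whitespace-separated words for simplicity; real pipelines usually count tokens with the embedding model's tokenizer, and chunk_text is an illustrative helper rather than a specific library's splitter.

```python
def chunk_text(text, chunk_size, overlap):
    """Split text into chunks of `chunk_size` words, with `overlap` words shared between neighbors."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # last chunk reached the end; avoid tiny trailing chunks
            break
    return chunks


text = " ".join(f"w{i}" for i in range(10))
for size, overlap in [(4, 0), (4, 2)]:
    print(size, overlap, chunk_text(text, size, overlap))
```

Overlap trades index size for continuity: with overlap 2 the same ten words produce four chunks instead of three, but a sentence that straddles a boundary still appears whole in at least one chunk more often.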

Reranking Options

Experiment with different reranking options to prioritize the most relevant results. Use techniques like rank fusion to combine scores from various retrieval methods, ensuring that the most contextually rich and accurate responses are presented.
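Reciprocal Rank Fusion (RRF) is one widely used rank fusion technique: it ignores raw scores and rewards documents that appear near the top of several lists, which sidesteps the problem that vector, BM25, and graph scores live on different scales. The sketch assumes each retriever returns an ordered list of document ids; k=60 is the commonly used default constant, and the example rankings are made up.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse ranked lists of document ids: each document scores sum(1 / (k + rank)), rank starting at 1."""
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties keep first-seen order because Python's sort is stable
    return sorted(scores, key=scores.get, reverse=True)


vector_ranking = ["d3", "d1", "d2"]
keyword_ranking = ["d1", "d4", "d3"]
graph_ranking = ["d1", "d5"]
print(reciprocal_rank_fusion([vector_ranking, keyword_ranking, graph_ranking]))
# ['d1', 'd3', 'd4', 'd5', 'd2']
```

Here d1 wins because all three retrievers rank it highly, even though it is only second in the vector results.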

Monitoring and Maintenance

Continuous monitoring and maintenance are essential to ensure the ongoing performance and reliability of the JIT Hybrid Graph RAG system. Utilize built-in monitoring tools and set up alerts for critical metrics.

Memory Usage and Query Performance

Monitor memory usage and query performance to identify potential bottlenecks. Ensure that the system has sufficient resources to handle complex queries and large datasets. Regularly review performance metrics and make necessary adjustments to optimize resource utilization.
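Because FAISS has no monitoring service, query latency is often tracked with a small timing wrapper in application code. The sketch below reports p50 and p95 latency in milliseconds for any query function; measure_latency and fake_query are hypothetical names, and fake_query stands in for a real FAISS, Elasticsearch, or Neo4j call.

```python
import statistics
import time


def measure_latency(query_fn, queries, repeats=3):
    """Time query_fn on each query `repeats` times and return p50/p95 latency in milliseconds."""
    samples = []
    for _ in range(repeats):
        for q in queries:
            start = time.perf_counter()
            query_fn(q)
            samples.append((time.perf_counter() - start) * 1000.0)
    # 99 cut points; "inclusive" keeps estimates within the observed range
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50_ms": cuts[49], "p95_ms": cuts[94], "samples": len(samples)}


def fake_query(q):
    # Stand-in for a real retrieval call
    return sorted(q)


stats = measure_latency(fake_query, ["alpha", "beta", "gamma"])
print(stats)
```

Tail percentiles such as p95 are usually better alert signals than averages, because a few slow graph traversals can hide behind a healthy mean.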

Data Integrity and Consistency

Regularly check data integrity and consistency to ensure that the knowledge graph remains accurate and up-to-date. Implement automated tests to validate the integrity of the data and identify any discrepancies.
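Automated integrity tests can run against an export of the graph, for example in a nightly job. The sketch assumes nodes are exported as a dict keyed by id and relationships as (source, type, target) tuples, and checks two common problems: nodes missing required properties and relationships pointing at nodes that do not exist. The export format and check_graph_integrity are illustrative assumptions.

```python
def check_graph_integrity(nodes, edges, required_props):
    """Return a list of human-readable problems found in an exported graph.

    nodes: dict of node_id -> {"label": str, "props": dict}
    edges: list of (source_id, rel_type, target_id)
    required_props: dict of label -> property names every node with that label must have
    """
    problems = []
    for node_id, node in nodes.items():
        for prop in required_props.get(node["label"], ()):
            if prop not in node["props"]:
                problems.append(f"{node_id}: missing {prop}")
    for src, rel, dst in edges:
        for end in (src, dst):
            if end not in nodes:
                problems.append(f"{rel} edge references unknown node {end}")
    return problems


nodes = {
    "e1": {"label": "Employee", "props": {"name": "John Doe"}},
    "d1": {"label": "Document", "props": {}},
}
edges = [("e1", "AUTHORED", "d1"), ("e2", "AUTHORED", "d1")]
print(check_graph_integrity(nodes, edges, {"Employee": ["name"], "Document": ["title"]}))
# ['d1: missing title', 'AUTHORED edge references unknown node e2']
```

An empty list means the export passed; a test suite can simply assert that the result is empty.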

Example: Evaluating RAG Performance

Consider an example where you evaluate the performance of a JIT Hybrid Graph RAG system using a synthetic dataset. Generate a variety of queries and corresponding answers, and use critique agents to filter the questions. Conduct tests with different chunk sizes, embeddings, and reranking options, and analyze the results to identify the most effective configurations.
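The experiment described above can be organized as a grid sweep over chunk sizes, embedding models, and reranking options. In this sketch, sweep_configurations and toy_evaluate are hypothetical, and toy_evaluate returns invented scores purely so the example runs; a real evaluator would run the synthetic dataset through the pipeline and return, for example, the mean judge score or retrieval recall.

```python
import itertools


def sweep_configurations(evaluate, chunk_sizes, embedding_models, rerankers):
    """Score every combination of settings; return the best config, its score, and all scores."""
    results = {}
    for config in itertools.product(chunk_sizes, embedding_models, rerankers):
        results[config] = evaluate(*config)
    best = max(results, key=results.get)
    return best, results[best], results


def toy_evaluate(chunk_size, embedding_model, reranker):
    # Invented scoring rule so the sketch runs; replace with a real evaluation over your dataset
    score = 1.0 - abs(chunk_size - 512) / 1000
    score += 0.05 if reranker == "rrf" else 0.0
    score += 0.02 if embedding_model == "model-b" else 0.0
    return score


best, best_score, all_scores = sweep_configurations(
    toy_evaluate, [256, 512, 1024], ["model-a", "model-b"], ["none", "rrf"]
)
print(best, round(best_score, 2))  # (512, 'model-b', 'rrf') 1.07
```

Keeping every score, not just the winner, lets you check whether the best configuration is clearly better or only marginally ahead of simpler alternatives.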

Analyzing Results