Here’s how to build a voice-enabled RAG Q&A system with ElevenLabs and Salesforce

Meta Description: Learn to build a voice-enabled RAG Q&A system using ElevenLabs and Salesforce. Get instant, spoken answers from your enterprise data. Guide included.

Introduction

Imagine asking your Salesforce data a complex question and, instead of sifting through reports or dashboards, you hear a clear, natural voice respond instantly with the precise information you need. This isn’t a far-off futuristic vision; it’s a tangible reality made possible by the convergence of powerful AI technologies. For many organizations, Salesforce is the central nervous system, housing invaluable customer data, sales activities, and service interactions. Yet, accessing and interpreting this wealth of information can often be a cumbersome process, involving manual searches, complex report generation, and time spent navigating intricate interfaces. This traditional approach, while functional, can act as a bottleneck, slowing down decision-making and limiting the proactive use of data insights.

The challenge lies in transforming this static repository of data into a dynamic, interactive knowledge base that can be queried conversationally. How can we make interacting with vast enterprise datasets as intuitive as talking to a colleague? This is where Retrieval Augmented Generation (RAG) enters the picture. RAG systems enhance Large Language Models (LLMs) by grounding them in external knowledge bases. The generated responses are then not only fluent but also based on, and relevant to, your specific data, which makes them far more likely to be factually accurate. When combined with cutting-edge voice synthesis technology like ElevenLabs, the potential to revolutionize data interaction is immense.

This article will guide you through the process of building your own voice-enabled RAG Q&A system, specifically tailored to leverage your Salesforce data and enriched by the natural-sounding voices from ElevenLabs. We will explore the core components, outline the prerequisites, and provide a step-by-step walkthrough of the implementation. By the end of this guide, you’ll understand how to bridge the gap between your enterprise data and intuitive voice interaction, empowering your teams with faster, more accessible insights. Prepare to unlock a new dimension of data engagement where your Salesforce instance literally speaks to you.

Understanding the Core Components: RAG, ElevenLabs, and Salesforce

Before diving into the technical implementation, it’s crucial to understand the key technologies that form the backbone of our voice-enabled Q&A system. Each component plays a distinct yet interconnected role in transforming your Salesforce data into an interactive, auditory experience.

What is Retrieval Augmented Generation (RAG)?

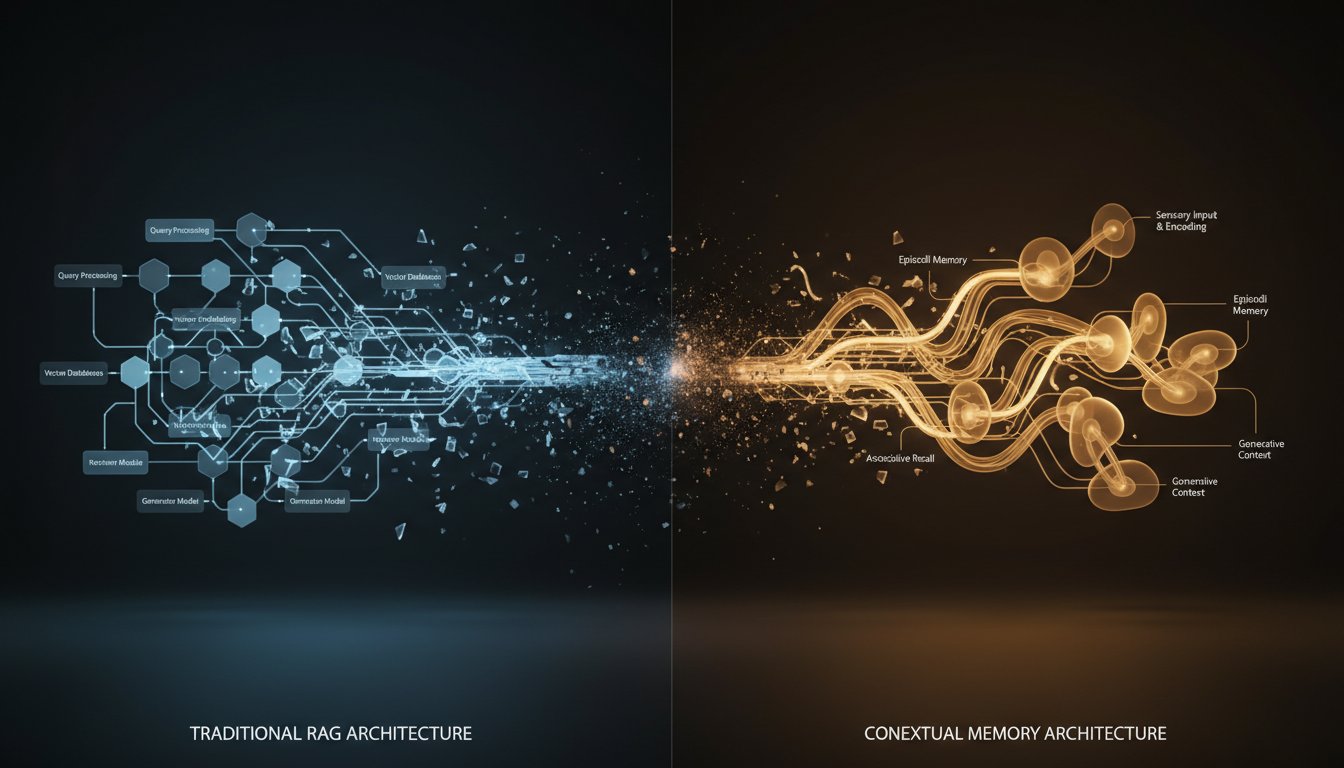

Retrieval Augmented Generation, or RAG, is an architectural approach that significantly enhances the capabilities of Large Language Models (LLMs). Standard LLMs, while incredibly powerful, generate responses based on the vast, but general, dataset they were trained on. This can sometimes lead to generic, outdated, or even inaccurate information when dealing with specific, proprietary enterprise data. RAG addresses this by connecting the LLM to an external, up-to-date knowledge source – in our case, your Salesforce data.

The process typically involves two main stages: retrieval and generation. When a user poses a query, the retrieval component first searches the connected knowledge base (e.g., Salesforce records that have been embedded as vectors and stored in a vector database) for the most relevant information. This retrieved context is then passed to the LLM along with the original query. The LLM generates a response that is “grounded” in this specific, retrieved information, which makes it far more likely to be accurate and contextually appropriate. Research continues to refine this pattern. The s3 framework, for example, decouples the search component from generation, and approaches like it aim to improve RAG’s efficiency and generalization for enterprise applications, making integrations like this one more viable.
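To make the two stages concrete, here is a deliberately tiny, dependency-free sketch. It is not how the pipeline later in this guide works: a bag-of-words counter stands in for a real embedding model, a sorted list stands in for the vector database, and the prompt wording is a hypothetical example. What it does show is the shape of RAG: score documents against the query, keep the top k, and hand only those to the LLM alongside the question.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Retrieval stage: rank documents by similarity to the query and keep the top k."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query, documents, k=2):
    """Input to the generation stage: the retrieved context plus the original question."""
    context = "\n---\n".join(retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Case 001: Customer reports login failures after password reset.",
    "Knowledge: How to reset a user password in Salesforce.",
    "Opportunity: Q3 renewal for Acme Corp, stage Negotiation.",
]
print(build_grounded_prompt("How do I reset a password?", docs, k=1))
```

The unrelated Opportunity record never reaches the prompt, which is the whole point: the LLM only sees context that the retrieval step judged relevant.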

Introducing ElevenLabs: The Power of Realistic AI Voices

Once our RAG system has formulated an accurate, text-based answer from your Salesforce data, the next step is to give it a voice. This is where ElevenLabs comes in. ElevenLabs is a voice technology research company renowned for its state-of-the-art AI voice synthesis. Their platform allows you to convert text into incredibly natural-sounding speech across a wide range of voices, languages, and accents.

The key differentiator for ElevenLabs is the quality and realism of its voices. Unlike robotic or monotonous text-to-speech (TTS) systems of the past, ElevenLabs provides voices that are rich in intonation, emotion, and clarity, making the listening experience engaging and human-like. For a Q&A system, this means users receive answers that are not just informative but also pleasant and easy to understand, significantly enhancing the user experience. By integrating ElevenLabs, we transform our RAG system’s output from silent text into a dynamic, audible conversation partner.

Salesforce as Your Knowledge Hub: Why it’s a Prime Candidate

Salesforce is more than just a CRM; for many businesses, it’s the central repository for critical information spanning sales, service, marketing, and custom operational data. It holds detailed records of customer interactions, product information, support cases, knowledge articles, and much more. This makes it an incredibly valuable, yet often underutilized, source for a RAG system.

The structured and semi-structured nature of Salesforce data, when properly processed and vectorized, lends itself well to semantic search and retrieval. Abstracting the vectorization process, as highlighted by platforms like FactSet for their own data systems, is key to simplifying data retrieval with AI. By treating Salesforce as the primary knowledge source for our RAG system, we can unlock insights that are directly relevant to business operations and customer engagement. Imagine instantly querying sales performance for a specific region, asking for the status of high-priority support tickets, or retrieving troubleshooting steps from knowledge articles – all through voice commands and receiving spoken responses.
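As a rough illustration of “properly processed,” the helper below (a hypothetical name, not part of any library) flattens one record, as returned by a SOQL query through simple-salesforce, into labeled “Field: value” lines that embed well. It assumes the record is a dict, skips the attributes metadata key that the Salesforce REST API includes with each record, drops null fields, and follows parent relationships such as Account.Name. Child-relationship subqueries are not handled.

```python
def record_to_text(record, object_label=None):
    """Flatten one SOQL result record into 'Field: value' lines for embedding.

    Skips the 'attributes' metadata key and null fields, and recurses into
    parent relationships (e.g. Account.Name on a Case).
    """
    lines = [f"Object: {object_label}"] if object_label else []

    def walk(rec, prefix=""):
        for field, value in rec.items():
            if field == "attributes" or value is None:
                continue
            if isinstance(value, dict):  # parent relationship, e.g. {"Name": "Acme Corp"}
                walk(value, prefix=f"{prefix}{field}.")
            else:
                lines.append(f"{prefix}{field}: {value}")

    walk(record)
    return "\n".join(lines)


# Illustrative record shaped like a simple-salesforce query result
case = {
    "attributes": {"type": "Case"},
    "Subject": "Printer offline",
    "Status": "New",
    "Description": None,
    "Account": {"attributes": {"type": "Account"}, "Name": "Acme Corp"},
}
print(record_to_text(case, object_label="Case"))
```

Labeling each value with its field name keeps semi-structured data meaningful after embedding: “Status: New” is far easier to match against a question than a bare “New”.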

Prerequisites and Setting Up Your Environment

To embark on building your voice-enabled Salesforce RAG Q&A system, you’ll need to prepare your development environment and gather the necessary credentials and tools. Careful setup is key to a smooth implementation process.

Salesforce Account and API Access

- Salesforce Edition: Ensure you have a Salesforce edition that allows API access (e.g., Enterprise, Unlimited, Developer Edition).

- API Enabled: Your Salesforce user profile must have ‘API Enabled’ permission.

- Connected App (Recommended): For secure authentication, it’s best practice to create a Connected App in Salesforce. This will provide you with a Consumer Key and Consumer Secret if you choose OAuth 2.0 authentication. Alternatively, for simpler setups or development, you might use username-password-security token authentication, though OAuth is preferred for production.

- When setting up the Connected App, ensure you grant appropriate OAuth scopes (e.g., api, refresh_token).
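If you go the Connected App route, the sketch below shows one OAuth 2.0 flow, the username-password flow, using only the Python standard library. It posts the Consumer Key and Secret plus the user's credentials to Salesforce's token endpoint and hands the returned access token to simple-salesforce. It assumes the Connected App has OAuth enabled and that your org permits this flow; the function names are hypothetical. Because this flow still stores a user password, a flow such as the JWT bearer flow is usually the better choice for production.

```python
import json
import urllib.parse
import urllib.request

# login.salesforce.com for production orgs; use test.salesforce.com for sandboxes
TOKEN_URL = "https://login.salesforce.com/services/oauth2/token"


def build_password_grant(consumer_key, consumer_secret, username, password, security_token=""):
    """Form fields for the OAuth 2.0 username-password flow (password + security token)."""
    return {
        "grant_type": "password",
        "client_id": consumer_key,
        "client_secret": consumer_secret,
        "username": username,
        "password": password + security_token,
    }


def fetch_access_token(fields, token_url=TOKEN_URL):
    """POST the form to the token endpoint; returns (access_token, instance_url)."""
    body = urllib.parse.urlencode(fields).encode()
    # Supplying data makes this a form-encoded POST request
    with urllib.request.urlopen(urllib.request.Request(token_url, data=body)) as resp:
        payload = json.load(resp)
    return payload["access_token"], payload["instance_url"]


# Usage (requires network access and a configured Connected App):
# token, instance_url = fetch_access_token(build_password_grant(key, secret, user, pwd, tok))
# sf = Salesforce(instance_url=instance_url, session_id=token)
```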

ElevenLabs API Key

- Account Creation: Sign up for an account on the ElevenLabs website.

- API Key: Once registered and logged in, navigate to your profile or API section to obtain your API key. This key will be used to authenticate requests to the ElevenLabs API for text-to-speech conversion.

- ElevenLabs offers a free tier, so you can start voice-enabling your RAG application before committing to a paid plan.

Python Environment and Necessary Libraries

We’ll be using Python for this project. It’s recommended to use a virtual environment to manage dependencies.

- Python: Ensure you have Python 3.9 or higher installed (current LangChain releases no longer support 3.8).

- Create a virtual environment:

```bash
python -m venv salesforce_rag_env
source salesforce_rag_env/bin/activate  # On Windows: .\salesforce_rag_env\Scripts\activate
```

- Install libraries:

```bash
pip install simple-salesforce elevenlabs langchain langchain-community langchain-openai faiss-cpu tiktoken python-dotenv
```

  - simple-salesforce: For easy interaction with the Salesforce API.
  - elevenlabs: The official Python SDK for the ElevenLabs API.
  - langchain and langchain-community: A framework for developing applications powered by language models, plus its community integrations (such as the FAISS wrapper). It simplifies RAG pipeline creation.
  - langchain-openai: LangChain’s OpenAI integration (it installs the openai client), used for OpenAI’s embedding and chat models. You’ll need an OpenAI API key; you can substitute other LLM providers compatible with LangChain.
  - faiss-cpu: A library for efficient similarity search and clustering of dense vectors (CPU version). For larger datasets, faiss-gpu may be worth considering. This will serve as our local vector store.
  - tiktoken: Used by LangChain for token counting with OpenAI models.
  - python-dotenv: To manage API keys and other sensitive information using a .env file.

Vector Database Choice

For this guide, we will use FAISS, a local vector store, for simplicity. However, for enterprise-scale applications, you might consider cloud-based vector databases like Pinecone, Weaviate, or others. The fundamental concept of vectorization, crucial for effective RAG (as demonstrated by FactSet’s approach to simplifying data retrieval), remains the same: converting your Salesforce text data into numerical representations (embeddings) that can be efficiently searched for semantic similarity.

Create a .env file in your project root to store your API keys and Salesforce credentials:

```
SALESFORCE_USERNAME='your_salesforce_username'
SALESFORCE_PASSWORD='your_salesforce_password'
SALESFORCE_SECURITY_TOKEN='your_salesforce_security_token'
SALESFORCE_CONSUMER_KEY='your_connected_app_consumer_key' # Optional, if using OAuth
SALESFORCE_CONSUMER_SECRET='your_connected_app_consumer_secret' # Optional, if using OAuth
OPENAI_API_KEY='your_openai_api_key'
ELEVENLABS_API_KEY='your_elevenlabs_api_key'
```
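A missing or misspelled variable in this file otherwise surfaces much later as a confusing authentication error. A small check like the following can run right after load_dotenv() and fail fast. The helper name is hypothetical, and the list mirrors the required entries above, leaving out the optional OAuth keys.

```python
import os

REQUIRED = [
    "SALESFORCE_USERNAME",
    "SALESFORCE_PASSWORD",
    "SALESFORCE_SECURITY_TOKEN",
    "OPENAI_API_KEY",
    "ELEVENLABS_API_KEY",
]


def missing_settings(required=REQUIRED, env=None):
    """Return the names of required settings that are unset or blank."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name, "").strip()]


# After load_dotenv():
# if missing := missing_settings():
#     raise SystemExit(f"Missing settings in .env: {', '.join(missing)}")
```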

Step-by-Step: Building Your Voice-Enabled Salesforce RAG Q&A System

Now that the groundwork is laid, let’s dive into the core implementation. We’ll break this down into manageable steps, from fetching Salesforce data to hearing AI-generated spoken answers.

Step 1: Extracting and Preprocessing Salesforce Data

First, we need to connect to Salesforce and extract the data you want your RAG system to query. This could be Knowledge Articles, Case descriptions, Product details, or custom object records.

```python
import os
import re
from html import unescape

from dotenv import load_dotenv
from simple_salesforce import Salesforce
from langchain_core.documents import Document

load_dotenv()

# Salesforce connection (username + password + security token).
# For a sandbox, add domain='test'.
sf = Salesforce(
    username=os.getenv('SALESFORCE_USERNAME'),
    password=os.getenv('SALESFORCE_PASSWORD'),
    security_token=os.getenv('SALESFORCE_SECURITY_TOKEN'),
)
print("Successfully connected to Salesforce!")


def strip_html(text):
    """Simple tag stripping; consider BeautifulSoup or similar for complex HTML."""
    return unescape(re.sub(r"<[^>]+>", " ", text or "")).strip()


# Example: fetching published Knowledge articles (ensure Lightning Knowledge is enabled).
# Article body fields are custom fields on Knowledge__kav. 'Article_Body__c' is a
# placeholder name: replace it with your org's rich-text body field.
def fetch_salesforce_knowledge_articles():
    query = (
        "SELECT Id, Title, Summary, Article_Body__c FROM Knowledge__kav "
        "WHERE PublishStatus = 'Online' AND Language = 'en_US' LIMIT 200"
    )
    try:
        results = sf.query_all(query)
        articles = []
        for record in results['records']:
            # Null fields come back as None, so fall back to '' explicitly
            body = strip_html(record.get('Article_Body__c'))
            summary = record.get('Summary') or ''
            # Combine relevant fields into a single text content for RAG
            content = f"Title: {record['Title']}\nSummary: {summary}\nArticle: {body}"
            articles.append(Document(
                page_content=content,
                metadata={"source": record['Id'], "title": record['Title']},
            ))
        print(f"Fetched {len(articles)} knowledge articles.")
        return articles
    except Exception as e:
        print(f"Error fetching Salesforce data: {e}")
        return []


salesforce_documents = fetch_salesforce_knowledge_articles()

# You can expand this to fetch data from other objects like Cases, Accounts, custom objects, etc.
# Remember to select fields that contain rich textual information for the RAG system.
```

Preprocessing:

Depending on your data, you might need to clean it: remove HTML tags (if ArticleBody contains rich text), handle special characters, etc. For RAG, concatenating relevant fields (like Title, Summary, Body) into a coherent page_content for each Document is often effective. Metadata can store the source ID for traceability.
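For the “special characters” part, a minimal normalization pass might look like this. It is an illustrative sketch using only the standard library (clean_text is a hypothetical name). NFKC normalization folds characters such as non-breaking spaces into plain equivalents, invisible control and format characters such as zero-width spaces and stray carriage returns are dropped, and runs of whitespace are collapsed so chunks are not padded with blank space. You would apply it to each content string before creating the Document.

```python
import re
import unicodedata


def clean_text(text):
    """Normalize text before chunking and embedding."""
    if not text:
        return ""
    # NFKC folds compatibility characters (e.g. non-breaking spaces, full-width letters)
    text = unicodedata.normalize("NFKC", text)
    # Drop control/format characters (Unicode category C*) except newlines and tabs
    text = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch)[0] != "C")
    # Collapse runs of spaces/tabs, then runs of blank lines
    text = re.sub(r"[ \t]+", " ", text)
    text = re.sub(r"\n\s*\n+", "\n\n", text)
    return text.strip()
```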

Step 2: Vectorizing Your Salesforce Data and Storing it

Next, we’ll use LangChain and an embedding model (e.g., from OpenAI) to convert our text documents into vector embeddings and store them in FAISS.

```python
# Requires: pip install langchain langchain-community langchain-openai faiss-cpu
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# OpenAIEmbeddings reads OPENAI_API_KEY from the environment (populated by load_dotenv())
embeddings = OpenAIEmbeddings()
vector_store = None

if not salesforce_documents:
    print("No documents fetched from Salesforce. Skipping vectorization.")
else:
    # Split documents into smaller, overlapping chunks for better retrieval accuracy
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=150)
    split_docs = text_splitter.split_documents(salesforce_documents)
    print(f"Split into {len(split_docs)} chunks.")

    # Embed every chunk and build the FAISS vector store
    print("Creating FAISS vector store...")
    try:
        vector_store = FAISS.from_documents(split_docs, embeddings)
        vector_store.save_local("faiss_salesforce_kb_index")  # Save for later use
        print("FAISS vector store created and saved successfully.")
    except Exception as e:
        print(f"Error creating FAISS vector store: {e}")

# To load later (the flag is required because the docstore is saved with pickle;
# only load index files you created yourself):
# vector_store = FAISS.load_local("faiss_salesforce_kb_index", embeddings,
#                                 allow_dangerous_deserialization=True)
```

Depending on the volume of data, this step can take a while and incurs embedding API usage (and cost) for every chunk.

Step 3: Setting up the RAG Pipeline

We’ll use LangChain’s RetrievalQA chain to combine the retrieval from FAISS with generation from an LLM.

```python
# Requires: pip install langchain langchain-community langchain-openai
from langchain.chains import RetrievalQA
from langchain_community.vectorstores import FAISS
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Load the vector store from disk if it is not already in memory
if globals().get('vector_store') is None:
    try:
        print("Loading FAISS vector store...")
        embeddings = OpenAIEmbeddings()
        # The flag is required because the docstore is saved with pickle;
        # only load index files you created yourself.
        vector_store = FAISS.load_local(
            "faiss_salesforce_kb_index", embeddings, allow_dangerous_deserialization=True
        )
        print("FAISS vector store loaded.")
    except Exception as e:
        vector_store = None
        print(f"Error loading FAISS vector store: {e}. Ensure it was created.")

# Initialize the LLM (e.g., OpenAI's GPT-3.5-turbo or GPT-4).
# A low temperature makes answers more deterministic.
llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0.2)

# The RetrievalQA chain takes a query, retrieves relevant chunks from vector_store,
# "stuffs" them into a single prompt, and passes that prompt to the LLM.
qa_chain = None
if vector_store is not None:
    qa_chain = RetrievalQA.from_chain_type(
        llm=llm,
        chain_type="stuff",  # Options: stuff, map_reduce, refine, map_rerank
        retriever=vector_store.as_retriever(search_kwargs={"k": 3}),  # Top 3 relevant chunks
        return_source_documents=True,  # Optional: also return the retrieved chunks
    )
    print("RAG QA chain initialized.")
else:
    print("Vector store not available. QA chain cannot be initialized.")
```
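The “stuff” chain type is the simplest option: every retrieved chunk is concatenated into a single prompt. The plain-Python sketch below imitates that behavior to show what the LLM actually receives. It is not LangChain’s internal prompt template, and the character budget is a rough, hypothetical stand-in for the model’s token limit. Keeping the record Id next to each chunk is what lets an answer be traced back to Salesforce.

```python
def stuff_prompt(question, chunks, max_context_chars=6000):
    """Concatenate retrieved chunks (most relevant first) into one prompt, within a budget.

    `chunks` is a list of (source_id, text) pairs. The budget check is approximate:
    it ignores the separators between chunks.
    """
    parts, used = [], 0
    for source_id, text in chunks:
        block = f"[{source_id}]\n{text}"
        if used + len(block) > max_context_chars:
            break  # stop rather than cut a chunk off mid-sentence
        parts.append(block)
        used += len(block)
    context = "\n\n".join(parts)
    return (
        "Use the following context to answer the question. "
        "If you don't know the answer, say that you don't know.\n\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )
```

The budget is also why k matters: with a fixed context window, every extra retrieved chunk competes for space with the ones that are actually relevant.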

Step 4: Integrating ElevenLabs for Voice Output

Now, we’ll write a function to take the text output from our RAG chain and convert it to speech using ElevenLabs.

```python
import os

from elevenlabs.client import ElevenLabs
from elevenlabs.play import play  # play() uses ffplay, so ffmpeg must be installed

client = ElevenLabs(api_key=os.getenv('ELEVENLABS_API_KEY'))


# The API expects a voice ID rather than a name; "21m00Tcm4TlvDq8ikWAM" is the
# premade "Rachel" voice. You can choose other voices and their IDs in ElevenLabs.
def speak_text(text, voice_id="21m00Tcm4TlvDq8ikWAM"):
    print(f"AI Speaking: {text}")
    try:
        audio = client.text_to_speech.convert(
            text=text,
            voice_id=voice_id,
            model_id="eleven_multilingual_v2",  # Or other suitable models
            output_format="mp3_44100_128",
        )
        play(audio)  # Plays the audio directly
        # To stream (for longer audio or a faster first byte), call the SDK's streaming
        # method with the same arguments (stream in 2.x, convert_as_stream in 1.x) and
        # pass the result to elevenlabs.play.stream, which requires mpv.
    except Exception as e:
        print(f"Error generating or playing audio with ElevenLabs: {e}")


# Test ElevenLabs (optional)
# speak_text("Hello from ElevenLabs and Rag About It!")
```
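play() needs local speakers, which a server deployment usually lacks. There you might write the audio to a file (or return it over HTTP) instead. The hypothetical save_audio helper below assumes, as in recent versions of the ElevenLabs Python SDK, that text-to-speech calls return the audio either as bytes or as an iterator of byte chunks.

```python
def save_audio(audio_chunks, path):
    """Write TTS audio (bytes or an iterable of byte chunks) to a file; returns bytes written."""
    if isinstance(audio_chunks, (bytes, bytearray)):
        audio_chunks = [audio_chunks]
    written = 0
    with open(path, "wb") as f:
        for chunk in audio_chunks:
            if chunk:  # skip empty chunks
                f.write(chunk)
                written += len(chunk)
    return written


# Usage with the ElevenLabs client (file name illustrative):
# audio = client.text_to_speech.convert(text="Hello", voice_id="21m00Tcm4TlvDq8ikWAM",
#                                       model_id="eleven_multilingual_v2",
#                                       output_format="mp3_44100_128")
# save_audio(audio, "answer.mp3")
```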

Step 5: Creating a Simple Interface to Ask Questions

Let’s create a simple command-line loop to ask questions, get answers from the RAG chain, and have them spoken.

```python
def ask_salesforce_voice_qa():
    if globals().get('qa_chain') is None:
        print("QA chain is not initialized. Cannot proceed.")
        return

    print("Salesforce Voice Q&A System Initialized. Type 'exit' to quit.")
    while True:
        user_query = input("Ask Salesforce: ")
        if user_query.strip().lower() == 'exit':
            break
        if not user_query.strip():
            continue

        print("Thinking...")
        try:
            # Get the answer from the RAG chain (invoke() replaces the deprecated __call__)
            result = qa_chain.invoke({"query": user_query})
            answer = result['result']
            # Speak the answer
            speak_text(answer)
            # Optionally, print source documents for verification
            # print("\nSource Documents:")
            # for doc in result['source_documents']:
            #     print(f"- {doc.metadata.get('title', 'N/A')}: {doc.page_content[:100]}...")
        except Exception as e:
            print(f"An error occurred: {e}")
            speak_text("I encountered an error trying to answer your question.")


if __name__ == '__main__':
    # Ensure all components are set up before starting Q&A
    if globals().get('vector_store') is not None and globals().get('qa_chain') is not None:
        ask_salesforce_voice_qa()
    else:
        print("System initialization failed. Please check previous steps and error messages.")
        if not globals().get('salesforce_documents'):
            print("Hint: No documents were fetched from Salesforce. Check connection and query.")
        if globals().get('vector_store') is None:
            print("Hint: Vector store (FAISS index) creation or loading might have failed.")
```
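If you enable the source-document printout, a small helper keeps it readable. Several chunks often come from the same article, so the hypothetical format_sources function below de-duplicates by the source metadata (the Salesforce record Id) attached in Step 1 and returns short “title (Id)” entries that could be printed, or even spoken, after the answer.

```python
def format_sources(source_documents, max_sources=3):
    """Return unique 'title (record Id)' entries for the retrieved chunks, in rank order."""
    seen, entries = set(), []
    for doc in source_documents:
        record_id = doc.metadata.get("source")
        if record_id in seen:
            continue  # another chunk of an article already listed
        seen.add(record_id)
        entries.append(f"{doc.metadata.get('title', 'Untitled')} ({record_id})")
        if len(entries) == max_sources:
            break
    return entries


# Inside the Q&A loop, for example:
# print("Sources:", "; ".join(format_sources(result['source_documents'])))
```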

Together, these five steps give you a foundational voice-enabled RAG system for your Salesforce data: questions are typed at the prompt, and answers are retrieved from your indexed records, generated by the LLM, and spoken by ElevenLabs.

Best Practices and Considerations for Enterprise Deployment

Transitioning this proof-of-concept into a robust, enterprise-grade solution requires careful consideration of several factors. While our guide establishes the core functionality, deploying it in a live business environment demands attention to security, performance, scalability, and user experience.

Data Security and Permissions in Salesforce

- Least Privilege Principle: The Salesforce user account connected to the RAG system should only have read access to the specific objects and fields necessary for the Q&A functionality. Avoid using admin accounts.

- Field-Level Security & Sharing Rules: Salesforce’s built-in security model (Field-Level Security, Sharing Rules, Role Hierarchies) should be respected. The data extracted and indexed should align with what the accessing user profile is permitted to see. Complex scenarios might involve indexing different data subsets for different user roles, though this adds complexity to the RAG setup.

- Sensitive Data Handling: Be extremely cautious if your Salesforce org contains PII, PHI, or other sensitive data. Determine if this data should be included in the RAG index. If so, ensure encryption at rest (for the vector store) and in transit, and robust access controls for the RAG application itself.
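One way to respect record-level sharing without maintaining a separate index per role is to filter at query time. The sketch below assumes you already know which record Ids the asking user can read (hypothetically, from a SOQL query executed with that user’s credentials, so Salesforce applies their sharing rules); permitted_top_k is an illustrative name, not a LangChain API. Over-fetching from the retriever before filtering keeps enough context after restricted records are removed. This does not address field-level security, because one chunk can mix several fields; that still has to be handled when the index is built.

```python
def permitted_top_k(retrieved, visible_ids, k=3):
    """Keep the first k retrieved chunks whose source record the user may read.

    `retrieved` is a list of (source_id, text) pairs in relevance order;
    `visible_ids` is the set of record Ids visible to the asking user.
    """
    allowed = [(sid, text) for sid, text in retrieved if sid in visible_ids]
    return allowed[:k]


# With a LangChain retriever, over-fetch (e.g. 3 * k) and convert documents to pairs:
# docs = vector_store.as_retriever(search_kwargs={"k": 9}).invoke(question)
# pairs = [(d.metadata["source"], d.page_content) for d in docs]
# context = permitted_top_k(pairs, visible_ids, k=3)
```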

Optimizing RAG Performance

- Chunking Strategy: The way documents are split into chunks (chunk_size and chunk_overlap in RecursiveCharacterTextSplitter) significantly impacts retrieval relevance. Experiment with different sizes based on your data’s nature. Too small, and context is lost; too large, and irrelevant information might dilute the LLM prompt.

- Embedding Models: The choice of embedding model affects both cost and retrieval quality. Explore different models (e.g., OpenAI’s text-embedding-ada-002 or the newer text-embedding-3-small, or open-source alternatives via Hugging Face) to find the best balance for your needs.

- Retriever Configuration: Fine-tune the number of documents retrieved (k in vector_store.as_retriever(search_kwargs={"k": 3})). Retrieving too few might miss crucial information; too many can overwhelm the LLM’s context window and increase costs. Consider advanced retrieval strategies like Parent Document Retrievers or Self-Querying Retrievers if needed.

- LLM Choice: The LLM used for generation (e.g., GPT-3.5-turbo vs. GPT-4) impacts response quality, latency, and cost. GPT-4 typically provides higher quality but at a higher price and potentially higher latency.
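To see what chunk_size and chunk_overlap actually do, here is a simplified fixed-window splitter. It is an illustrative stand-in, not LangChain’s algorithm: RecursiveCharacterTextSplitter also tries to break on paragraph, line, and word boundaries. The arithmetic is the same, though: each new chunk starts chunk_size - chunk_overlap characters after the previous one, so with this guide’s 1000/150 settings a 2,700-character article yields chunks starting at 0, 850, and 1,700, three in total.

```python
def chunk_text(text, chunk_size=1000, chunk_overlap=150):
    """Fixed-size character windows where consecutive chunks share chunk_overlap chars."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

Raising the overlap preserves more context across chunk boundaries, at the price of more chunks to embed and store.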

Scalability and Cost Management

- Vector Database: For enterprise scale, a local FAISS index might not be sufficient. Managed vector databases (e.g., Pinecone, Weaviate, Vertex AI Vector Search) offer better scalability, resilience, and management features. This aligns with the emerging trend of “RAG as a Service,” where components of the RAG pipeline are managed services.

- API Costs: Be mindful of API call costs for embeddings (both indexing and querying), LLM generation, and ElevenLabs voice synthesis. Implement caching for frequently asked questions or common data if appropriate.

- Data Indexing Pipeline: For Salesforce orgs with frequently changing data, you’ll need a robust pipeline to regularly update the vector index. This could involve incremental updates or periodic full re-indexes, considering data freshness requirements and computational load.
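For incremental updates, a common pattern is a watermark: remember when the last indexing run started and fetch only records modified since then. The query builder below is a sketch under that assumption (the function name and field list are illustrative), relying on SOQL’s unquoted ISO 8601 datetime literals in UTC. Changed records would then be re-chunked and re-embedded, with their old chunks removed from the index. Deleted records do not appear in such a query and need separate handling.

```python
from datetime import datetime, timezone


def incremental_soql(object_name, fields, since):
    """SOQL for records changed since the last indexing run (`since` is tz-aware)."""
    # SOQL datetime literals are unquoted, e.g. 2024-05-01T00:00:00Z
    since_literal = since.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return (
        f"SELECT Id, {', '.join(fields)} FROM {object_name} "
        f"WHERE LastModifiedDate > {since_literal} ORDER BY LastModifiedDate"
    )


# Usage with the simple-salesforce connection from Step 1:
# run_started = datetime.now(timezone.utc)
# changed = sf.query_all(incremental_soql("Case", ["Subject", "Description"], last_run))["records"]
# ...re-index `changed`, then persist run_started as the next watermark
```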

User Experience: Voice Clarity and Responsiveness

- Voice Selection (ElevenLabs): Experiment with different ElevenLabs voices to find one that aligns with your brand and user preferences. Consider offering users a choice of voices.

- Latency: The end-to-end latency (from user speaking the question to hearing the spoken answer) is critical. Optimize each step: Salesforce query time, vector search, LLM response time, and ElevenLabs audio generation. For ElevenLabs, explore streaming audio for faster perceived responsiveness.

- Error Handling: Provide clear, helpful (and spoken) error messages if the system can’t answer a question, fails to connect to Salesforce, or encounters other issues.
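One way to cut perceived latency without restructuring the pipeline is to speak the answer in pieces. The hypothetical speech_chunks helper below splits an answer on sentence boundaries and packs sentences into chunks of up to max_chars, so the first chunk can be sent to ElevenLabs and played while the rest is still pending. The regex-based sentence split is a simplification and will mis-split abbreviations such as “e.g.”.

```python
import re


def speech_chunks(answer, max_chars=250):
    """Split an answer into sentence-aligned chunks for incremental text-to-speech.

    Sentences are packed together up to max_chars; a single longer sentence is kept whole.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    chunks, current = [], ""
    for sentence in sentences:
        if current and len(current) + 1 + len(sentence) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            current = f"{current} {sentence}" if current else sentence
    if current:
        chunks.append(current)
    return chunks


# for chunk in speech_chunks(answer):
#     speak_text(chunk)
```

Played one after another, this still leaves a short pause while each later chunk is generated; generating the next chunk while the current one plays, or using ElevenLabs streaming, removes those gaps.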

Conclusion

We’ve journeyed through the exciting process of conceptualizing, designing, and building a voice-enabled RAG Q&A system that taps into the rich data within your Salesforce organization, brought to life by the natural voices of ElevenLabs. By combining the contextual grounding of Retrieval Augmented Generation with the intuitive interface of voice, you’ve seen how it’s possible to transform static enterprise data into an interactive, conversational resource. You are no longer just looking at data; you are engaging with it, asking it questions, and receiving clear, articulate responses as if from a knowledgeable assistant.

The steps outlined—from connecting to Salesforce and vectorizing your data, to setting up the RAG pipeline and integrating sophisticated text-to-speech—provide a solid foundation. We’ve also touched upon crucial best practices for enterprise deployment, ensuring that as you scale, considerations for security, performance, and user experience remain paramount. The ability to instantly query your most critical business information and hear the answers spoken back is a powerful paradigm shift, moving beyond dashboards and reports to direct, natural language interaction.

This is more than just a technical exercise; it’s about unlocking the true potential of your enterprise data, making insights more accessible, and empowering your teams to make faster, more informed decisions. The journey from raw data to conversational insights exemplifies the future of enterprise AI—interactive, intuitive, and impactful.

Call to Action

Ready to elevate your Salesforce data interaction with voice? Explore ElevenLabs, which offers a free tier, and start building your own voice-enabled RAG system today. For more advanced architectures and tutorials, visit RagAboutIt.com and continue pioneering the AI revolution.