Building Personalized Video Support at Scale

Your support team spends 40% of their time writing similar explanations to different customers. A customer asks about troubleshooting a specific error—your team retrieves the solution, formats it, and types out the same explanation again. When the next customer asks an identical question, you repeat the entire cycle. Meanwhile, your RAG system already has the perfect answer in your knowledge base, but it’s stuck in text format that customers have to read and interpret.

Here’s the reality: 86% of enterprises use RAG systems, but most stop at text responses. The companies winning customer satisfaction benchmarks have moved beyond that limitation. They’re combining retrieval-augmented generation with AI video generation to deliver support that is personal, clear, and scalable at the same time.

The challenge isn’t building this integration—it’s building it efficiently. You need your RAG system to retrieve the right information, your video generation pipeline to transform that text into compelling video, and your delivery mechanism to get videos to customers within seconds, not minutes. When done right, this architecture reduces support resolution time by 35-40% while keeping operational costs predictable.

This guide walks you through the exact architecture and implementation steps to build production-grade video support using RAG and HeyGen. We’ll cover the technical decisions that matter, the integration patterns that scale, and the specific configurations that prevent the common failures we see in enterprise deployments.

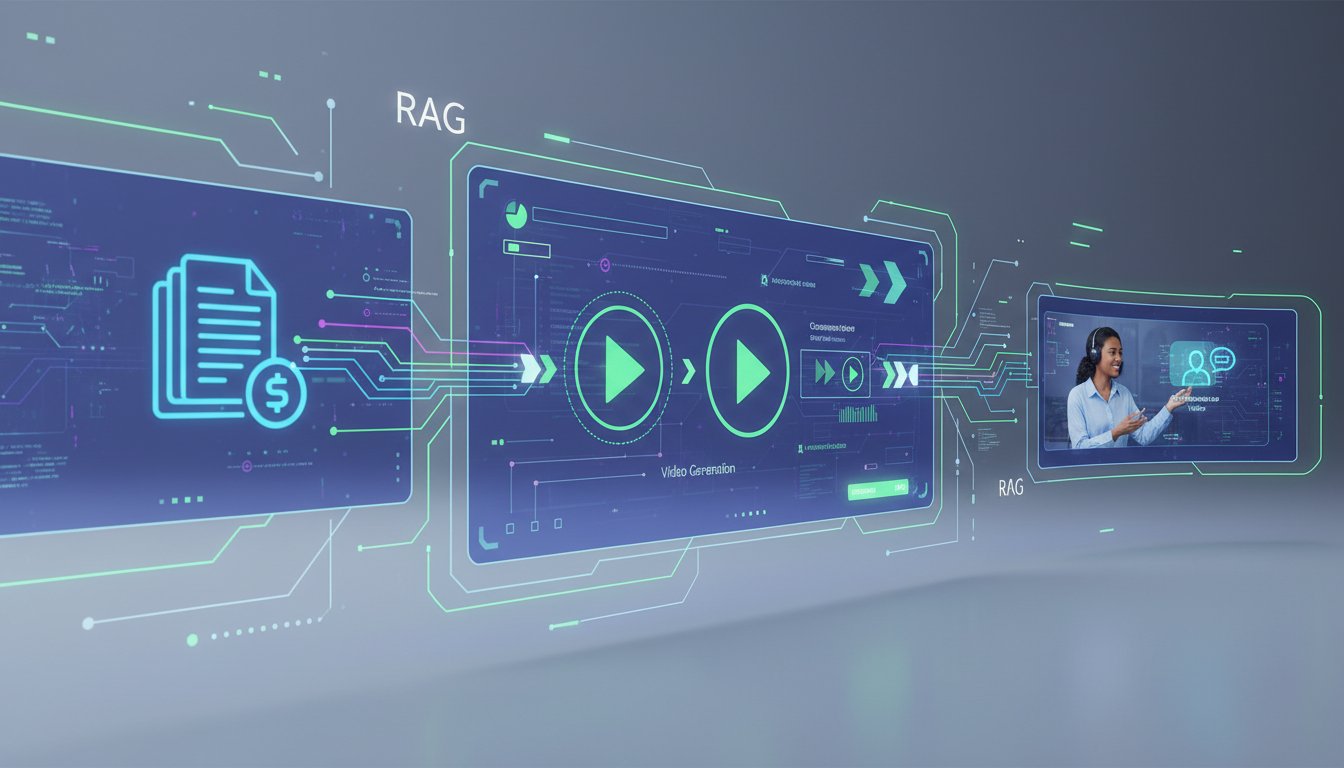

Understanding the RAG-to-Video Pipeline Architecture

The Three-Layer Stack You Need

Before diving into code, you need to understand how these components work together. A RAG system retrieves relevant information from your knowledge base. HeyGen generates video from structured scripts. Your integration layer connects these two systems and manages the workflow.

The retrieval layer is your first critical decision point. You’ll need a hybrid retriever that can search both your knowledge base text and metadata simultaneously. Most enterprises use vector embeddings (BERT or similar) combined with keyword search (BM25). This hybrid approach catches documents your vector search might miss due to embedding quality issues, while still leveraging semantic understanding. Latency here typically runs 50-200ms depending on your knowledge base size—acceptable for async video generation but worth monitoring.
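One common way to merge the keyword and vector result lists is reciprocal rank fusion (RRF). The sketch below is an illustration rather than the retriever this guide assumes: the function name `reciprocal_rank_fusion`, the document IDs, and the constant `k=60` are all placeholders you would tune or replace.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) for every list it appears in
    (rank starts at 1), so documents found by both retrievers rise to the top.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest score first; break ties by ID so the order is deterministic
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


bm25_hits = ["kb-12", "kb-07", "kb-31"]    # hypothetical keyword (BM25) results
vector_hits = ["kb-07", "kb-44", "kb-12"]  # hypothetical embedding results
fused = reciprocal_rank_fusion([bm25_hits, vector_hits])
print(fused)
```

Documents that only one retriever finds still make the list, which is the point of going hybrid: a weak embedding does not hide a document that keyword search ranks well.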

Once retrieval completes, you pass the top result (the single best match) into a formatting layer. This layer structures the retrieval result into a script that HeyGen understands. The script includes dynamic variables for personalization: customer name, specific error message, equipment model. This formatting step is where many implementations fail silently. If your request payload is malformed JSON or the script includes characters that video generation cannot handle, HeyGen’s API returns cryptic errors. We’ll cover the exact validation pattern that prevents this.

Finally, the video generation layer handles the HeyGen API call. You specify which avatar to use, voice characteristics, and video parameters. HeyGen returns a video URL within 20-60 seconds for most requests. This is where you need intelligent queuing and retry logic, because at scale, some API calls will fail.

Performance Expectations at Scale

Here’s what enterprise deployments typically see:

- Retrieval latency: 50-200ms (depends on knowledge base size and hybrid search tuning)

- Script formatting: 10-30ms (straightforward string transformation)

- HeyGen API response: 20-60 seconds (depends on video length and generation load)

- Total time from query to video URL: 25-65 seconds for full generation

This is async, so customers don’t wait for video generation in real-time. Instead, your system sends them a video link via email or chat once it’s ready. Some customers are fine waiting. Others want faster feedback. That’s why you’ll implement a two-tier approach: immediate text response to acknowledge their question, then video delivery when ready.
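The two-tier approach can be sketched as a single handler that acknowledges first and queues second. This is a minimal sketch under stated assumptions: `handle_request`, `fake_send`, and `fake_enqueue` are hypothetical names, and the two callables stand in for whatever chat/email sender and job queue you actually use.

```python
from typing import Callable, Dict, List, Tuple


def handle_request(
    customer_email: str,
    question: str,
    send_text: Callable[[str, str], None],
    enqueue_video: Callable[[str, str], str],
) -> Dict[str, str]:
    """Tier 1: acknowledge immediately. Tier 2: queue the video job."""
    send_text(customer_email, "Thanks! We're preparing a short video answer for you.")
    job_id = enqueue_video(customer_email, question)
    return {"status": "acknowledged", "job_id": job_id}


# In-memory stand-ins for a real message sender and job queue
sent: List[Tuple[str, str]] = []
queued: List[Tuple[str, str]] = []


def fake_send(email: str, message: str) -> None:
    sent.append((email, message))


def fake_enqueue(email: str, question: str) -> str:
    queued.append((email, question))
    return f"job-{len(queued)}"


result = handle_request("pat@example.com", "Error E42 on startup", fake_send, fake_enqueue)
print(result)
```

Passing the sender and queue in as arguments keeps the handler easy to test and lets you swap email for chat without touching the flow.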

Prerequisites and Setup: What You Actually Need

API Keys and Access

You’ll need three pieces of infrastructure:

- HeyGen API credentials: Sign up at HeyGen to get your API key. Start with the free tier if you’re testing; it includes 10 free video generations per month. You’ll need the API key and your account user ID for programmatic access.

- RAG system access: You need either an existing RAG implementation (using LangChain, LlamaIndex, or similar) or access to a hosted RAG platform. Your RAG system must expose an API endpoint that accepts queries and returns structured results. The result should include at minimum: retrieved_text, source_document, and confidence_score.

- Video delivery infrastructure: You’ll need somewhere to store generated video URLs and send them to customers. A simple database table works initially (video_id, customer_email, video_url, created_at, status). For production, use a job queue system like Celery or AWS SQS to manage the async workflow.
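To make the tracking table concrete, here is a minimal sketch using SQLite from the standard library so it runs without a server. The table name `support_videos` and the helper `open_video_db` are assumptions for illustration; the columns are the five listed above, and the same shape should carry over to PostgreSQL with small type changes.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS support_videos (
    video_id       TEXT PRIMARY KEY,
    customer_email TEXT NOT NULL,
    video_url      TEXT,              -- filled in once generation completes
    created_at     TEXT NOT NULL,     -- ISO 8601 timestamp
    status         TEXT NOT NULL DEFAULT 'processing'
)
"""


def open_video_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the tracking database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


conn = open_video_db()
conn.execute(
    "INSERT INTO support_videos (video_id, customer_email, created_at) VALUES (?, ?, ?)",
    ("vid-001", "pat@example.com", "2024-01-01T12:00:00"),
)
row = conn.execute(
    "SELECT status, video_url FROM support_videos WHERE video_id = ?", ("vid-001",)
).fetchone()
print(row)
```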

Dependencies and Libraries

For the walkthrough, we’re using Python with these libraries:

    pip install requests python-dotenv pydantic langchain openai

You’ll create several modules: a RAG connector, a HeyGen wrapper, a script formatter, and an orchestration layer. This modular approach makes testing and maintenance significantly easier.

Configuration Template

Create a .env file with your credentials:

    HEYGEN_API_KEY=your_heygen_api_key_here
    HEYGEN_USER_ID=your_heygen_user_id
    RAG_API_ENDPOINT=https://your-rag-system/api/query
    RAG_API_KEY=your_rag_api_key
    VIDEO_DB_CONNECTION=postgresql://user:pass@localhost/videos

Store these securely. Never commit credentials to version control.
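It helps to fail fast at startup when a setting is missing rather than discovering it on the first customer request. The sketch below assumes the five variable names from the template above; `load_settings` and `REQUIRED_KEYS` are hypothetical names, not part of any library.

```python
import os
from typing import Dict, Mapping

REQUIRED_KEYS = (
    "HEYGEN_API_KEY",
    "HEYGEN_USER_ID",
    "RAG_API_ENDPOINT",
    "RAG_API_KEY",
    "VIDEO_DB_CONNECTION",
)


def load_settings(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return the required settings, or raise with a list of what is missing."""
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise RuntimeError("Missing required settings: " + ", ".join(missing))
    return {key: env[key] for key in REQUIRED_KEYS}
```

Call `load_settings()` once after `load_dotenv()` and pass the resulting dictionary to your modules; the error message names every missing key at once, so you fix the file in one pass.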

Step-by-Step Implementation: Building Your Integration

Step 1: Create Your RAG Query Wrapper

Start by building a simple wrapper that queries your RAG system and validates the response:

    import os
    from typing import Optional

    import requests
    from dotenv import load_dotenv
    from pydantic import BaseModel

    load_dotenv()


    class RAGResult(BaseModel):
        retrieved_text: str
        source_document: str
        confidence_score: float
        metadata: dict = {}


    class RAGConnector:
        def __init__(self):
            self.endpoint = os.getenv("RAG_API_ENDPOINT")
            self.api_key = os.getenv("RAG_API_KEY")
            self.headers = {"Authorization": f"Bearer {self.api_key}"}

        def query(self, customer_question: str) -> Optional[RAGResult]:
            """Query RAG system and return structured result"""
            try:
                response = requests.post(
                    self.endpoint,
                    json={"query": customer_question, "top_k": 1},
                    headers=self.headers,
                    timeout=10
                )
                response.raise_for_status()
                data = response.json()
                result = RAGResult(
                    retrieved_text=data["results"][0]["text"],
                    source_document=data["results"][0]["source"],
                    confidence_score=data["results"][0]["score"],
                    metadata=data["results"][0].get("metadata", {})
                )
                # Low-confidence matches are treated as "no answer"
                return result if result.confidence_score > 0.5 else None
            except requests.exceptions.Timeout:
                print("RAG query timeout - falling back to generic response")
                return None
            except Exception as e:
                print(f"RAG query failed: {str(e)}")
                return None

This wrapper handles timeouts gracefully. RAG queries can be slow with large knowledge bases—always set a timeout and have a fallback plan. Notice the confidence_score threshold at 0.5. Below that, your RAG system isn’t confident enough in its answer, so you’ll skip video generation and use a generic text response instead.
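The connector above relies on its catch-all `except` to cope with an empty `results` list. If you prefer to handle that case explicitly and test it without a network call, you could split the parsing into a pure function. This is a sketch, assuming the same response shape (`results[0]` with `text`, `source`, and `score`); `pick_result` is a hypothetical helper name.

```python
from typing import Any, Dict, Optional


def pick_result(data: Dict[str, Any], min_confidence: float = 0.5) -> Optional[Dict[str, Any]]:
    """Return the top RAG hit as a plain dict, or None if absent or low-confidence."""
    results = data.get("results") or []
    if not results:
        return None  # nothing retrieved at all
    top = results[0]
    if top.get("score", 0.0) <= min_confidence:
        return None  # same gate as the connector: score must exceed the threshold
    return {
        "retrieved_text": top["text"],
        "source_document": top["source"],
        "confidence_score": top["score"],
        "metadata": top.get("metadata", {}),
    }


sample = {"results": [{"text": "Hold the reset button for 10 seconds.", "source": "kb/router.md", "score": 0.82}]}
print(pick_result(sample))
```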

Step 2: Build Your Script Formatter

Now you need to convert RAG output into a HeyGen-compatible script. This is critical—malformed scripts cause silent failures:

    from typing import Dict


    class ScriptFormatter:
        def __init__(self):
            # Keep scripts short. This cap is in characters, because the
            # slice in format_script counts characters, not words.
            self.max_script_length = 1000
            self.avatar_config = {
                "technical_support": "josh",  # HeyGen avatar name
                "billing_support": "anna",
                "sales": "chris",
                "general": "neil"
            }

        def format_script(
            self,
            rag_result: RAGResult,
            customer_name: str,
            category: str = "general",
            customer_issue: str = ""
        ) -> Dict:
            """Convert RAG result to HeyGen script format"""
            # Select appropriate avatar based on support category
            avatar_name = self.avatar_config.get(category, "neil")

            # Truncate long responses
            retrieved_text = rag_result.retrieved_text[:self.max_script_length]

            # Build personalized script
            script_content = f"""Hi {customer_name}! I found the solution to your issue.
    Here's what you need to do:
    {retrieved_text}
    This solution comes from our knowledge base with {rag_result.confidence_score:.0%} confidence.
    If you need more help, reach out to our support team."""

            # Validate script for special characters that break HeyGen
            script_content = self._sanitize_script(script_content)

            return {
                "avatar_name": avatar_name,
                "script_content": script_content,
                "voice_config": {
                    "voice_id": "en-US-1",  # HeyGen voice ID
                    "speed": 1.0,
                    "emotion": "friendly"
                },
                "video_config": {
                    "resolution": "1080p",
                    "background": "professional",
                    # Seconds, at ~150 words per minute (0.4 s per word)
                    "duration_estimate": len(script_content.split()) * 0.4
                }
            }

        def _sanitize_script(self, text: str) -> str:
            """Remove characters that cause HeyGen encoding issues"""
            # Remove problematic Unicode characters
            problematic_chars = {"\x00", "\x1f", "\ufffd"}
            for char in problematic_chars:
                text = text.replace(char, "")

            # Normalize quotes (doubled single quotes and curly quotes)
            text = text.replace("''", "\"")
            text = text.replace("\u2018", "'")
            text = text.replace("\u2019", "'")
            text = text.replace("\u201c", "\"")
            text = text.replace("\u201d", "\"")
            return text.strip()

The sanitization step prevents encoding errors. HeyGen’s API doesn’t always give clear error messages when scripts contain problematic characters. This defensive formatting catches issues before they happen.
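One refinement to consider: the formatter truncates by slicing, which can cut a sentence or even a word in half, and an avatar reading half a sentence sounds broken. A minimal sketch of a gentler truncation follows; `truncate_at_sentence` is a hypothetical helper, not part of the formatter above, and its idea of a "sentence end" (punctuation followed by a space) is deliberately simple.

```python
def truncate_at_sentence(text: str, max_chars: int = 1000) -> str:
    """Shorten text to at most max_chars, ending on a sentence or word boundary."""
    if len(text) <= max_chars:
        return text
    clipped = text[:max_chars]
    # Prefer the last sentence end that still fits inside the limit
    cut = max(clipped.rfind(". "), clipped.rfind("! "), clipped.rfind("? "))
    if cut != -1:
        return clipped[:cut + 1]
    # No sentence end found: fall back to the last whole word
    space = clipped.rfind(" ")
    return clipped[:space] if space != -1 else clipped


steps = "Restart the router. Then wait two minutes. Finally, log in again."
print(truncate_at_sentence(steps, max_chars=45))
```

You could call this in place of the slice in `format_script`, keeping `max_script_length` as the limit.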

Step 3: Create Your HeyGen API Wrapper

Now build the HeyGen integration that actually calls their API:

    import os
    import time
    from datetime import datetime, timedelta
    from typing import Dict, Optional

    import requests


    class HeyGenWrapper:
        def __init__(self):
            self.api_key = os.getenv("HEYGEN_API_KEY")
            self.user_id = os.getenv("HEYGEN_USER_ID")
            # Endpoint paths and payload fields below are the ones used in this
            # guide; check them against HeyGen's current API reference.
            self.base_url = "https://api.heygen.com/v1"
            self.headers = {
                "X-API-Key": self.api_key,
                "Content-Type": "application/json"
            }
            self.max_retries = 3
            self.retry_delay = 2  # seconds

        def generate_video(self, script_config: Dict) -> Optional[Dict]:
            """Send generation request to HeyGen API"""
            payload = {
                "video_inputs": [{
                    "character": {
                        "type": "avatar",
                        "avatar_id": script_config["avatar_name"],
                        "voice": {
                            "voice_provider": "openai",
                            "voice_id": script_config["voice_config"]["voice_id"]
                        }
                    },
                    "script": {
                        "type": "text",
                        "input": script_config["script_content"]
                    }
                }],
                "dimension": {
                    "width": 1080,
                    "height": 1920
                },
                "quality": "medium"
            }

            # Retry logic for transient failures
            for attempt in range(self.max_retries):
                try:
                    response = requests.post(
                        f"{self.base_url}/video_generate",
                        json=payload,
                        headers=self.headers,
                        timeout=30
                    )
                except requests.exceptions.Timeout:
                    print(f"HeyGen request timeout (attempt {attempt + 1}/{self.max_retries})")
                else:
                    if response.status_code == 200:
                        result = response.json()
                        return {
                            "video_id": result["data"]["video_id"],
                            "status": "processing",
                            "created_at": datetime.now().isoformat(),
                            "estimated_completion": (datetime.now() + timedelta(seconds=45)).isoformat()
                        }
                    # Only rate limits (429) and server errors (5xx) are retried
                    if response.status_code != 429 and response.status_code < 500:
                        print(f"HeyGen API error: {response.status_code} - {response.text}")
                        return None
                    print(f"HeyGen API returned {response.status_code} (attempt {attempt + 1}/{self.max_retries})")

                # Exponential backoff before the next attempt: 2s, 4s, ...
                if attempt < self.max_retries - 1:
                    time.sleep(self.retry_delay * (2 ** attempt))

            return None

        def check_video_status(self, video_id: str) -> Optional[Dict]:
            """Poll video generation status"""
            try:
                response = requests.get(
                    f"{self.base_url}/video_status",
                    params={"video_id": video_id},
                    headers=self.headers,
                    timeout=10
                )
                if response.status_code == 200:
                    data = response.json()
                    return {
                        "video_id": video_id,
                        "status": data["data"]["status"],  # "processing", "completed", "failed"
                        "video_url": data["data"].get("video_url", None),
                        "error_message": data["data"].get("error", None)
                    }
            except Exception as e:
                print(f"Status check failed: {str(e)}")
            return None

The retry logic is essential. HeyGen occasionally returns 429 (rate limited) or temporary server errors. Exponential backoff (doubling the wait time each retry) prevents hammering their API while still retrying failed requests.
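To see what the backoff schedule looks like in numbers, here is a small sketch that computes the delays without sleeping. The optional jitter (a random extra fraction of each delay) is an addition for illustration, not something the wrapper above does; it is a common way to keep many workers from retrying in lockstep. `backoff_delays` is a hypothetical helper name.

```python
import random
from typing import List, Optional


def backoff_delays(
    base: float = 2.0,
    retries: int = 3,
    cap: float = 30.0,
    jitter: float = 0.0,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Return the wait (in seconds) before each retry: base * 2**attempt, capped.

    jitter=0.5 adds a random extra of up to 50% of each delay.
    """
    rng = rng or random.Random()
    delays = []
    for attempt in range(retries):
        delay = min(cap, base * (2 ** attempt))
        delay += rng.uniform(0, jitter * delay)
        delays.append(delay)
    return delays


print(backoff_delays())                            # base 2s, three retries
print(backoff_delays(base=10, retries=4, cap=30))  # the cap limits later delays
```

With the wrapper's settings (`retry_delay = 2`, `max_retries = 3`) only the first two delays are ever used, because no sleep follows the final attempt.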

Step 4: Build Your Orchestration Layer

Now tie everything together with an orchestration layer that manages the complete workflow:

    import uuid
    from datetime import datetime
    from typing import Dict, Optional


    class SupportVideoOrchestrator:
        def __init__(self):
            self.rag_connector = RAGConnector()
            self.script_formatter = ScriptFormatter()
            self.heygen = HeyGenWrapper()
            self.video_db = {}  # In production, use proper database

        def process_support_request(
            self,
            customer_email: str,
            customer_name: str,
            support_question: str,
            support_category: str = "general"
        ) -> Dict:
            """Complete workflow from question to video generation"""
            # Step 1: Query RAG system
            rag_result = self.rag_connector.query(support_question)
            if not rag_result:
                return {
                    "success": False,
                    "reason": "No relevant knowledge base content found",
                    "fallback": "Send generic text response"
                }

            # Step 2: Format into HeyGen script
            script_config = self.script_formatter.format_script(
                rag_result=rag_result,
                customer_name=customer_name,
                category=support_category,
                customer_issue=support_question
            )

            # Step 3: Request video generation
            heygen_result = self.heygen.generate_video(script_config)
            if not heygen_result:
                return {
                    "success": False,
                    "reason": "Video generation request failed",
                    "fallback": "Send text response from RAG"
                }

            # Step 4: Store in database for tracking
            video_record = {
                "video_id": heygen_result["video_id"],
                "request_id": str(uuid.uuid4()),
                "customer_email": customer_email,
                "customer_name": customer_name,
                "support_question": support_question,
                "source_document": rag_result.source_document,
                "confidence_score": rag_result.confidence_score,
                "status": "processing",
                "created_at": datetime.now().isoformat(),
                "heygen_video_url": None
            }
            self.video_db[heygen_result["video_id"]] = video_record

            return {
                "success": True,
                "video_id": heygen_result["video_id"],
                "request_id": video_record["request_id"],
                "status": "video_generation_started",
                "estimated_completion": heygen_result["estimated_completion"]
            }

        def check_and_deliver_video(self, video_id: str) -> Optional[str]:
            """Poll video status and return URL when ready"""
            video_record = self.video_db.get(video_id)
            if not video_record:
                return None

            # Check current status
            status = self.heygen.check_video_status(video_id)
            if not status:
                return None

            if status["status"] == "completed" and status["video_url"]:
                # Update record
                video_record["status"] = "completed"
                video_record["heygen_video_url"] = status["video_url"]
                video_record["completed_at"] = datetime.now().isoformat()
                # Send to customer (email, chat, etc.)
                self._deliver_to_customer(video_record)
                return status["video_url"]
            elif status["status"] == "failed":
                video_record["status"] = "failed"
                video_record["error"] = status["error_message"]
                return None
            else:  # Still processing
                return None

        def _deliver_to_customer(self, video_record: Dict):
            """Send video URL to customer (email/chat implementation)"""
            # Example: Send email notification
            email_body = f"""Hi {video_record['customer_name']},
    Your personalized support video is ready! Click the link below:
    {video_record['heygen_video_url']}
    Best regards,
    Support Team"""
            # Send email_body with your mail service; this placeholder only logs
            print(f"Sending video to {video_record['customer_email']}")

This orchestrator layer is where you handle state management, error recovery, and delivery. It’s deliberately simple here—in production, you’d use a proper database and message queue.
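Something still has to call the status check repeatedly until the video is ready. A minimal polling sketch follows, assuming a status callable that returns a dict with `status` and `video_url` keys (the shape `check_video_status` returns) or `None` on a failed check. `wait_for_video` is a hypothetical helper, and the 5-second interval and 120-second limit are placeholder values.

```python
import time
from typing import Callable, Dict, Optional


def wait_for_video(
    check_status: Callable[[], Optional[Dict]],
    interval: float = 5.0,
    max_wait: float = 120.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Poll until the video completes, fails, or max_wait seconds have passed."""
    waited = 0.0
    while waited <= max_wait:
        status = check_status()
        if status and status.get("status") == "completed":
            return status.get("video_url")
        if status and status.get("status") == "failed":
            return None
        # Still processing, or the check itself failed: wait and try again
        sleep(interval)
        waited += interval
    return None  # timed out
```

In the orchestrator you might call it as `wait_for_video(lambda: orchestrator.heygen.check_video_status(video_id))` from a background worker. The `sleep` parameter exists so tests can pass a no-op instead of actually waiting.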

Performance Optimization: Making This Production-Ready

Latency Optimization

Your complete pipeline latency is the sum of several components. Here’s where you can optimize:

RAG Query Optimization (Target: <100ms): Use vector caching for frequently asked questions. If the same question comes in within 5 minutes, return the cached result instead of querying your knowledge base again. This cuts retrieval latency from 50-200ms to nearly zero.

Script Formatting (Target: <10ms): This is already fast. If you hit bottlenecks here, you likely have a system architecture issue, not a formatting issue.

HeyGen API Latency (Target: 20-60 seconds): This is fixed. You can’t make it faster, but you can make the wait invisible to customers by sending them a notification email or chat message immediately (“Your video is being prepared”) and delivering the actual video asynchronously.

Video Delivery (Target: <100ms): Store videos in a CDN. HeyGen returns a video URL from their CDN, but if your customer is far from that CDN’s edge location, delivery is slow. Set up CDN prefetching if you’re in a region with slow cross-region transfer.
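The 5-minute cache for repeated questions can be sketched with a small time-to-live cache. Note the simplification: this version matches questions by normalized text (lowercased, whitespace collapsed), not by embedding similarity, so it only catches near-identical wording. `TTLCache` is a hypothetical class, and the `clock` parameter is there to make expiry testable.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Cache RAG results per question for a fixed number of seconds."""

    def __init__(self, ttl_seconds: float = 300.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    @staticmethod
    def _key(question: str) -> str:
        # Lowercase and collapse whitespace so trivial variations share an entry
        return " ".join(question.lower().split())

    def get(self, question: str) -> Optional[Any]:
        key = self._key(question)
        entry = self._entries.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at > self.ttl:
            del self._entries[key]  # expired: drop it and report a miss
            return None
        return value

    def put(self, question: str, value: Any) -> None:
        self._entries[self._key(question)] = (self.clock(), value)
```

A typical use is to call `cache.get(question)` before `RAGConnector.query` and `cache.put(question, result)` after a successful query.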

Cost Optimization

At scale, HeyGen video generation becomes a line-item cost. Here’s how to optimize:

Confidence Threshold: Only generate videos for high-confidence RAG matches (>0.75 confidence), a stricter bar than the 0.5 cutoff used in Step 1. For scores between the two, send the retrieved answer as a text response. This cuts your HeyGen calls by 30-40% with minimal impact on support quality.

Template Reuse: Group similar questions into templates. Instead of generating a unique video for each “How do I reset my password?” question, generate one video template and customize it with the customer’s name through dynamic avatars. HeyGen supports this—it’s cheaper than generating videos from scratch.

Batch Processing: Generate videos in batches during off-peak hours if your support requests are predictable. This often qualifies for HeyGen’s volume discounting.

Video Quality Tuning: Start with “medium” quality (what we specified in the code). Test with your customers before upgrading to “high” quality. You might not need the upgrade, and it directly affects cost.
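The confidence-based routing from the first point above can be expressed as one small function. This is a sketch: `choose_channel` is a hypothetical name, and the two thresholds are the 0.75 and 0.5 values mentioned in this guide, which you would tune against your own data.

```python
def choose_channel(
    confidence: float,
    video_threshold: float = 0.75,
    answer_threshold: float = 0.5,
) -> str:
    """Decide how to answer based on the RAG confidence score."""
    if confidence > video_threshold:
        return "video"    # confident enough to pay for video generation
    if confidence > answer_threshold:
        return "text"     # usable answer, but send it as text
    return "generic"      # no trustworthy match: generic response


for score in (0.9, 0.6, 0.3):
    print(score, choose_channel(score))
```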

Monitoring and Failure Detection

Key Metrics to Track

Build monitoring around these metrics:

- Video Generation Success Rate: Track how many generation requests succeed vs. fail. Target: >95%

- Generation Latency: From request to video URL. Target: <50 seconds average

- RAG Confidence Distribution: What percentage of queries return >0.75 confidence? This tells you how many queries should generate videos.

- Cost Per Video: Track actual API costs and trend over time

- Customer Engagement: Track video view rates and completion rates
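Three of these metrics can be computed directly from the tracking records. The sketch below assumes records shaped like the orchestrator's `video_record` dictionaries (`status`, `created_at`, `completed_at`, `confidence_score`); `summarize` and the sample data are made up for illustration.

```python
from datetime import datetime
from statistics import mean
from typing import Dict, List


def summarize(records: List[Dict]) -> Dict[str, float]:
    """Compute success rate, average latency, and high-confidence share."""
    finished = [r for r in records if r["status"] in ("completed", "failed")]
    completed = [r for r in finished if r["status"] == "completed"]
    latencies = [
        (datetime.fromisoformat(r["completed_at"])
         - datetime.fromisoformat(r["created_at"])).total_seconds()
        for r in completed
    ]
    return {
        "success_rate": len(completed) / len(finished) if finished else 0.0,
        "avg_latency_seconds": mean(latencies) if latencies else 0.0,
        "high_confidence_share": (
            sum(r["confidence_score"] > 0.75 for r in records) / len(records)
            if records else 0.0
        ),
    }


sample_records = [
    {"status": "completed", "created_at": "2024-01-01T12:00:00",
     "completed_at": "2024-01-01T12:00:40", "confidence_score": 0.9},
    {"status": "completed", "created_at": "2024-01-01T12:05:00",
     "completed_at": "2024-01-01T12:05:50", "confidence_score": 0.8},
    {"status": "failed", "created_at": "2024-01-01T12:10:00", "confidence_score": 0.6},
]
summary = summarize(sample_records)
print(summary)
```

Records still in "processing" are left out of the success rate so that in-flight videos do not count as failures.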

Silent Failure Detection

The most dangerous failures are silent ones. Implement these checks:

    # Method on SupportVideoOrchestrator
    def detect_silent_failures(self):
        """Find videos stuck in 'processing' state"""
        now = datetime.now()
        for video_id, record in self.video_db.items():
            if record["status"] == "processing":
                created_at = datetime.fromisoformat(record["created_at"])
                # If processing for >2 minutes, something is wrong
                if (now - created_at).total_seconds() > 120:
                    print(f"ALERT: Video {video_id} stuck in processing")
                    # Re-check status or retry
                    self.check_and_deliver_video(video_id)

This catches videos that HeyGen is silently failing to generate—a surprisingly common issue when you’re at scale.

Deployment Checklist

Before going to production:

- [ ] Test RAG connector with your actual knowledge base (not mock data)

- [ ] Verify HeyGen API credentials work with the free tier

- [ ] Run script formatting tests with actual support questions (they often have special characters)

- [ ] Implement proper database instead of in-memory dictionary

- [ ] Set up monitoring for all five metrics listed above

- [ ] Configure alerts for failure rates >5%

- [ ] Test retry logic with rate-limited requests

- [ ] Load test with 100+ concurrent video generation requests

- [ ] Verify video URLs are accessible from your customer’s regions

- [ ] Set up customer delivery mechanism (email/chat)

- [ ] Document troubleshooting procedures for your team

Moving Forward

You now have a complete RAG-to-video pipeline that retrieves answers from your knowledge base and transforms them into personalized, professionally-generated videos. The architecture scales from dozens to thousands of concurrent requests, with proper error handling, monitoring, and cost optimization built in.

The next optimization phase involves adding voice to your workflow—combining this video pipeline with ElevenLabs voice alerts to notify customers when videos are ready, turning this visual support layer into a fully multimodal experience. But that’s another integration pattern.

Start by getting this basic pipeline working with a small subset of your support questions. Track the metrics, measure customer satisfaction impact, and iterate from there. The companies seeing 35-40% faster resolution times didn’t build perfect systems first—they built this exact architecture and improved it based on real-world data.

Ready to build this? Try HeyGen for free to test video generation with your first few support responses. Get a feel for avatar quality and generation speed before committing to the full implementation. Start with 5-10 test videos, measure the results, then scale.