Category: RAG Architecture

-

The Military AI Ultimatum That Just Changed Enterprise RAG Risk Calculus

When Defense Secretary Pete Hegseth issued his ultimatum to Anthropic on February 18, 2026—demanding unrestricted access to Claude AI or face designation as a “supply chain risk”—most enterprise technology leaders saw it as a defense industry issue. They were wrong. This standoff, which escalated through February with Anthropic refusing to drop its AI safeguards despite…

-

The Vector Embedding Ceiling: DeepMind’s Discovery That Changes Everything About Enterprise RAG

Enterprise teams have been scaling RAG systems under a dangerous assumption: that adding more dimensions to vector embeddings would solve retrieval accuracy problems indefinitely. DeepMind just shattered that assumption with a study revealing a mathematical bottleneck that fundamentally limits single-vector architectures—and some of the industry’s most trusted embedding models are already hitting the wall. When…

-

The Unified Training Divide: Why RAG 2.0’s Joint Optimization Promises Better Results But Few Enterprises Can Afford It

The enterprise AI community just witnessed a quiet revolution that most teams will read about but few will implement. RAG 2.0’s unified training architecture—where retrievers and language models train together as a single system—has achieved state-of-the-art performance on every major benchmark from open-domain question answering to hallucination reduction. Yet the same architectural innovation that makes…

-

The 70% Token Reduction Breakthrough: How Semantic Highlighting Is Rewriting RAG Economics

Your enterprise RAG system is burning through tokens like a data center burns electricity—and most of those tokens are noise. While competitors chase marginal accuracy gains through bigger models and denser embeddings, a fundamental architectural shift is quietly rewriting the economics of production RAG systems. The culprit isn’t your retrieval strategy or your vector database.…

-

The Measurement Crisis: Why Your Enterprise RAG System Optimizes Answers While Ignoring Infrastructure

Picture this: Your enterprise RAG system delivers impressively accurate answers during demos. The retrieval precision looks stellar on your dashboards. Your stakeholders are pleased. Then, three months into production, your autonomous AI agents start making decisions based on outdated financial data, a security audit reveals ungoverned cross-domain data access, and nobody can explain why the…

-

The Production Orchestration Gap: Why Enterprise RAG Teams Are Racing Toward Agent Composer

If you’ve built a RAG prototype that works beautifully in testing but crumbles under production load, you’re not alone. You’re part of the 80% statistic—the vast majority of enterprise RAG projects that never make it past the proof-of-concept stage. The technical reasons vary: data quality issues, retrieval drift, context management failures, integration nightmares. But the…

-

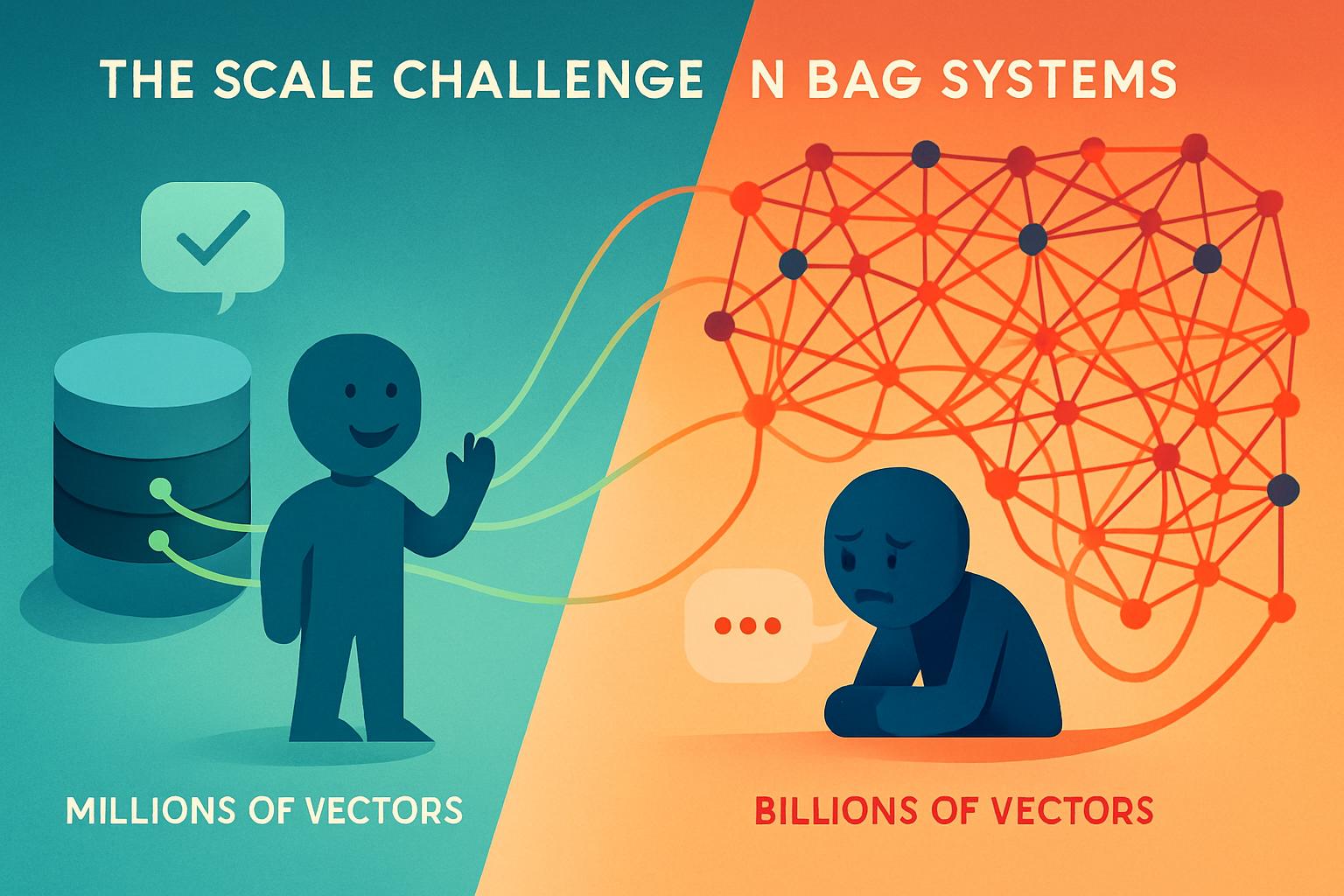

The Vector Database Performance Wall: Why Enterprise RAG Hits a Latency Ceiling at Scale

The Vector Database Performance Wall: Why Enterprise RAG Hits a Latency Ceiling at Scale Your RAG system worked beautifully in the proof-of-concept phase. The retrieval was snappy. Latency hovered around 200-300 milliseconds. Your team celebrated. Then came production—with millions of real documents, billions of vectors, and actual users hammering your system simultaneously. Suddenly, that 300ms…

-

The Chunking Blind Spot: Why Your RAG Accuracy Collapses When Context Boundaries Matter Most

Enterprise RAG teams obsess over embedding models and reranking algorithms while overlooking the silent killer of retrieval accuracy: how documents are chunked. The irony is sharp—research shows that tuning chunk size, overlap, and hierarchical splitting can deliver 10-15% recall improvements that rival expensive model upgrades. Yet most teams default to uniform 500-token chunks without understanding…

-

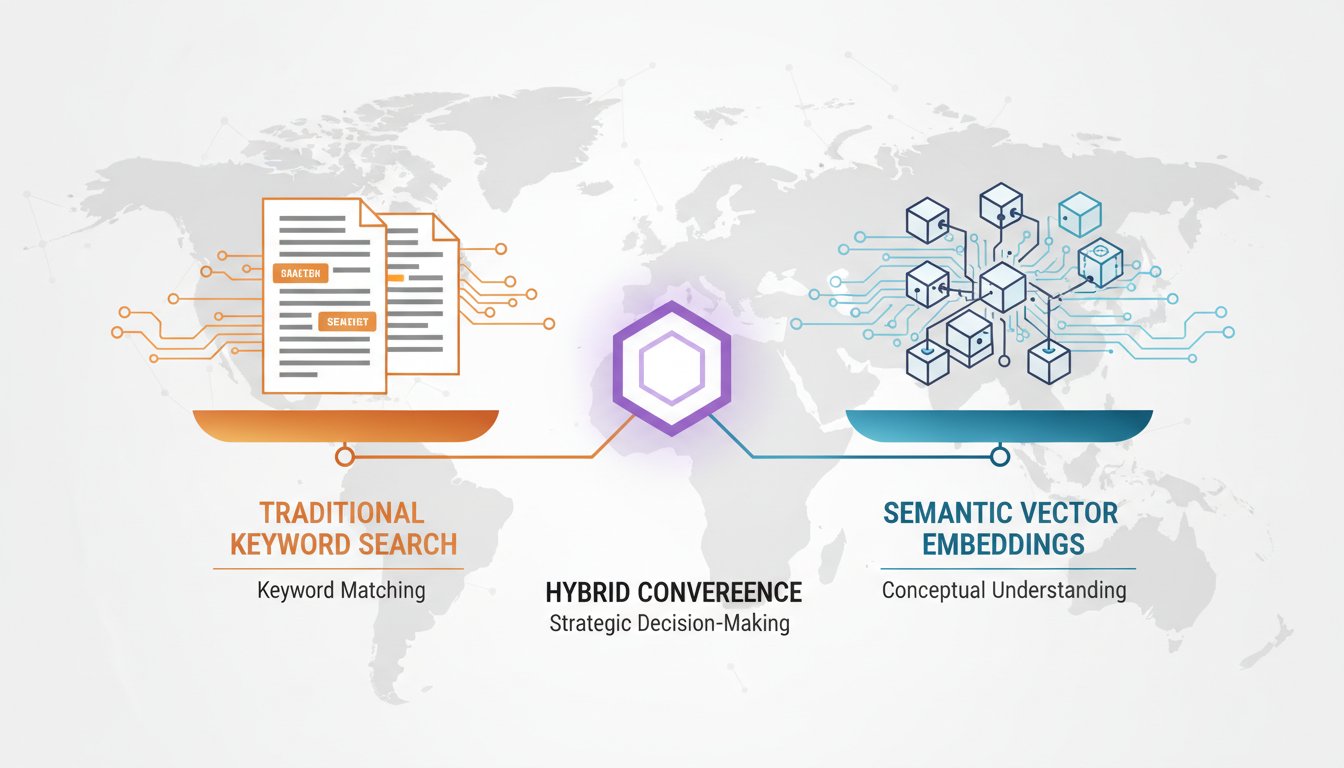

Hybrid Retrieval for Enterprise RAG: When to Use BM25, Vectors, or Both

The retrieval decision you make at the start of your RAG project quietly determines whether your system succeeds or fails at scale. It’s the choice between keyword-based search (BM25), semantic vector embeddings, or a hybrid approach that uses both. On the surface, this seems technical. In reality, it’s a strategic decision that impacts retrieval accuracy,…

-

The Structured Output Revolution: Why Constraint-Based Generation Is the Hidden Efficiency Multiplier in Enterprise RAG

The problem appears simple on the surface: your RAG system retrieves the right information, feeds it to your LLM, and gets back a response. But watch what happens in production. Your customer service bot retrieves perfect documentation but returns unstructured, contradictory information. Your compliance officer needs extracted data in a specific JSON format, but the…

-

How to Build Multi-Source RAG Systems with LangGraph: The Complete Enterprise Knowledge Integration Guide

Enterprise organizations generate knowledge across dozens of disconnected systems—Confluence wikis, Slack conversations, email threads, project documentation, customer support tickets, and legacy databases. When your AI assistant can only access one or two of these sources, you’re essentially building a digital librarian who’s only allowed to read picture books while ignoring the encyclopedias. This fragmentation creates…

-

How to Build Contextual RAG Systems with Google’s NotebookLM: The Complete Enterprise Knowledge Synthesis Guide

The average enterprise loses 2.5 hours per day searching for information across scattered documents, databases, and knowledge repositories. Despite investing millions in enterprise search solutions, knowledge workers still struggle to find relevant, contextual answers when they need them most. Traditional search returns documents; what teams really need are synthesized insights that connect information across multiple…

-

How to Build Real-Time RAG Systems with Redis Vector Search: The Complete Production Guide

The race to deploy real-time AI applications has exposed a critical bottleneck in traditional RAG architectures: latency. While organizations rush to implement retrieval-augmented generation systems, many discover their carefully crafted solutions crumble under production workloads, delivering responses that arrive seconds too late for real-time applications. Consider this scenario: A financial trading platform needs to provide…