These RAG Use Cases Help Enterprises Solve Real Problems Much More Easily

Introduction

In today’s data-driven world, enterprises are constantly seeking innovative ways to harness the power of their vast information stores. Imagine a scenario where your customer service team could instantly access and understand every relevant piece of information from years of interactions, product manuals, and troubleshooting guides, delivering perfectly tailored solutions in seconds. Or picture your R&D department rapidly synthesizing insights from countless research papers and internal experiments to accelerate breakthroughs. For many, this level of efficiency and insight has seemed like a distant dream, hampered by the limitations of traditional AI and data retrieval methods.

The core challenge lies in bridging the gap between the generic knowledge of large language models (LLMs) and the specific, proprietary data that holds the most value for an individual enterprise. While LLMs are incredibly powerful, they often lack the context of an organization’s unique operational landscape, leading to responses that are too general or, worse, inaccurate. This is where Retrieval Augmented Generation (RAG) emerges as a transformative solution. RAG isn’t just another AI buzzword; it’s a practical and powerful approach that enhances LLMs by dynamically incorporating information from external knowledge sources, allowing them to provide answers that are not only intelligent but also grounded in the organization’s own context, and therefore far more likely to be accurate.

This article will delve into the practical applications of RAG, exploring real-world use cases that demonstrate how enterprises are leveraging this technology to solve complex problems more easily and effectively. We’ll examine how RAG is revolutionizing areas from customer support to knowledge management and content creation, turning once-daunting data challenges into opportunities for innovation and growth. By understanding these applications, you’ll see how RAG can empower your organization to unlock the full potential of its data, making problem-solving not just faster, but fundamentally smarter.

What is RAG and Why is it a Game-Changer for Enterprises?

Retrieval Augmented Generation (RAG) is an architectural approach that combines the strengths of pre-trained dense retrieval systems and sequence-to-sequence models, particularly Large Language Models (LLMs). In simpler terms, RAG allows an AI model to “look up” relevant information from a specific dataset before generating an answer or content. This process grounds the AI’s output in factual, up-to-date, or domain-specific knowledge.

Traditional LLMs: Powerful but Potentially Unmoored

Standard LLMs are trained on massive, diverse datasets, enabling them to understand and generate human-like text on a wide array of topics. However, their knowledge is essentially frozen at the point of their last training. This presents several challenges for enterprises:

- Lack of Specific Knowledge: An LLM trained on general internet data won’t inherently know your company’s internal policies, specific product details, or confidential research findings.

- Potential for Hallucination: When faced with questions outside their training data or requiring very specific information, LLMs can sometimes “hallucinate” or generate plausible-sounding but incorrect or fabricated answers.

- Difficulty with Real-Time Updates: Enterprise data is dynamic. New products are launched, policies change, and market conditions evolve. Standard LLMs cannot easily incorporate this new information without extensive retraining.

How RAG Overcomes These Limitations

RAG addresses these shortcomings by integrating a retrieval step into the generation process:

- Retrieval: When a query is posed, the RAG system first searches a specified knowledge base (e.g., internal company documents, a product database, a collection of research papers) for relevant information.

- Augmentation: The retrieved information is then provided as context to the LLM along with the original query.

- Generation: The LLM uses this augmented context to generate a more accurate, relevant, and informed response.

This dynamic retrieval mechanism grounds the LLM’s outputs in the provided data, substantially reducing (though not eliminating) hallucinations and allowing the system to leverage the most current and specific information available. As Samsung SDS notes in its work on SKE-GPT, a RAG-based system, this customization allows for more specialized and reliable AI applications.
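The three steps above can be sketched in a few lines of Python. This is a minimal, illustrative sketch rather than a production design: it assumes a toy bag-of-words cosine similarity in place of the dense embedding model a real system would use, and a hypothetical `generate` callable standing in for whichever LLM API you call. The function names `retrieve`, `augment`, and `rag_answer` are our own.

```python
import math
import re
from collections import Counter
from typing import Callable


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors (toy stand-in for embeddings)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def augment(query: str, passages: list[str]) -> str:
    """Augmentation: place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. If it is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


def rag_answer(query: str, docs: list[str], generate: Callable[[str], str], k: int = 2) -> str:
    """Generation: send the augmented prompt to an LLM (any callable mapping prompt -> text)."""
    return generate(augment(query, retrieve(query, docs, k)))
```

In a real deployment you would replace `retrieve` with a query against a vector database and pass an actual model client as `generate`; the shape of the pipeline (retrieve, augment, generate) stays the same.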

High-Impact RAG Use Cases Transforming Business Operations

Enterprises are increasingly adopting RAG to build sophisticated AI solutions that drive efficiency and innovation. The ability to ground AI responses in proprietary data unlocks a myriad of applications, turning generic AI into a tailored, high-value asset. Here are some key use cases, drawing inspiration from insights shared by industry leaders like Signity Solutions and real-world implementations discussed across professional networks.

Use Case 1: Advanced Customer Support & AI-Powered Agents

The Challenge: Customers demand quick, accurate, and personalized support. Traditional chatbots often frustrate users with generic responses or an inability to handle complex queries, leading to overwhelmed human agents and diminished customer satisfaction.

The RAG Solution: RAG-powered AI agents can access and process vast knowledge bases, including product manuals, FAQs, troubleshooting guides, and even past customer interaction logs. When a customer asks a question, the RAG system retrieves the most relevant snippets of information and provides them to the LLM, which then formulates a precise, context-aware, and helpful answer.

- Proof Point: Companies are actively building AI agents that leverage RAG to handle a significant portion of customer inquiries, from simple questions to more complex troubleshooting. This not only improves response times and accuracy but also frees up human agents to focus on very complex issues that require deeper empathy or problem-solving skills. The LinkedIn discussion on “Enterprise RAG: Real life stories, use cases and challenges” highlights this trend, emphasizing the business value derived from such implementations.

- Example: An e-commerce company could use a RAG system to power its support chatbot. If a customer asks about the warranty policy for a specific product purchased six months ago, the RAG system can retrieve the exact warranty terms for that product along with the customer’s purchase date, allowing the LLM to provide a definitive answer. A generic LLM, by contrast, might only know general warranty concepts.
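Part of what makes such an answer “definitive” is that the date arithmetic can happen in ordinary code over the retrieved records rather than inside the model. The sketch below assumes two hypothetical data sources (a `WARRANTIES` table and an `ORDERS` lookup) and builds the context string a RAG system might hand to the LLM; all product IDs, dates, and terms are invented for illustration.

```python
import calendar
from dataclasses import dataclass
from datetime import date


@dataclass
class WarrantyTerms:
    months: int  # length of coverage in calendar months
    text: str    # policy wording retrieved from the knowledge base


# Hypothetical knowledge sources: a warranty-terms table and an order history.
WARRANTIES = {
    "SKU-123": WarrantyTerms(12, "12-month limited warranty covering manufacturing defects."),
}
ORDERS = {("cust-42", "SKU-123"): date(2024, 1, 15)}


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the last day of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def build_warranty_context(customer_id: str, product_id: str, today: date) -> str:
    """Retrieve terms and purchase date, compute coverage, and return LLM-ready context."""
    terms = WARRANTIES[product_id]
    purchased = ORDERS[(customer_id, product_id)]
    expires = add_months(purchased, terms.months)
    status = "active" if today <= expires else "expired"
    return (
        f"Product {product_id} was purchased on {purchased.isoformat()}. "
        f"Warranty terms: {terms.text} "
        f"Coverage ends {expires.isoformat()} and is {status} as of {today.isoformat()}."
    )
```

The LLM then only has to phrase a fact the system has already established, which is far less error-prone than asking it to reason about dates and policies on its own.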

Use Case 2: Intelligent Knowledge Management & Internal Search

The Challenge: Employees often spend a significant amount of time searching for information scattered across various internal systems – documents, wikis, databases, and shared drives. This inefficiency hampers productivity and can lead to decisions made with incomplete information.

The RAG Solution: RAG can power sophisticated internal search engines and knowledge management platforms. Instead of keyword-based searches that return a list of documents, a RAG-powered system understands the intent behind an employee’s query and can retrieve specific answers or summaries synthesized from multiple relevant documents.

- Proof Point: Samsung SDS’s award-winning SKE-GPT technology is a prime example of RAG applied to enterprise knowledge management. It allows employees to easily find and utilize information from the company’s extensive internal data, improving operational efficiency and knowledge sharing.

- Expert Insight: As outlined by Signity Solutions in their “8 High-Impact Use Cases of RAG,” intelligent search is a cornerstone application. Employees can ask natural language questions like, “What were the key findings from our Q3 market research on widget X?” and receive a concise summary with links to source documents, rather than having to manually sift through reports.
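One way to deliver a summary with links to source documents is to number each retrieved chunk in the prompt, ask the model to cite those numbers, and then map the citations back to their sources. The sketch below assumes that approach; the `Chunk` type, the `[n]` citation convention, and any paths you pass in are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # where the text came from, e.g. a document path or URL
    text: str    # the retrieved passage


def build_cited_context(chunks: list[Chunk]) -> tuple[str, dict[int, str]]:
    """Number each retrieved chunk so the model can cite [1], [2], ... in its answer."""
    lines: list[str] = []
    sources: dict[int, str] = {}
    for i, chunk in enumerate(chunks, start=1):
        lines.append(f"[{i}] {chunk.text}")
        sources[i] = chunk.source
    return "\n".join(lines), sources


def render_citations(answer: str, sources: dict[int, str]) -> str:
    """Append only the sources the answer actually cites, ignoring unknown numbers."""
    cited = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer) if int(n) in sources})
    if not cited:
        return answer
    refs = "\n".join(f"[{n}] {sources[n]}" for n in cited)
    return f"{answer}\n\nSources:\n{refs}"
```

Listing only the cited sources, rather than everything retrieved, keeps the links honest: employees see exactly which documents back each statement.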

Use Case 3: Enhanced Content Creation & Personalization

The Challenge: Creating high-quality, consistent, and personalized content at scale – whether for marketing, technical documentation, or internal communications – is a resource-intensive task.

The RAG Solution: RAG systems can act as powerful co-pilots for content creators. By accessing a company’s existing content library, style guides, product specifications, and customer data, RAG can help draft marketing copy, generate product descriptions, create initial drafts of technical manuals, or even personalize email campaigns.

- Example: Imagine a marketing team that needs to create multiple ad variations for different customer segments. A RAG system, fed with segment profiles and past campaign performance data, can help generate tailored ad copy that resonates more effectively with each audience.

- Example: A software company can use RAG to accelerate the creation of its technical documentation. When a new feature is developed, the RAG system can retrieve information from design documents, code comments, and existing similar feature documentation to draft a base version of the new guide, which technical writers can then refine.
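When drafting from several sources at once (design documents, code comments, similar feature guides), the retrieved material often exceeds what fits in the model’s context window. A simple tactic is to pack the highest-scoring snippets greedily until a budget is reached. The sketch below assumes that tactic and measures the budget in characters as a rough stand-in for tokens; the scores, labels, and function names are illustrative.

```python
def pack_context(snippets: list[tuple[float, str, str]], budget_chars: int) -> list[str]:
    """Greedily keep the highest-scoring snippets that fit within a character budget.

    Each snippet is (relevance_score, source_label, text). The budget is approximate:
    newline separators added later are not counted.
    """
    packed: list[str] = []
    used = 0
    for _score, label, text in sorted(snippets, key=lambda s: s[0], reverse=True):
        entry = f"[{label}] {text}"
        if used + len(entry) > budget_chars:
            continue  # too big for what remains; a smaller, lower-scored snippet may still fit
        packed.append(entry)
        used += len(entry)
    return packed


def build_draft_prompt(feature: str, style_guide: str,
                       snippets: list[tuple[float, str, str]], budget_chars: int = 4000) -> str:
    """Combine the style guide and packed source material into a drafting prompt."""
    source_material = "\n".join(pack_context(snippets, budget_chars))
    return (
        f"Follow this style guide:\n{style_guide}\n\n"
        f"Source material:\n{source_material}\n\n"
        f"Write a first draft of the user guide for: {feature}"
    )
```

Keeping the source label on each snippet lets technical writers trace every sentence of the draft back to the design document or code comment it came from.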

Use Case 4: Streamlined Compliance & Legal Document Analysis

The Challenge: Navigating complex regulatory landscapes and reviewing extensive legal documents is a time-consuming, costly, and high-stakes endeavor. Manual review is prone to human error and can struggle to keep pace with evolving regulations.

The RAG Solution: RAG can be employed to build AI tools that rapidly scan, analyze, and extract key information from legal contracts, compliance documents, and regulatory filings. By indexing relevant legal statutes and internal policies as the retrieval corpus, these systems can help identify potential risks, check adherence to obligations, and quickly locate specific clauses.

- Example: A financial institution could use RAG to review loan agreements for compliance with specific regulatory requirements. The system could flag clauses that deviate from standards or highlight missing information, significantly speeding up the due diligence process.

- Expert Insight: The ability of RAG to ground its analysis in specific, up-to-date regulatory texts makes it invaluable for compliance departments. This helps ensure that the insights provided are based on the actual documented requirements rather than on intelligent guesses.
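A requirement check like this can be framed as retrieval: for each regulatory requirement, find the best-matching clause in the agreement and flag the requirement when nothing matches well enough. The sketch below is an illustrative assumption, not a compliance tool: it scores matches with simple word overlap (Jaccard similarity) in place of embeddings, the 0.2 threshold is arbitrary, and the status labels and requirement IDs are hypothetical. Flagged items would still go to a human reviewer.

```python
import re
from typing import Optional


def words(text: str) -> set[str]:
    """Lowercased alphabetic tokens; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two texts have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def check_requirements(clauses: list[str], requirements: dict[str, str],
                       threshold: float = 0.2) -> dict[str, tuple[str, Optional[str]]]:
    """For each requirement, retrieve the best-matching clause and flag weak matches."""
    report: dict[str, tuple[str, Optional[str]]] = {}
    for req_id, req_text in requirements.items():
        req_words = words(req_text)
        best_score, best_clause = max(
            ((jaccard(req_words, words(c)), c) for c in clauses),
            default=(0.0, None),  # an empty agreement matches nothing
        )
        if best_score >= threshold:
            report[req_id] = ("MATCHED", best_clause)
        else:
            report[req_id] = ("FLAG_FOR_REVIEW", None)
    return report
```

Returning the matched clause alongside each status means a reviewer can confirm the match directly instead of rereading the whole agreement.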

Use Case 5: Code Generation and Developer Assistance

The Challenge: Software development involves repetitive tasks, navigating complex codebases, and adhering to specific coding standards. Accelerating development cycles while maintaining code quality is a constant goal.

The RAG Solution: RAG can assist developers by generating code snippets, explaining complex code sections, or suggesting solutions based on the project’s existing codebase, best practices, and relevant programming libraries. By retrieving context from internal repositories and documentation, RAG-powered developer tools can offer more relevant and accurate coding assistance than generic code generation models.

- Proof Point: The general trend in AI, as seen with Google Cloud’s showcase of GenAI use cases, points towards tools that augment human capabilities. For developers, this means AI that understands their specific project context, which RAG is uniquely positioned to provide.

- Example: A developer working on a large, legacy system needs to implement a new module that interacts with existing components. A RAG-based assistant, having indexed the entire codebase, can suggest appropriate API usage, provide examples of similar implementations within the project, and even draft boilerplate code conforming to established patterns.
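Indexing a codebase usually starts with splitting it into meaningful units rather than fixed-size text windows. For Python sources, the standard-library `ast` module can split a file into function and class chunks with line ranges, which a RAG assistant can then embed and cite. This is a minimal sketch under that assumption; the `CodeChunk` type and the `path:name` labeling scheme are hypothetical, and other languages would need their own parsers.

```python
import ast
from dataclasses import dataclass


@dataclass
class CodeChunk:
    name: str        # "path:symbol" label used when citing the chunk
    kind: str        # "FunctionDef", "AsyncFunctionDef", or "ClassDef"
    start_line: int  # 1-based, line of the def/class keyword (decorators excluded)
    end_line: int    # 1-based, inclusive
    source: str      # exact source text of the definition


def chunk_python_source(path_label: str, source: str) -> list[CodeChunk]:
    """Split Python source into one chunk per function or class definition.

    Methods and nested functions get their own chunks and also remain inside
    their enclosing class or function chunk. Requires Python 3.8+ (end_lineno).
    """
    tree = ast.parse(source)
    lines = source.splitlines()
    chunks: list[CodeChunk] = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            start, end = node.lineno, node.end_lineno
            chunks.append(CodeChunk(
                name=f"{path_label}:{node.name}",
                kind=type(node).__name__,
                start_line=start,
                end_line=end,
                source="\n".join(lines[start - 1:end]),
            ))
    return chunks
```

Keeping file paths and line ranges on every chunk lets the assistant point the developer to the exact existing implementation it is imitating.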

The Business Value: Quantifiable Benefits of RAG Implementation

The adoption of RAG is not merely a technological upgrade; it translates into tangible business value across various facets of an enterprise. As highlighted in discussions like the LinkedIn article on “Enterprise RAG,” companies are realizing significant returns on their RAG investments.

Increased Efficiency and Productivity

By automating information retrieval and providing contextually relevant answers quickly, RAG systems drastically reduce the time employees spend searching for information or performing repetitive data processing tasks. This directly boosts productivity, allowing teams to focus on higher-value activities.

- Data Point: Signity Solutions points out that use cases like automated customer service and internal knowledge discovery lead to significant time savings for employees, translating into cost savings and faster project completion.

Improved Decision-Making with Data-Driven Insights

Access to precise, contextualized information enables better and faster decision-making. Whether it’s a sales team understanding customer needs better or an executive team getting quick summaries of market trends from internal reports, RAG empowers stakeholders with the right information at the right time.

Enhanced Customer and Employee Experience

Customers receive faster, more accurate support, leading to higher satisfaction and loyalty. Employees, equipped with powerful RAG-driven tools, experience less frustration in their daily tasks and can perform their roles more effectively, boosting morale and retention.

- Example: Samsung SDS’s implementation showcases how RAG can improve the employee experience by making vast internal knowledge easily accessible and usable.

Cost Reduction and Resource Optimization

Automation of tasks previously requiring significant manual effort, such as initial document review or first-line customer support, leads to direct cost reductions. Resources can then be reallocated to strategic initiatives that drive growth and innovation.

Navigating Challenges and Ensuring Successful RAG Adoption

While the benefits of RAG are compelling, successful implementation requires careful planning and consideration of potential challenges. The journey to enterprise-grade RAG is not without its complexities.

Data Quality and Preparation

The adage “garbage in, garbage out” holds particularly true for RAG systems. The effectiveness of the retrieval mechanism heavily depends on the quality, organization, and accessibility of the underlying knowledge base. Enterprises must invest in data governance, cleaning, and structuring to ensure the RAG system can find and utilize the correct information.
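Much of this preparation is unglamorous text processing. As one illustration, the sketch below normalizes whitespace, splits documents into overlapping word windows so that text near a chunk boundary also appears in the neighboring chunk, and drops exact duplicate chunks (for example, the same policy pasted into two wikis). The window size, overlap, and function names are assumptions to tune for your own corpus.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace so formatting noise doesn't create spurious duplicates."""
    return re.sub(r"\s+", " ", text).strip()


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = normalize(text).split()
    step = size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def prepare_corpus(documents: dict[str, str], size: int = 200,
                   overlap: int = 40) -> list[tuple[str, str]]:
    """Chunk every document and drop chunks whose normalized text was already seen."""
    seen: set[str] = set()
    prepared: list[tuple[str, str]] = []
    for doc_id, text in documents.items():
        for chunk in chunk_words(text, size, overlap):
            digest = hashlib.sha256(chunk.lower().encode("utf-8")).hexdigest()
            if digest in seen:
                continue  # exact (case-insensitive) duplicate of an earlier chunk
            seen.add(digest)
            prepared.append((doc_id, chunk))
    return prepared
```

Exact-hash deduplication only catches identical chunks; near-duplicates (a policy with one sentence edited) need fuzzier matching and, ideally, a governance decision about which version is authoritative.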

Integration with Existing Systems

RAG solutions often need to integrate seamlessly with existing enterprise applications, databases, and workflows. This can present technical challenges, requiring robust APIs and careful architectural design to ensure smooth data flow and user experience.

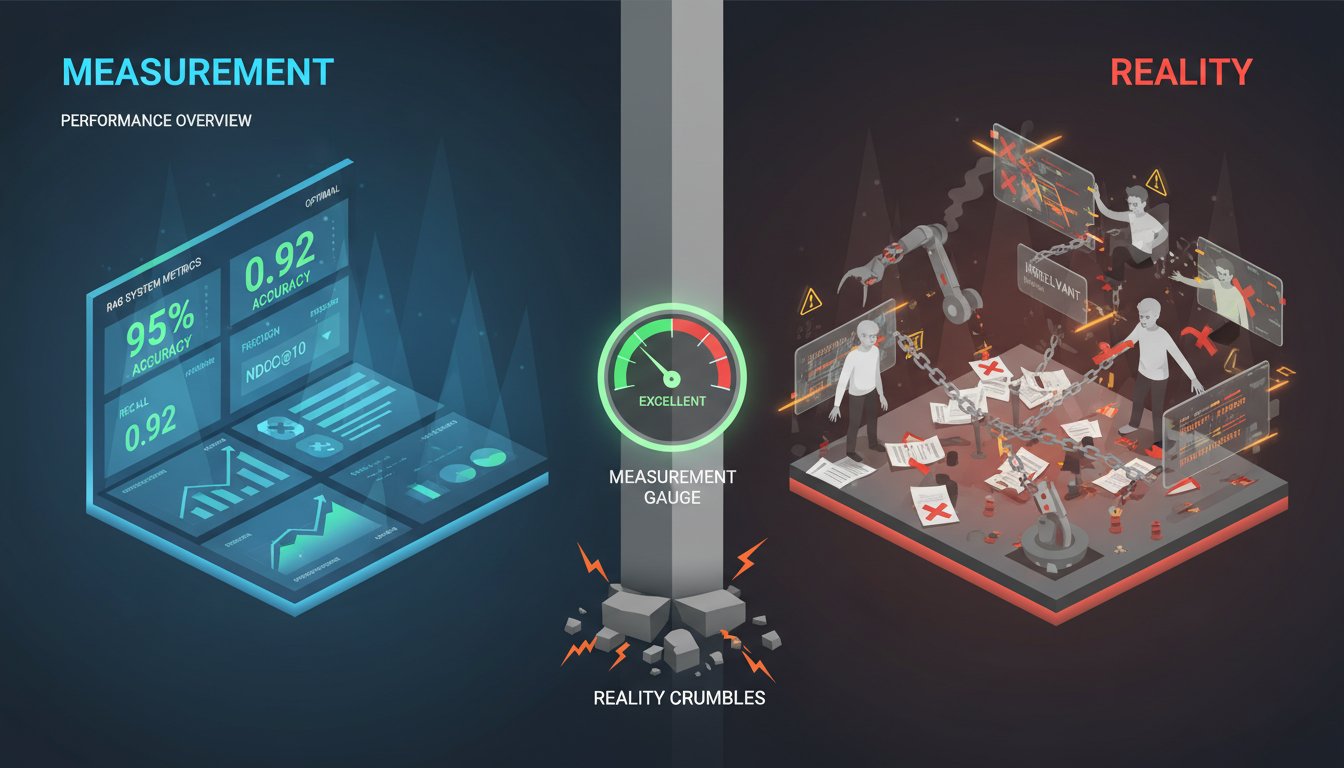

Addressing “Brittleness” and Ensuring Robustness

Community discussions, such as the one on the OpenAI Developer Forum regarding RAG’s potential “brittleness,” highlight a valid concern. If the retriever fails to find the correct documents or if the context provided is misleading, the LLM’s output can still be suboptimal. Building robust RAG systems involves fine-tuning retrieval strategies, implementing fallback mechanisms, and continuous monitoring and evaluation of performance.

- Expert Insight: This doesn’t mean RAG is “dead on arrival,” but rather that enterprises need to be aware of these challenges and engineer solutions that are resilient and adaptable. Techniques like query expansion, hybrid search (combining keyword and semantic search), and re-ranking retrieved documents can improve robustness.
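Hybrid search needs a way to merge a keyword ranking with a semantic ranking whose scores are not directly comparable. One widely used technique is reciprocal rank fusion (RRF), which scores each document by summing 1 / (k + rank) over every ranking it appears in. The sketch below is a minimal RRF implementation; k = 60 is a commonly cited default, and the document IDs in any example are placeholders.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into one ranking.

    Each list is ordered best-first. A document's fused score is the sum of
    1 / (k + rank) across the lists that contain it (rank starts at 1).
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)
```

For example, fusing a keyword ranking `["d1", "d2", "d3"]` with a semantic ranking `["d3", "d1", "d4"]` puts `d1` first, because it ranks highly in both lists. Since RRF uses only rank positions, there is no need to normalize keyword relevance scores against cosine similarities; a re-ranking model can then be applied to the fused top results.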

Choosing the Right RAG Tools and Platforms

The RAG landscape is evolving rapidly, with new tools and platforms emerging. Selecting the right components – from vector databases for storing embeddings to LLMs and orchestration frameworks – is crucial. Organizations need to evaluate options based on their specific needs, scalability requirements, and existing tech stack.

Conclusion

The journey through the diverse applications of Retrieval Augmented Generation reveals a technology that is far more than a fleeting trend. From revolutionizing customer interactions and internal knowledge sharing to accelerating content creation and ensuring compliance, RAG is demonstrably helping enterprises solve real, complex problems with an efficacy that was previously out of reach. By grounding the power of Large Language Models in their own specific, high-value data, organizations are unlocking new levels of efficiency, insight, and competitive advantage.

The path to implementing enterprise-grade RAG involves navigating challenges related to data quality, system integration, and the inherent complexities of AI. However, as success stories from companies like Samsung SDS and the numerous use cases detailed by industry analysts illustrate, the rewards—enhanced productivity, smarter decision-making, and superior stakeholder experiences—are substantial. The ability to make problem-solving significantly easier and more effective is precisely why RAG is rapidly becoming a cornerstone of modern enterprise AI strategy.

CTA

Ready to explore how Retrieval Augmented Generation can transform your specific enterprise challenges and make complex problem-solving easier?

Subscribe to Rag About It for ongoing insights, in-depth tool comparisons, and expert guides on building and deploying powerful, enterprise-grade RAG systems. Visit https://ragaboutit.com to dive deeper into the world of RAG and start your journey towards data-driven innovation.