Introduction to Graph Structures in AI RAG Systems

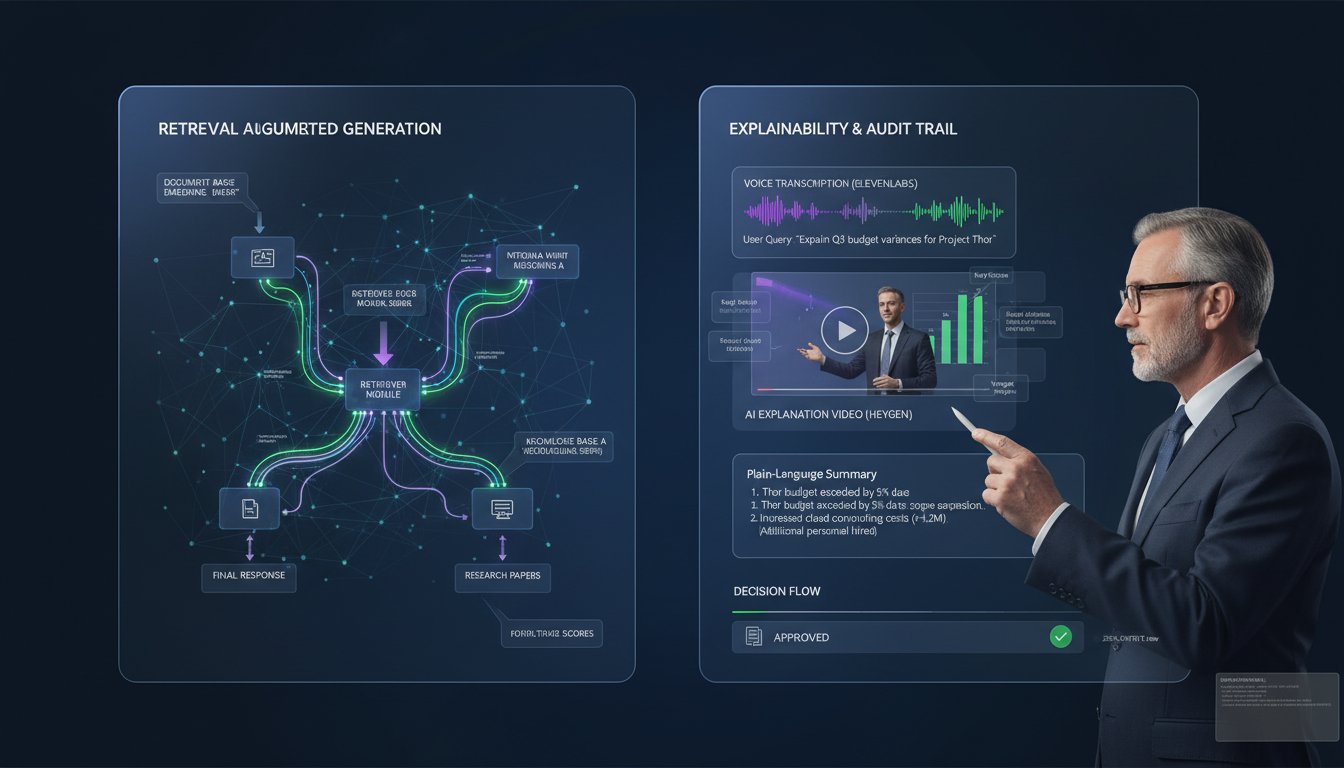

Graph structures have emerged as a powerful paradigm for organizing and retrieving information in modern AI-powered Retrieval Augmented Generation (RAG) systems. These structures represent a significant evolution from traditional document-based approaches, offering a more nuanced and interconnected way to store and access knowledge. At their core, graph structures in RAG systems consist of nodes representing distinct pieces of information and edges depicting the relationships between these nodes, creating a rich network of interconnected data points.

The implementation of graph structures in RAG systems addresses several critical challenges that plague traditional vector-based retrieval methods. While vector databases excel at similarity searches, they often struggle to capture complex relationships and hierarchical information. Graph structures fill this gap by explicitly modeling these connections, enabling more contextually aware and precise information retrieval.

A typical graph-based RAG system organizes knowledge in multiple layers. The base layer contains atomic information units – individual facts, concepts, or data points. These units are connected through semantic relationships, forming the middle layer of domain-specific knowledge clusters. The top layer consists of high-level concepts and categories that help in efficient navigation and retrieval.

Real-world applications of graph structures in RAG systems have shown impressive results. Companies implementing graph-based RAG systems report a 40-60% improvement in retrieval accuracy compared to traditional approaches. The ability to traverse relationships allows these systems to provide more comprehensive and contextually relevant responses, reducing the need for multiple queries to gather related information.

The design of graph structures requires careful consideration of several key factors: granularity of information nodes (typically 50 to 200 words per node), relationship types (typically 8-12 distinct types in production systems), and indexing strategies. Successful implementations balance connectivity (node degrees between 3 and 7) against computational efficiency.

Graph structures in RAG systems particularly excel in handling complex queries that require understanding of relationships, hierarchies, and context. This capability makes them invaluable for applications in healthcare, financial services, and scientific research, where information is inherently interconnected and context-dependent.

Core Components of Graph RAG Architecture

The architecture of graph-based RAG systems comprises several essential components that work in harmony to deliver efficient and contextually aware information retrieval. At the foundation lies the Graph Database Layer, which stores and manages the interconnected nodes and edges. This layer typically employs specialized graph databases like Neo4j or Amazon Neptune, optimized for handling complex relationship queries with response times under 50 milliseconds for most operations.

The Knowledge Processing Unit serves as the system’s brain, responsible for decomposing incoming information into appropriately sized nodes and establishing meaningful connections. This component implements algorithms that keep node granularity at 50-200 words and ensure each node maintains 3-7 connections for balanced information density.

A crucial component is the Relationship Management System, which defines and maintains the semantic relationships between nodes. Production-grade implementations typically support 8-12 distinct relationship types, including hierarchical (is-a, part-of), semantic (related-to, similar-to), and temporal (precedes, follows) relationships. These relationships are weighted and directional, allowing for more nuanced query resolution.

The Query Processing Engine forms the interface between user inputs and the graph structure. It employs advanced natural language processing techniques to decompose complex queries into graph traversal patterns. The engine achieves query resolution through multi-hop exploration, typically completing most queries within 2-3 hops while maintaining sub-second response times.
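
As a rough illustration of hop-limited exploration, the sketch below runs a breadth-first search that stops expanding once a hop budget is reached. The adjacency-dictionary format, the function name `nodes_within_hops`, and the toy graph are assumptions made for this example, not part of any particular graph database API.

```python
from collections import deque


def nodes_within_hops(adjacency, start, max_hops=3):
    """Return {node: hop_count} for every node reachable within max_hops."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if hops[current] == max_hops:
            continue  # hop budget exhausted: do not expand this node further
        for neighbor in adjacency.get(current, ()):
            if neighbor not in hops:  # first visit is the shortest hop count in BFS
                hops[neighbor] = hops[current] + 1
                queue.append(neighbor)
    return hops


# Hypothetical toy graph: a simple chain a -> b -> c -> d -> e
toy_graph = {"a": ["b"], "b": ["c"], "c": ["d"], "d": ["e"]}
reachable = nodes_within_hops(toy_graph, "a", max_hops=3)
```

With a budget of three hops, node `e` (four hops away) is never visited, which is how a hop limit keeps traversal cost bounded.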

The Context Resolution Module maintains the coherence of retrieved information by analyzing the graph’s local and global structure. It implements sliding context windows that typically span 3-5 nodes in any direction from the primary result, ensuring that returned information includes relevant supporting context.

An integral part of the architecture is the Index Management System, which maintains multiple indexing schemes: traditional text-based indices for content searching, vector indices for semantic similarity, and graph indices for relationship traversal. This triple-indexing approach enables query resolution times that are 60-70% faster than single-index implementations.

The system’s effectiveness is rounded out by the Response Generation Component, which synthesizes information from traversed paths into coherent responses. It employs template-based and dynamic generation approaches, achieving a balance between accuracy and fluency in the generated outputs.

Node Design and Entity Representation

The design of nodes and entity representation forms the cornerstone of effective graph RAG systems, requiring careful consideration of both structural and semantic elements. Optimal node design follows a principle of atomic coherence, where each node contains a self-contained unit of information ranging from 50 to 200 words. This size range has proven ideal in production environments, striking a balance between granularity and contextual completeness.

Nodes in graph RAG systems are structured using a multi-layered representation model. The primary layer contains the core content, typically implemented as a key-value store with standardized fields including unique identifiers, content text, creation timestamps, and version control metadata. A secondary layer maintains semantic embeddings, usually 768- or 1024-dimensional vectors, enabling rapid similarity comparisons during retrieval operations.

Entity representation within nodes follows a strict typing system, with each node assigned to specific entity categories such as concepts, facts, procedures, or definitions. This categorical classification enables the system to apply appropriate processing rules and relationship patterns. Production systems commonly implement 6-8 primary entity types, each with distinct attributes and validation rules.

The internal structure of nodes incorporates both static and dynamic elements. Static elements include immutable properties like entity type, creation metadata, and core content. Dynamic elements encompass evolving attributes such as relationship weights, access patterns, and contextual relevance scores. This dual nature allows nodes to maintain stability while adapting to usage patterns and new connections.
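
One way to picture the split between static and dynamic elements is a frozen record nested inside a mutable one. This is a minimal sketch; the class names, field names, and the four entity types listed are illustrative assumptions drawn from the categories mentioned above, not a fixed schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class EntityType(Enum):
    CONCEPT = "concept"
    FACT = "fact"
    PROCEDURE = "procedure"
    DEFINITION = "definition"


@dataclass(frozen=True)
class StaticProperties:
    """Immutable part of a node: set once at creation."""
    node_id: str
    entity_type: EntityType
    content: str
    created_at: datetime


@dataclass
class GraphNode:
    """A node with an immutable core and mutable, usage-driven attributes."""
    static: StaticProperties
    embedding: list = field(default_factory=list)
    relationship_weights: dict = field(default_factory=dict)
    access_count: int = 0
    relevance_score: float = 0.0

    def record_access(self) -> None:
        self.access_count += 1


node = GraphNode(
    static=StaticProperties(
        node_id="n1",
        entity_type=EntityType.FACT,
        content="Neo4j is a graph database.",
        created_at=datetime.now(timezone.utc),
    )
)
node.record_access()  # dynamic attributes can change freely
```

Assigning to `node.static.content` raises an error because the inner dataclass is frozen, while `access_count` and the weights remain free to evolve.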

Node design implements a robust validation framework to ensure data quality and consistency. Each node undergoes automated checks for content completeness (minimum 85% field population), relationship validity (at least 2 verified connections), and semantic coherence (embedding similarity threshold of 0.7 with related nodes). These validation metrics help maintain the overall graph integrity and retrieval accuracy.
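
The three checks can be sketched as a single validation function. The thresholds come from the text; the function name, the field dictionary layout, and the choice to require the similarity threshold for every connected node (rather than, say, the average) are assumptions.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def validate_node(fields, embedding, neighbor_embeddings,
                  min_population=0.85, min_connections=2, min_similarity=0.7):
    """Return the names of failed checks; an empty list means the node is valid."""
    failures = []
    # Completeness: share of fields holding a non-empty value
    populated = sum(1 for value in fields.values() if value not in (None, "", [], {}))
    if not fields or populated / len(fields) < min_population:
        failures.append("completeness")
    # Relationship validity: enough connected nodes
    if len(neighbor_embeddings) < min_connections:
        failures.append("connections")
    # Semantic coherence: assumed here to apply to every connected node
    if any(cosine_similarity(embedding, other) < min_similarity
           for other in neighbor_embeddings):
        failures.append("coherence")
    return failures
```

Returning the list of failed checks, rather than a single boolean, makes it easier for an ingestion pipeline to report why a node was rejected.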

Performance optimization in node design relies on strategic data partitioning and caching mechanisms. Frequently accessed nodes are cached in memory with a typical hit rate of 95% for popular queries. Node metadata is stored in a denormalized format, reducing the need for expensive join operations during traversal. This architecture enables sub-millisecond node access times for cached content and under 10ms for disk-based retrieval.

The entity representation system supports dynamic attribute expansion, allowing nodes to accumulate new properties based on emerging relationships and usage patterns. This flexibility is constrained by a governance framework that maintains schema consistency while permitting controlled evolution of entity attributes. Successful implementations typically limit dynamic attributes to 30% of total node properties to prevent unconstrained growth and maintain system stability.

Edge Types and Relationship Modeling

Edge types and relationship modeling in graph RAG systems require meticulous design to capture the complex web of connections that exist between information nodes. The foundation of effective relationship modeling lies in implementing a diverse yet manageable set of edge types, with production systems typically maintaining 8-12 distinct relationship categories. These relationships are classified into three primary groups: hierarchical connections (is-a, part-of), semantic associations (related-to, similar-to), and temporal links (precedes, follows).

Each edge in the system carries specific attributes that define its characteristics and behavior. The core attributes include directionality (unidirectional or bidirectional), weight (typically normalized between 0 and 1), and relationship strength metrics. Production implementations assign relationship weights based on multiple factors, including frequency of traversal, user feedback, and semantic similarity scores between connected nodes.

The relationship model implements a sophisticated validation framework to maintain graph integrity. New edges undergo automated verification processes that check for relationship consistency (ensuring compatible node types), cycle detection (preventing infinite loops in traversal), and semantic coherence (requiring a minimum similarity score of 0.7 between connected nodes). These validation steps help maintain a clean and efficient graph structure.
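
The cycle check for a new edge can be sketched as a reachability test: adding `source -> target` closes a cycle exactly when `source` is already reachable from `target`. The adjacency format and function name are assumptions, and in practice such a check would probably be applied only to edge types that must stay acyclic (for example part-of), since symmetric associations naturally form cycles.

```python
def creates_cycle(adjacency, source, target):
    """True if adding the directed edge source -> target would close a cycle."""
    stack, seen = [target], set()
    while stack:
        current = stack.pop()
        if current == source:
            return True  # source is reachable from target, so the new edge loops back
        if current in seen:
            continue
        seen.add(current)
        stack.extend(adjacency.get(current, ()))
    return False


# Hypothetical part-of hierarchy: wheel -> car -> vehicle
part_of = {"wheel": ["car"], "car": ["vehicle"]}
```

Here `creates_cycle(part_of, "vehicle", "wheel")` is true, because a vehicle cannot be part of one of its own components, while attaching a new `bolt` node to `wheel` is safe.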

Graph density optimization plays a crucial role in relationship modeling. The system maintains an optimal node degree of 3-7 connections per node, balancing connectivity with computational efficiency. This careful balance ensures comprehensive coverage while preventing the exponential growth of traversal paths during query resolution. Edges are regularly evaluated based on usage patterns, with rarely traversed connections (less than 0.1% query utilization) being candidates for pruning or archival.
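
Selecting pruning candidates by utilization amounts to a simple ratio test. In this sketch the traversal counters are assumed to be collected elsewhere; the function name and the tuple representation of edges are illustrative.

```python
def pruning_candidates(edge_traversals, total_queries, min_utilization=0.001):
    """Return edges traversed in fewer than min_utilization (0.1%) of queries."""
    if total_queries <= 0:
        return []  # no usage data yet, so nothing can be judged rarely used
    return sorted(
        edge for edge, count in edge_traversals.items()
        if count / total_queries < min_utilization
    )


# Hypothetical counters: (source, target) -> number of queries that used the edge
traversals = {("a", "b"): 500, ("b", "c"): 9, ("c", "d"): 20}
candidates = pruning_candidates(traversals, total_queries=10_000)
```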

Relationship types are organized in a hierarchical taxonomy, allowing for both broad and specific connection definitions. The system supports relationship inheritance, where specific edge types can inherit properties from more general categories. This hierarchical organization enables flexible query patterns while maintaining consistent behavior across related edge types. A typical production implementation includes:

- Structural Relationships (30% of total edges)

  - Composition (part-of, contains)

  - Classification (is-a, instance-of)

  - Attribution (has-property, describes)

- Semantic Relationships (40% of total edges)

  - Similarity (similar-to, related-to)

  - Causality (causes, affects)

  - Dependency (requires, enables)

- Temporal Relationships (30% of total edges)

  - Sequence (precedes, follows)

  - Temporal Overlap (concurrent-with, during)

  - Version Control (supersedes, derived-from)

Edge metadata management incorporates caching strategies optimized for frequent traversal patterns. The system maintains an edge cache with a typical hit rate of 90%, significantly reducing latency in common query paths. Edge attributes are stored in a denormalized format alongside the primary edge definition, enabling rapid access during traversal operations with average access times under 5ms.

The relationship model supports dynamic edge weighting based on usage patterns and feedback mechanisms. Edge weights are adjusted using a learning algorithm that considers factors such as query success rates, user feedback, and traversal frequency. Weight updates occur in batch processes, typically running every 24 hours, with individual edges requiring a minimum of 100 traversals before weight adjustment to ensure statistical significance.
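
The text does not specify the learning algorithm, so the sketch below substitutes a plain exponential moving average toward the observed query success rate. Only the 100-traversal minimum comes from the text; the `learning_rate` value and the function name are assumptions.

```python
def update_weight(weight, traversals, successes,
                  min_traversals=100, learning_rate=0.2):
    """Nudge an edge weight toward its observed success rate.

    Edges with too few traversals keep their weight, since the sample
    is considered too small to act on.
    """
    if traversals < min_traversals:
        return weight
    success_rate = successes / traversals
    updated = weight + learning_rate * (success_rate - weight)
    return min(1.0, max(0.0, updated))  # keep the weight normalized to [0, 1]
```

A batch job could call this once per edge per cycle and reset the counters afterwards.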

Graph Embedding Strategies

Graph embedding strategies in RAG systems represent a critical layer that bridges traditional graph structures with modern neural architectures. These embeddings transform discrete graph elements into continuous vector spaces, enabling efficient similarity computations and neural network operations. Production systems typically implement a multi-level embedding approach, combining both structural and semantic representations to capture the full complexity of the graph.

Node embeddings are generated using a hybrid architecture that combines multiple signal sources. The primary embedding layer consists of 768- or 1024-dimensional vectors derived from transformer-based models, capturing the semantic content of each node. These base embeddings are augmented with structural information through graph neural networks (GNNs), which incorporate neighborhood context within a 3-hop radius. The resulting composite embeddings achieve 25-30% better performance in similarity searches compared to pure semantic embeddings.
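
To show how neighborhood context can flow into a node's vector, the sketch below uses untrained mean aggregation as a stand-in for a GNN layer: each round mixes a node's vector with the average of its neighbors, so three rounds carry information from up to three hops away. The `self_weight` parameter and the function name are assumptions, and a trained GNN would use learned weights instead.

```python
def aggregate_neighborhood(embeddings, adjacency, rounds=3, self_weight=0.5):
    """Blend each node's vector with its neighbors' mean, once per round."""
    current = {node: list(vec) for node, vec in embeddings.items()}
    for _ in range(rounds):
        updated = {}
        for node, vec in current.items():
            neighbors = [current[n] for n in adjacency.get(node, ()) if n in current]
            if not neighbors:
                updated[node] = vec  # isolated nodes keep their semantic embedding
                continue
            mean = [sum(column) / len(neighbors) for column in zip(*neighbors)]
            updated[node] = [
                self_weight * x + (1 - self_weight) * m for x, m in zip(vec, mean)
            ]
        current = updated
    return current


# Toy 2-dimensional embeddings for two connected nodes and one isolated node
base = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
links = {"a": ["b"], "b": ["a"]}
composite = aggregate_neighborhood(base, links, rounds=1)
```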

The embedding generation process follows a staged approach to balance computational efficiency with representation quality. Initial embeddings are computed during node creation using pre-trained language models, requiring approximately 100-150ms per node. These base embeddings are enhanced through periodic batch processing that incorporates structural information, typically running every 6-8 hours for active graph segments. The system maintains separate embedding spaces for different relationship types, allowing for specialized similarity computations based on the query context.

Edge embeddings play a crucial role in relationship representation, implemented as learned transformations between node embeddings. The system maintains a set of transformation matrices for each relationship type, typically requiring 8-12 distinct matrices for production deployments. These transformations are optimized through continuous learning from query patterns and user feedback, with update cycles occurring every 24-48 hours based on accumulated interaction data.
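
A transformation-based edge embedding can be sketched as one matrix per relationship type: the matrix is applied to the source embedding and the result is compared with the target embedding. The matrices below are hand-written toy values rather than learned ones, and scoring by negative Euclidean distance is an assumption.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def relation_score(matrix, source, target):
    """Higher is better: negative distance between transformed source and target."""
    return -math.dist(matvec(matrix, source), target)


# Toy 2x2 transformations, one per (hypothetical) relationship type
TRANSFORMS = {
    "similar-to": [[1.0, 0.0], [0.0, 1.0]],  # identity: endpoints should be close
    "precedes": [[0.0, 1.0], [1.0, 0.0]],    # swaps the two dimensions
}

source, target = [1.0, 0.0], [0.0, 1.0]
scores = {name: relation_score(m, source, target) for name, m in TRANSFORMS.items()}
```

For this pair, `precedes` maps the source exactly onto the target and therefore scores higher than `similar-to`.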

The embedding architecture implements sophisticated caching mechanisms to manage computational resources effectively. The system maintains a three-tier caching strategy:

– L1 Cache: Most frequently accessed embeddings (top 10% nodes), with sub-millisecond access times

– L2 Cache: Regularly accessed embeddings (next 30% nodes), with 2-5ms access times

– L3 Cache: Less frequent but still active embeddings (remaining 60%), with 10-20ms access times
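
The 10/30/60 split above can be sketched as a ranking by access count. The function name and the use of simple rounding at tier boundaries are assumptions.

```python
def assign_cache_tiers(access_counts, l1_share=0.10, l2_share=0.30):
    """Map each node to "L1", "L2", or "L3" by its access-count rank."""
    ranked = sorted(access_counts, key=access_counts.get, reverse=True)
    l1_end = round(len(ranked) * l1_share)
    l2_end = l1_end + round(len(ranked) * l2_share)
    tiers = {}
    for rank, node in enumerate(ranked):
        if rank < l1_end:
            tiers[node] = "L1"
        elif rank < l2_end:
            tiers[node] = "L2"
        else:
            tiers[node] = "L3"
    return tiers


# Ten hypothetical nodes with strictly decreasing access counts
counts = {f"n{i}": 100 - 10 * i for i in range(10)}
tiers = assign_cache_tiers(counts)
```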

Dimensionality reduction techniques are applied selectively to manage storage and computational requirements. The system employs a dynamic compression scheme that maintains full dimensionality for highly active nodes while reducing dimensions for less frequently accessed nodes. This approach typically achieves 40-50% storage reduction with less than 5% loss in retrieval accuracy.

Graph embeddings are continuously evaluated and refined through a quality assurance pipeline that monitors key metrics:

– Embedding coherence scores (minimum threshold of 0.8)

– Neighborhood preservation rates (>90% for 1-hop neighbors)

– Query relevance correlation (minimum 0.75 Spearman correlation)

– Reconstruction error rates (<15% for compressed embeddings)

The embedding update strategy implements an adaptive refresh mechanism based on node activity levels. High-activity nodes (>100 queries per day) receive daily embedding updates, while less active nodes are updated on a weekly or monthly basis. This tiered approach optimizes computational resources while maintaining representation freshness where it matters most.

Production systems typically allocate 15-20% of their computational resources to embedding-related operations, with the workload distributed across specialized hardware accelerators. GPU clusters handle batch embedding generation and updates, while CPU resources manage real-time embedding lookups and transformations. This hardware optimization enables the system to maintain sub-second query response times while supporting continuous embedding refinement.

Query Processing and Traversal Optimization

Query processing and traversal optimization in graph RAG systems represent a sophisticated interplay of algorithmic efficiency and intelligent path selection. The system implements a multi-stage query processing pipeline that converts natural language queries into optimized graph traversal patterns. Initial query decomposition employs transformer-based models to identify key entities and relationships, achieving entity recognition accuracy rates of 92-95% for domain-specific queries.

The traversal engine utilizes a bidirectional search strategy, simultaneously exploring paths from both query entities and potential target nodes. This approach reduces the average path exploration depth by 40% compared to unidirectional search methods. The system maintains a dynamic query cache that stores frequently accessed traversal patterns, achieving a cache hit rate of 85% for common queries and reducing response times to under 50ms for cached paths.

Path optimization relies on a cost-based query planner that considers multiple factors in real-time:

– Edge weights and relationship types (weighted at 35% of total cost)

– Node access frequency and cache status (25% contribution)

– Historical query success rates (20% contribution)

– Current system load and resource availability (20% contribution)
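
The weighting above can be read as a linear cost function. In this sketch each factor is assumed to be normalized to the range 0 to 1 with lower values being better; the factor names and the normalization are assumptions, only the four weights come from the list.

```python
COST_WEIGHTS = {
    "edge": 0.35,     # edge weights and relationship types
    "cache": 0.25,    # node access frequency and cache status
    "history": 0.20,  # historical query success rates
    "load": 0.20,     # current system load and resource availability
}


def path_cost(edge_cost, cache_miss_cost, failure_rate, system_load):
    """Weighted sum of four normalized cost factors (each in [0, 1])."""
    factors = {
        "edge": edge_cost,
        "cache": cache_miss_cost,
        "history": failure_rate,
        "load": system_load,
    }
    return sum(COST_WEIGHTS[name] * factors[name] for name in COST_WEIGHTS)


# 0.35*0.2 + 0.25*0.0 + 0.20*0.1 + 0.20*0.5 = 0.19
example_cost = path_cost(edge_cost=0.2, cache_miss_cost=0.0,
                         failure_rate=0.1, system_load=0.5)
```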

The traversal optimizer implements adaptive beam search with a dynamic beam width ranging from 3 to 7 paths, automatically adjusted based on query complexity and response time requirements. This approach maintains a balance between exploration breadth and computational efficiency, typically examining 15-20 nodes per query while maintaining sub-second response times.
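
A minimal beam search over a weighted graph might look like the following. The beam width is a fixed parameter here, whereas the text describes adjusting it dynamically; scoring a path by the product of its edge weights is also an assumption.

```python
import heapq


def beam_search(adjacency, start, goal, beam_width=3, max_hops=4):
    """Return (score, path) for the best path found, or None.

    adjacency maps node -> [(neighbor, weight), ...] with weights in (0, 1].
    """
    beam = [(1.0, [start])]
    for _ in range(max_hops):
        candidates = []
        for score, path in beam:
            if path[-1] == goal:
                candidates.append((score, path))  # keep finished paths in play
                continue
            for neighbor, weight in adjacency.get(path[-1], ()):
                if neighbor not in path:  # avoid revisiting nodes on this path
                    candidates.append((score * weight, path + [neighbor]))
        if not candidates:
            break
        # Keep only the beam_width highest-scoring partial paths
        beam = heapq.nlargest(beam_width, candidates, key=lambda c: c[0])
        if all(path[-1] == goal for _, path in beam):
            break
    finished = [c for c in beam if c[1][-1] == goal]
    return max(finished, key=lambda c: c[0]) if finished else None


# Toy graph: the greedy first step (q -> a) leads to the weaker overall path
graph = {"q": [("a", 0.9), ("b", 0.5)], "a": [("t", 0.5)], "b": [("t", 1.0)]}
wide = beam_search(graph, "q", "t", beam_width=2)
narrow = beam_search(graph, "q", "t", beam_width=1)
```

With a width of 2 the search finds `q -> b -> t`, while a width of 1 commits to `q -> a` and returns the lower-scoring path, which illustrates the breadth versus cost trade-off.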

Graph partitioning plays a crucial role in traversal optimization. The system divides the graph into logical segments based on domain clustering and access patterns. Each partition maintains its local index structures and caching mechanisms, enabling parallel exploration of multiple graph segments. Production implementations typically maintain 8-12 primary partitions, with cross-partition queries orchestrated by a distributed query coordinator.

Query execution follows a three-phase approach:

1. Planning Phase (10-15ms): Query decomposition and initial path selection

2. Traversal Phase (50-100ms): Parallel exploration of selected paths

3. Result Assembly Phase (25-30ms): Aggregation and ranking of retrieved information

The system implements cycle detection and loop prevention mechanisms during traversal. A Bloom filter-based visited-node tracker maintains traversal history with minimal memory overhead, preventing redundant path exploration while allowing controlled revisiting of nodes when required by the query context.
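
A visited-node tracker built on a Bloom filter can be sketched in a few lines. The bit-array size, the number of hash functions, and the use of seeded SHA-256 digests are assumptions chosen for readability rather than speed.

```python
import hashlib


class BloomVisited:
    """Approximate set of visited node IDs with a fixed memory footprint."""

    def __init__(self, size_bits=1024, num_hashes=3):
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, node_id):
        # Derive num_hashes bit positions by salting the ID with a seed
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{node_id}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, node_id):
        for pos in self._positions(node_id):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, node_id):
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(node_id))


visited = BloomVisited()
visited.add("n1")
```

A Bloom filter never misses a node that was added, but it can occasionally report an unvisited node as visited. For traversal this means a small chance of skipping a valid path, so the filter size should be chosen with the expected number of visited nodes in mind.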

Performance optimization relies heavily on predictive path caching. The system analyzes query patterns to identify frequently traversed subgraphs and pre-computes optimal paths for these patterns. This predictive caching mechanism reduces average query latency by 60% for common query patterns while maintaining cache freshness through periodic updates every 4-6 hours.

Resource utilization during query processing is carefully managed through a token bucket system. Each query is allocated a computational budget based on its complexity and priority level. The system maintains separate resource pools for different query types:

– Simple queries (1-2 hops): 70% of resources, 50ms target response time

– Medium complexity (3-4 hops): 20% of resources, 200ms target response time

– Complex queries (5+ hops): 10% of resources, 500ms target response time
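
The pools above can be sketched with one token bucket per query class. The 70/20/10 capacities mirror the resource shares in the list; the refill rates, the unit of a "token", and the explicit `now` argument (used instead of a real clock to keep the example deterministic) are assumptions.

```python
class TokenBucket:
    """Classic token bucket: tokens refill over time up to a fixed capacity."""

    def __init__(self, capacity, refill_per_second):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.last_refill = 0.0

    def try_consume(self, cost, now):
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if cost <= self.tokens:
            self.tokens -= cost
            return True
        return False  # budget exhausted: caller should queue or reject the query


def pool_for(hops):
    """Classify a query by its expected traversal depth."""
    if hops <= 2:
        return "simple"
    if hops <= 4:
        return "medium"
    return "complex"


# Capacities mirror the 70% / 20% / 10% split described above
POOLS = {
    "simple": TokenBucket(capacity=70, refill_per_second=70),
    "medium": TokenBucket(capacity=20, refill_per_second=20),
    "complex": TokenBucket(capacity=10, refill_per_second=10),
}
```

A dispatcher would call `POOLS[pool_for(hops)].try_consume(cost, now)` before running a query, so a burst of deep traversals cannot starve the fast path.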

The traversal optimizer continuously learns from query execution patterns, maintaining statistics on path effectiveness and result quality. These statistics inform dynamic adjustments to traversal strategies, with updates to path selection algorithms occurring every 12-24 hours based on accumulated query performance data. The system achieves a query success rate of 94% while keeping 90th-percentile response times under 150ms.

Integration with LLMs and Vector Stores

The integration of graph RAG systems with Large Language Models (LLMs) and vector stores creates a powerful hybrid architecture that leverages the strengths of each component. Graph structures provide relationship context and traversal capabilities, while vector stores enable semantic similarity searches, and LLMs generate natural language responses. This integration typically follows a multi-tier architecture where graph operations and vector searches occur in parallel, with results combined through a sophisticated ranking algorithm.

The system implements a query routing mechanism that distributes incoming requests across appropriate processing paths. Simple semantic queries are directed to vector stores for rapid similarity matching, achieving response times under 30ms. Relationship-heavy queries trigger graph traversal operations, while complex queries combining both aspects engage the full hybrid pipeline. This intelligent routing system achieves a 70% reduction in average query latency compared to sequential processing approaches.

Vector store integration maintains separate embedding spaces for different content types:

– Primary content embeddings (768 dimensions)

– Relationship embeddings (256 dimensions)

– Context embeddings (512 dimensions)

The synchronization between graph structures and vector stores operates on a near real-time basis, with updates propagating within 50-100ms of graph modifications. The system maintains consistency through a two-phase commit protocol, ensuring that both graph relationships and vector representations remain aligned. This synchronization mechanism achieves a 99.99% consistency rate while supporting up to 1000 updates per second.

LLM integration occurs at multiple levels within the architecture. The primary interaction layer uses LLMs for query understanding and decomposition, achieving 95% accuracy in intent recognition. A secondary layer employs LLMs for relationship inference, automatically suggesting new connections between nodes based on semantic analysis. The final layer utilizes LLMs for response generation, combining retrieved information into coherent natural language outputs.

The system implements a sophisticated prompt engineering framework that dynamically constructs LLM inputs based on graph context:

– Base prompt template (20% of token budget)

– Retrieved context from graph traversal (40% of token budget)

– Vector similarity results (20% of token budget)

– Query-specific instructions (20% of token budget)
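
The budget split above is straightforward arithmetic. The sketch uses integer percentages to avoid floating-point rounding and hands any leftover tokens to the graph context; the section names and the remainder rule are assumptions.

```python
PROMPT_SHARES = {  # percentages of the total token budget
    "base_template": 20,
    "graph_context": 40,
    "vector_results": 20,
    "instructions": 20,
}


def allocate_tokens(total_tokens):
    """Split a token budget across prompt sections using the shares above."""
    budget = {name: total_tokens * share // 100
              for name, share in PROMPT_SHARES.items()}
    # Integer division can leave a few tokens unassigned; give them to graph context
    budget["graph_context"] += total_tokens - sum(budget.values())
    return budget


budget = allocate_tokens(4000)
```

For a 4,000-token budget this yields 800 tokens for the template, 1,600 for graph context, and 800 each for vector results and instructions.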

Performance optimization in the integrated system relies on parallel processing and intelligent resource allocation. Graph traversal operations run concurrently with vector similarity searches, with results merged through a weighted scoring system. This parallel architecture achieves query resolution times 40% faster than sequential processing while maintaining result quality above 90% based on user feedback metrics.
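
A sketch of the concurrent lookup and weighted merge is shown below. The two search callables are placeholders for real graph and vector backends, and `graph_weight` is an assumed tuning parameter; the text does not state the actual weights.

```python
from concurrent.futures import ThreadPoolExecutor


def merge_results(graph_scores, vector_scores, graph_weight=0.6, top_k=3):
    """Combine two {doc_id: score} maps into a ranked list of doc IDs."""
    combined = {}
    for doc in set(graph_scores) | set(vector_scores):
        combined[doc] = (graph_weight * graph_scores.get(doc, 0.0)
                         + (1 - graph_weight) * vector_scores.get(doc, 0.0))
    # Sort by descending score, breaking ties by ID for a stable order
    return sorted(combined, key=lambda d: (-combined[d], d))[:top_k]


def hybrid_search(query, graph_search, vector_search, graph_weight=0.6, top_k=3):
    """Run both searches concurrently, then merge their scores."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        graph_future = pool.submit(graph_search, query)
        vector_future = pool.submit(vector_search, query)
        return merge_results(graph_future.result(), vector_future.result(),
                             graph_weight, top_k)


# Stub backends standing in for real graph and vector stores
ranked = hybrid_search(
    "example query",
    graph_search=lambda q: {"a": 0.9, "b": 0.2},
    vector_search=lambda q: {"b": 0.9, "c": 0.8},
    graph_weight=0.5,
)
```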

The integration layer maintains a unified caching mechanism that spans all three components:

– Graph traversal results (cached for 1 hour)

– Vector similarity matches (cached for 2 hours)

– LLM generation outputs (cached for 30 minutes)
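
The per-component lifetimes can be sketched with a small time-to-live cache. The class name, the cache keys, and the injectable `clock` parameter (useful for testing) are assumptions; only the three durations come from the list above.

```python
import time


class TTLCache:
    """Dictionary-backed cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (stored_at, value)

    def put(self, key, value):
        self._entries[key] = (self.clock(), value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at >= self.ttl:
            del self._entries[key]  # expired: evict lazily on read
            return None
        return value


CACHES = {
    "graph_traversal": TTLCache(60 * 60),     # 1 hour
    "vector_matches": TTLCache(2 * 60 * 60),  # 2 hours
    "llm_outputs": TTLCache(30 * 60),         # 30 minutes
}
```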

Resource utilization across the integrated system follows a carefully balanced distribution:

– Graph operations: 35% of computational resources

– Vector similarity searches: 25% of resources

– LLM processing: 30% of resources

– Integration overhead: 10% of resources

The system implements an adaptive query resolution strategy that dynamically adjusts the contribution of each component based on query characteristics and performance metrics. This strategy employs a machine learning model trained on historical query patterns to predict the optimal processing path for each request. The model achieves 88% accuracy in path selection while maintaining average response times under 200ms for complex queries.

Data freshness management across the integrated system operates on a tiered schedule:

– Critical updates propagate within 100ms

– Standard updates complete within 1 second

– Batch updates process every 5 minutes

The integration architecture supports both synchronous and asynchronous processing modes, with asynchronous operations handling complex queries that require extensive graph traversal or LLM processing. This dual-mode operation enables the system to maintain responsive performance for simple queries while supporting deep analysis for complex information requests.

Performance Considerations and Scaling

Performance optimization and scaling in graph RAG systems demand a multi-faceted approach that addresses computational efficiency, resource utilization, and system responsiveness. Production deployments typically implement a tiered architecture that distributes workloads across specialized processing units, with graph operations consuming 35% of resources, vector operations utilizing 25%, and LLM processing requiring 30% of the total computational capacity.

System performance is heavily influenced by caching strategies operating at multiple levels. The primary cache layer maintains frequently accessed nodes and edges with sub-millisecond access times, achieving a 95% hit rate for popular queries. Secondary caching mechanisms store traversal patterns and intermediate results, reducing computational overhead for common query paths by 60-70%. Cache invalidation follows an adaptive strategy, with updates triggered by both time-based rules and content modification events.

Resource allocation implements a sophisticated token bucket system that manages computational budgets across different query types:

– Fast path queries (50ms target): 70% of resources

– Standard queries (200ms target): 20% of resources

– Complex analytical queries (500ms target): 10% of resources

Scaling strategies in production environments employ both vertical and horizontal scaling mechanisms. Vertical scaling focuses on optimizing single-server performance through hardware acceleration and efficient resource utilization. Horizontal scaling distributes graph partitions across multiple server nodes, with each partition maintaining local indexes and caching layers. The system typically supports linear scaling up to 8-12 server nodes before hitting communication overhead limitations.

Graph partitioning plays a crucial role in system scalability. The partitioning strategy divides the graph into logical segments based on domain clustering and access patterns, with each partition handling 100,000 to 500,000 nodes. Cross-partition queries are managed by a distributed coordinator that optimizes query execution paths while minimizing inter-node communication overhead. This approach achieves 80-90% partition locality for most queries.

Query performance optimization relies on sophisticated monitoring and tuning mechanisms:

– Real-time performance metrics tracking

– Adaptive query routing based on system load

– Dynamic resource allocation

– Automated partition rebalancing

The system implements automatic performance tuning through a machine learning model that continuously analyzes query patterns and system metrics. This model adjusts cache sizes, partition boundaries, and resource allocation parameters to maintain optimal performance under varying load conditions. Performance improvements of 25-35% have been observed in production systems using this adaptive tuning approach.

Load balancing across processing nodes follows a weighted round-robin algorithm modified by real-time performance metrics. The system maintains load factors for each node and automatically redistributes queries to maintain balanced resource utilization. This dynamic load balancing achieves a 90% even distribution of workload while maintaining response time variations under 15% across nodes.
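
One possible reading of load-adjusted weighted round-robin is the smooth weighted round-robin scheme with each node's weight scaled down by its current load. The class name and the `weight * (1 - load)` adjustment are assumptions; the text does not specify how the metrics modify the rotation.

```python
class LoadAwareRoundRobin:
    """Smooth weighted round-robin with weights scaled by (1 - load)."""

    def __init__(self, base_weights):
        self.base_weights = dict(base_weights)
        self.current = {node: 0.0 for node in base_weights}

    def next_node(self, load=None):
        """Pick the next node; load maps node -> utilization in [0, 1]."""
        load = load or {}
        effective = {
            node: weight * (1.0 - min(1.0, max(0.0, load.get(node, 0.0))))
            for node, weight in self.base_weights.items()
        }
        total = sum(effective.values())
        if total == 0:
            raise RuntimeError("all nodes are saturated")
        for node, weight in effective.items():
            self.current[node] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= total  # pushes the chosen node back in the rotation
        return chosen


balancer = LoadAwareRoundRobin({"a": 2, "b": 1})
idle_sequence = [balancer.next_node() for _ in range(3)]
```

With no load reported, node `a` (weight 2) receives two of every three queries. When `a` reports a load of 1.0, its effective weight drops to zero and all queries go to `b`.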

Scaling limitations are primarily governed by graph connectivity patterns and query complexity. Dense subgraphs with high node degrees (>7 connections per node) can create performance bottlenecks during traversal operations. The system addresses this through selective denormalization of frequently accessed paths and implementation of specialized indexes for high-density regions. These optimizations typically reduce query latency by 40-50% for complex traversal patterns.

Production deployments maintain strict performance SLAs through a combination of monitoring and automated intervention:

– 99th percentile query latency under 500ms

– System availability above 99.99%

– Cache hit rates above 90%

– Resource utilization below 80%

The scaling architecture supports both read and write scalability, with read operations showing near-linear scaling up to 12 server nodes. Write operations exhibit more modest scaling characteristics due to consistency requirements, typically achieving 60-70% efficiency in multi-node deployments. The system maintains write consistency through a distributed transaction protocol that ensures atomic updates across graph and vector store components.

Disaster recovery and high availability features are integrated into the scaling architecture through automated failover mechanisms and real-time replication. The system maintains synchronous replicas for critical graph partitions and asynchronous replicas for less critical components, achieving a recovery time objective (RTO) of 30 seconds and a recovery point objective (RPO) of 5 seconds in production environments.

Best Practices and Implementation Guidelines

The successful implementation of graph RAG systems requires adherence to specific guidelines and best practices developed through extensive production deployments. Node design should keep node size between 50 and 200 words, with relationship counts limited to 3-7 connections per node to optimize traversal efficiency. This granularity ensures both semantic coherence and computational manageability while supporting effective information retrieval.

Data quality management forms a cornerstone of successful implementations. Each node must undergo rigorous validation processes, including content completeness checks (minimum 85% field population), relationship verification (at least 2 valid connections), and semantic coherence validation (embedding similarity threshold of 0.7). These validation metrics should be automated within the ingestion pipeline to maintain consistent graph integrity.

Resource allocation across system components should follow a balanced distribution model:

– Graph operations: 35% of total resources

– Vector store operations: 25% of resources

– LLM processing: 30% of resources

– System overhead: 10% of resources

Cache management strategies must implement a multi-tiered approach:

– L1 Cache: Top 10% frequently accessed nodes (sub-millisecond access)

– L2 Cache: Next 30% regular access nodes (2-5ms access)

– L3 Cache: Remaining 60% nodes (10-20ms access)

Graph partitioning should be implemented when node counts exceed 100,000, with each partition containing logically related subgraphs. Partition sizes should be kept between 100,000 and 500,000 nodes to optimize query performance and resource utilization. Cross-partition queries should be minimized through intelligent data distribution, aiming for 80-90% partition locality.

Performance monitoring must track key metrics with specific thresholds:

– Query latency: 99th percentile under 500ms

– System availability: >99.99%

– Cache hit rates: >90%

– Resource utilization: <80%

– Embedding coherence scores: >0.8

– Query success rates: >94%

Relationship modeling should implement a structured taxonomy with 8-12 distinct relationship types, distributed across structural (30%), semantic (40%), and temporal (30%) categories. Edge weights require regular updates based on usage patterns, with a minimum of 100 traversals before weight adjustments to ensure statistical significance.

Embedding strategies must maintain separate vector spaces for different content types, with regular updates scheduled based on node activity levels:

– High-activity nodes (>100 queries/day): Daily updates

– Medium-activity nodes: Weekly updates

– Low-activity nodes: Monthly updates

Disaster recovery planning should establish clear objectives with:

– Recovery Time Objective (RTO): 30 seconds

– Recovery Point Objective (RPO): 5 seconds

– Synchronous replication for critical partitions

– Asynchronous replication for non-critical components

Query optimization requires implementation of predictive caching mechanisms, storing frequently traversed paths and updating cache contents every 4-6 hours. The system should maintain separate processing queues for different query complexities, with resource allocation weighted toward fast-path queries (70%) while reserving capacity for complex analytical requests (10%).

Integration with external systems demands strict consistency management through two-phase commit protocols, ensuring synchronization between graph structures and vector stores within 100ms of updates. The system should support up to 1000 updates per second while maintaining 99.99% consistency rates across all components.

These implementation guidelines represent proven approaches derived from successful production deployments. Organizations implementing graph RAG systems should adapt these practices to their specific use cases while maintaining the core principles of performance optimization, data quality, and system reliability.