Retrieval-Augmented Generation (RAG) is a natural language processing (NLP) technique that combines retrieval-based and generative models. It grounds a language model's output in external knowledge drawn from large text corpora, which helps the model produce more accurate and contextually relevant responses. Amazon Web Services (AWS) offers a suite of tools and services for implementing RAG solutions. This article covers the architecture, implementation, and benefits of deploying RAG on AWS.

Introduction to Retrieval-Augmented Generation (RAG)

RAG models combine retrieval and generation to produce high-quality responses. The retrieval component fetches relevant information from a knowledge base, and the generative model uses that information to produce a coherent, contextually appropriate answer. This hybrid approach addresses a key limitation of purely generative models: their knowledge is fixed at training time, so they can produce fluent answers that are factually wrong or out of date.

AWS Services for RAG Implementation

AWS provides a robust ecosystem for deploying RAG solutions, featuring services like Amazon Bedrock, Amazon Kendra, and Amazon SageMaker. These services offer a range of capabilities, from data ingestion and preprocessing to model training and deployment.

Amazon Bedrock

Amazon Bedrock is a fully managed service that simplifies the development of generative AI applications. It offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon itself. Bedrock’s serverless architecture ensures that users do not have to manage any infrastructure, making it easier to integrate and deploy generative AI capabilities securely and efficiently[^1^].

Amazon Kendra

Amazon Kendra is an enterprise search service powered by machine learning. Its Retrieve API, combined with Kendra's semantic ranker, can serve as the enterprise retriever in a RAG workflow. Because Kendra ranks passages by semantic relevance rather than by keyword matching alone, it can return more relevant passages from large document collections, which makes it a good fit for RAG implementations[^2^].
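As a rough illustration of how a retriever built on the Retrieve API might look, the sketch below wraps the call in a small helper. The helper name `retrieve_passages`, the index ID, and the stub client are assumptions made for this example; with real credentials the client would typically be created with `boto3.client("kendra")`, and the stub only mimics the general shape of a Retrieve response so the snippet runs offline.

```python
from typing import Any, Dict, List


def retrieve_passages(kendra_client: Any, index_id: str, query: str,
                      page_size: int = 3) -> List[Dict[str, str]]:
    """Call the Kendra Retrieve API and keep the fields a RAG prompt needs."""
    response = kendra_client.retrieve(
        IndexId=index_id,
        QueryText=query,
        PageSize=page_size,
    )
    passages = []
    for item in response.get("ResultItems", []):
        passages.append({
            "title": item.get("DocumentTitle", ""),
            "uri": item.get("DocumentURI", ""),
            "text": item.get("Content", ""),
        })
    return passages


class StubKendraClient:
    """Offline stand-in (hypothetical) that mimics a Retrieve response."""

    def retrieve(self, **kwargs: Any) -> Dict[str, Any]:
        return {
            "ResultItems": [
                {
                    "DocumentTitle": "Leave policy",
                    "DocumentURI": "s3://example-bucket/leave.pdf",
                    "Content": "Employees accrue 1.5 days of leave per month.",
                }
            ]
        }


# Swap StubKendraClient() for boto3.client("kendra") to query a real index.
passages = retrieve_passages(
    StubKendraClient(), "hypothetical-index-id", "How much leave do I accrue?"
)
print(passages[0]["title"])
```

Passing the client in as an argument keeps the helper easy to test without AWS access; the returned passages can then be placed into the prompt for the generative model.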

Amazon SageMaker

Amazon SageMaker is a comprehensive machine learning service that enables developers to build, train, and deploy machine learning models at scale. SageMaker JumpStart, a feature within SageMaker, offers prebuilt models and code examples to accelerate RAG implementation. It also provides tools for data preprocessing, model training, and evaluation[^3^].

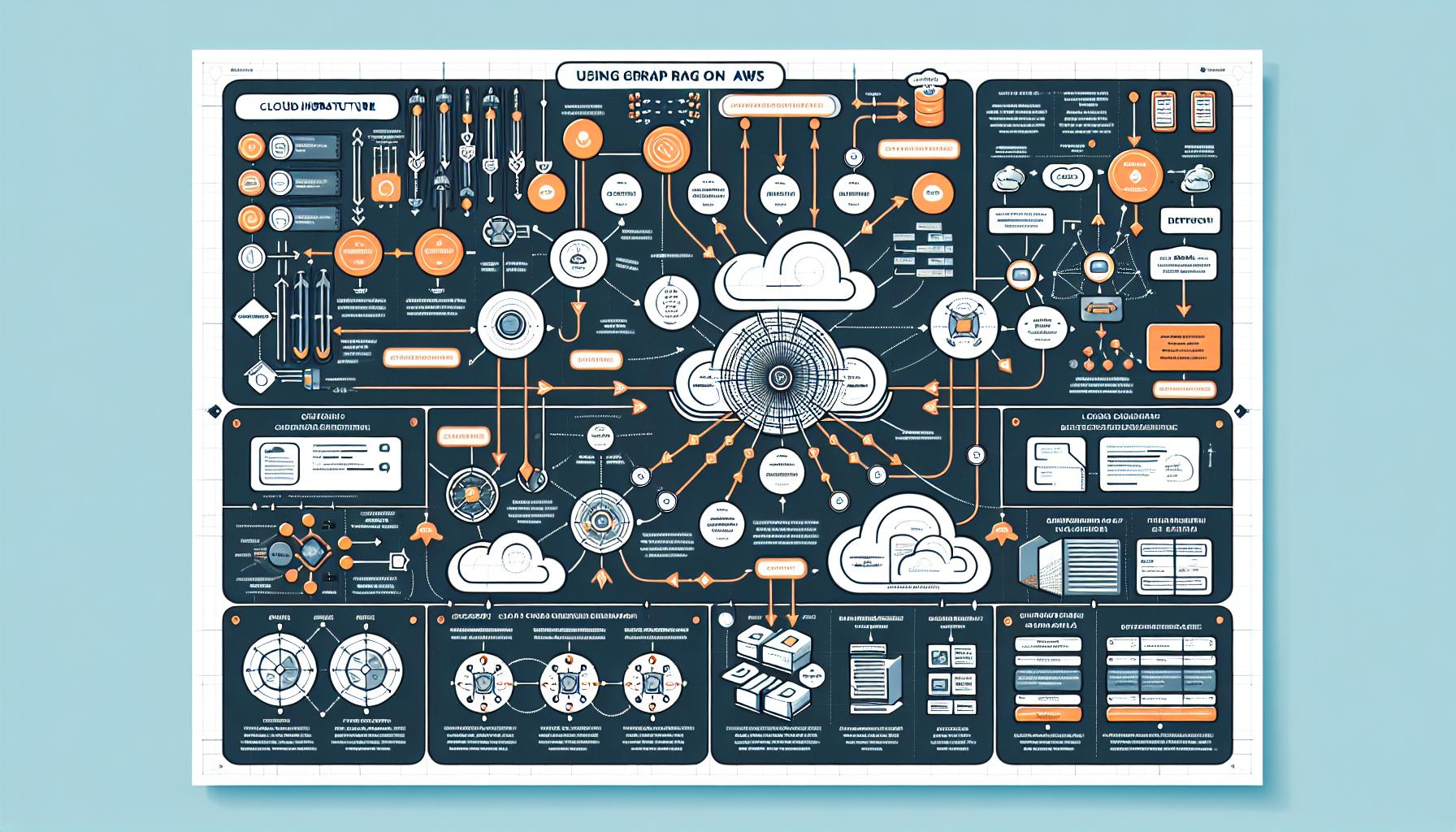

Architecture of RAG on AWS

The architecture of a RAG system on AWS typically involves several key components, including data storage, retrieval mechanisms, and generative models. The following sections outline the architecture in detail.

Data Storage

Data storage is a critical component of any RAG system. AWS offers Amazon Simple Storage Service (Amazon S3) as a scalable and secure storage solution. For example, a dataset of radiology reports, each pairing a findings section with its impression, can be uploaded to Amazon S3 and then ingested using Knowledge Bases for Amazon Bedrock[^4^].

Retrieval Mechanism

The retrieval mechanism converts the user query into a vector embedding, searches the knowledge base for the passages whose embeddings are most similar, and returns the top results. The Amazon Titan Text Embeddings model, available through Amazon Bedrock, can be used to generate these embeddings. The retrieved passages are then used to augment the prompt for the generative model[^5^].
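The similarity search at the heart of this step can be sketched in a few lines. The example below assumes cosine similarity as the ranking measure and uses toy three-dimensional vectors in place of real embeddings; the document IDs and function names are made up for illustration, and a production system would delegate this search to a vector store.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float], index: Dict[str, Sequence[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    """Rank every indexed vector against the query and keep the k best."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy vectors standing in for embeddings from an embedding model.
index = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.0, 1.0, 0.0],
    "doc-c": [0.7, 0.7, 0.0],
}
results = top_k([1.0, 0.1, 0.0], index, k=2)
print([doc_id for doc_id, _ in results])
```

This brute-force scan compares the query against every stored vector, which is fine for a handful of documents; large knowledge bases generally rely on approximate nearest-neighbor indexes instead.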

Generative Model

The generative model, such as Claude 3 Sonnet, is invoked to generate the final response based on the augmented prompt. This model leverages the retrieved information to produce more accurate and contextually relevant outputs[^6^].
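To make the idea of an augmented prompt concrete, here is a minimal sketch that places retrieved passages ahead of the question and wraps the result in a request body for the Anthropic Messages format used by Claude models on Amazon Bedrock. The prompt wording, the function names, and the sample passages are assumptions for this example, and the model ID mentioned in the comment should be checked against the models enabled in your account.

```python
import json
from typing import List


def build_augmented_prompt(question: str, passages: List[str]) -> str:
    """Number the retrieved passages and place them ahead of the question."""
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def build_claude_request(prompt: str, max_tokens: int = 512) -> str:
    """Serialize a Messages-format request body for a Claude model on Bedrock."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    }
    return json.dumps(body)


prompt = build_augmented_prompt(
    "What is the recommended follow-up?",
    ["Finding: small nodule in the left lung.",
     "Guideline: repeat imaging in six months."],
)
request_body = build_claude_request(prompt)
# With boto3, this body could be sent via the bedrock-runtime client, e.g.
# invoke_model(modelId="anthropic.claude-3-sonnet-20240229-v1:0", body=request_body)
print(prompt)
```

Numbering the passages lets the model refer to its sources, and the explicit instruction to rely only on the supplied context is one common way to discourage unsupported answers.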

Reference Architecture

The reference architecture diagram in Figure 3 of the AWS blog illustrates the fully managed RAG pattern with Knowledge Bases for Amazon Bedrock. This architecture converts user queries into embeddings, searches the knowledge base, obtains relevant results, augments the prompt, and then invokes an LLM to generate the response[^7^].
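In the fully managed pattern, the embed, search, augment, and generate steps collapse into a single call to the Bedrock Agent Runtime `RetrieveAndGenerate` operation. The sketch below assumes a client created with `boto3.client("bedrock-agent-runtime")`; the helper name, the knowledge base ID, and the stub client are placeholders for illustration, and the model ARN is an example value to verify for your Region.

```python
from typing import Any, Dict


def ask_knowledge_base(agent_runtime_client: Any, knowledge_base_id: str,
                       model_arn: str, question: str) -> str:
    """Run the managed retrieve-augment-generate flow and return the answer text."""
    response = agent_runtime_client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    )
    return response["output"]["text"]


class StubAgentRuntimeClient:
    """Offline stand-in (hypothetical) that echoes the question back."""

    def retrieve_and_generate(self, **kwargs: Any) -> Dict[str, Any]:
        question = kwargs["input"]["text"]
        return {"output": {"text": f"Stub answer to: {question}"}, "citations": []}


# Placeholder identifiers; replace with real values and a boto3 client.
answer = ask_knowledge_base(
    StubAgentRuntimeClient(),
    "HYPOTHETICAL_KB_ID",
    "arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-sonnet-20240229-v1:0",
    "What does the report conclude?",
)
print(answer)
```

The trade-off of the managed call is less control over chunk selection and prompt wording in exchange for far less code to maintain.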

Implementation Steps

Implementing a RAG system on AWS involves several key steps, including data preprocessing, model training, and evaluation. The following sections provide a detailed overview of each step.

Data Preprocessing

Data preprocessing is essential for preparing the dataset for training and retrieval. This step involves cleaning the data, generating vector embeddings, and storing the processed data in a suitable format. Amazon SageMaker provides tools and notebooks to facilitate data preprocessing[^8^].
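One common preprocessing step before generating embeddings is splitting long documents into overlapping chunks, so that each chunk fits the embedding model's input limit and context is not lost at chunk boundaries. The sketch below assumes word-based chunking with arbitrary example sizes; the function name and defaults are illustrative, not values prescribed by any AWS service.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into chunks of up to chunk_size words, sharing overlap words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()  # also collapses repeated whitespace and newlines
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(sample, chunk_size=4, overlap=1)
print(chunks)
```

Each chunk would then be embedded and stored alongside its source reference; larger overlaps improve continuity at the cost of more stored vectors.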

Model Training

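Model training in this context means fine-tuning the generative model on the preprocessed data. Fine-tuning is optional in a RAG system, because retrieval already supplies domain knowledge at query time, but it can help the model adapt to a domain's style and terminology. Amazon SageMaker JumpStart offers prebuilt models and code examples to accelerate this process, and the training process can be customized to the specific requirements of the RAG system[^9^].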

Evaluation

The performance of the RAG system is evaluated using metrics such as the ROUGE score, which measures how much of the wording of a reference output (for example, overlapping words or word sequences) also appears in the generated response. AWS provides tools for performance analysis and evaluation that help verify whether the RAG system meets the desired accuracy and relevance criteria[^10^].
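To show what a ROUGE score captures, the sketch below computes ROUGE-1, the unigram-overlap variant, from scratch. It assumes simple lowercase whitespace tokenization and omits the stemming that full ROUGE implementations usually offer, so its numbers may differ slightly from those of an established evaluation package.

```python
from collections import Counter
from typing import Dict


def rouge_1(candidate: str, reference: str) -> Dict[str, float]:
    """Unigram-overlap precision, recall, and F1 between two texts."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge_1("the cat sat on the mat", "the cat lay on the mat")
print(scores)
```

In the example, five of the six words in each sentence match, so precision, recall, and F1 all equal 5/6. Overlap metrics reward shared wording rather than factual correctness, so they are usually paired with human review or task-specific checks.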

Benefits of RAG on AWS

Deploying RAG on AWS offers several benefits, including scalability, security, and cost optimization. The following sections highlight these benefits in detail.

Scalability

Managed services such as Amazon Bedrock and Amazon Kendra take care of infrastructure provisioning, so RAG systems can scale to handle varying workloads with little manual intervention. Amazon Bedrock in particular is serverless, which means there is no capacity to provision or manage for model inference[^11^].

Security

Security is a top priority for AWS, and its services are designed to meet the highest security standards. Amazon Bedrock offers enterprise-grade security and privacy, ensuring that sensitive data is protected throughout the RAG workflow. Additionally, AWS provides tools like Amazon CloudWatch and AWS CloudTrail for monitoring and logging, enabling proactive management of security events[^12^].

Cost Optimization

AWS offers flexible pricing models, such as pay-per-use and reserved instances, allowing organizations to align costs with actual usage patterns. Tools like AWS Cost Explorer and AWS Budgets help monitor and manage costs, ensuring that RAG deployments remain cost-effective over time[^13^].

Comparative Analysis: AWS vs. Azure vs. GCP

When comparing AWS with other cloud providers like Azure and Google Cloud Platform (GCP) for RAG deployment, each platform offers unique strengths and capabilities. The following sections provide a comparative analysis of these platforms.

AWS

AWS offers a comprehensive and flexible ecosystem for deploying RAG solutions. With managed services like Amazon Kendra and Amazon Bedrock, as well as customizable components like AWS Lambda and Amazon DynamoDB, AWS provides a robust platform for building scalable RAG architectures. Additionally, AWS’s security, monitoring, and cost optimization features ensure the long-term success of RAG deployments[^14^].

Azure

Azure emphasizes security and seamless integration with Microsoft products, making it an attractive option for organizations already invested in the Microsoft ecosystem. Azure AI Search (formerly Azure Cognitive Search) and Azure OpenAI Service provide powerful tools for implementing RAG solutions. However, Azure’s offerings may not be as extensive or flexible as AWS’s[^15^].

GCP

Google Cloud Platform (GCP) offers a streamlined approach to RAG deployment with its Vertex AI platform. Vertex AI provides tools for data preprocessing, model training, and deployment, along with Vertex AI Vector Search (formerly Matching Engine) for efficient vector similarity search. GCP’s serverless deployment options and cost optimization features make it a strong contender for businesses prioritizing scalability and cost-effectiveness[^16^].

Conclusion

AWS provides a comprehensive and flexible platform for deploying Retrieval-Augmented Generation (RAG) solutions. Amazon Bedrock, Amazon Kendra, and Amazon SageMaker together supply the tools and infrastructure needed to build robust and scalable RAG systems. The scalability, security, and cost optimization features described above make AWS a strong choice for organizations looking to enhance their AI capabilities.

While Azure and GCP also offer robust solutions for RAG deployment, AWS’s extensive offerings and flexibility make it a strong contender for businesses seeking customization and integration with a wide range of services. By leveraging AWS’s powerful tools and services, organizations can harness the potential of RAG to deliver accurate, contextually relevant, and timely responses to user queries, ultimately driving innovation and enhancing customer experiences.