The game just changed. Again.

Anthhropic’s Claude Opus 4.6 didn’t just launch with incremental improvements—it arrived with multi-agent teams and a quiet but seismic shift buried in the technical details: contextual memory is replacing traditional Retrieval-Augmented Generation as the primary knowledge architecture for agentic AI systems. For enterprise teams who spent the last 18 months building sophisticated RAG pipelines, this isn’t just another model update. It’s an architectural reckoning that forces a fundamental question: Are we building yesterday’s infrastructure for tomorrow’s AI?

The implications stretch far beyond a single model release. Industry analysts are now predicting that contextual memory will surpass RAG in agentic AI usage throughout 2026, marking the end of RAG’s dominance as the default pattern for enterprise knowledge systems. If they’re right, every vector database, embedding pipeline, and retrieval optimization you’ve implemented might be solving the wrong problem. Let’s unpack what’s actually happening, why it matters, and what enterprise RAG teams should do right now.

The Claude Opus 4.6 Architecture Shift Nobody’s Talking About

When Anthropic announced Claude Opus 4.6 in early February 2026, most coverage focused on the headline features: multi-agent teams capable of long-horizon task execution, customizable agentic plug-ins for enterprise workflows, and deployment across legal, customer support, and marketing departments. These are significant capabilities that position Claude as infrastructure rather than just a tool.

But the real story lives in the architectural decisions underneath. Claude Opus 4.6’s multi-agent teams don’t rely primarily on traditional RAG retrieval patterns. Instead, they leverage persistent contextual memory—a fundamentally different approach to how AI systems access and utilize knowledge over time.

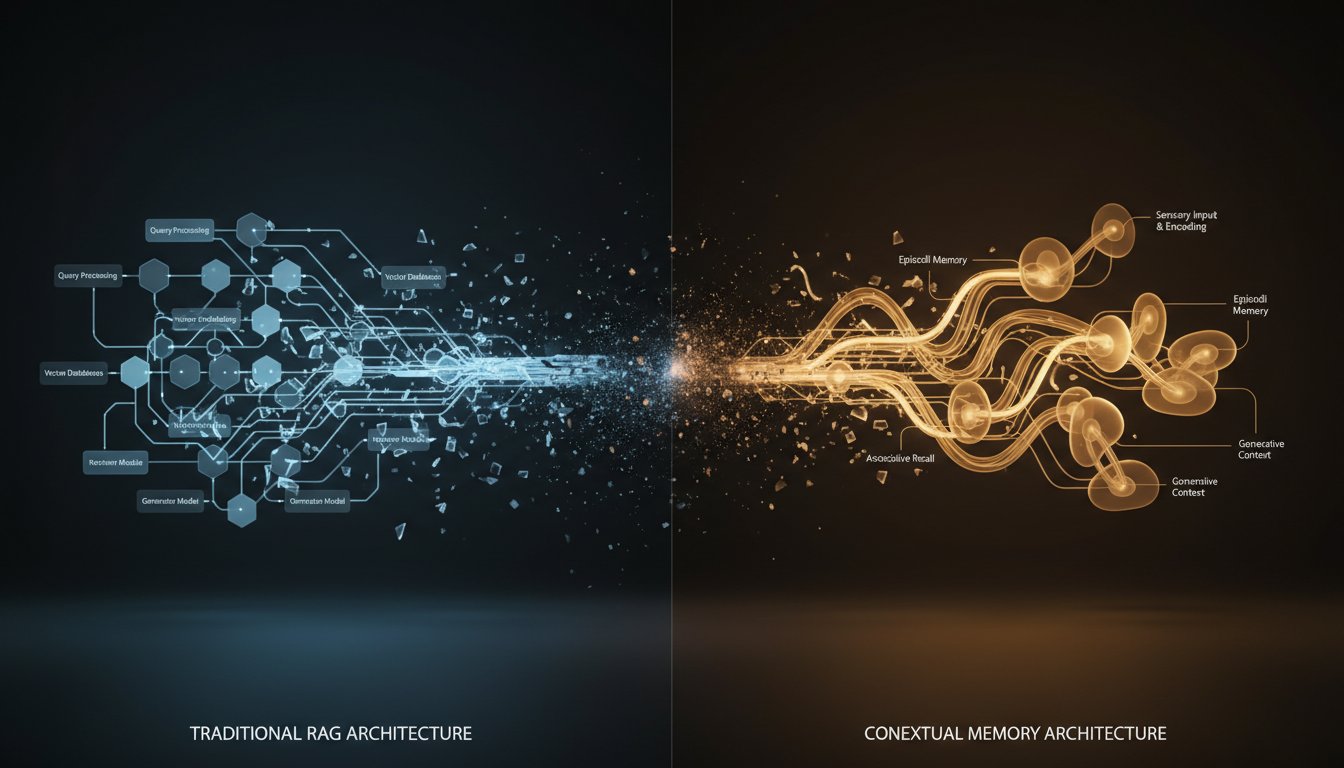

Traditional RAG works through a retrieval-then-generate cycle: your query triggers a vector similarity search, relevant documents get retrieved from your knowledge base, those documents become context for the LLM, and the model generates a response. Every interaction is essentially stateless, requiring retrieval each time. It’s effective but computationally expensive and architecturally rigid.

Contextual memory flips this model. Instead of retrieving knowledge on-demand for each query, the AI maintains persistent, evolving understanding across interactions. Think of it less like searching a library for every question and more like an expert who accumulates domain knowledge over time and recalls relevant information naturally during conversation. For long-horizon tasks and multi-agent coordination, this architectural difference becomes game-changing.

Why This Matters for Multi-Agent Systems

Multi-agent AI systems expose RAG’s fundamental limitations. When multiple agents need to coordinate, share context, and maintain consistent understanding across extended workflows, the retrieve-on-every-query pattern breaks down.

Consider an enterprise scenario where one agent researches a customer issue, another drafts a response, and a third reviews for compliance. Traditional RAG forces each agent to independently retrieve relevant context, leading to redundant searches, inconsistent information, and coordination overhead. Contextual memory allows agents to share persistent understanding, building on each other’s work without constant re-retrieval.

The performance implications are dramatic. Early implementations show contextual memory architectures reducing retrieval operations by 60-80% for multi-step tasks while improving coherence across agent interactions. For enterprises running hundreds or thousands of AI operations daily, this isn’t just faster—it’s fundamentally more cost-effective.

The Data Showing RAG’s Declining Dominance

The shift away from pure RAG architectures isn’t speculation—it’s already happening in production systems. Multiple data points from early 2026 paint a consistent picture:

Cost Economics Are Driving Change: Databricks’ recent announcement of AI infrastructure costs dropping up to 90x cheaper creates new architectural possibilities. When inference costs plummet, the traditional RAG trade-off (expensive retrieval vs. expensive generation) shifts dramatically. Suddenly, maintaining richer contextual state becomes economically viable where it wasn’t before.

Enterprise Adoption Patterns: The Snowflake-OpenAI partnership ($200 million collaboration) explicitly focuses on AI agents connected to first-party data with persistent context. This isn’t a RAG implementation—it’s a contextual memory architecture designed for continuous operation rather than query-response cycles.

Industry Forecasts: Multiple enterprise AI analysts predict contextual memory will surpass RAG as the dominant pattern for agentic AI systems in 2026. This represents a fundamental architectural transition comparable to the shift from rule-based systems to machine learning.

What’s driving this prediction? The maturation of agentic AI from experimental projects to core business infrastructure. As AI moves from answering isolated questions to executing complex, multi-step workflows, architectural requirements fundamentally change.

The Real-World Performance Gap

Production deployments reveal where RAG struggles and contextual memory excels. For single-turn question answering, traditional RAG remains highly effective. But for the use cases enterprises actually care about—customer support workflows, legal document analysis, complex research tasks—the limitations become apparent.

A typical enterprise RAG system executing a 10-step research task might perform 20-30 retrieval operations, each requiring vector similarity search, document ranking, and context injection. Contextual memory architectures performing the same task maintain evolving understanding, retrieving new information only when genuinely needed rather than on every step.

The difference shows up in both latency and cost. RAG systems scale linearly with task complexity—more steps mean proportionally more retrievals. Contextual memory scales sub-linearly, as accumulated understanding reduces the need for additional retrieval as tasks progress.

What This Means for Your RAG Infrastructure

If you’re running production RAG systems or mid-way through implementation, this shift raises urgent questions. Should you abandon your RAG architecture entirely? Double down on traditional retrieval? The answer, frustratingly but realistically, is “it depends”—but with specific decision criteria.

When Traditional RAG Still Makes Sense

RAG isn’t dead. It’s being repositioned. Specific use cases where traditional RAG architectures remain optimal:

Regulatory and Compliance Environments: When you need explicit, auditable retrieval with clear document provenance, RAG’s retrieve-then-cite pattern provides transparency that contextual memory architectures struggle to match. Financial services, healthcare, and legal applications often require this level of traceability.

Frequently Updated Knowledge Bases: If your enterprise knowledge changes daily or hourly, RAG’s stateless retrieval ensures every query accesses the latest information. Contextual memory systems require more sophisticated update mechanisms to maintain accuracy.

Simple Query-Response Applications: For straightforward Q&A systems, chatbots, or documentation search, the added complexity of contextual memory provides minimal benefit. RAG’s retrieve-generate pattern remains simpler to implement and maintain.

Cost-Sensitive, Low-Volume Workloads: Contextual memory requires maintaining state, which incurs infrastructure costs even during idle periods. For applications with sporadic usage, RAG’s stateless approach can be more economical.

When Contextual Memory Becomes Necessary

The architectural shift toward contextual memory becomes compelling for specific enterprise scenarios:

Multi-Agent Workflows: If your AI implementation involves multiple agents coordinating across extended tasks, contextual memory’s shared understanding dramatically improves coherence and reduces redundant operations.

Long-Horizon Task Execution: Projects spanning hours or days benefit from persistent context that accumulates understanding rather than treating each interaction as isolated.

Personalized Enterprise Applications: Customer support, sales assistance, or internal productivity tools that need to remember user preferences and interaction history work better with contextual memory than retrieval-based approaches.

High-Volume, Complex Operations: When you’re running thousands of multi-step AI workflows daily, the cost savings from reduced retrieval operations justify the architectural complexity.

The Hybrid Architecture Reality

Here’s what most analysis misses: the future isn’t “contextual memory OR RAG”—it’s intelligent combinations of both. The most sophisticated enterprise AI architectures emerging in 2026 use contextual memory as the primary knowledge mechanism with RAG as a supplementary retrieval layer for specific needs.

Think of it as a two-tier system: contextual memory maintains working knowledge and accumulated understanding, while RAG handles specialized retrieval when the AI needs information beyond its current context. This hybrid approach delivers contextual memory’s efficiency benefits while preserving RAG’s transparency and currency advantages.

Anthhropic’s Claude Opus 4.6 implementation hints at this direction. The multi-agent teams maintain persistent context but can invoke retrieval operations when encountering novel scenarios or requiring specific documentation. It’s not abandoning RAG—it’s relegating it from primary architecture to specialized tool.

Practical Implementation Considerations

For enterprise teams navigating this transition, several architectural patterns are emerging:

Context Windowing with Selective Retrieval: Maintain rolling contextual memory for recent interactions while using RAG to retrieve historical information or specialized knowledge as needed.

Agent-Specific Memory Models: Different agents in your system might use different architectures—customer-facing agents with contextual memory for personalization, compliance agents with RAG for auditability.

Progressive Context Building: Start interactions with RAG retrieval to establish baseline context, then shift to contextual memory for subsequent exchanges within a session.

The key is recognizing that architectural choices aren’t binary. Your RAG infrastructure isn’t obsolete—but it might need repositioning within a broader system that includes contextual memory capabilities.

The Vendor Landscape Is Already Shifting

Major AI infrastructure providers are reading these same signals and adjusting their roadmaps accordingly. OpenAI’s enterprise AI agent services emphasize persistent context and multi-session understanding. The Snowflake-OpenAI partnership explicitly positions AI as an “intelligence layer” with continuous data access rather than query-response retrieval.

Meanwhile, traditional RAG service providers are pivoting toward hybrid offerings. Vector database vendors are adding state management features. Managed RAG platforms are incorporating contextual memory as optional architecture patterns.

This vendor movement matters because it signals where enterprise AI infrastructure investment is flowing. If you’re selecting platforms or building internal capabilities, the trend is clear: pure RAG offerings are giving way to flexible architectures that support multiple knowledge access patterns.

What This Means for Build vs. Buy Decisions

The architectural uncertainty around RAG vs. contextual memory creates interesting implications for enterprise teams deciding whether to build custom infrastructure or adopt managed services.

Building custom RAG systems made sense when the architectural pattern was stable and well-understood. But if we’re entering a transition period where optimal architectures are still emerging, the argument for managed services strengthens. Platforms that abstract the underlying knowledge access mechanism—whether RAG, contextual memory, or hybrid—provide flexibility to adapt as best practices evolve.

Conversely, if your use case clearly fits established RAG patterns and you’ve already invested in custom infrastructure, wholesale migration to new architectures might be premature. The key is maintaining architectural flexibility rather than over-committing to a single approach.

Action Items for Enterprise RAG Teams

If you’re responsible for enterprise RAG systems or evaluating AI infrastructure, here’s what this shift demands right now:

For Teams with Production RAG Systems:

-

Audit your use cases against the criteria above. Which applications genuinely require traditional RAG’s retrieve-cite pattern vs. which might benefit from contextual memory?

-

Identify multi-agent workflows where contextual memory could reduce costs and improve coherence. These are your highest-impact migration candidates.

-

Plan for hybrid architectures rather than wholesale replacement. Your RAG infrastructure likely has ongoing value as part of a broader system.

-

Monitor cost and performance metrics to establish baselines. When you do pilot contextual memory approaches, you’ll need data to evaluate actual improvements.

For Teams Evaluating New Implementations:

-

Question the default RAG assumption. Just because RAG has been the standard pattern doesn’t mean it’s optimal for your specific requirements.

-

Prioritize architectural flexibility in vendor selection. Choose platforms that support multiple knowledge access patterns rather than locking you into a single approach.

-

Consider starting with hybrid architectures that give you experience with both RAG and contextual memory while maintaining fallback options.

-

Engage with early implementations of contextual memory systems. The technology is mature enough for production pilots but evolving rapidly enough that early experience provides competitive advantage.

For Everyone:

Stay close to architectural evolution in agentic AI. The shift from RAG to contextual memory is part of a broader maturation from isolated AI queries to persistent, capable AI systems. Understanding these transitions separates teams that get blindsided by architectural obsolescence from those that adapt proactively.

The Bottom Line: Evolution, Not Revolution

Anthhropic’s Claude Opus 4.6 launch and the broader industry shift toward contextual memory don’t invalidate everything you’ve built with RAG. But they do signal that RAG’s role is changing—from universal default to specialized tool within more sophisticated architectures.

For enterprise teams, this creates both risk and opportunity. The risk is over-investing in pure RAG architectures that become increasingly marginal for agentic AI applications. The opportunity is getting ahead of architectural transitions while competitors remain locked into yesterday’s patterns.

The teams that will thrive aren’t those who picked the “right” architecture early and stuck with it. They’re the ones who built flexible systems, maintained architectural awareness, and adapted as the landscape evolved. In a field moving as fast as enterprise AI, that adaptability might be the most valuable capability of all.

The contextual memory revolution is here. The question isn’t whether to adapt, but how quickly you can evolve your infrastructure before the competitive gap becomes insurmountable. Your move.