The breaking news hit tech circles like a thunderclap: NVIDIA just invested $2 billion in CoreWeave, with an ambitious plan to build over 5 gigawatts of AI factory infrastructure by 2030. Industry analysts are calling it “the largest infrastructure buildout in human history.” Investor enthusiasm sent CoreWeave’s stock surging 10% overnight. The prevailing narrative? This partnership will democratize AI deployment, making enterprise RAG systems easier and cheaper to build.

But here’s the uncomfortable truth that nobody in the hype cycle wants to discuss: for most RAG practitioners, this massive infrastructure consolidation creates more problems than it solves.

I know this sounds contrarian. After all, more infrastructure should mean better access, right? More compute capacity should translate to lower costs and easier deployment. That’s the conventional wisdom echoing through every tech publication covering this announcement. But if you’re actually building RAG systems at enterprise scale—not training foundation models, not running hyperscaler workloads—the reality is far more nuanced and considerably less optimistic.

The infrastructure gap holding back RAG deployment isn’t the one AI factories are designed to close. The challenges keeping enterprise RAG projects stuck in proof-of-concept purgatory have little to do with gigawatt-scale compute availability. And the strategic dependencies this partnership creates might actually make your RAG architecture decisions harder, not easier.

What Just Happened: Decoding the AI Factory Buildout

Let’s establish the facts before we dissect the implications. NVIDIA’s $2 billion investment in CoreWeave represents a strategic bet on what the two companies call “AI factories”: massive data centers optimized specifically for AI workloads. The partnership aims to deploy multiple generations of NVIDIA infrastructure, starting with the Rubin platform, Vera CPUs, and BlueField storage systems.

The scale is genuinely staggering. Five gigawatts of power capacity devoted to AI computing by 2030 would be unprecedented. For context, a single gigawatt could power approximately 750,000 homes. We’re talking about infrastructure that could theoretically support massive parallel training runs for the next generation of foundation models.

CoreWeave CEO Michael Intrator framed this as a century-long opportunity, emphasizing sustained innovation in AI technologies. The market agrees—the 10% stock jump signals investor confidence that demand for this infrastructure will materialize. Industry analysts project $5.2 trillion in global AI data center capital expenditures, with NVIDIA’s Jensen Huang predicting $85 trillion in infrastructure investment over the next 15 years.

These are numbers that make headlines. But here’s what the press releases don’t emphasize: AI factories are architected primarily for training workloads—the computationally intensive process of building foundation models from scratch. The infrastructure optimizations, cooling systems, networking topology, and resource allocation strategies all assume workloads that look fundamentally different from what most RAG systems actually need.

Retrieval-augmented generation operates in a different computational paradigm. RAG inference patterns involve rapid retrieval from vector databases, semantic search operations, and model calls that are lightweight compared to training runs. The infrastructure that makes sense for training a 100-billion-parameter model from scratch doesn’t necessarily translate to efficient RAG deployment at enterprise scale.

The Conventional Wisdom (And Why It Misses the Mark)

The dominant narrative around AI infrastructure buildouts follows a seductive logic: more infrastructure equals easier deployment equals broader AI adoption. If NVIDIA and CoreWeave can build massive AI factories, then enterprises will have abundant access to compute resources, removing barriers to RAG deployment.

This reasoning works brilliantly for hyperscalers and foundation model developers. If you’re Meta training Llama 4, or OpenAI developing GPT-5, access to gigawatt-scale infrastructure is genuinely transformative. Your bottleneck is raw compute availability. Your workloads can absorb virtually unlimited parallel processing. Your use case justifies the architectural assumptions baked into AI factory design.

But that’s not what most enterprise RAG deployments look like.

Recent discussions among RAG practitioners on forums like Reddit’s r/LangChain reveal a strikingly different set of challenges. When developers discuss “RAG On Premises: Biggest Challenges,” the pain points cluster around GPU access for specific inference tasks, document processing complexity at scale, and integration with existing enterprise infrastructure. The conversation isn’t “we need gigawatt-scale compute”—it’s “how do we efficiently utilize the GPUs we can actually access?”

One enterprise AI lead described their rollout struggle: managing 20,000+ documents with acceptable latency while maintaining security, performance, and compliance standards. Their infrastructure bottleneck wasn’t compute availability—it was architectural complexity, data pipeline efficiency, and integration with legacy systems.

The scale mismatch is significant. AI factories optimize for workloads measured in petaflops sustained over weeks or months. Most enterprise RAG deployments need burst compute for retrieval operations measured in milliseconds, with relatively modest sustained inference loads. It’s the difference between building a nuclear power plant and needing a reliable generator.

This isn’t just about scale—it’s about optimization focus. Training infrastructure prioritizes massive parallel throughput. RAG inference infrastructure needs low-latency retrieval, efficient vector search, and rapid model serving. These are related but distinct computational patterns, and infrastructure optimized for one doesn’t automatically excel at the other.

The Real Implications for RAG Builders

Cost Unpredictability and the Mid-Market Squeeze

Here’s where the economics get uncomfortable. AI factory infrastructure is designed to achieve profitability at massive scale. The business model depends on customers running sustained, high-utilization workloads that can absorb the fixed costs of gigawatt-scale buildouts.

For mid-market RAG deployments, the economics run in reverse. You’re paying for infrastructure architected for a scale you’ll never need, with pricing models that assume utilization patterns you won’t match. The economies of scale that make AI factories viable for hyperscalers don’t carry down to enterprise RAG workloads.

Consider the hidden costs that emerge from this mismatch. Infrastructure providers optimize around their largest, most profitable customers—the foundation model trainers and hyperscale deployers. Service level agreements, support prioritization, and feature development roadmaps naturally gravitate toward those use cases. Your RAG deployment’s specific needs—low-latency retrieval, efficient vector operations, integration flexibility—become edge cases rather than core priorities.

Cost predictability suffers when your workload doesn’t match the infrastructure’s design center. Pricing models built around sustained high utilization penalize the bursty, unpredictable access patterns typical of enterprise RAG systems. You end up either over-provisioning (paying for capacity you rarely use) or accepting performance variability that undermines user experience.
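To make the over-provisioning penalty concrete, here is a minimal sketch of the arithmetic. It assumes a flat reserved price per GPU-hour and treats utilization as the fraction of reserved hours that do useful work. The function name and the $2.00 rate are hypothetical, chosen for illustration, and are not any provider’s real pricing.

```python
def effective_cost_per_used_hour(hourly_rate: float, utilization: float) -> float:
    """Cost of each GPU-hour that actually does work when capacity is reserved.

    hourly_rate: price paid per reserved GPU-hour, whether used or idle.
    utilization: fraction of reserved hours spent on real work, in (0, 1].
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_rate / utilization


# Hypothetical rate for illustration only, not real provider pricing.
RESERVED_RATE = 2.00  # assumed dollars per reserved GPU-hour

for utilization in (0.90, 0.30, 0.10):
    cost = effective_cost_per_used_hour(RESERVED_RATE, utilization)
    print(f"utilization {utilization:.0%}: ${cost:.2f} per useful GPU-hour")
```

With these assumed numbers, the same reserved GPU costs $2.22 per useful hour at 90% utilization and $20.00 at 10%. The bursty end of that range is where many enterprise RAG workloads sit.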

The infrastructure consolidation this partnership represents exacerbates these dynamics. As compute capacity consolidates around a few major providers, pricing power concentrates. Market forces that might otherwise create competitive pressure for mid-market workloads weaken. You increasingly become a price-taker in a market optimized for different customers.

Architectural Constraints and the Portability Problem

Dependency on AI factory infrastructure creates subtle but consequential architectural constraints. When your RAG system assumes access to specific infrastructure capabilities—particular GPU architectures, networking topologies, storage systems—you’re making architectural decisions that reduce portability.

This matters more than it might initially appear. RAG system requirements evolve rapidly. What works for 10,000 documents might not scale to 100,000. What performs acceptably for ten concurrent users might collapse under a hundred. The ability to adapt your infrastructure strategy as requirements shift is strategically valuable.

Locking into AI factory infrastructure means betting that your RAG workload will continue to align with how those providers optimize their systems. If infrastructure development priorities shift toward even larger training workloads—if the next generation of Rubin platforms optimizes further for sustained parallel processing at the expense of retrieval efficiency—your architectural assumptions become liabilities.

The vendor relationship risks extend beyond pricing. CoreWeave’s $14 billion deal with Meta signals where strategic priorities lie. When your infrastructure provider’s largest customer is running workloads fundamentally different from yours, roadmap alignment becomes problematic. Features you need might languish while capabilities for hyperscale training get prioritized.

Alternative approaches—on-premises deployment, hybrid architectures, edge computing strategies—maintain optionality. An on-premises RAG deployment using open-source vector databases and commodity GPUs might not achieve the theoretical peak performance of AI factory infrastructure, but it preserves architectural flexibility. You can adapt as requirements change without renegotiating contracts or redesigning around different infrastructure constraints.

Hybrid strategies offer a particularly compelling middle ground for many enterprise RAG deployments. Keep sensitive data and core retrieval operations on-premises where you maintain control. Use cloud infrastructure for burst capacity and experimentation. This architecture accepts slightly higher complexity in exchange for strategic flexibility and reduced dependency risk.
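A hybrid split like this usually comes down to a routing rule. The sketch below is one possible version, under two assumptions: each query is already tagged as touching sensitive data or not, and the on-premises deployment has a known concurrency limit. `Query`, `choose_backend`, and the backend labels are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Query:
    text: str
    touches_sensitive_data: bool  # assumed to be set by an upstream classifier or policy


def choose_backend(query: Query, on_prem_in_flight: int, on_prem_capacity: int) -> str:
    """Return "on_prem" or "cloud" for a single query.

    Sensitive queries never leave the on-premises deployment, even when it is
    busy. Other queries spill over to the cloud only when the on-premises
    deployment is already at capacity.
    """
    if query.touches_sensitive_data:
        return "on_prem"
    if on_prem_in_flight >= on_prem_capacity:
        return "cloud"
    return "on_prem"


print(choose_backend(Query("quarterly HR report", True), on_prem_in_flight=8, on_prem_capacity=8))
print(choose_backend(Query("public product FAQ", False), on_prem_in_flight=8, on_prem_capacity=8))
```

The first call stays on-premises despite the full queue, and the second bursts to the cloud. A real router would also need queueing and back-pressure for sensitive traffic, which this sketch leaves out.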

Strategic Dependencies in a Consolidating Market

The NVIDIA-CoreWeave partnership signals a broader market trend: AI infrastructure is consolidating around a few major players. This concentration creates strategic dependencies that extend beyond immediate technical or cost considerations.

When your RAG system’s performance depends on continued access to specific infrastructure, you’ve created an operational dependency. If pricing changes, if service levels shift, if strategic priorities realign—your system’s viability becomes partially contingent on factors outside your control.

This dependency compounds over time. As you optimize your RAG architecture for specific infrastructure capabilities, migration costs increase. The initial decision to build on AI factory infrastructure becomes stickier with each architectural choice that assumes those capabilities. What starts as a deployment decision evolves into a strategic constraint.
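One common way to slow that compounding is to keep infrastructure-specific calls behind a thin interface, so that switching backends means writing one new adapter rather than touching every call site. The sketch below illustrates the idea and is not a prescription. `VectorStore`, `InMemoryStore`, and `retrieve_context` are hypothetical names, and the in-memory backend stands in for whatever database or managed service you actually run.

```python
from typing import Dict, List, Protocol, Sequence


class VectorStore(Protocol):
    """The only surface the rest of the RAG pipeline is allowed to depend on."""

    def upsert(self, doc_id: str, embedding: Sequence[float]) -> None: ...

    def search(self, embedding: Sequence[float], top_k: int) -> List[str]: ...


class InMemoryStore:
    """Toy backend for illustration; a real adapter would wrap a database client."""

    def __init__(self) -> None:
        self._vectors: Dict[str, List[float]] = {}

    def upsert(self, doc_id: str, embedding: Sequence[float]) -> None:
        self._vectors[doc_id] = list(embedding)

    def search(self, embedding: Sequence[float], top_k: int) -> List[str]:
        # Ranks by dot product, which assumes embeddings are already normalized.
        def score(doc_id: str) -> float:
            return sum(x * y for x, y in zip(self._vectors[doc_id], embedding))

        return sorted(self._vectors, key=score, reverse=True)[:top_k]


def retrieve_context(store: VectorStore, query_embedding: Sequence[float], top_k: int = 3) -> List[str]:
    """Pipeline code sees only the VectorStore protocol, never a vendor client."""
    return store.search(query_embedding, top_k)


store = InMemoryStore()
store.upsert("a", [1.0, 0.0])
store.upsert("b", [0.0, 1.0])
store.upsert("c", [0.9, 0.1])
print(retrieve_context(store, [1.0, 0.0], top_k=2))
```

The interface costs you access to backend-specific features. That trade is the point: each feature you reach around the interface to use is a migration cost you have chosen to take on.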

Market consolidation reduces your negotiating leverage. With fewer viable alternatives, switching costs rise and competitive pressure weakens. The infrastructure provider’s incentive to accommodate your specific needs diminishes as they focus on larger, more profitable relationships.

For RAG builders, this creates a strategic dilemma. Do you accept the dependency risks in exchange for access to cutting-edge infrastructure? Or do you prioritize architectural independence, potentially accepting performance trade-offs to maintain strategic flexibility?

There’s no universal answer, but the decision deserves more scrutiny than the prevailing “more infrastructure is better” narrative suggests. For many enterprise RAG deployments, the architectural independence and strategic flexibility of alternative approaches outweigh the theoretical performance advantages of AI factory infrastructure.

What RAG Builders Should Do Instead

So if massive AI factory buildouts don’t solve the problems most RAG deployers actually face, what’s the alternative strategy?

Start by honestly assessing your actual infrastructure requirements rather than assuming you need what the headlines are selling. Most enterprise RAG systems need:

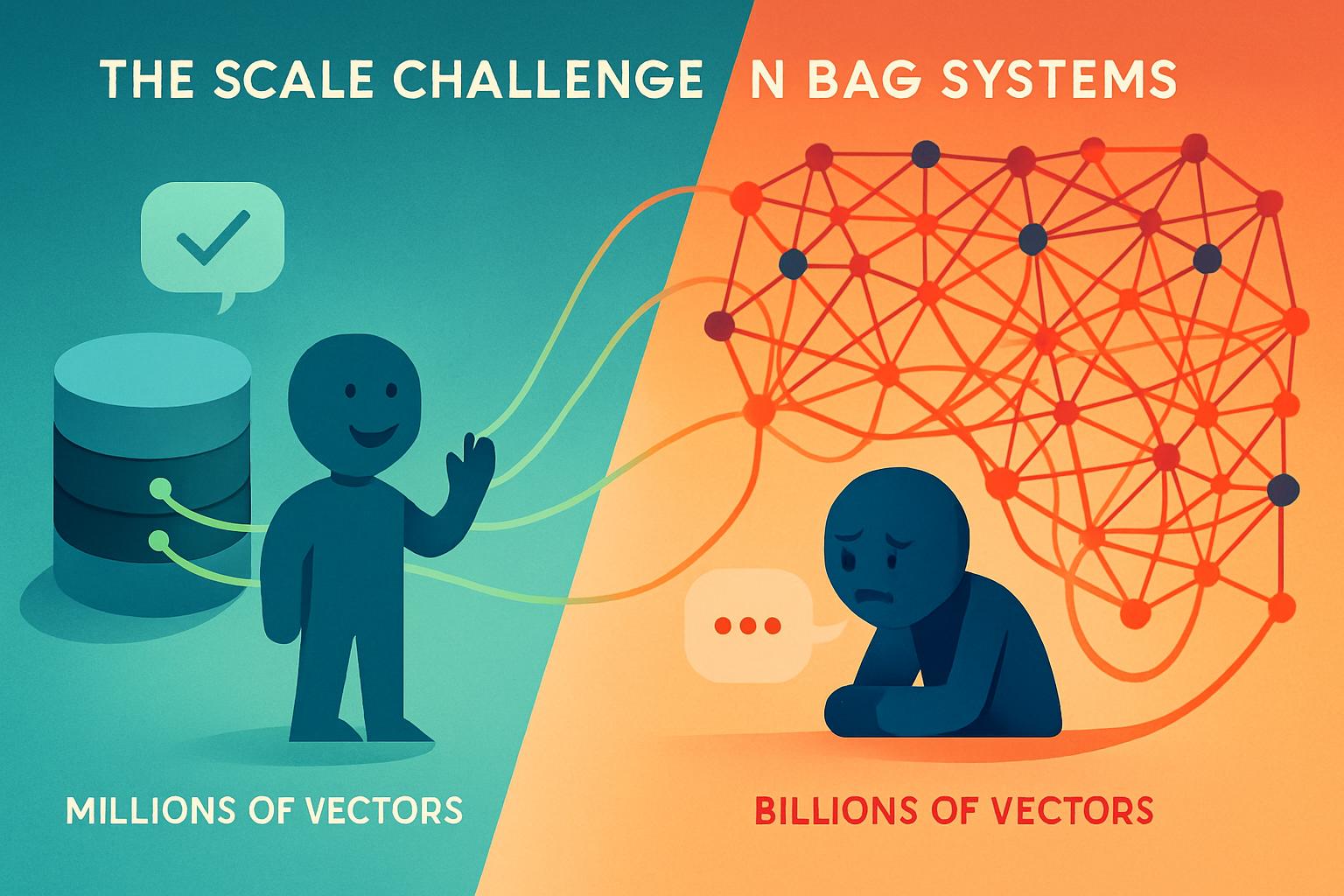

- Efficient vector search at moderate scale (millions to tens of millions of embeddings, not billions)

- Low-latency retrieval (measured in milliseconds, not the sustained throughput AI factories optimize for)

- Flexible integration with existing enterprise systems and data sources

- Cost predictability that aligns with actual usage patterns rather than sustained high utilization

- Architectural portability to adapt as requirements evolve
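The latency requirement in this list is easier to reason about once you measure it. The sketch below times a retrieval call and reports percentiles rather than an average, since tail latency is what users notice. It uses a deliberately naive brute-force cosine search over random vectors as a stand-in for your real retriever. The corpus size, dimensions, and function names are assumptions for illustration, and the timings say nothing about any production vector database.

```python
import math
import random
import statistics
import time
from typing import Callable, Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], corpus: List[Sequence[float]], k: int) -> List[int]:
    """Brute-force search: score every vector and return the indices of the k best."""
    scored = [(cosine_similarity(query, vec), i) for i, vec in enumerate(corpus)]
    scored.sort(reverse=True)
    return [i for _, i in scored[:k]]


def latency_percentiles_ms(search: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Call `search` repeatedly and summarize wall-clock latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        search()
        samples.append((time.perf_counter() - start) * 1000.0)
    cuts = statistics.quantiles(samples, n=100)  # 99 cut points: cuts[94] is p95
    return {"p50": statistics.median(samples), "p95": cuts[94], "p99": cuts[98]}


# Synthetic stand-in data: 1,000 random 32-dimensional vectors.
rng = random.Random(0)
corpus = [[rng.gauss(0, 1) for _ in range(32)] for _ in range(1000)]
query = [rng.gauss(0, 1) for _ in range(32)]
print(latency_percentiles_ms(lambda: top_k(query, corpus, 5)))
```

Swap the lambda for a call into your own retrieval path and run it against a realistic corpus. The resulting p95 and p99 figures are the numbers to hold against your latency budget when comparing infrastructure options.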

These requirements point toward different infrastructure strategies than gigawatt-scale AI factories. Consider:

On-premises deployment for core RAG capabilities when data sensitivity, latency requirements, or cost predictability justify the capital investment. Modern vector databases like Milvus, Weaviate, or Qdrant run efficiently on commodity hardware. You maintain architectural control and avoid vendor dependencies.

Hybrid architectures that keep sensitive retrieval operations on-premises while leveraging cloud infrastructure for experimentation, burst capacity, or workloads with less stringent requirements. This balances control with flexibility.

Edge deployment for use cases requiring real-time processing or operating in bandwidth-constrained environments. RAG inference can run surprisingly efficiently on edge hardware when properly optimized.

Managed vector database services that specialize in retrieval workloads rather than general-purpose AI factory infrastructure. Providers like Pinecone or Zilliz optimize specifically for the access patterns RAG systems actually exhibit.

The key is matching infrastructure strategy to your actual requirements rather than defaulting to whatever makes the biggest headlines. Ask the uncomfortable questions:

- What happens to our RAG system if this infrastructure provider changes pricing?

- Can we migrate to alternative infrastructure if strategic priorities shift?

- Are we optimizing for theoretical peak performance or reliable, cost-effective operation?

- Do our infrastructure dependencies create acceptable strategic risks?

For many enterprise RAG deployments, honest answers to these questions point away from dependence on AI factory infrastructure and toward approaches that prioritize flexibility, cost predictability, and architectural independence.

The Bigger Picture: Infrastructure vs. Application Layer Divergence

The NVIDIA-CoreWeave partnership represents more than just an infrastructure investment—it signals a fundamental market evolution. The gap between compute infrastructure providers and AI application builders is widening.

AI factories optimize for the economics of massive-scale training workloads. This makes perfect sense for their business model and their largest customers. But it creates a strategic divergence for application-layer builders, including RAG practitioners. Your success increasingly depends on factors infrastructure providers aren’t optimizing for.

This divergence has implications beyond immediate deployment decisions. As infrastructure consolidates around training-optimized architectures, the market creates opportunities for specialized infrastructure that serves different needs. Vector database providers, inference-optimized serving platforms, and edge deployment solutions can differentiate by focusing on what AI factories aren’t prioritizing.

For RAG builders, this evolving landscape demands more sophisticated infrastructure strategy. The days of assuming “more powerful infrastructure equals better RAG deployment” are ending. Success requires understanding the specific computational patterns your RAG system exhibits, honestly assessing which infrastructure approaches align with those patterns, and maintaining enough architectural flexibility to adapt as the market evolves.

The NVIDIA-CoreWeave partnership will undoubtedly enable remarkable AI capabilities. Foundation model training will accelerate. Hyperscale deployments will achieve new performance benchmarks. But for the enterprise RAG builder working with thousands or tens of thousands of documents, serving hundreds or thousands of users, operating within specific cost constraints and integration requirements—the path forward requires different infrastructure thinking than what the AI factory headlines suggest.

Key Takeaways

The $2 billion NVIDIA-CoreWeave partnership creates impressive AI infrastructure, but it solves problems most RAG builders don’t have:

- AI factories optimize for training workloads, not the retrieval and inference patterns that characterize enterprise RAG systems

- Mid-market RAG deployments face cost disadvantages when infrastructure is architected for gigawatt-scale utilization

- Infrastructure dependencies create strategic risks as market consolidation reduces alternatives and increases switching costs

- Alternative approaches—on-premises, hybrid, edge, or specialized vector database services—often better serve enterprise RAG requirements

- Architectural flexibility matters more than peak theoretical performance for most real-world RAG deployments

The infrastructure buildout represents genuine progress for AI as a field. But if you’re building RAG systems for enterprise deployment, resist the urge to assume that what’s good for foundation model training is automatically good for your use case. Evaluate your actual requirements, understand the trade-offs in different infrastructure strategies, and make decisions that align with your specific needs rather than following the hype.

Your RAG system’s success depends more on architectural choices, data quality, and integration sophistication than on access to gigawatt-scale compute. Choose infrastructure that supports those priorities, even if it doesn’t make headlines. The goal isn’t to deploy on the most impressive infrastructure—it’s to build RAG systems that reliably deliver value for your users while maintaining strategic flexibility as the technology landscape evolves.

Want to dive deeper into RAG architecture strategies that don’t depend on AI factory infrastructure? We’re developing a comprehensive framework for evaluating infrastructure dependencies and designing portable RAG systems. Subscribe to Rag About It to get notified when we publish the next piece in this series on building infrastructure-independent RAG architectures.