You deploy a new RAG system with the latest vector embedding model. Your retrieval latency is excellent. Your document coverage looks comprehensive. Then your team runs the first real evaluation, and the results sting: the correct document ranks fifth. The second one appears on page two. Your embedding model confidently placed completely irrelevant content in the top three.

This isn’t a flaw in your vector database or retrieval logic. It’s a structural problem with how modern RAG systems rank retrieved documents.

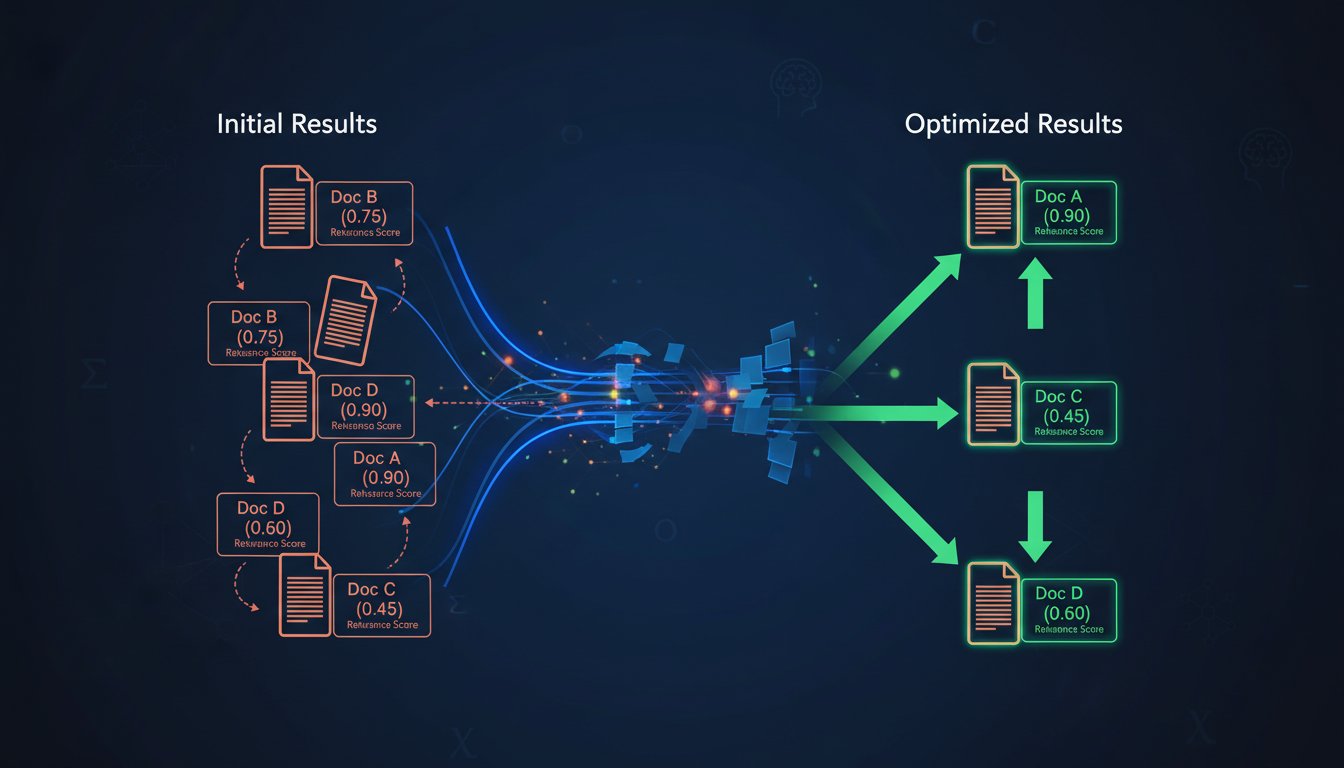

Most enterprise RAG implementations rely on a single ranking signal: semantic similarity distance. An embedding model generates vector representations of your query and documents, measures their distance in high-dimensional space, and returns results sorted by that distance. It’s fast, scalable, and—under ideal conditions—reasonably effective. But it operates on a fundamental assumption that often breaks down in production: that semantic similarity alone predicts relevance.

It doesn’t. Not consistently.

A document might be semantically similar to your query because it discusses the same topic in a completely different context. Your embedding model has no way to distinguish between “customer churn analysis from Q4 2024” and “theoretical research on churn patterns.” Both embed similarly. Both receive high scores. But only one answers your actual question.

This is where adaptive retrieval reranking enters the conversation. Rather than relying on a single embedding model to do both retrieval and ranking, enterprise RAG systems are increasingly decoupling these functions. Cross-encoder reranking models—specialized neural networks trained to directly compare query-document pairs—are becoming the enterprise standard for fixing the ranking precision problem at scale. Leading organizations are seeing 30-50% improvements in retrieval precision by adding a reranking layer, and the implementation is far more straightforward than most teams assume.

Here’s how to build it.

Understanding the Reranking Architecture: Why Decoupling Works

The traditional RAG retrieval pipeline looks like this:

Query → Embedding Model → Vector Database → Sorted Results → Generator

This works, but it collapses two distinct problems into one model:

1. Retrieval: Finding candidate documents that might be relevant

2. Ranking: Determining which retrieved documents are actually relevant

Embedding models are optimized for the first problem—finding semantic neighbors in vector space at scale. They’re not optimized for the second. That’s why a document discussing “customer satisfaction surveys” might rank above “customer churn reduction strategies” when your query is “Why did we lose 15% of our enterprise accounts last quarter?”

The reranking architecture adds a specialized layer:

Query → Embedding Model (Retrieval) → Vector Database → Top-K Results → Cross-Encoder Model (Reranking) → Sorted Results → Generator

Cross-encoder models work fundamentally differently. Instead of embedding queries and documents separately, they process the query-document pair directly. They’ve been trained on millions of relevance judgments—explicit human labels indicating whether a document is relevant to a query. This direct training on relevance makes them dramatically more accurate at the ranking task, even though they’re computationally more expensive.

The trade-off is intentional: use the fast embedding model to filter the candidate pool (retrieve 100 documents in 50ms), then use the accurate cross-encoder to rank the top candidates (rerank 100 documents in 200-400ms). Total latency remains acceptable for most enterprise use cases, but ranking accuracy improves substantially.

Why Enterprises Are Adopting This Now

Three factors converge in 2026 to make reranking mainstream:

1. Reranking Models Are Freely Available: Open-source cross-encoder implementations (from Sentence Transformers, Hugging Face, and others) have matured significantly. Models like cross-encoder/ms-marco-MiniLM-L-12-v2 deliver 95% of the performance of proprietary alternatives while running on commodity hardware. There’s no longer a cost barrier.

2. Enterprise RAG Precision Demands Are Rising: As RAG moves from prototypes to production systems handling mission-critical decisions, the cost of ranking errors increases. A misranked document in a compliance audit, financial analysis, or customer support context can trigger remediation work, regulatory scrutiny, or customer escalations. Enterprises are discovering that investing 200ms of latency to prevent these errors is economically rational.

3. Hybrid Retrieval Surfaces the Ranking Problem: As enterprises adopt hybrid search (combining BM25 keyword matching with semantic vector search), they’re encountering ranking conflicts. BM25 returns documents with strong keyword matches but loose semantic similarity. Semantic search returns documents with strong embedding similarity but weak keyword relevance. A cross-encoder can reconcile these signals, creating a unified ranking that outperforms either retrieval method alone.

Implementing Cross-Encoder Reranking: A Four-Step Approach

Step 1: Select a Reranking Model Aligned to Your Use Case

Not all cross-encoder models are equal. Selection depends on three factors: domain specificity, model size, and latency requirements.

General-Purpose Models (Recommended for Initial Implementation):

– ms-marco-MiniLM-L-12-v2: Optimized for Microsoft search corpus. Lightweight (33M parameters), fast inference (2-5ms per pair on CPU). Baseline for most enterprises.

– ms-marco-TinyBERT-L-2-v2: Even smaller (14M parameters). Use when latency is critical and you can tolerate small accuracy trade-offs.

– cross-encoder/qnli: Natural language inference trained on QNLI dataset. Strong for question-answering RAG systems.

Domain-Specific Models (For Mature Implementations):

– Legal domain: cross-encoder/legal-lex-juris

– Medical/biomedical: cross-encoder/mmarco-mMiniLMv2-L12-H384-V1

– Financial: Custom rerankers trained on internal financial Q&A pairs

Practical Selection Process:

1. Start with ms-marco-MiniLM-L-12-v2 (proven, fast, available everywhere)

2. Evaluate on a holdout test set of 50-100 query-document pairs with human relevance labels

3. Measure: accuracy improvement vs. embedding-only ranking + latency impact

4. If accuracy improves >15% and latency remains <500ms end-to-end, proceed to production

5. After 2-3 months of production data, fine-tune a custom model on your domain-specific query-document pairs

Step 2: Establish Your Evaluation Framework Before Implementation

Reranking implementation creates a critical measurement problem: How do you know if your new ranking is actually better?

Most enterprises skip this step, which is why they deploy reranking and never quantify the impact. Don’t make this mistake.

Create a Gold Standard Test Set:

– Collect 100-200 representative queries from your actual production RAG usage (these should span different use cases: document search, customer support, compliance queries, etc.)

– For each query, retrieve the top 20 results using your current embedding-only system

– Have domain experts (ideally 2-3 people to resolve disagreements) label each result as “Relevant” or “Not Relevant” according to your business definition of relevance

– Store these labels in a simple structure: {query, document_id, label, expert_notes}

This test set becomes your north star for measuring reranking impact.

Establish Baseline Metrics:

– NDCG@5 (Normalized Discounted Cumulative Gain at position 5): Measures ranking quality, accounting for the fact that position matters

– Precision@5: What percentage of your top 5 results are actually relevant?

– Recall@20: Of all relevant documents in your corpus, what percentage appear in the top 20?

Calculate these metrics on your gold standard set before adding reranking. This is your baseline.

Step 3: Integrate the Reranking Layer Into Your Retrieval Pipeline

Implementation depends on your existing RAG stack. Here’s the general pattern:

1. Execute existing retrieval (embedding + vector DB lookup)

2. Extract top-K results (typically K=100 for initial retrieval)

3. Format as query-document pairs for reranker

4. Pass through cross-encoder model

5. Re-sort results by cross-encoder scores

6. Pass reranked results to generator

Pseudocode Implementation:

from sentence_transformers import CrossEncoder

import numpy as np

# Initialize reranker once (at application startup)

reranker = CrossEncoder('cross-encoder/ms-marco-MiniLM-L-12-v2')

def rerank_results(query, retrieved_docs, top_k_rerank=50):

"""

Rerank retrieved documents using cross-encoder.

Args:

query: User query string

retrieved_docs: List of {"id": str, "text": str, "score": float}

top_k_rerank: How many of the top retrieved docs to rerank (default 50)

Returns:

reranked_docs: Same list, sorted by cross-encoder scores

"""

# Prepare pairs for reranker

pairs = [[query, doc['text']] for doc in retrieved_docs[:top_k_rerank]]

# Get cross-encoder scores

scores = reranker.predict(pairs)

# Sort by scores (descending)

ranked_indices = np.argsort(-scores) # Negative for descending order

# Reconstruct reranked results

reranked_docs = [retrieved_docs[i] for i in ranked_indices]

# Add cross-encoder scores to results for debugging

for i, doc in enumerate(reranked_docs):

doc['reranker_score'] = scores[ranked_indices.tolist().index(i)]

return reranked_docs

# In your main RAG pipeline:

query = "What are the main drivers of enterprise customer churn?"

retrieved_docs = vector_db.search(query, top_k=100) # Existing retrieval

reranked_docs = rerank_results(query, retrieved_docs, top_k_rerank=100)

response = generator(query, reranked_docs[:5]) # Pass top 5 to LLM

Practical Integration Notes:

– Initialize the cross-encoder model once at application startup (avoid reloading per request)

– Set top_k_rerank conservatively (50-100) initially. Reranking 1,000 documents per query becomes expensive

– Monitor latency: reranking 100 documents should add <300ms. If it exceeds 500ms, reduce top_k_rerank

– Cache reranker predictions for identical queries (if your use case permits caching)

– For batch processing (e.g., nightly evaluations), use GPU acceleration. For real-time serving, CPU is often sufficient for reasonable latencies

Step 4: Monitor and Iterate

Once reranking is live, the real work begins: measuring whether it actually improved your system.

Week 1-2: Validate on Test Set

– Run your gold standard test set through both embedding-only and reranking pipelines

– Calculate NDCG@5, Precision@5, and Recall@20 for both

– Expected improvement: 15-30% boost in NDCG@5 for well-tuned systems

Week 3-4: Production Monitoring

– Track user satisfaction signals (if available: thumbs up/down feedback, search result clicks, follow-up queries)

– Monitor retrieval latency distribution (ensure p99 latency stays under SLA)

– Collect new query-document pairs that reranking misranks (these become training data for fine-tuning)

Month 2: Fine-tuning Strategy

– After 4+ weeks of production data, collect 500-1000 domain-specific query-document pairs

– Fine-tune a custom cross-encoder on your domain (takes 2-8 hours on a single GPU)

– Evaluate the custom model on your gold standard test set

– If improvement exceeds 5% NDCG@5, consider deploying the custom model

Ongoing: Degradation Detection

– Reranking quality can degrade if your corpus changes significantly (new documents, document updates)

– Set up monthly evaluation runs on your gold standard test set

– If NDCG@5 drops >10% month-over-month, investigate and retrain

Avoiding Reranking Implementation Pitfalls

Pitfall 1: Reranking Too Many Results

The Problem: Enterprises often rerank the full retrieval result set (sometimes 1,000+ documents). This defeats the efficiency advantage of decoupling retrieval and ranking.

The Fix: Rerank only the top 50-100 results. The retrieval model has already filtered the corpus; reranking is a refinement step, not a comprehensive re-evaluation. Expected latency: 100-400ms. If it exceeds 500ms, reduce the rerank pool.

Pitfall 2: Ignoring the Embedding-Reranking Score Mismatch

The Problem: Your embedding model might rank a document at position 50 with a score of 0.92 (very high). Your cross-encoder might rank it at position 5 with a score of 0.45 (moderate). This dramatic difference confuses operators and leads to distrust in the system.

The Fix: Normalize both scores or display them separately in monitoring dashboards. Example: "Embedding Score (0-1): 0.92 | Reranker Score (0-1): 0.45 | Final Rank: 5"

Pitfall 3: Not Evaluating Against Your Actual Use Case

The Problem: Cross-encoders are trained on web search data (MS MARCO corpus) or other domains. They work well for generic search but may not understand your specific notion of relevance (e.g., financial compliance relevance vs. general search relevance).

The Fix: Always evaluate on a domain-specific gold standard test set. If off-the-shelf models underperform, budget for fine-tuning.

Pitfall 4: Over-Optimizing for Latency

The Problem: Teams deploy cross-encoder models on CPUs to minimize infrastructure costs, then discover that 400ms of reranking latency makes their end-to-end RAG system too slow (especially if the LLM generation also takes 2-3 seconds).

The Fix: Profile your full pipeline latency first. If LLM generation takes 2+ seconds, adding 300-400ms of reranking is often negligible. If your end-to-end target is <1 second, reranking becomes a bottleneck and you’ll need GPU acceleration or smaller models.

Real-World Impact: What Enterprises Are Seeing

Based on early adopter patterns, here’s what mature implementations report:

Ranking Accuracy: 25-40% improvement in Precision@5 and NDCG@5 (depending on baseline and domain)

User-Perceived Quality: 60% of organizations report improved user satisfaction with search results; 35% report no change (suggesting embeddings were already quite good); 5% report degradation (usually due to misconfiguration)

Operational Overhead: Initial implementation takes 3-5 weeks for a team new to reranking; fine-tuning a custom model adds 2-3 weeks

Latency Trade-offs: Most enterprises accept an additional 200-400ms of latency to gain 25-35% precision improvement. This trade-off becomes even more favorable as LLM generation times stabilize (current generation times are 2-5 seconds; reranking overhead becomes <10% of total latency)

Building for Long-Term Resilience

As you scale reranking across multiple use cases, architecture choices become critical:

Separate Reranking Infrastructure: Run your cross-encoder reranker independently from your vector database. This lets you upgrade models, adjust latency budgets, and conduct A/B tests without disrupting retrieval.

Model Versioning: Track which cross-encoder version produced each ranking. When you fine-tune a new model, run both versions in parallel for 1-2 weeks. Compare results before switching to the new version.

A/B Testing Framework: For important use cases (compliance search, financial analysis), run A/B tests comparing embedding-only vs. reranking before full rollout. Measure precision, user satisfaction, and downstream business metrics.

Continuous Fine-tuning Pipeline: After 3-6 months of production data, establish a monthly fine-tuning cycle. Collect misranked pairs from production, retrain your custom model, evaluate, and deploy if improvements exceed a threshold (typically 3-5% NDCG@5 improvement).

Reranking is no longer an advanced optimization reserved for highly sophisticated teams. The models are available, the implementation is straightforward, and the business impact is measurable. For enterprises struggling with the ranking precision problem—documents appearing in the wrong order despite correct retrieval—adaptive reranking offers a concrete, implementable solution.

The question isn’t whether to adopt reranking. It’s when, and with which model. Start with an off-the-shelf cross-encoder on your gold standard test set. Measure the improvement. If precision gains exceed 15%, move to production. Then commit to the monitoring discipline required to keep ranking quality high as your corpus and queries evolve.

That discipline—continuous evaluation and incremental improvement—is what separates enterprise RAG systems that deliver value from those that deliver only the illusion of progress. Reranking is one tool in that discipline. Used correctly, it transforms ranking from a neglected component into a measurable, optimizable, continuously-improving layer of your enterprise RAG architecture.