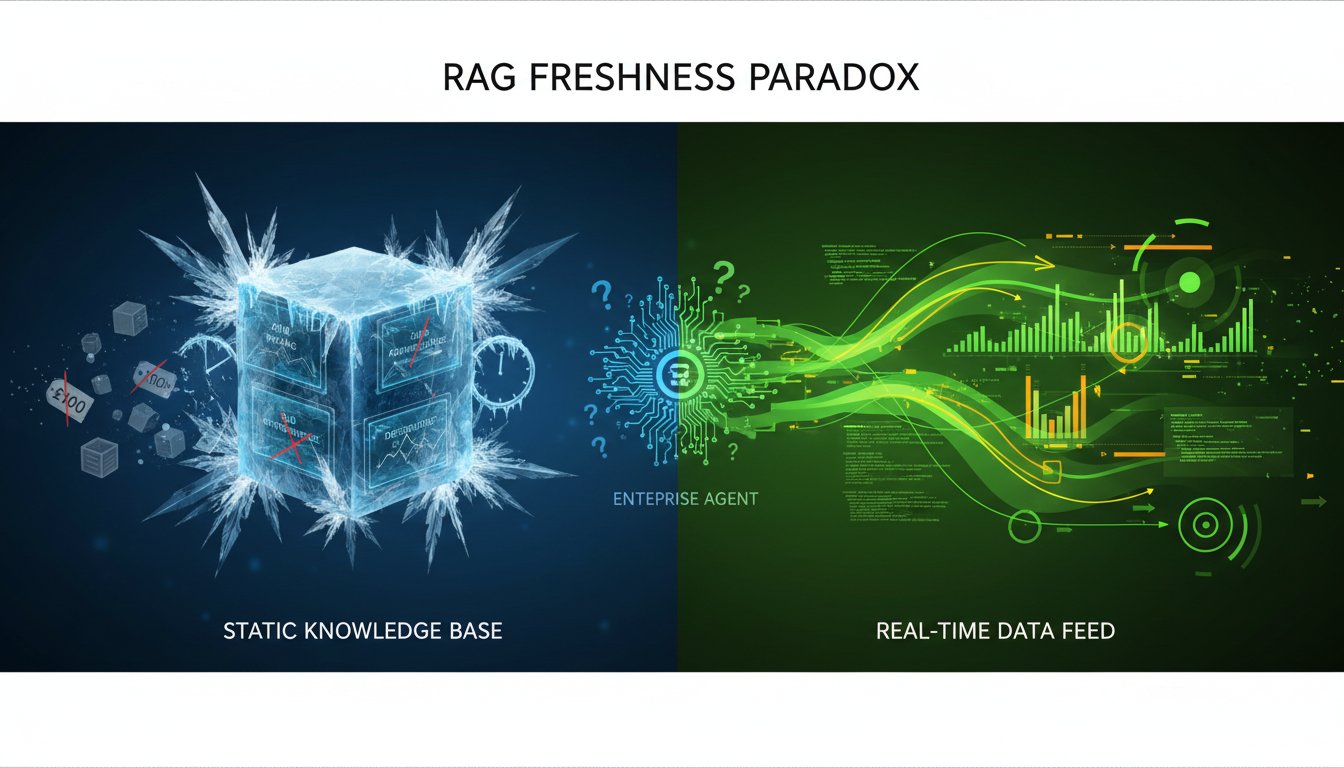

Your enterprise agent just made a critical business decision based on retrieval results that are three days old. The pricing changed. The compliance requirement shifted. The inventory is gone. Your RAG system retrieved the “correct” answer—just not the current one.

This isn’t a technical failure. It’s an architectural one. And it’s costing enterprises millions annually.

Most production RAG deployments treat their knowledge base like a frozen database, updated on a schedule (daily, weekly, or when someone remembers). For static document retrieval—legal precedents, product specifications, historical financial data—this works fine. But for enterprise agents that make real-time decisions in dynamic markets, this approach is systematically dangerous.

Ignoring the gap between “retrieval accuracy” and “decision currency” is the fastest way to turn a promising AI agent pilot into a production liability. Organizations are realizing this the hard way: their agentic AI systems perform brilliantly in controlled environments, then fail catastrophically when deployed against live, constantly changing data. By 2025, enterprises have begun recognizing that static RAG is fundamentally incompatible with agentic autonomy.

This post explores why real-time data freshness has become the critical architectural requirement for enterprise agents, how to implement continuous refresh patterns without destroying latency or cost efficiency, and why the hybrid approach—combining fresh retrieval with selective fine-tuning—is emerging as the enterprise standard.

The challenge isn’t whether you need fresh data. The challenge is doing it without rebuilding your entire infrastructure.

The Static RAG Failure Mode in Enterprise Agents

Consider a compliance agent deployed at a financial services firm. The agent’s job: determine whether a proposed trade meets current regulatory requirements before execution. The RAG system retrieves the relevant regulations from a vector database updated daily at 3 AM.

At 2:47 PM that same day, the Federal Reserve issues new guidance. The guidance contradicts the regulation the agent retrieved this morning. At 3:15 PM, the agent approves a trade that violates the new guidance—something that wouldn’t have happened if the RAG system had current information.

This scenario isn’t hypothetical. It’s playing out across healthcare (where clinical guidelines update weekly), finance (where regulations shift in hours), and manufacturing (where inventory and pricing change continuously).

The root cause: RAG systems were designed to augment static language models with static external knowledge. The assumption was that both the LLM and its retrieval database change infrequently. That assumption breaks the moment you add an agent to the loop.

Agents are decision-making systems. They take actions based on retrieved information. Stale information doesn’t just mean outdated answers—it means bad decisions baked into operational systems.

Why Scheduled Updates Fail

The typical response is to implement more frequent update schedules: daily becomes twice-daily becomes hourly. But this approach hits a hard ceiling.

First, you’re still playing catch-up. Between update cycles, you’re blind to changes. A healthcare RAG system updated hourly will miss clinical guidance issued at 10:47 AM if the next update is scheduled for 11:00 AM—a 13-minute gap that could affect patient safety.

Second, frequent updates don’t scale. If your vector database update takes 45 minutes to complete (reindex documents, embed new content, write to production), moving from daily to hourly updates means your database is in transition for 45 minutes of every hour. You’re constantly rebuilding while agents are constantly querying. This creates consistency problems: agents might retrieve conflicting information depending on whether they hit a stale or fresh shard.

Third, and most critically: scheduled updates can’t capture continuous data streams. Stock prices update every millisecond. Shipping inventories update in real-time as orders process. Regulatory feeds publish updates continuously. No batched update cycle can keep pace.
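The arithmetic behind the first two points can be made explicit. This is a minimal sketch under a simplified model, not a benchmark: it assumes a change that lands just after a snapshot is taken has to wait one full interval and then the rebuild itself. The function names are illustrative, and the 45-minute rebuild is the figure used above.

```python
def worst_case_staleness_minutes(interval_min: float, rebuild_min: float) -> float:
    """Worst case: a change lands just after a snapshot is taken, waits a full
    interval for the next run, then waits for that run's rebuild to finish."""
    return interval_min + rebuild_min


def fraction_of_time_rebuilding(interval_min: float, rebuild_min: float) -> float:
    """Share of wall-clock time during which the index is mid-rebuild."""
    return min(1.0, rebuild_min / interval_min)


for label, interval in [("daily", 24 * 60), ("hourly", 60)]:
    stale = worst_case_staleness_minutes(interval, rebuild_min=45)
    busy = fraction_of_time_rebuilding(interval, rebuild_min=45)
    print(f"{label}: worst-case staleness {stale:.0f} min, "
          f"rebuilding {busy:.0%} of the time")
```

Shortening the interval lowers worst-case staleness, but the rebuild duration sets a floor, and the share of time spent mid-rebuild climbs toward 100%.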

The Cost Multiplication Problem

Enterprises attempting to solve the freshness problem often turn to a seemingly obvious solution: implement multiple, overlapping update cycles. Update the vector database every 30 minutes. Run a secondary index every 15 minutes. Maintain a real-time cache layer that updates every 5 minutes.

This creates a multiplicative cost structure. You’re now running:

- Primary vector database (expensive)

- Real-time cache layer (additional infrastructure)

- Multiple embedding pipelines (additional compute)

- Coordination and consistency logic (engineering complexity)

- Fallback mechanisms when components drift (operational overhead)

One enterprise shared their cost structure: implementing overlapping refresh layers for a moderately-sized RAG agent system added $340,000 annually in infrastructure costs—before factoring in engineering time to maintain the complexity.

Worse, the layered approach doesn’t actually solve freshness. It creates multiple layers of staleness, all slightly out of sync with each other.

The Real-Time Streaming Architecture

The emerging enterprise solution is fundamentally different: streaming architectures that continuously update the retrieval layer without batched reindexing cycles.

Instead of “update the vector database at 3 PM every day,” the architecture operates as “continuously consume data changes and update the retrieval index incrementally.”

This requires rethinking several components:

Real-Time Data Pipelines

Instead of pulling data from source systems on a schedule, the system subscribes to change feeds. When a document updates in the source system (Salesforce, SAP, Jira, Confluence), that change immediately flows into the retrieval pipeline.

The mechanics vary by source:

- Relational databases (PostgreSQL, SQL Server): Change Data Capture (CDC) publishes updates to a message queue in real time

- Document systems (Confluence, SharePoint): Webhook integrations trigger on document updates

- APIs and SaaS (Salesforce, HubSpot): Event streams or polling at 1-5 minute intervals

- Public data feeds (news, regulations, market data): Subscription to feeds with immediate ingestion

The key difference from batched approaches: data flows continuously, not periodically.
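Because each source reports changes in its own shape, a common first step is to normalize everything into one event type before it reaches the retrieval pipeline. The sketch below is one way to do that. The `ChangeEvent` class and every payload field name (`op`, `pk`, `ts_ms`, `page_id`, and so on) are assumptions made for illustration, not the actual format of any CDC tool or webhook.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ChangeEvent:
    source: str           # e.g. "postgres", "confluence"
    doc_id: str           # stable identifier of the changed document
    operation: str        # "upsert" or "delete"
    changed_at: datetime  # when the source system recorded the change
    body: str             # new content; empty for deletes


def from_cdc_row(row: dict) -> ChangeEvent:
    """Map a hypothetical CDC record (field names are assumptions)."""
    op = "delete" if row["op"] == "d" else "upsert"
    return ChangeEvent(
        source="postgres",
        doc_id=f'{row["table"]}:{row["pk"]}',
        operation=op,
        changed_at=datetime.fromtimestamp(row["ts_ms"] / 1000, tz=timezone.utc),
        body="" if op == "delete" else row["after"]["text"],
    )


def from_webhook(payload: dict) -> ChangeEvent:
    """Map a hypothetical document-system webhook payload."""
    removed = payload["event"] == "page_removed"
    return ChangeEvent(
        source="confluence",
        doc_id=payload["page_id"],
        operation="delete" if removed else "upsert",
        changed_at=datetime.fromisoformat(payload["updated_at"]),
        body="" if removed else payload.get("content", ""),
    )


events = [
    from_cdc_row({"op": "u", "table": "prices", "pk": 42,
                  "ts_ms": 1_700_000_000_000,
                  "after": {"text": "SKU 42 now costs 19.99"}}),
    from_webhook({"event": "page_updated", "page_id": "policy-7",
                  "updated_at": "2025-01-01T10:47:00+00:00",
                  "content": "Revised policy"}),
]
```

Carrying `changed_at` from the source, rather than stamping events on arrival, is what later makes it possible to measure end-to-end update latency.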

Incremental Embedding and Indexing

Instead of recomputing embeddings for the entire knowledge base every 24 hours, the system embeds only new or changed content and updates the index incrementally.

This is mechanically simpler than it sounds:

- New document arrives via the data pipeline

- Document is chunked (same chunking rules as your batch process)

- Chunks are embedded (using the same embedding model)

- Embeddings are written to the index immediately

- Superseded chunks (old versions) are marked as deleted

Latency for this flow: typically 2-10 seconds from document change to searchable in the index. Compare this to a batched approach that waits hours for the next scheduled update.
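The five steps above can be sketched with an in-memory index. This is a toy under stated assumptions: `embed` is a hashed bag-of-words stand-in for a real embedding model, `IncrementalIndex` is a hypothetical class rather than any vector database’s API, and chunking is a plain word window.

```python
import hashlib
import math


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split text into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str, dim: int = 64) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class IncrementalIndex:
    def __init__(self) -> None:
        self.entries = {}  # (doc_id, version, chunk_no) -> record
        self.latest = {}   # doc_id -> newest version number

    def upsert_document(self, doc_id: str, text: str) -> int:
        version = self.latest.get(doc_id, 0) + 1
        # Write the new chunks first so the document never disappears from search.
        for n, piece in enumerate(chunk(text)):
            self.entries[(doc_id, version, n)] = {
                "text": piece, "vector": embed(piece), "deleted": False}
        # Then mark every chunk of older versions as deleted.
        for (d, v, _), entry in self.entries.items():
            if d == doc_id and v < version:
                entry["deleted"] = True
        self.latest[doc_id] = version
        return version

    def search(self, query: str, k: int = 3) -> list[str]:
        q = embed(query)
        live = [e for e in self.entries.values() if not e["deleted"]]
        live.sort(key=lambda e: sum(a * b for a, b in zip(q, e["vector"])), reverse=True)
        return [e["text"] for e in live[:k]]


index = IncrementalIndex()
index.upsert_document("pricing", "Widget price is 10 dollars")
index.upsert_document("pricing", "Widget price is 12 dollars")
results = index.search("widget price")
```

Only the changed document is re-chunked and re-embedded; nothing else in the index is touched, which is where the seconds-not-hours latency comes from.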

Vector Database Considerations

Not all vector databases are designed for this pattern. The critical capabilities:

Write throughput: Can the database handle continuous writes without blocking reads? Traditional vector databases (designed for batch writes + frequent reads) can bottleneck when handling continuous updates. Modern databases like Qdrant and Pinecone support concurrent reads and writes, a critical requirement.

Update semantics: Can you incrementally add/update/delete vectors without full reindexing? Databases with real-time indexing (rather than batch reindexing) maintain searchability during updates.

Consistency guarantees: If an agent queries while an update is in flight, what happens? The answer determines whether agents see stale data during transitions. “Eventually consistent” architectures (updates propagate over seconds) work for many enterprises but may be unacceptable in regulated domains. “Strongly consistent” architectures (updates visible immediately) require more complex engineering.

For compliance-sensitive enterprise agents, strong consistency is often mandatory. This typically means using a vector database with immediate index updates, not eventual consistency.
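To make the difference concrete, here is a toy model of the “update in flight” question. It assumes writes sit in a pending queue until applied; `ToyIndex` and its two read methods are hypothetical names, and flushing pending writes before a read is only one simple way to get a strong read, not how any particular database implements it.

```python
from collections import deque
from typing import Optional


class ToyIndex:
    """Writes land in a pending queue and become visible only once applied."""

    def __init__(self) -> None:
        self.visible = {}       # what queries can currently see
        self.pending = deque()  # accepted writes that are not yet visible

    def write(self, key: str, value: str) -> None:
        self.pending.append((key, value))

    def apply_pending(self) -> None:
        while self.pending:
            key, value = self.pending.popleft()
            self.visible[key] = value

    def read_eventual(self, key: str) -> Optional[str]:
        # Returns whatever is visible right now; in-flight writes are ignored.
        return self.visible.get(key)

    def read_strong(self, key: str) -> Optional[str]:
        # Flushes every accepted write before answering.
        self.apply_pending()
        return self.visible.get(key)


index = ToyIndex()
index.write("reg-7", "original rule")
index.apply_pending()
index.write("reg-7", "revised guidance")  # update is now in flight

stale = index.read_eventual("reg-7")
fresh = index.read_strong("reg-7")
```

The strong read pays for its guarantee with latency, since it waits on the write path. That trade is usually acceptable for a compliance check and usually wasteful for general reference lookups.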

The Hybrid Approach: Fresh Retrieval + Selective Fine-Tuning

Here’s where the economics shift: once you’ve implemented real-time retrieval, you can reconsider the fine-tuning question.

Traditional thinking: “We’ll fine-tune the LLM on our enterprise data to make it specialized.” This requires retraining whenever data changes significantly—expensive and inflexible.

New thinking: “We’ll keep fast-changing facts out of the model’s weights and let real-time RAG deliver fresh data, and fine-tune only on decision logic and reasoning patterns that don’t change frequently.”

What to Fine-Tune On

Decision logic that’s stable: If your agent needs to apply complex business rules consistently (“only approve trades with X risk profile,” “only recommend products matching Y criteria”), fine-tune on examples of correct decisions. This decision logic doesn’t change daily.

Reasoning patterns: Fine-tune on examples of how the agent should decompose complex queries or handle edge cases. This reasoning capability compounds—a fine-tuned model that reasons better uses RAG more effectively.

Domain terminology and conventions: Fine-tune on your industry’s specific language and abbreviations. This improves retrieval relevance when the agent formulates queries.

What NOT to Fine-Tune On

Data that changes frequently: Don’t fine-tune on pricing, inventory, regulations, or any content that updates regularly. That’s RAG’s job. Fine-tuning on dynamic data creates staleness again.

Content that varies by customer or context: Don’t fine-tune on customer-specific data or situational content. That’s retrieval’s job.

Volume-based personalization: Don’t fine-tune to handle a growing catalog of documents. Dynamic scaling of your retrieval index (adding new documents to RAG) is far more cost-effective than retraining an LLM each time content expands.

The Cost Trade-Off

Implementing both real-time RAG AND selective fine-tuning appears expensive. In practice, it’s more cost-efficient than either approach alone:

Real-time RAG alone leans entirely on retrieval relevance. Any retrieval misses or ranking errors propagate to agent decisions. This often drives up retrieval complexity (hybrid search, re-ranking, query optimization) to compensate. Total cost: high infrastructure, moderate LLM inference (because reasoning load is distributed across many retrieval calls).

Fine-tuning alone means every data change requires retraining. For enterprise agents in dynamic domains, you’d be retraining monthly or quarterly. Total cost: very high training costs, moderate inference (faster queries but no real-time updates).

Hybrid approach splits the workload: Real-time RAG handles data freshness and scale (no retraining needed). Selective fine-tuning handles reasoning and decision quality (trained once, leveraged continuously). Total cost: moderate infrastructure + one-time fine-tuning cost. The key: fine-tuning is amortized across many agent decisions, not repeated every time data changes.

One enterprise’s metric: moving from a static-RAG approach (with monthly retraining cycles) to a hybrid approach (real-time RAG + one-time reasoning fine-tuning) cut infrastructure costs by 32% while reducing decision latency from 8 seconds to 1.2 seconds.

Implementation Patterns for Enterprise Agents

Pattern 1: The Streaming-First Architecture

For maximum freshness and agent reliability, the architecture should be:

- Data sources → Change streams (CDC, webhooks, APIs)

- Change streams → Message queue (Kafka, AWS Kinesis)

- Message queue → Processing pipeline (chunk, embed, validate)

- Processing pipeline → Vector index (real-time writes)

- Vector index ← Agent queries

Latency from source change to searchable index: 2-15 seconds depending on complexity.
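The stages above can be wired together in a few lines to show where latency gets measured. This sketch swaps the message broker for Python’s in-process `queue.Queue` and uses a placeholder in place of a real embedding call. `Change`, `process`, and `run_pipeline` are illustrative names, not part of Kafka, Kinesis, or any vector database client.

```python
import queue
import time
from dataclasses import dataclass, field


@dataclass
class Change:
    doc_id: str
    text: str
    # Stamped when the change is emitted, so the pipeline can measure latency.
    emitted_at: float = field(default_factory=time.monotonic)


def process(change: Change) -> list[dict]:
    """Validate, chunk by sentence, and attach a placeholder 'vector'."""
    if not change.text.strip():
        return []  # validation: skip empty documents
    sentences = [s.strip() for s in change.text.split(".") if s.strip()]
    return [{"doc_id": change.doc_id, "text": s, "vector": [float(len(s))]}
            for s in sentences]


def run_pipeline(changes: queue.Queue, index: dict) -> list[float]:
    """Drain the queue, write to the index, and return per-change latencies."""
    latencies = []
    while True:
        try:
            change = changes.get_nowait()
        except queue.Empty:
            break
        records = process(change)
        if records:
            index[change.doc_id] = records  # replaces any older version
            latencies.append(time.monotonic() - change.emitted_at)
    return latencies


changes = queue.Queue()
changes.put(Change("guideline-1", "Dose is 5 mg. Review weekly."))
changes.put(Change("empty-doc", "   "))
index = {}
latencies = run_pipeline(changes, index)
```

In a real deployment the worker would block on the broker instead of draining and exiting, but the shape is the same: each change carries its own timestamp, and latency is recorded at the moment the write lands.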

Reported results: financial services firms using this pattern report 99.7% data freshness within 30 seconds, and healthcare systems using it report that clinical guidelines are searchable within 10 minutes of publication.

Pattern 2: The Multi-Layer Consistency Model

For different data freshness requirements:

- Hot layer (real-time): Current content, instant updates, used for time-sensitive queries

- Warm layer (hourly): Recent content, used for general reference

- Cold layer (daily): Historical content, used for trend analysis

Agents query the appropriate layer based on query context. A pricing query hits the hot layer. A historical analysis query hits the cold layer.

This reduces the cost of real-time freshness: you’re only maintaining a real-time index for time-sensitive data, not for everything.
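Routing can start out very simple. The sketch below picks a layer from keywords in the query. The term lists and the `choose_layer` function are assumptions for illustration; a production router would more likely use a classifier or metadata from the agent’s task.

```python
from enum import Enum


class Layer(Enum):
    HOT = "hot"    # real-time index
    WARM = "warm"  # hourly index
    COLD = "cold"  # daily index


# Hypothetical keyword lists; tune these to your own domain.
HOT_TERMS = {"price", "pricing", "inventory", "stock", "current"}
COLD_TERMS = {"trend", "historical", "history", "year-over-year"}


def choose_layer(query: str) -> Layer:
    words = set(query.lower().replace("?", " ").split())
    if words & HOT_TERMS:
        return Layer.HOT   # checked first: when in doubt, prefer fresher data
    if words & COLD_TERMS:
        return Layer.COLD
    return Layer.WARM


pricing = choose_layer("What is the current price of SKU 42?")
history = choose_layer("Show the historical trend for returns")
general = choose_layer("How do I file an expense report")
```

Checking the hot terms first is a deliberate choice: a query that mentions both “current” and “trend” goes to the fresher layer, trading some cost for a lower chance of a stale answer.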

Pattern 3: The Hybrid Retrieval + Re-Ranking

With fresh data comes another consideration: relevance ranking. Real-time data might be fresh but not always most relevant.

The pattern:

- Retrieve broadly from the fresh index (get current candidates)

- Re-rank using a cross-encoder model trained on your domain (get most relevant)

- Return top results to the agent

Re-ranking adds 50-200ms latency but dramatically improves relevance because you’re ranking against live data with a specialized model.

Cost: One-time training of a re-ranking model (~$10K-30K for domain-specific fine-tuning). Inference cost: ~$0.001 per query for re-ranking. Scale this across millions of agent queries and the ROI is clear.
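The retrieve-then-re-rank flow looks like this in miniature. Both scorers are toys: `first_stage` ranks by shared words, and `toy_pair_score` is only a placeholder for a trained cross-encoder, which would be passed in through the same `score` parameter. All names here are illustrative.

```python
from typing import Callable


def first_stage(query: str, docs: list[str], k: int) -> list[str]:
    """Cheap, broad recall: rank by the number of shared words."""
    q = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:k]


def rerank(query: str, candidates: list[str],
           score: Callable[[str, str], float], top_n: int) -> list[str]:
    """Expensive, precise ordering applied only to the shortlisted candidates."""
    return sorted(candidates, key=lambda d: score(query, d), reverse=True)[:top_n]


def toy_pair_score(query: str, doc: str) -> float:
    """Placeholder for a cross-encoder: rewards an exact phrase match, then overlap density."""
    q_words = query.lower().split()
    d_words = doc.lower().split()
    overlap = len(set(q_words) & set(d_words)) / max(len(d_words), 1)
    phrase_bonus = 1.0 if query.lower() in doc.lower() else 0.0
    return phrase_bonus + overlap


docs = [
    "refund policy for enterprise customers updated today",
    "enterprise refund policy and many other unrelated topics "
    "such as travel and parking policy",
    "cafeteria menu",
]
query = "enterprise refund policy"
candidates = first_stage(query, docs, k=2)
top = rerank(query, candidates, toy_pair_score, top_n=1)
```

The first stage cannot tell the two policy documents apart because both share three query words. The second stage can, because it scores the query and document together. That is the gap a re-ranker fills, and it only has to run on the shortlist.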

Measuring Freshness in Production

How do you know if your agent has fresh data? Most enterprises track three metrics:

Data Recency: Median time since each indexed document was last synchronized with its source. For compliance agents, this should be <1 hour. For pricing agents, <5 minutes. For clinical guidelines, <24 hours.

Update Latency: Time from source change to searchable in the index. Track the 50th, 95th, and 99th percentiles. If 95th percentile latency is 2 hours, then 5% of source changes take more than 2 hours to become searchable, and any agent decision that touches one of those documents in the meantime is based on stale data.

Freshness Incidents: Count of situations where an agent retrieved outdated information that led to incorrect decisions (detected post-facto). This is your ground truth for whether freshness matters operationally.
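All three metrics can be computed from data the pipeline already has. A minimal sketch, assuming you log one update latency per source change and one recency value per indexed document, both in seconds. `freshness_report` and its field names are illustrative; the percentile helper uses `statistics.quantiles` from the standard library.

```python
import statistics


def percentile(values: list[float], pct: int) -> float:
    """Percentile from statistics.quantiles with 100 cut points (inclusive method)."""
    cuts = statistics.quantiles(values, n=100, method="inclusive")
    return cuts[pct - 1]


def freshness_report(update_latencies_s: list[float],
                     recency_s: list[float],
                     incidents: int) -> dict:
    return {
        "median_recency_s": statistics.median(recency_s),
        "update_latency_p50_s": percentile(update_latencies_s, 50),
        "update_latency_p95_s": percentile(update_latencies_s, 95),
        "update_latency_p99_s": percentile(update_latencies_s, 99),
        "freshness_incidents": incidents,
    }


# Synthetic inputs for illustration only: latencies of 1..101 seconds.
latencies = [float(x) for x in range(1, 102)]
report = freshness_report(latencies, recency_s=[60.0, 120.0, 600.0], incidents=2)
```

Tracking the tail percentiles alongside the median matters because a healthy median can hide a small share of changes that take far longer to land, and those are the ones most likely to show up later as freshness incidents.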

One healthcare system implemented freshness monitoring and discovered that 12% of their clinical recommendation agent’s decisions were based on guideline versions that had been superseded more than 24 hours earlier. Implementing real-time refresh reduced this to <0.5%, improving recommendation accuracy by 18%.

The Emerging Standard

By 2025, enterprise RAG for agentic AI is converging on this pattern:

- Real-time data ingestion for any content that changes more than monthly

- Continuous index updates without batched reindexing cycles

- Selective fine-tuning on decision logic and reasoning patterns that are stable

- Multi-layer consistency for different freshness requirements

- Freshness monitoring to track whether the system is achieving required data currency

The result: agents that make decisions on current information, without the infrastructure complexity or cost multiplication of earlier approaches.

If your enterprise agent is still relying on static RAG updated on a schedule, you’re operating at the wrong end of the freshness-accuracy trade-off. Your agent has the autonomy to make decisions but not the current information to make good ones.

The fix requires rethinking the architecture, not just tuning retrieval parameters. And the enterprises doing this now—moving from batched to streaming, from static to real-time, from cost multiplication to cost efficiency—are building agents that actually work in production.

Your competition is already moving. The question is whether you’re building yesterday’s RAG or tomorrow’s.