Your enterprise RAG system is silently degrading right now, and you probably don’t know it. The metrics look fine on your dashboard. Query latency is within SLA. Retrieval accuracy benchmarks are solid. But somewhere in your production pipeline, stale data is polluting your responses, outdated information is shaping decisions, and your system is answering yesterday’s questions with yesterday’s answers.

This is the knowledge staleness crisis—and it’s why 73% of enterprise RAG deployments fail within the first year. The culprit isn’t a technical bug you can patch. It’s architectural: static knowledge bases can’t keep pace with the real-time information demands of modern enterprises. Your vector database snapshot from this morning is already obsolete by noon. Your embeddings don’t know about the contract amendment signed an hour ago, the product recall issued this morning, or the legal ruling that just reshuffled regulatory compliance requirements.

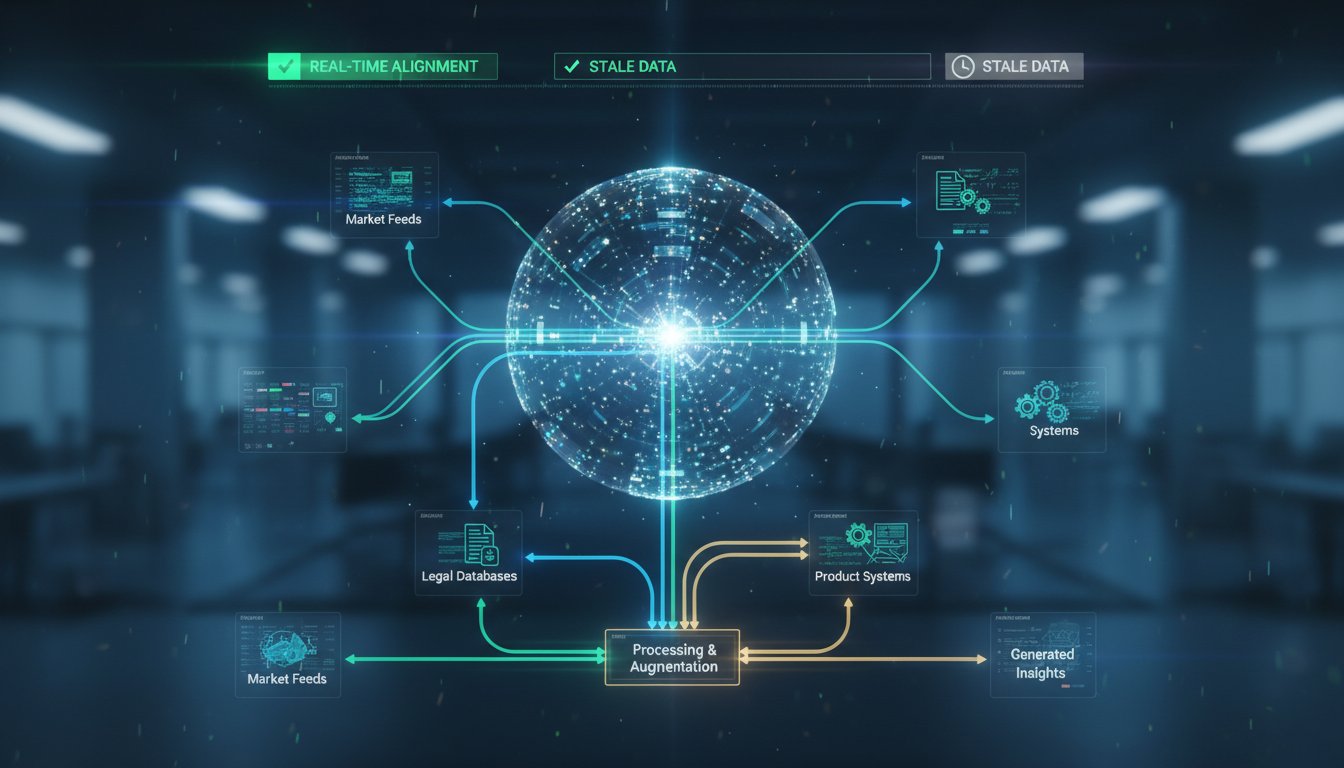

The solution emerging from forward-thinking enterprises isn’t more monitoring dashboards (though you need those too). It’s architectural evolution—shifting from static retrieval to real-time knowledge graph integration that keeps your RAG system continuously aligned with ground truth. This isn’t theoretical anymore. Legal teams at top firms are deploying real-time knowledge graphs to track live rulings. Financial institutions are embedding live market data feeds directly into their retrieval pipelines. Customer support teams are pulling product updates in real-time rather than retraining their entire system every quarter.

The enterprises that understand this shift will have a massive competitive advantage. Those that don’t will spend the next two years wondering why their RAG investments deliver lower ROI than traditional search systems. This guide walks you through exactly why real-time knowledge graphs matter, how they work in production, and the specific architectural patterns that separate the 27% of enterprises whose RAG systems actually succeed from the 73% that stumble. We’ll move past theoretical comparisons into the practical implementation decisions that determine whether your knowledge graph becomes a scaling asset or a bottleneck nightmare.

The Root Cause: Why Static RAG Systems Are Architected for Failure

The fundamental problem with most enterprise RAG implementations is their assumption: knowledge is relatively stable. You index your documentation, embed it, store the vectors, and retrieve them as needed. This assumption works fine for mostly-static domains—archived legal documents, historical financial records, or published technical specifications. But the moment your enterprise needs RAG to support real-time decision-making, the entire architecture breaks down.

Consider a financial services example: Your RAG system is supporting a credit decision engine. A customer applies for a line of credit at 2:15 PM. Your system retrieves the customer’s last three years of transaction history, recent credit products, and relevant regulatory guidelines—all from your vector database snapshot taken that morning. The retrieval works perfectly. But here’s what your system doesn’t know: at 1:47 PM, the Federal Reserve released new monetary policy guidance that changed rate forecasts. At 2:03 PM, a competitor announced a new product category that affects your competitive positioning on pricing. At 2:08 PM, regulatory guidance from a specific state regulator was published that impacts this customer’s eligibility.

Your RAG system makes a credit decision based on information that was current 15 minutes ago. That 15-minute latency gap doesn’t sound dramatic until you realize it’s compounded across thousands of decisions, across multiple business lines, across compliance-sensitive domains where regulatory changes can make yesterday’s decisions illegal today.

This is why knowledge staleness is the #1 reason enterprise RAG systems degrade in production. Not retrieval latency. Not LLM hallucinations. Not evaluation metrics—though those matter too. It’s the architectural decision to treat knowledge as a static asset rather than a continuously evolving truth.

The Ripple Effect: How Staleness Cascades Into Failure

When your enterprise RAG system relies on static knowledge, degradation follows a predictable trajectory. Week 1-2: Everything works fine because the knowledge snapshot is recent. Week 3-4: You notice retrieval quality drifting on certain query types—the ones that depend on information that updates frequently. Week 8: Your compliance team flags that the system recommended actions based on regulatory guidance that was superseded. Month 4: You realize that your cost-per-query has climbed 40% because you’re now running expensive retraining pipelines monthly instead of the originally-planned quarterly cycle. Month 6: You’re looking at a full system rebuild or acceptance that your RAG investment underperforms traditional search.

The cascade happens because static systems force impossible tradeoffs:

- Retraining frequency vs. cost: More frequent retraining = higher relevance but exponential infrastructure costs. Many teams settle for “frequent enough” rather than “current enough,” accepting the degradation.

- Context window size vs. staleness: You could include more context to compensate for staleness, but that increases latency and costs. The math never works out.

- Accuracy vs. compliance: You might accept slightly stale data for general queries, but regulated industries can’t. Compliance requires audit trails showing exactly what information the system used and when it was current. Static systems can’t provide this.

This is why 73% of enterprise RAG systems hit the failure threshold. The static architecture works until it doesn’t—and then it fails catastrophically because the failure isn’t obvious until it’s systemic.

The Real-Time Knowledge Graph Solution: Architecture That Stays Current

Real-time knowledge graph architecture represents a fundamental shift in how enterprise RAG systems handle knowledge. Instead of treating knowledge as a static snapshot you embed once, you treat it as a continuously-updating truth graph that dynamically reflects ground reality.

Here’s how it works: Your knowledge graph maintains relationships between entities (products, regulations, customers, market conditions, contract terms) as a structured representation. Instead of embedding static documents, you embed the relationships themselves. When ground truth changes—a regulation is updated, a product is modified, a contract is amended—you update the graph. The retrieval system immediately sees the updated relationships and can incorporate them into responses.

Architecture 1: The Hybrid Retrieval Stack (Sparse + Dense + Graph)

The most effective real-time knowledge graph implementations use three retrieval channels working simultaneously:

Channel 1 – BM25 Sparse Retrieval: Handles exact-match and keyword queries. A regulatory query for “Section 404 compliance requirements” needs exact text matching. Sparse retrieval excels here and doesn’t require vector embeddings. Latency: ~50-150ms.

Channel 2 – Dense Vector Retrieval: Handles semantic and conceptual queries. “What products should we offer customers with credit scores between 680-720?” This requires semantic understanding. Vector embeddings excel here. Latency: ~100-300ms for retrieval + ~150-400ms for ranking.

Channel 3 – Graph Traversal Retrieval: Handles relationship queries and multi-hop reasoning. “What regulations apply to this product type in this jurisdiction?” This requires understanding entity relationships. Graph databases excel here. Latency: ~50-200ms for standard traversals, up to 500ms+ for complex multi-hop queries.

A real-time knowledge graph system routes queries intelligently:

Incoming Query

↓

Query Router

├─ Exact match patterns? → BM25 Sparse (50-150ms)

├─ Semantic understanding needed? → Dense Vector (250-700ms)

└─ Relationship/entity lookup? → Graph Traversal (50-500ms)

↓

Hybrid Ranker (combines results from all channels)

↓

Context Assembly (real-time fact verification)

↓

LLM Generation (with current context)

This architecture means that when a contract is updated in your system, the change immediately propagates through the graph. When a new regulation is published and ingested, the graph reflects it within seconds. When market data updates, the relationships update. Your retrieval system always works with current information.

Architecture 2: The Event-Driven Knowledge Update Pattern

Production real-time knowledge graphs don’t rely on scheduled batch retraining. They use event-driven updates:

Trigger Events:

– Document ingestion (new regulation, policy, contract)

– Data modifications (price changes, product updates, customer status changes)

– External data feeds (market data, regulatory notifications, news)

– User feedback loops (query results marked as inaccurate, retrieval quality flags)

Update Pipeline:

Event triggered (e.g., new regulation published)

↓

Extract entities & relationships from new information

↓

Compute differential updates to graph

↓

Update graph database (microsecond-latency operation)

↓

Invalidate affected vector embeddings (optional, if relationship changed)

↓

Trigger downstream observability alerts (monitoring system notified)

↓

System immediately reflects new knowledge

The key insight: You don’t re-embed everything. You update the graph structure. This is orders of magnitude faster than traditional retraining. Legal firms using this pattern can reflect new case law within 30 seconds of publication. Financial institutions can update market-sensitive information within milliseconds of data feed updates. Customer support teams can reflect product changes in real-time.

Architecture 3: The Temporal Knowledge Graph Pattern (For Regulated Industries)

Compliance-heavy industries (finance, healthcare, legal) need more than just current information. They need to know when information was current and prove it in audits.

Temporal knowledge graphs add time-versioning to every relationship:

Entity: "Regulation SEC-404"

Relationships:

├─ applies_to: "Public Companies"

│ ├─ valid_from: 2002-07-30

│ ├─ valid_to: null (still current)

│ └─ source: "Sarbanes-Oxley Act"

├─ applies_to: "Foreign Private Issuers"

│ ├─ valid_from: 2007-01-01

│ ├─ valid_to: 2024-12-31

│ └─ source: "SEC Regulation S-X"

└─ applies_to: "Foreign Private Issuers (Updated)"

├─ valid_from: 2025-01-01

├─ valid_to: null

└─ source: "SEC Amendment 2024-Q4"

When your system retrieves information, it queries for relationships valid at the specific point in time relevant to the decision. If a compliance officer asks “What did our system know about SEC-404 requirements on January 15, 2025?”, the temporal graph can prove exactly what information was available at that moment. This satisfies audit requirements that static RAG systems cannot possibly meet.

Real-World Production Patterns: Where Knowledge Graphs Win

Use Case 1: Legal Research & Contract Management

The Problem: Legal firms need access to current case law, regulatory guidance, and contract precedents. A case decision released this morning affects what advice you give clients this afternoon. Static RAG systems mean lawyers are either working with stale information or burning resources on expensive manual updates.

The Real-Time Knowledge Graph Solution:

- Graph nodes represent legal entities (cases, regulations, contracts, parties, precedents)

- Edges represent relationships (“cites”, “supersedes”, “modifies”, “interprets”)

- Event triggers: New case decisions, regulatory guidance, appellate rulings

- Update pattern: Within 30 seconds of publication, new rulings are extracted, entities created, relationships established

- Temporal versioning: Every relationship maintains validity dates for historical audit

Result: Legal research AI always works with current case law. A lawyer can ask “What’s the current precedent for trademark disputes in tech licensing?” and receive responses grounded in cases decided today, not from the last quarterly update. The system can also show how precedent has evolved: “This case superseded the 2019 ruling on X, which itself modified the 1997 guidance on Y.”

Impact: Firms report 3x faster research cycles and significantly higher client confidence because advice is grounded in current law, not historical snapshots.

Use Case 2: Financial Risk & Compliance Monitoring

The Problem: Regulatory guidance and market conditions shift constantly. A risk control system built on yesterday’s regulatory framework might be enforcing obsolete rules or missing new requirements. Financial institutions need real-time compliance without retraining the entire system daily.

The Real-Time Knowledge Graph Solution:

- Graph nodes represent regulatory requirements, risk categories, market conditions, and products

- Edges represent relationships (“product_subject_to”, “regulation_applies_in”, “market_condition_affects_risk”)

- Event triggers: Regulatory announcements, market data feeds (interest rates, volatility indices), product changes

- Update pattern: Regulatory changes reflected within minutes, market data within seconds

- Temporal versioning: Track when regulations became effective for historical compliance reporting

Result: Risk systems always operate under current regulatory regime. When the Federal Reserve releases new guidance on consumer lending requirements, the graph updates, and tomorrow’s credit decisions use the new framework. When a market index hits certain thresholds, portfolio recommendations adjust in real-time.

Impact: Institutions reduce compliance risk because the system is architecturally incapable of operating on stale regulatory guidance. Cost-per-decision actually decreases because you eliminate the need for expensive daily retraining cycles.

Use Case 3: Customer Support with Real-Time Product Information

The Problem: Support teams need current product information, but products change constantly—features added, bugs fixed, pricing updated. Traditional RAG systems become a liability when they recommend discontinued products or outdated procedures.

The Real-Time Knowledge Graph Solution:

- Graph nodes represent products, features, support procedures, pricing, and known issues

- Edges represent relationships (“product_has_feature”, “feature_available_in_regions”, “pricing_for_tier”, “issue_affects_users”)

- Event triggers: Product catalog updates, feature releases, pricing changes, support ticket patterns flagged as emerging issues

- Update pattern: Product changes reflected immediately when pushed to production

Result: Support AI always recommends current products and procedures. When a feature is released, the graph reflects it within minutes. When a bug is identified affecting specific user segments, the graph is updated to flag it.

Impact: Support teams reduce misdirected recommendations by 60%+ because the system works with real-time product truth. Customer satisfaction increases because the AI is never recommending deprecated features or outdated workarounds.

Implementation Blueprint: From Strategy to Production

Moving from static RAG to real-time knowledge graphs requires three distinct phases:

Phase 1: Foundation (Weeks 1-4)

Goal: Build the graph infrastructure and basic update pipeline

Tasks:

1. Select graph database: Neo4j (most production-ready for enterprise), TigerGraph (best for scale), or Cosmos DB Graph (if Azure-native required)

2. Design entity & relationship schema specific to your domain

3. Seed initial knowledge base from current static sources

4. Implement basic entity extraction pipeline (LLM-based or rule-based for your domain)

5. Set up event streaming infrastructure (Kafka, Pub/Sub, or SNS depending on your cloud)

Success Metrics: Graph has 80%+ of production entities, basic update pipeline tested, latency <500ms for simple traversals

Phase 2: Intelligence (Weeks 5-8)

Goal: Add retrieval routing, ranking, and observability

Tasks:

1. Implement hybrid retrieval router (sparse + dense + graph channels)

2. Build cross-channel ranker that intelligently combines results from all three retrieval modes

3. Add relationship-based context assembly (automatically include related information with retrieved results)

4. Implement retrieval quality monitoring (track how often graph relationships are used vs. dense vectors vs. sparse)

5. Set up automated degradation detection (alert when graph staleness increases)

Success Metrics: Hybrid retrieval produces 15%+ better results than single-channel retrieval, latency stays <1 second p95, graph updates propagate in <2 minutes

Phase 3: Scale (Weeks 9-12)

Goal: Add temporal versioning, compliance features, and production hardening

Tasks:

1. Implement temporal knowledge graph (add valid_from/valid_to to relationships)

2. Build audit trail infrastructure (every graph change is logged with timestamp and source)

3. Add compliance reporting dashboards (show what information system used at decision time)

4. Implement graph partitioning strategy for scaling to 10M+ entities

5. Set up multi-region graph replication for disaster recovery

Success Metrics: System passes compliance audits, can reproduce any historical decision with full information lineage, supports your projected entity/relationship scale for 2+ years

The Observability Requirement You Can’t Ignore

Here’s the critical insight most teams miss: Real-time knowledge graphs only solve the staleness problem if you can detect when staleness is still happening.

You need monitoring that answers these questions:

-

How current is your graph right now? What’s the maximum age of any relationship in active use? If your product relationships are from this morning but your regulatory relationships are from last week, you have a staleness problem.

-

How often is the graph actually being used? If your hybrid retriever is using dense vectors 80% of the time and the graph 20% of the time, your graph investment isn’t paying off. (This indicates your graph schema might be missing important relationships.)

-

Are updates actually propagating? Set up synthetic tests: “Inject a fake test relationship into the graph, wait 30 seconds, verify it appears in retrieval results.” If this test fails, your update pipeline is broken and you won’t notice until production degradation is severe.

-

What’s your p95 graph traversal latency? As your graph scales from 100K to 10M entities, complex traversals will slow. Monitor this actively. Once you hit 500ms on multi-hop queries, it’s time to implement query optimization or graph partitioning.

-

Which relationships have never been retrieved? This identifies schema design problems. If you created a relationship type that’s never used, your graph schema doesn’t match production query patterns.

Implement these monitors from day one of graph deployment. Don’t wait until you notice production degradation. You should be able to answer all five questions from your observability dashboard at any moment.

The Decision Tree: Is Real-Time Knowledge Graph Right for Your Enterprise?

Not every enterprise needs real-time knowledge graphs. The overhead is real—additional infrastructure, more complex pipelines, steeper operational learning curve. Use this decision tree:

Question 1: Do you have regulatory or compliance requirements that mandate knowing exactly what information was current at the time of a decision?

– YES → You need temporal knowledge graphs. Static RAG won’t meet audit requirements.

– NO → Continue to Question 2.

Question 2: Does your domain have information that updates more than weekly (regulations, pricing, product features, market conditions)?

– YES → Continue to Question 3.

– NO → Static RAG might be sufficient. Knowledge staleness won’t be your primary failure mode.

Question 3: Are you planning to scale this RAG system to support >100 concurrent users or >1M decisions annually?

– YES → Knowledge staleness will become catastrophic. Real-time graphs solve this at scale.

– NO → Consider hybrid approach: static graphs for the pilot, upgrade to real-time if usage scales.

Question 4: Do you have 2-3 engineers who can own the graph infrastructure and update pipeline long-term?

– YES → You can successfully deploy and maintain real-time knowledge graphs.

– NO → Start with consulting partners or managed graph services (Neo4j Aura, etc.) to reduce operational burden.

If you answered YES to Questions 1-3 and YES to Question 4, real-time knowledge graphs are your architectural imperative. Not optional. The enterprises that skip this step are the 73% that fail in production.

The Competitive Reality

The enterprises winning with enterprise RAG in 2025 share one architectural trait: they solved the knowledge staleness problem. They’re not running static systems that degrade predictably. They’re running real-time systems that stay current.

This is why the top law firms are deploying real-time knowledge graphs to track rulings within hours of publication. Why leading financial institutions are embedding market data feeds directly into retrieval pipelines. Why customer support teams are reflecting product changes in real-time.

The technical insight is straightforward: Knowledge that doesn’t degrade is knowledge that doesn’t fail. Real-time knowledge graphs are how you architect systems that stay current, stay compliant, and stay relevant as your enterprise evolves.

The teams that implement this architecture in Q1 2025 will spend 2025 refining their implementation and building competitive advantage. The teams that wait will spend 2026 explaining why their RAG systems underperform and catching up architecturally. By 2027, real-time knowledge graphs won’t be a competitive advantage—they’ll be table stakes for any enterprise RAG system in regulated industries.

The question isn’t whether you should build real-time knowledge graphs. It’s whether you’ll build them in time to avoid being part of the 73% that fails. Your next decision is simple: Which team owns the graph infrastructure roadmap in your organization? If you don’t know, now’s the time to decide. Because your RAG system’s future—and your Q3 2025 production success metrics—depend on it.