Retrieval-Augmented Generation Example for Backend Software Engineers

Retrieval-Augmented Generation (RAG) combines retrieval-based and generation-based models to improve performance on natural language processing (NLP) tasks. This article explains RAG for backend software engineers: its architecture, a basic implementation, and its practical applications, with Python code snippets throughout.

Introduction to Retrieval-Augmented Generation

RAG is a hybrid approach that uses retrieval and generation together to produce more accurate and contextually relevant responses. Retrieval-based systems search a fixed set of documents for the best match to a given query, while generation-based models use neural networks to produce a response from what they learned during training. RAG combines the two by first retrieving relevant documents and then using a generative model to produce a final response based on the retrieved information.

Why RAG?

The primary advantage of RAG is that responses are grounded in external knowledge rather than only in what the generator learned during training. Because the generator sees the retrieved passages, its output is more likely to be factually correct as well as coherent, although this still depends on retrieving the right passages. This is particularly useful in applications such as question answering, customer support, and content generation.

Architecture of RAG

The RAG architecture consists of two main components:

- Retriever: This component retrieves relevant documents or passages from a large corpus based on the input query.

- Generator: This component generates the final response using the retrieved documents as context.
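The split into two components can be sketched as two plain callables wired together. This is a minimal illustration only: the `Retriever` and `Generator` type aliases, `rag_answer`, and the toy stand-in functions are hypothetical names introduced here, and the keyword-overlap retriever and echo generator merely stand in for real models.

```python
from typing import Callable, List

# Hypothetical type aliases for illustration; not part of any library.
Retriever = Callable[[str, int], List[str]]   # (query, k) -> passages
Generator = Callable[[str, List[str]], str]   # (query, passages) -> answer


def rag_answer(query: str, retriever: Retriever, generator: Generator, k: int = 2) -> str:
    """Run retrieval first, then pass the passages to the generator."""
    passages = retriever(query, k)
    return generator(query, passages)


# Toy stand-ins so the sketch runs without any model downloads.
DOCS = [
    "The Eiffel Tower is located in Paris.",
    "Python is a popular programming language.",
]


def keyword_retriever(query: str, k: int) -> List[str]:
    """Rank documents by how many lowercase query words they share."""
    words = set(query.lower().replace("?", "").split())
    ranked = sorted(DOCS, key=lambda d: -len(words & set(d.lower().rstrip(".").split())))
    return ranked[:k]


def echo_generator(query: str, passages: List[str]) -> str:
    """Placeholder generator: returns the top passage instead of calling a model."""
    return passages[0] if passages else ""


print(rag_answer("Where is the Eiffel Tower located?", keyword_retriever, echo_generator, k=1))
```

Keeping the two components behind narrow interfaces like this makes it possible to swap a keyword retriever for a dense one, or one generator for another, without touching the calling code.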

Retriever

The retriever can be implemented using various techniques, such as TF-IDF, BM25, or dense retrieval models like DPR (Dense Passage Retrieval). The choice of retriever depends on the specific use case and the size of the corpus.
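To make the sparse option concrete, here is a small, dependency-free sketch of BM25 scoring. It assumes the common Okapi formulation with a smoothed IDF, default parameters `k1=1.5` and `b=0.75`, and a naive whitespace tokenizer; production systems would normally rely on a search engine or a dedicated library instead.

```python
import math
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase and split on whitespace after dropping basic punctuation."""
    return text.lower().replace(".", " ").replace("?", " ").split()


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Score every document against the query with the Okapi BM25 formula."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for t in tokenized for term in set(t))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            # Smoothed IDF (the "+ 1" inside the log keeps it non-negative).
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            # Term-frequency saturation with document-length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


sample_docs = [
    "The Eiffel Tower is located in Paris.",
    "The Great Wall of China is one of the New Seven Wonders of the World.",
    "Python is a popular programming language.",
]
scores = bm25_scores("Where is the Eiffel Tower located?", sample_docs)
best = max(range(len(sample_docs)), key=scores.__getitem__)
print(sample_docs[best])
```

Sparse scoring like this needs no model and no GPU, which is why it is often a reasonable baseline before moving to a dense retriever such as DPR.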

Generator

The generator is typically a sequence-to-sequence model such as T5 or BART, or a decoder-only language model such as GPT-3. It takes the retrieved documents and the input query as input and generates a coherent response.
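Because generators accept a limited amount of input, the query and passages usually have to be packed into a bounded prompt. The sketch below shows one way to do that, assuming a simple character budget (real systems typically count tokens) and a "question: ... context: ..." layout; `build_prompt` and `max_context_chars` are hypothetical names used only for illustration.

```python
from typing import List


def build_prompt(query: str, passages: List[str], max_context_chars: int = 200) -> str:
    """Join passages in rank order until the character budget is used up."""
    kept: List[str] = []
    used = 0
    for passage in passages:
        # Account for the separating space between passages.
        cost = len(passage) + (1 if kept else 0)
        if used + cost > max_context_chars:
            break
        kept.append(passage)
        used += cost
    context = " ".join(kept)
    return f"question: {query} context: {context}"


print(build_prompt(
    "Where is the Eiffel Tower located?",
    ["The Eiffel Tower is located in Paris.", "Python is a popular programming language."],
    max_context_chars=40,
))
```

Passages are added in rank order, so when the budget runs out it is the lowest-ranked passages that get dropped.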

Implementing RAG in Python

Let’s walk through a basic implementation of RAG using Python. We will use the Hugging Face Transformers library, which provides pre-trained models for both retrieval and generation, together with FAISS for the vector index.

Step 1: Install Required Libraries

First, we need to install the necessary libraries. You can do this using pip:

pip install transformers torch faiss-cpu sentencepiece

Step 2: Load Pre-trained Models

We will use the DPR model for retrieval and the T5 model for generation.

from transformers import DPRQuestionEncoder, DPRQuestionEncoderTokenizer, DPRContextEncoder, DPRContextEncoderTokenizer
from transformers import T5ForConditionalGeneration, T5Tokenizer

# Load DPR models and tokenizers
question_encoder = DPRQuestionEncoder.from_pretrained('facebook/dpr-question_encoder-single-nq-base')
question_tokenizer = DPRQuestionEncoderTokenizer.from_pretrained('facebook/dpr-question_encoder-single-nq-base')
context_encoder = DPRContextEncoder.from_pretrained('facebook/dpr-ctx_encoder-single-nq-base')
context_tokenizer = DPRContextEncoderTokenizer.from_pretrained('facebook/dpr-ctx_encoder-single-nq-base')

# Load T5 model and tokenizer
t5_model = T5ForConditionalGeneration.from_pretrained('t5-base')
t5_tokenizer = T5Tokenizer.from_pretrained('t5-base')

Step 3: Indexing the Corpus

We need to encode the documents in our corpus using the context encoder and store them in a FAISS index for efficient retrieval.

import faiss
import numpy as np

# Sample corpus
corpus = [
    "The Eiffel Tower is located in Paris.",
    "The Great Wall of China is one of the New Seven Wonders of the World.",
    "Python is a popular programming language."
]

# Encode the corpus: one embedding row per document
encoded_corpus = []
for doc in corpus:
    inputs = context_tokenizer(doc, return_tensors='pt')
    embeddings = context_encoder(**inputs).pooler_output.detach().numpy()
    encoded_corpus.append(embeddings)
encoded_corpus = np.vstack(encoded_corpus)

# Create FAISS index. DPR embeddings are trained to be compared by
# inner product, so an inner-product index is used rather than L2.
index = faiss.IndexFlatIP(encoded_corpus.shape[1])
index.add(encoded_corpus)

Step 4: Retrieving Relevant Documents

Given a query, we can now retrieve the most relevant documents from the corpus.

def retrieve_documents(query, k=2):
    inputs = question_tokenizer(query, return_tensors='pt')
    query_embedding = question_encoder(**inputs).pooler_output.detach().numpy()
    distances, indices = index.search(query_embedding, k)
    return [corpus[i] for i in indices[0]]

query = "Where is the Eiffel Tower located?"
retrieved_docs = retrieve_documents(query)
print("Retrieved Documents:", retrieved_docs)

Step 5: Generating the Final Response

Finally, we use the retrieved documents as context to generate the final response using the T5 model.

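def generate_response(query, retrieved_docs):
    # Concatenate the retrieved passages into a single context string
    context = " ".join(retrieved_docs)
    input_text = f"question: {query} context: {context}"
    # Truncate so long contexts do not exceed the model's input limit
    inputs = t5_tokenizer(input_text, return_tensors='pt', truncation=True)
    # Cap the answer length explicitly instead of relying on the default
    outputs = t5_model.generate(**inputs, max_new_tokens=64)
    response = t5_tokenizer.decode(outputs[0], skip_special_tokens=True)
    return response

response = generate_response(query, retrieved_docs)
print("Generated Response:", response)

Practical Applications of RAG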

RAG can be applied to various real-world scenarios, including:

Question Answering

RAG can significantly improve the accuracy of question-answering systems by retrieving relevant documents and generating precise answers. This is particularly useful in domains such as healthcare, legal, and customer support, where accurate information retrieval is crucial.

Content Generation

In content generation, RAG can be used to create articles, summaries, and reports by retrieving relevant information from a large corpus and generating coherent and contextually appropriate content.

Chatbots and Virtual Assistants

RAG can enhance the performance of chatbots and virtual assistants by providing more accurate and contextually relevant responses. This can lead to improved user satisfaction and engagement.

Knowledge Management

Organizations can use RAG to manage and retrieve knowledge from large document repositories, making it easier for employees to find relevant information and make informed decisions.

Challenges and Future Directions

While RAG offers significant advantages, it also presents several challenges:

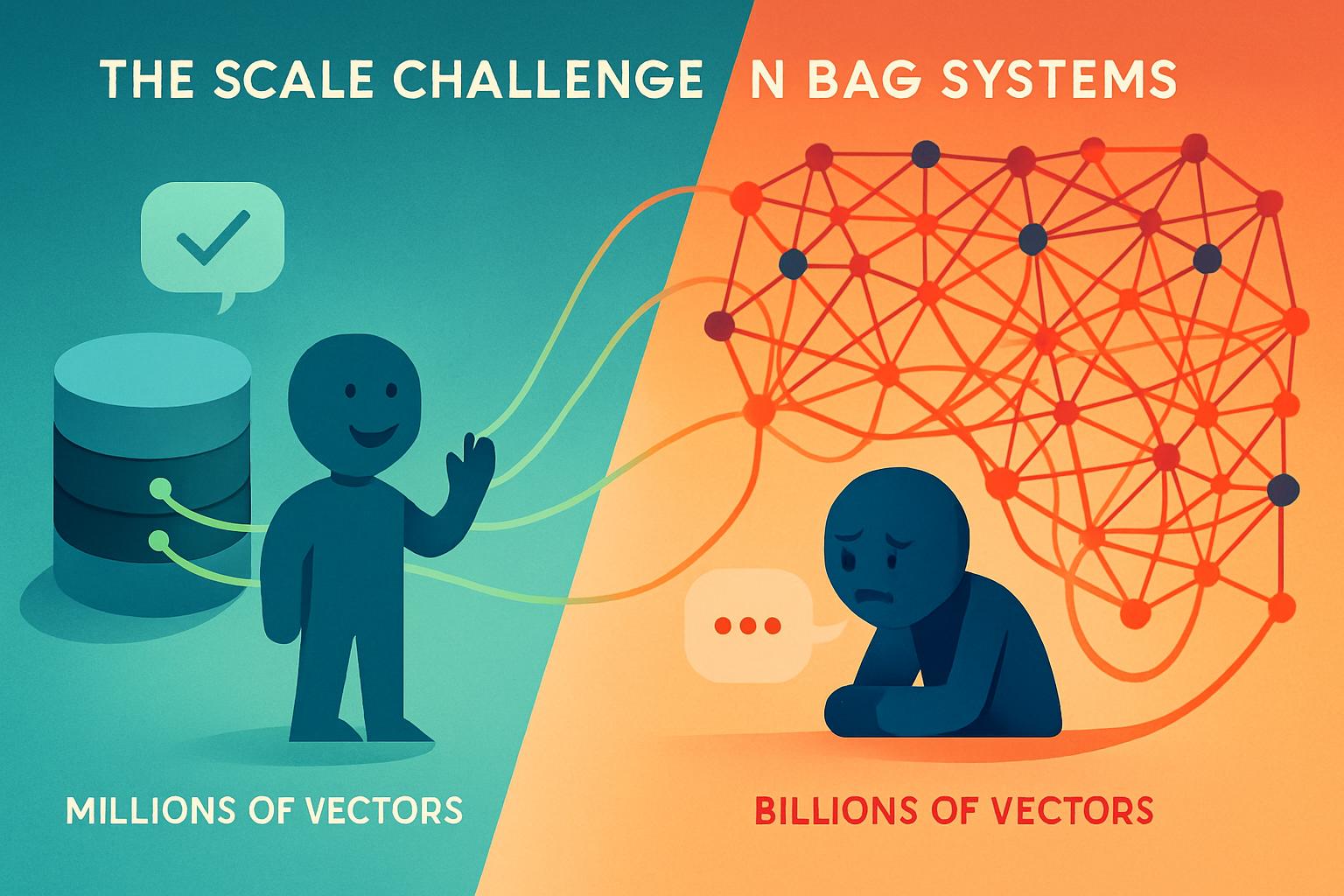

Scalability

As the corpus grows, exhaustive search over every document embedding becomes computationally expensive. Approximate nearest-neighbor indexes, such as those provided by FAISS, can mitigate this by trading a small amount of recall for much faster search.
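The idea behind many scalable indexes is to avoid comparing the query against every vector. The toy sketch below illustrates the inverted-file approach (the idea behind FAISS index types such as `IndexIVFFlat`) in plain Python: vectors are grouped by nearest centroid, and a query scans only the closest groups. The centroids are fixed by hand here as an assumption; in practice they would be learned, for example with k-means. All function names are hypothetical.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def squared_l2(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_buckets(vectors: List[Vector], centroids: List[Vector]) -> Dict[int, List[int]]:
    """Assign each vector id to the bucket of its nearest centroid."""
    buckets: Dict[int, List[int]] = {c: [] for c in range(len(centroids))}
    for vec_id, vec in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: squared_l2(vec, centroids[c]))
        buckets[nearest].append(vec_id)
    return buckets


def search(query: Vector, vectors: List[Vector], centroids: List[Vector],
           buckets: Dict[int, List[int]], k: int = 1, nprobe: int = 1) -> List[int]:
    """Scan only the nprobe buckets whose centroids are closest to the query."""
    probe = sorted(range(len(centroids)), key=lambda c: squared_l2(query, centroids[c]))[:nprobe]
    candidates = [vec_id for c in probe for vec_id in buckets[c]]
    candidates.sort(key=lambda i: squared_l2(query, vectors[i]))
    return candidates[:k]


vectors = [(0.1, 0.2), (0.3, -0.1), (9.8, 10.1), (10.2, 9.9)]
centroids = [(0.0, 0.0), (10.0, 10.0)]  # hand-picked for the example
buckets = build_buckets(vectors, centroids)
print(search((9.9, 10.0), vectors, centroids, buckets, k=1, nprobe=1))
```

With `nprobe=1` only half of the vectors are compared against the query. Raising `nprobe` scans more buckets, which costs time but reduces the chance of missing a true nearest neighbor that sits in a neighboring bucket.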

Latency

The two-step process of retrieval and generation can introduce latency, which may be problematic for real-time applications. Caching results for repeated queries, using a faster index, and using a smaller or quantized generator can all help reduce latency.
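One inexpensive mitigation is to cache retrieval results for repeated queries and to time each stage so the slow one is visible. The sketch below uses only the standard library; `slow_retrieve` is a hypothetical stand-in for a real retriever, and the 10 ms sleep simulates its cost.

```python
import time
from functools import lru_cache
from typing import Tuple

CALLS = {"retrieve": 0}  # counts how often the expensive path actually runs


def slow_retrieve(query: str) -> Tuple[str, ...]:
    """Stand-in for an expensive retrieval call (hypothetical)."""
    CALLS["retrieve"] += 1
    time.sleep(0.01)
    return ("The Eiffel Tower is located in Paris.",)


@lru_cache(maxsize=1024)
def cached_retrieve(normalized_query: str) -> Tuple[str, ...]:
    """Memoize results; tuples are returned because cached values should be immutable."""
    return slow_retrieve(normalized_query)


def retrieve(query: str) -> Tuple[str, ...]:
    # Normalize case and whitespace so trivially different spellings share a cache entry.
    return cached_retrieve(" ".join(query.lower().split()))


def timed(fn, *args):
    """Return the function result together with the elapsed wall-clock seconds."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


_, cold = timed(retrieve, "Where is the Eiffel Tower located?")
_, warm = timed(retrieve, "where is the  Eiffel Tower located?")
print(f"cold={cold * 1000:.1f} ms, warm={warm * 1000:.1f} ms")
```

An in-process cache like this is lost on restart and is not shared between workers, so a deployment with several processes would more likely use an external cache. The cache also has to be invalidated whenever the corpus changes.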

Accuracy

The accuracy of the generated responses depends on the quality of the retrieved documents and the generative model. Fine-tuning the models on domain-specific data can improve accuracy.
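Since answer quality is bounded by what the retriever returns, it helps to measure retrieval separately before tuning the generator. The sketch below computes a hit rate at k: the fraction of queries for which at least one known-relevant document appears in the top k results. The ranked lists and relevance labels are made-up illustration data, not measurements.

```python
from typing import List, Set


def hit_rate_at_k(retrieved: List[List[int]], relevant: List[Set[int]], k: int) -> float:
    """Fraction of queries with at least one relevant document in the top k."""
    hits = sum(1 for ranked, gold in zip(retrieved, relevant) if gold & set(ranked[:k]))
    return hits / len(retrieved)


# Illustration data: ranked document ids per query, and the ids labeled relevant.
retrieved = [[0, 2], [1, 0], [2, 1]]
relevant = [{0}, {0}, {1}]

print(hit_rate_at_k(retrieved, relevant, k=1))
print(hit_rate_at_k(retrieved, relevant, k=2))
```

A low hit rate at the k used in production points to the retriever or the index as the bottleneck, while a high hit rate paired with poor answers points to the generator or the prompt.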

Interpretability

Understanding how the model arrives at a particular response can be challenging. Developing techniques to interpret and explain the model’s decisions is an ongoing area of research.
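A practical first step toward interpretability is to return the retrieved passages alongside the answer, so a reader can check what the response was conditioned on. The sketch below assumes a hypothetical `SourcedAnswer` record and a stand-in generator; the score value is illustrative only.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# (corpus index, passage text, retrieval score); a hypothetical record shape.
ScoredPassage = Tuple[int, str, float]


@dataclass
class SourcedAnswer:
    answer: str
    sources: List[ScoredPassage]


def answer_with_sources(
    query: str,
    scored_passages: List[ScoredPassage],
    generate: Callable[[str, List[str]], str],
) -> SourcedAnswer:
    """Generate an answer and keep the evidence it was conditioned on."""
    texts = [text for _, text, _ in scored_passages]
    return SourcedAnswer(answer=generate(query, texts), sources=scored_passages)


def fake_generate(query: str, passages: List[str]) -> str:
    """Stand-in for a real generator so the sketch runs offline."""
    return "Paris"


result = answer_with_sources(
    "Where is the Eiffel Tower located?",
    [(0, "The Eiffel Tower is located in Paris.", 0.91)],  # illustrative score
    fake_generate,
)
for idx, text, score in result.sources:
    print(f"[doc {idx}, score {score:.2f}] {text}")
```

This shows which passages were supplied to the generator, not which of them it actually relied on. Attributing specific parts of an answer to specific passages remains a harder problem.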

Conclusion

Retrieval-Augmented Generation represents a significant advancement in the field of natural language processing, combining the strengths of retrieval-based and generation-based models to produce more accurate and contextually relevant responses. By leveraging pre-trained models and efficient retrieval techniques, backend software engineers can implement RAG to enhance various applications, from question answering to content generation.

As the field continues to evolve, addressing challenges related to scalability, latency, accuracy, and interpretability will be crucial to realizing the potential of RAG. Engineers who keep up with developments and best practices in these areas will be better placed to build more intelligent and responsive systems.