It’s a scenario many AI developers have encountered. You’ve meticulously built a Retrieval-Augmented Generation (RAG) system, fed it clean data, and deployed it with high hopes. Yet when a user asks a complex question, the system returns an answer that is technically accurate but contextually nonsensical. It retrieves the right document but fails to synthesize the information logically, like a research assistant who pulls the right books from the shelf but has no idea how they connect. This gap between retrieval and genuine reasoning is a persistent bottleneck for enterprise AI. We expect AI not just to find information but to understand it, connect disparate dots, and deliver insights that go beyond a glorified keyword search.

A standard RAG pipeline is powerful, but it often has a critical flaw: it retrieves without reasoning. It fetches passages that match a query but lacks a framework for reasoning about them. The result is shallow answers to complex queries that require synthesizing information from multiple sources or interpreting nuanced policy documents. The challenge isn’t just finding the right data; it’s teaching the Large Language Model (LLM) how to think with that data. Traditionally, this has meant costly fine-tuning or extensive trial-and-error prompt engineering. But what if there were a more efficient way to elicit sophisticated reasoning? Researchers from Apple recently published a paper, “Eliciting In-context Retrieval and Reasoning for Long-Context Language Models,” that offers a fresh angle on this problem. This article breaks down the technique, explores the principles that make it effective, and provides a conceptual guide to applying these insights so you can build AI systems that don’t just retrieve information but reason with it.

The Hidden Bottleneck in Standard RAG Systems

The conventional “retrieve-then-generate” architecture is the bedrock of modern RAG. In this model, a user’s query is converted into a vector embedding and used to search a database of pre-processed text chunks. The most relevant chunks are retrieved and prepended to the original query as context for an LLM, which then generates a final answer. While this has proven effective for straightforward Q&A, it begins to falter when faced with complexity.
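To make the baseline concrete, here is a minimal Python sketch of retrieve-then-generate. It assumes a toy bag-of-words vector as a stand-in for a real embedding model and stops at building the prompt; the function names (`embed`, `cosine`, `retrieve`, `build_baseline_prompt`) are illustrative, not taken from any particular library.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_baseline_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Retrieve-then-generate: prepend the top-k chunks to the query as context."""
    context = "\n\n".join(retrieve(query, chunks, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


chunks = [
    "Business class is allowed on flights longer than 6 hours.",
    "Expense reports are due within 30 days.",
    "Client visits require manager approval.",
]
print(build_baseline_prompt("Can I fly business class?", chunks, k=1))
```

Note that nothing in this prompt tells the model how to combine the chunks; it only receives the text.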

The core limitation is that retrieval is separate from reasoning. The system retrieves text but has no built-in strategy for using it to construct a logical argument or a multi-step answer. This shows up in several ways: answers that simply regurgitate a retrieved passage without addressing the user’s actual intent, a failure to connect information across different documents, or an inability to handle queries that require deductive reasoning. This disconnect is a major hurdle for enterprises that need AI to work over sophisticated internal knowledge bases, from intricate financial regulations to complex engineering documentation. With some market forecasts projecting the global RAG market to exceed $40 billion by 2035, solving this reasoning bottleneck is more than an academic exercise; it’s a critical step toward unlocking significant business value.

A Paradigm Shift: Apple’s In-Context Retrieval and Reasoning

Apple’s research introduces a simple but effective modification to the RAG process. Instead of providing the LLM only with retrieved data, the method also supplies a small number of high-quality, in-context demonstrations. These demonstrations show the model how to reason through a similar problem, effectively teaching it the desired reasoning process at inference time.

What is “In-Context” Learning for RAG?

Think of it as giving the LLM an open-book exam with a few sample questions already answered by an expert. These answered examples, or “demonstrations,” aren’t simple question-answer pairs. They walk the model through the chain of thought required to get from a query and a set of documents to a well-reasoned conclusion. Each demonstration includes the initial question, the relevant text snippets, the reasoning steps a human would take, and the final synthesized answer. When this is included in the prompt alongside the user’s actual query and its newly retrieved documents, the model can imitate the reasoning pattern without any change to its weights.

The Power of High-Quality Demonstrations

A key finding from Apple’s research is that you don’t need a massive library of these examples: a small, curated set of high-quality demonstrations is enough to guide the model’s behavior. This is far cheaper than traditional fine-tuning, which requires labeled training data and significant compute. The focus shifts from quantity to quality, letting developers steer model behavior with just a handful of well-crafted examples.

The Results Speak for Themselves

The efficacy of this method is not just theoretical. Apple’s technique reportedly delivered a 6.2% absolute improvement on the Natural Questions (NQ) benchmark, a widely used open-domain question-answering dataset built from real Google search queries. A gain of this size suggests the method can meaningfully boost an LLM’s reasoning and question-answering performance at relatively low cost.
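“Absolute” here means the difference in accuracy measured in percentage points, not a relative change. With a purely hypothetical baseline of 50% accuracy (the actual baseline is not given here), a 6.2-point absolute gain would correspond to a 12.4% relative gain:

```latex
\begin{aligned}
\Delta_{\text{abs}} &= \mathrm{Acc}_{\text{new}} - \mathrm{Acc}_{\text{base}} = 56.2 - 50.0 = 6.2 \text{ points} \\
\Delta_{\text{rel}} &= \frac{\mathrm{Acc}_{\text{new}} - \mathrm{Acc}_{\text{base}}}{\mathrm{Acc}_{\text{base}}} = \frac{6.2}{50.0} = 12.4\%
\end{aligned}
```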

Conceptual Walkthrough: Implementing an Apple-Inspired RAG System

Adopting this technique doesn’t require rebuilding your RAG system from scratch. Instead, you augment your existing pipeline with a reasoning layer. Here’s a conceptual guide to implementing an Apple-inspired reasoning framework.

Step 1: Curate Your “Golden” Demonstrations

The success of this entire process hinges on the quality of your demonstrations. Identify common, complex query patterns your users bring to the system. For each pattern, create a “golden” record that includes:

* A Representative Query: A real-world example of the question.

* Ideal Context: The exact text passages needed to answer the query.

* Chain of Thought: A step-by-step breakdown of the reasoning process. (e.g., “First, identify the user’s project role from Document A. Second, find the corresponding travel policy in Document B. Third, cross-reference the client visit clause in Document C. Finally, synthesize these points into a clear approval process.”)

* The Final Answer: A carefully written response that synthesizes the context and reasoning into a clear conclusion.

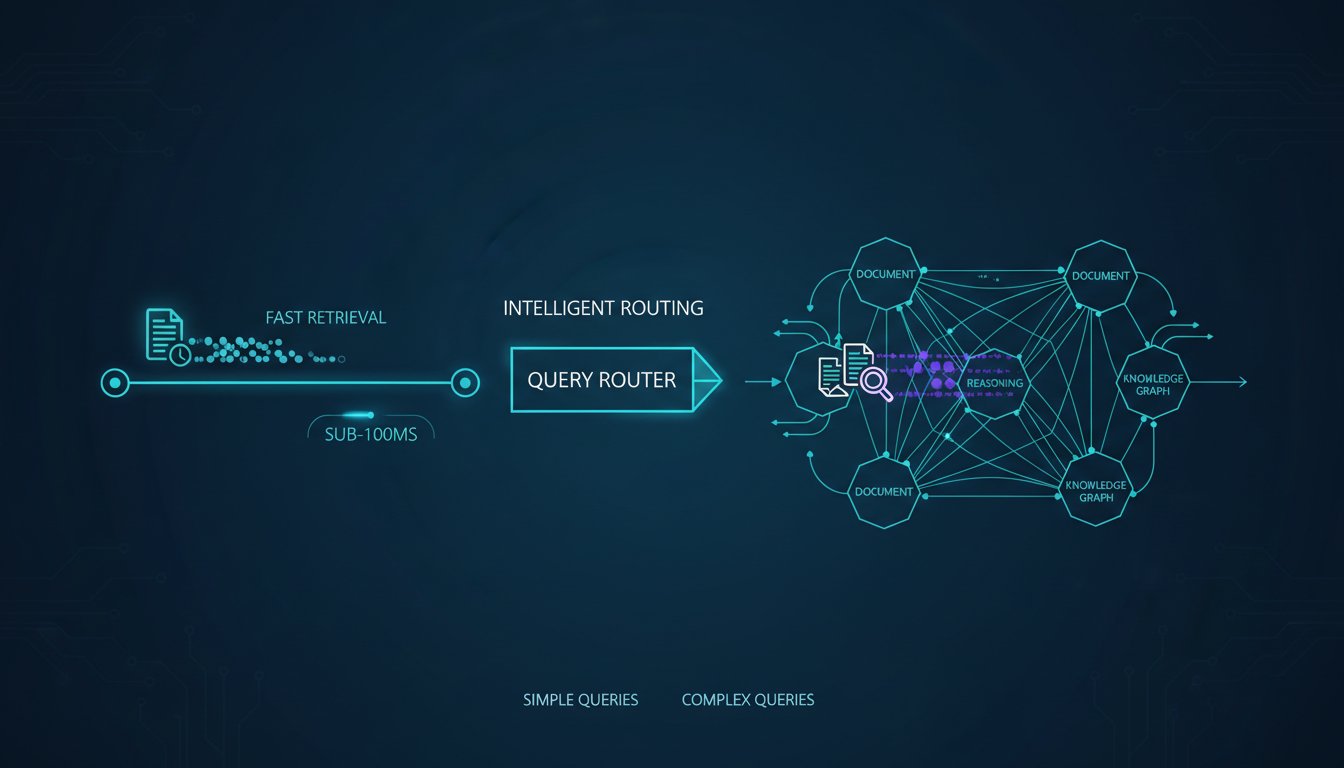
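One way to store a golden record is as a small immutable data structure that can render itself into prompt text. The sketch below is a minimal Python version; the class name `GoldenDemo`, its field names, the prompt labels, and the policy text in the usage example are all hypothetical, made up for illustration rather than a format prescribed by Apple’s paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GoldenDemo:
    """A curated demonstration (hypothetical schema for illustration)."""

    query: str                  # representative real-world question
    context: tuple[str, ...]    # exact passages needed to answer it
    reasoning: tuple[str, ...]  # chain-of-thought steps, in order
    answer: str                 # final synthesized response

    def render(self) -> str:
        """Format as prompt text: question, numbered passages, numbered steps, answer."""
        passages = "\n".join(f"[{i}] {p}" for i, p in enumerate(self.context, start=1))
        steps = "\n".join(f"{i}. {s}" for i, s in enumerate(self.reasoning, start=1))
        return (
            f"Example question: {self.query}\n"
            f"Example context:\n{passages}\n"
            f"Reasoning:\n{steps}\n"
            f"Answer: {self.answer}"
        )


# Usage with made-up policy text, loosely modeled on the travel example above.
travel_demo = GoldenDemo(
    query="Does my client trip need extra approval?",
    context=(
        "Doc A: Alice is a senior consultant on Project X.",
        "Doc B: Senior consultants may book economy for trips under 6 hours.",
        "Doc C: Client visits require sign-off from the engagement lead.",
    ),
    reasoning=(
        "Identify the user's project role from Doc A.",
        "Find the matching travel policy in Doc B.",
        "Cross-reference the client visit clause in Doc C.",
        "Synthesize these points into an approval process.",
    ),
    answer="Book economy and get sign-off from your engagement lead before travel.",
)
print(travel_demo.render())
```

Freezing the dataclass keeps curated records from being mutated accidentally once they are loaded into a shared library.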

Step 2: The Two-Pass Retrieval Process

Your retrieval process now needs to do two things. First, it retrieves the documents relevant to the user’s current query as it normally would. Second, it performs another retrieval against your curated demonstration library to find the demonstration that most closely matches the user’s query pattern. This ensures the model gets the most relevant example for the specific reasoning task at hand.
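A minimal sketch of the two passes follows. It assumes a toy bag-of-words similarity as a stand-in for a real embedding model, and a demonstration library stored as a dict that maps each demo’s representative query to its rendered text. The names `two_pass_retrieve`, `doc_chunks`, and `demo_library` are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; replace with a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, candidates: list[str], k: int) -> list[str]:
    """Rank candidate strings by similarity to the query and keep the best k."""
    q = embed(query)
    return sorted(candidates, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def two_pass_retrieve(query: str, doc_chunks: list[str],
                      demo_library: dict[str, str], k_docs: int = 3):
    """Pass 1: documents for the user's query.
    Pass 2: the demonstration whose representative query best matches it."""
    docs = top_k(query, doc_chunks, k_docs)
    best_key = top_k(query, list(demo_library), 1)
    demo = demo_library[best_key[0]] if best_key else None
    return docs, demo


docs, demo = two_pass_retrieve(
    "What approval do I need to travel to a client in London?",
    ["Client travel requires director approval.", "The cafeteria opens at 8am."],
    {"What is the approval process for client travel?": "DEMO-TRAVEL",
     "How do I reset my VPN password?": "DEMO-IT"},
    k_docs=1,
)
print(docs, demo)
```

Matching on each demo’s representative query, rather than its full rendered text, keeps the second pass focused on the question pattern instead of the example’s document content.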

Step 3: Crafting the Final Augmented Prompt

Finally, you construct a new, augmented prompt to send to the LLM. This prompt is a fusion of the original RAG context and the new reasoning guidance. It should be structured to include:

1. The selected high-quality demonstration (Query, Context, Reasoning, Answer).

2. The current user’s query.

3. The documents retrieved for the user’s query.

By providing both the example and the problem, you give the model everything it needs to replicate the desired reasoning pattern and generate a superior, well-synthesized answer.
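The prompt assembly itself can be a simple template. The sketch below assumes the demonstration has already been rendered to text and the retrieved documents are plain strings; the instruction wording and the name `build_augmented_prompt` are hypothetical and would need tuning for your model.

```python
def build_augmented_prompt(demo_text: str, query: str, docs: list[str]) -> str:
    """Assemble the prompt in three parts, in this order:
    1) the selected demonstration, 2) the user's query, 3) the retrieved documents."""
    doc_block = "\n".join(f"[{i}] {d}" for i, d in enumerate(docs, start=1))
    return (
        "Here is a worked example of reasoning over documents:\n"
        f"{demo_text}\n\n"
        "Answer the next question by following the same reasoning pattern.\n"
        f"Question: {query}\n"
        f"Documents:\n{doc_block}\n"
        # Ending on "Reasoning:" nudges the model to write its steps before the answer.
        "Reasoning:"
    )


prompt = build_augmented_prompt(
    demo_text="Example question: Can I expense a taxi?\nReasoning:\n1. Check the expense policy.\nAnswer: Yes, with a receipt.",
    query="Can I fly business class to our London client next month?",
    docs=["Business class is allowed on flights over 6 hours.",
          "Trips under 5 days need manager approval."],
)
print(prompt)
```

Numbering the documents gives the model stable labels to cite in its reasoning, mirroring how the demonstration refers to its own passages.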

Practical Application: Building a “Reasoning” Slack Bot with Voice Responses

Let’s translate this theory into a tangible enterprise use case. Many companies use Slack as a central communication hub, but its search struggles with complex questions about internal knowledge. A standard RAG bot integrated into Slack might find a document about travel policy yet still fail on a query like, “Can I fly business class to our London client next month, and what’s the approval workflow since the trip is under 5 days?”

By implementing the Apple-inspired technique, a Slack bot could use a demonstration for “complex policy questions” to guide its reasoning. Following that example, it would first extract the key entities (business class, London, client, under 5 days), then draw on the relevant sections of the Travel Policy and Expense Approval documents, synthesize the rules, and deliver a precise, multi-part answer directly in the channel.

To elevate this experience from a simple chatbot to a true digital assistant, you can add a voice component. Alongside the bot’s text response, an audio version lets employees listen to the answer on the go. This is valuable for remote teams and employees on the move, and it improves accessibility. Imagine your team members asking complex questions in Slack and receiving a well-reasoned answer as both text and audio, all within seconds.

As you build the next generation of reasoning AI, consider how the user experience can match the sophistication of the backend. Lifelike voice responses are one part of this human-centric approach, making powerful AI tools more accessible and intuitive.

The future of RAG is not just about finding data, but about understanding it. By embracing techniques like in-context reasoning, we can close the gap between retrieval and genuine comprehension and build AI that gives users real insight.

To add powerful, human-like voice capabilities to your custom applications and bots, try ElevenLabs for free now (http://elevenlabs.io/?from=partnerjohnson8503).