By one widely cited estimate, knowledge workers spend about 2.5 hours per day searching for information across scattered documents, databases, and knowledge repositories. Despite millions invested in enterprise search solutions, they still struggle to find relevant, contextual answers when they need them most. Traditional search returns documents; what teams really need are synthesized insights that connect information across multiple sources.

Google’s NotebookLM represents a fundamental shift in how we approach enterprise knowledge management. Where conventional RAG systems generate responses from isolated retrieved chunks, NotebookLM grounds its answers in a user’s uploaded sources, cites the passages it draws on, and synthesizes information across the whole collection. This approach changes how organizations can build knowledge systems that don’t just find information but understand it.

In this comprehensive guide, we’ll walk through building production-ready contextual RAG systems using NotebookLM’s architecture principles. You’ll learn how to implement document synthesis pipelines, create contextual embedding strategies, and deploy systems that provide nuanced, multi-document insights. By the end, you’ll have a complete framework for building RAG systems that truly understand your enterprise knowledge landscape.

Understanding NotebookLM’s Contextual Synthesis Architecture

NotebookLM’s breakthrough lies in its approach to document understanding. Traditional RAG systems treat documents as collections of independent chunks, leading to fragmented responses that miss critical connections between related concepts. A NotebookLM-style system instead relies on what this guide calls “contextual synthesis”: a process that maintains document relationships and cross-references throughout the retrieval and generation pipeline.

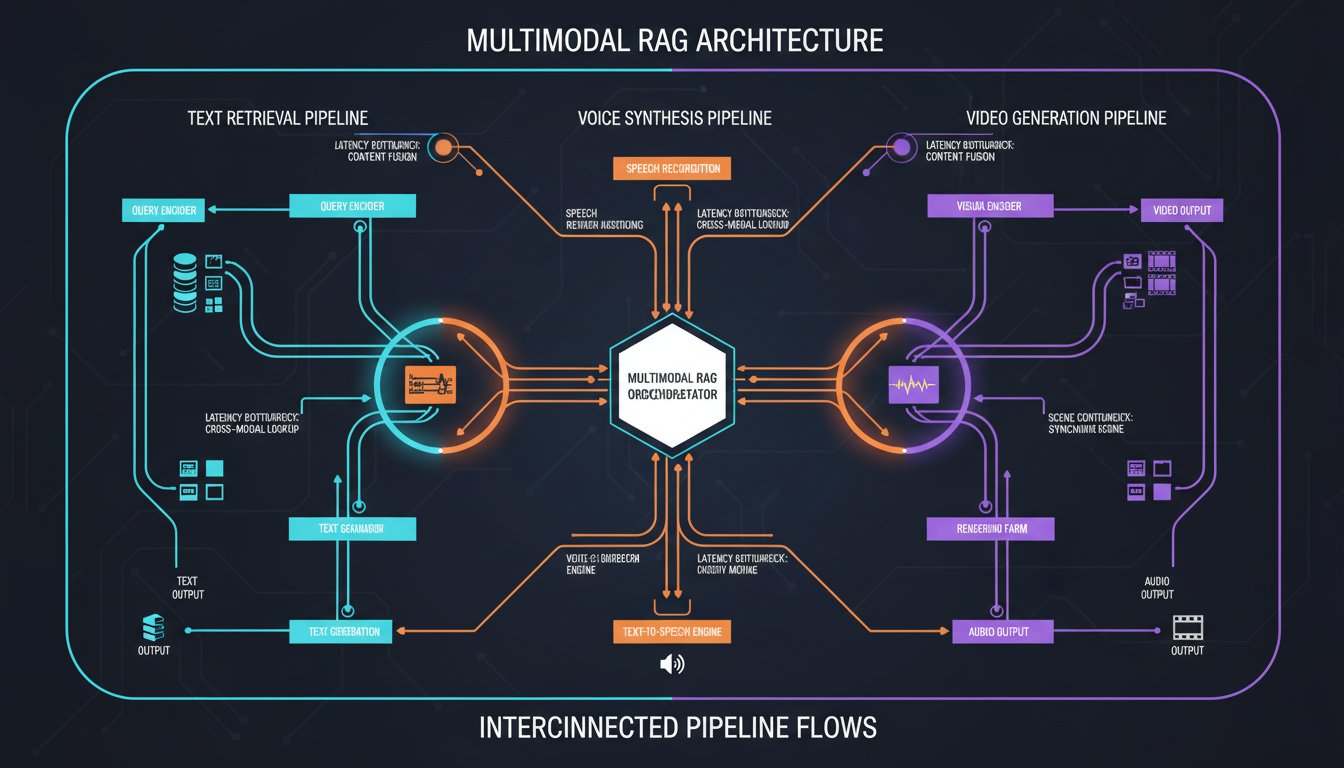

A NotebookLM-inspired architecture can be organized into three interconnected layers: the Document Graph Layer, which maps relationships between documents and concepts; the Contextual Embedding Layer, which creates embeddings that preserve inter-document relationships; and the Synthesis Generation Layer, which produces responses that draw from multiple sources while maintaining coherent narrative flow.

Document Graph Construction

Contextual synthesis starts with building comprehensive document graphs. Unlike traditional approaches that index documents independently, NotebookLM-inspired systems create explicit relationship mappings between documents, sections, and concepts.

Start by implementing entity extraction across your document corpus. Use named entity recognition to identify people, organizations, concepts, and topics that appear across multiple documents. Create bidirectional relationship mappings—when Document A references Concept X, and Document B also discusses Concept X, establish explicit connections between Documents A and B through their shared conceptual overlap.
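A minimal sketch of the shared-concept linking described above, in plain Python. To stay self-contained it assumes entities have already been extracted (for example from spaCy's `doc.ents`); the `doc_entities` input and the `build_document_graph` helper are hypothetical names used only for illustration.

```python
from collections import defaultdict
from itertools import combinations


def build_document_graph(doc_entities: dict[str, set[str]]) -> dict[str, dict[str, set[str]]]:
    """Link every pair of documents that share at least one entity.

    doc_entities maps a document ID to the entities extracted from it
    (assumed to come from an upstream NER step). In the result,
    graph[a][b] is the set of entities shared by a and b, and graph[b][a]
    holds the same set, so every connection is bidirectional.
    """
    # Invert the mapping: which documents mention each entity?
    entity_to_docs: dict[str, set[str]] = defaultdict(set)
    for doc_id, entities in doc_entities.items():
        for entity in entities:
            entity_to_docs[entity].add(doc_id)

    graph = defaultdict(lambda: defaultdict(set))
    for entity, docs in entity_to_docs.items():
        # Every pair of documents mentioning this entity gets an edge.
        for a, b in combinations(sorted(docs), 2):
            graph[a][b].add(entity)
            graph[b][a].add(entity)
    # Convert to plain dicts so lookups of unknown IDs don't create entries.
    return {doc: dict(neighbors) for doc, neighbors in graph.items()}


docs = {
    "doc_a": {"Concept X", "Acme Corp"},
    "doc_b": {"Concept X"},
    "doc_c": {"Project Y"},
}
graph = build_document_graph(docs)
print(graph["doc_a"])  # {'doc_b': {'Concept X'}}
```

Documents that share nothing (here `doc_c`) simply have no entry; edge weights such as the number of shared entities can be read off as `len(graph[a][b])`.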

Implement cross-reference detection to identify when documents explicitly reference each other. This includes citation analysis, hyperlink mapping, and semantic similarity detection for concepts discussed in different documents using different terminology. Modern NLP libraries like spaCy and Hugging Face Transformers provide robust entity extraction capabilities that can handle technical documentation, research papers, and business documents effectively.
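The explicit half of cross-reference detection can be sketched with regular expressions: markdown-style links whose target is a known document ID, and verbatim mentions of another document's title as a crude stand-in for citation analysis. This is an illustrative assumption, not a complete method; `find_cross_references` is a hypothetical helper, and matching concepts described in different terminology would additionally need embedding similarity, which is not shown.

```python
import re


def find_cross_references(docs: dict[str, str], titles: dict[str, str]) -> dict[str, set[str]]:
    """Detect explicit references between documents.

    docs maps document ID -> full text; titles maps document ID -> title.
    A reference from A to B is recorded when A contains a markdown link
    whose target is B's ID, or mentions B's title (case-insensitive).
    """
    link_pattern = re.compile(r"\[[^\]]*\]\(([^)\s]+)\)")
    refs: dict[str, set[str]] = {doc_id: set() for doc_id in docs}
    for doc_id, text in docs.items():
        # Hyperlink mapping: link targets that match a known document ID.
        for target in link_pattern.findall(text):
            if target in docs and target != doc_id:
                refs[doc_id].add(target)
        # Title mentions: a simple proxy for citation analysis.
        lowered = text.lower()
        for other_id, title in titles.items():
            if other_id != doc_id and title.lower() in lowered:
                refs[doc_id].add(other_id)
    return refs


docs = {
    "doc_a": "Background is in the Q3 Security Review; details [here](doc_c).",
    "doc_b": "Q3 Security Review: findings and remediation.",
    "doc_c": "Incident timeline.",
}
titles = {"doc_a": "Platform Overview", "doc_b": "Q3 Security Review", "doc_c": "Incident Timeline"}
refs = find_cross_references(docs, titles)
print(refs)
```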

Contextual Embedding Strategies

Traditional RAG embeddings focus on semantic similarity within individual chunks. Contextual embeddings expand this approach by incorporating relationship information directly into the vector representations. This ensures that retrieved chunks carry information about their broader document context and relationships to other relevant materials.
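One lightweight way to get document context into the vectors, without training anything, is to embed a contextualized version of each chunk instead of the bare text. This prefixing technique is a swapped-in illustration, not necessarily what NotebookLM does; the `Chunk` type and `contextualize` helper are hypothetical, and the embedding-model call itself is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    doc_title: str
    section: str
    text: str
    related_titles: list[str] = field(default_factory=list)


def contextualize(chunk: Chunk, max_related: int = 3) -> str:
    """Build the string that gets embedded for a chunk.

    The chunk text is prefixed with its document title, its section
    heading, and the titles of up to `max_related` related documents
    taken from the document graph.
    """
    header = f"Document: {chunk.doc_title}\nSection: {chunk.section}"
    if chunk.related_titles:
        related = ", ".join(chunk.related_titles[:max_related])
        header += f"\nRelated documents: {related}"
    return f"{header}\n\n{chunk.text}"


chunk = Chunk(
    doc_title="Q3 Security Review",
    section="Remediation",
    text="Rotate all service credentials within 30 days.",
    related_titles=["Incident Timeline", "Access Policy"],
)
# This string, rather than chunk.text alone, would be sent to the embedding model.
print(contextualize(chunk))
```

Keeping the original chunk text separately lets the generator quote the source verbatim while retrieval benefits from the added context.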

Implement hierarchical embedding structures that capture document-level, section-level, and chunk-level semantics simultaneously. Use techniques like hierarchical attention mechanisms to weight embeddings based on their position within document hierarchies and their relationship strength to other documents in the corpus.
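A simplified sketch of combining the three levels: normalize the chunk-, section-, and document-level vectors, then blend them with softmax weights. The fixed relevance scores stand in for what a hierarchical attention layer would learn, so treat this as an illustration of the weighting idea under that assumption; `hierarchical_embedding` is a hypothetical helper.

```python
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else v


def hierarchical_embedding(
    chunk_vec: list[float],
    section_vec: list[float],
    doc_vec: list[float],
    scores: tuple[float, float, float] = (2.0, 1.0, 0.5),
) -> list[float]:
    """Blend chunk-, section-, and document-level vectors into one.

    `scores` are per-level relevance logits; softmax turns them into
    weights that sum to 1. Fixed defaults stand in for learned scores.
    """
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    levels = [normalize(chunk_vec), normalize(section_vec), normalize(doc_vec)]
    combined = [
        sum(w * level[i] for w, level in zip(weights, levels))
        for i in range(len(chunk_vec))
    ]
    return normalize(combined)


vec = hierarchical_embedding([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0])
print([round(x, 3) for x in vec])
```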

Consider implementing graph neural networks (GNNs) to create embeddings that explicitly incorporate document relationship information. Libraries like PyTorch Geometric provide tools for building GNN-based embedding systems that can process document graphs and generate contextually-aware vector representations.
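Graph convolution layers in PyTorch Geometric (for example `GCNConv`) learn weight matrices on top of a neighbor-aggregation step. The sketch below shows only that aggregation step, in plain Python with no learned parameters, so it is a simplified illustration of how graph structure flows into embeddings rather than a trained GNN; `propagate` and `self_weight` are hypothetical names.

```python
def propagate(
    features: dict[str, list[float]],
    edges: dict[str, set[str]],
    self_weight: float = 0.5,
) -> dict[str, list[float]]:
    """One round of mean-neighbor aggregation over the document graph.

    Each node's new vector mixes its own vector (weight `self_weight`)
    with the mean of its neighbors' vectors. Nodes without neighbors keep
    their original vector. Repeating the round spreads information
    across multi-hop relationships.
    """
    updated = {}
    for node, vec in features.items():
        neighbors = [features[n] for n in edges.get(node, ()) if n in features]
        if not neighbors:
            updated[node] = list(vec)
            continue
        mean = [sum(col) / len(neighbors) for col in zip(*neighbors)]
        updated[node] = [self_weight * v + (1 - self_weight) * m for v, m in zip(vec, mean)]
    return updated


features = {"doc_a": [1.0, 0.0], "doc_b": [0.0, 1.0], "doc_c": [1.0, 1.0]}
edges = {"doc_a": {"doc_b"}, "doc_b": {"doc_a"}}
propagated = propagate(features, edges)
print(propagated)  # {'doc_a': [0.5, 0.5], 'doc_b': [0.5, 0.5], 'doc_c': [1.0, 1.0]}
```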

Building Multi-Document Synthesis Pipelines

The synthesis pipeline transforms retrieved chunks into coherent, multi-perspective responses that acknowledge different viewpoints and information sources. This requires sophisticated orchestration of retrieval, analysis, and generation components.

Advanced Retrieval Orchestration

Implement multi-stage retrieval that goes beyond simple semantic similarity. Start with broad conceptual retrieval to identify relevant document clusters, then perform focused chunk retrieval within those clusters. This approach ensures comprehensive coverage while maintaining computational efficiency.
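A minimal coarse-to-fine sketch, assuming each document (or cluster) has a summary vector and each chunk records its parent document. Stage 1 ranks documents; stage 2 ranks only the chunks inside the surviving documents. The function names and toy vectors are hypothetical; a real system would query a vector index instead of scanning lists.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def two_stage_retrieve(query_vec, doc_vecs, chunks, top_docs=2, top_chunks=3):
    """Coarse-to-fine retrieval.

    Stage 1 keeps the `top_docs` documents whose summary vectors best
    match the query. Stage 2 ranks only chunks from those documents.
    `chunks` is a list of (chunk_id, doc_id, vector) tuples.
    """
    ranked_docs = sorted(doc_vecs, key=lambda d: cosine(query_vec, doc_vecs[d]), reverse=True)
    keep = set(ranked_docs[:top_docs])
    candidates = [c for c in chunks if c[1] in keep]
    candidates.sort(key=lambda c: cosine(query_vec, c[2]), reverse=True)
    return [chunk_id for chunk_id, _, _ in candidates[:top_chunks]]


doc_vecs = {"hr": [1.0, 0.0], "eng": [0.0, 1.0], "legal": [0.7, 0.7]}
chunks = [
    ("hr-1", "hr", [1.0, 0.0]),
    ("hr-2", "hr", [0.5, 0.5]),
    ("eng-1", "eng", [1.0, 0.05]),  # close to the query, but its document is dropped in stage 1
    ("legal-1", "legal", [0.9, 0.3]),
]
result = two_stage_retrieve([1.0, 0.1], doc_vecs, chunks, top_docs=2, top_chunks=2)
print(result)  # ['hr-1', 'legal-1']
```

The `eng-1` chunk shows the trade-off: stage 1 saves work by pruning whole documents, but a strong chunk inside a weakly matching document can be missed, so `top_docs` should be tuned against recall.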

Use query expansion techniques to identify related concepts that might not appear in the original query but are relevant to providing complete answers. Implement semantic query expansion using word embeddings and knowledge graphs to capture implied information needs.
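A small sketch of knowledge-graph-based expansion, assuming a hypothetical `related` map from terms to related concepts (which could be curated or derived from nearest neighbors in an embedding space). Original terms stay first so they can be weighted above the expansions.

```python
def expand_query(query: str, related: dict[str, list[str]], max_per_term: int = 2) -> list[str]:
    """Return the query terms plus related concepts, de-duplicated.

    `related` maps a lowercase term to related concepts. At most
    `max_per_term` expansions are added per original term.
    """
    terms = query.lower().split()
    expanded = list(terms)
    for term in terms:
        for concept in related.get(term, [])[:max_per_term]:
            if concept not in expanded:
                expanded.append(concept)
    return expanded


related = {
    "outage": ["incident", "downtime", "sev1"],
    "vpn": ["remote access"],
}
print(expand_query("VPN outage postmortem", related))
# ['vpn', 'outage', 'postmortem', 'remote access', 'incident', 'downtime']
```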

Develop conflict detection mechanisms that identify when retrieved chunks contain contradictory information. Flag these conflicts explicitly in responses and provide users with transparent access to different perspectives rather than attempting to reconcile irreconcilable differences artificially.
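A deliberately simple sketch of conflict detection, assuming an upstream step has already extracted claims as `(source, subject, attribute, value)` tuples. It flags any subject/attribute pair with more than one distinct value and keeps the sources behind each value so both sides can be shown. Exact value matching is a simplification; production systems often use a natural language inference model to judge whether two passages contradict.

```python
from collections import defaultdict


def find_conflicts(claims):
    """Report (subject, attribute) pairs whose sources disagree.

    Returns {(subject, attribute): {value: [sorted source IDs]}} for
    every pair with more than one distinct value.
    """
    grouped = defaultdict(lambda: defaultdict(set))
    for source, subject, attribute, value in claims:
        grouped[(subject, attribute)][value].add(source)
    return {
        key: {value: sorted(sources) for value, sources in values.items()}
        for key, values in grouped.items()
        if len(values) > 1
    }


claims = [
    ("policy_2022.pdf", "laptop refresh", "cycle", "3 years"),
    ("policy_2024.pdf", "laptop refresh", "cycle", "4 years"),
    ("it_faq.md", "laptop refresh", "cycle", "4 years"),
    ("it_faq.md", "vpn", "client", "WireGuard"),
]
conflicts = find_conflicts(claims)
print(conflicts)
```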

Synthesis Generation Framework

Build generation pipelines that create responses acknowledging multiple sources and perspectives. Use structured prompting techniques that instruct language models to synthesize information while maintaining source attribution and identifying areas of agreement or disagreement between sources.
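One possible shape for such a structured prompt, sketched as a hypothetical `build_synthesis_prompt` helper: sources are numbered so the model can cite them, and the instructions ask for attribution plus explicit agreement and disagreement. The wording is an illustrative assumption to be tuned per model.

```python
def build_synthesis_prompt(question: str, sources: list[tuple[str, str]]) -> str:
    """Assemble a prompt that asks for an attributed, multi-source answer.

    `sources` is a list of (title, passage) pairs; each gets a bracketed
    number so the model can cite it as [1], [2], and so on.
    """
    numbered = "\n\n".join(
        f"[{i}] {title}\n{passage}" for i, (title, passage) in enumerate(sources, start=1)
    )
    instructions = (
        "Answer the question using only the sources below.\n"
        "- Cite every claim with the bracketed number of its source, e.g. [2].\n"
        "- State where the sources agree and where they disagree.\n"
        "- If the sources do not answer the question, say so."
    )
    return f"{instructions}\n\nSources:\n{numbered}\n\nQuestion: {question}\nAnswer:"


prompt = build_synthesis_prompt(
    "How often are laptops refreshed?",
    [("IT Policy 2024", "Laptops are refreshed every four years."),
     ("IT Policy 2022", "Laptops are refreshed every three years.")],
)
print(prompt)
```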

Implement citation management systems that track which parts of generated responses derive from which source documents. This enables both transparency and quality control, allowing users to verify claims and explore primary sources when needed.
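If the generator cites sources as bracketed numbers, citation tracking can start as a simple parse of its output. This sketch assumes that citation format and naive sentence splitting on terminal punctuation; `map_citations` is a hypothetical helper. Sentences with no citation map to an empty list so they can be routed to validation.

```python
import re


def map_citations(answer: str, source_ids: list[str]) -> list[tuple[str, list[str]]]:
    """Map each sentence of an answer to the source IDs it cites.

    Citations are assumed to be 1-based bracketed indices such as [1] or
    [2][3], placed before the sentence's closing punctuation.
    """
    sentences = re.split(r"(?<=[.!?])\s+", answer.strip())
    mapped = []
    for sentence in sentences:
        indices = [int(n) for n in re.findall(r"\[(\d+)\]", sentence)]
        # Ignore out-of-range numbers instead of raising on a bad citation.
        cited = [source_ids[i - 1] for i in indices if 1 <= i <= len(source_ids)]
        mapped.append((sentence, cited))
    return mapped


source_ids = ["policy_2022", "policy_2024", "it_faq"]
answer = "Refresh cycles are four years [2][3]. An older policy said three [1]. Budgets vary."
for sentence, cited in map_citations(answer, source_ids):
    print(cited, "<-", sentence)
```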

Develop response validation mechanisms that check generated content against source materials to catch unsupported claims and reduce hallucination. Use semantic similarity scoring and fact-checking pipelines to confirm that synthesized responses accurately represent source information.
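A cheap first-pass validator, sketched under a stated simplification: support is scored as the share of a sentence's tokens found in the best-matching source passage. This lexical overlap stands in for the embedding similarity or entailment model a production pipeline would use; `unsupported_sentences` and the 0.5 threshold are hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def unsupported_sentences(answer_sentences, source_passages, threshold=0.5):
    """Flag sentences that no source passage appears to support.

    Returns (sentence, best_score) pairs whose best overlap with any
    passage falls below `threshold`.
    """
    flagged = []
    source_tokens = [tokens(p) for p in source_passages]
    for sentence in answer_sentences:
        sent = tokens(sentence)
        if not sent:
            continue
        best = max((len(sent & src) / len(sent) for src in source_tokens), default=0.0)
        if best < threshold:
            flagged.append((sentence, round(best, 2)))
    return flagged


sources = ["Laptops are refreshed every four years under the 2024 policy."]
flags = unsupported_sentences(
    ["Laptops are refreshed every four years.", "Phones are replaced annually."], sources
)
print(flags)
```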

Enterprise Integration and Deployment Patterns

Deploying contextual RAG systems in enterprise environments requires careful consideration of existing infrastructure, security requirements, and user workflows.

Infrastructure Architecture

Design scalable architectures that can handle enterprise document volumes while maintaining response time requirements. Implement distributed processing pipelines that can parallelize document ingestion, graph construction, and embedding generation across multiple compute nodes.
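A single-machine sketch of the fan-out pattern using Python's standard `concurrent.futures`. The `ingest` step (word-based chunking) is a hypothetical placeholder. Threads suit I/O-bound work such as fetching and parsing files; CPU-heavy stages like embedding would usually move to processes or a distributed framework that spans several nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def ingest(doc: tuple[str, str]) -> tuple[str, list[str]]:
    """Hypothetical per-document stage: split text into 50-word chunks."""
    doc_id, text = doc
    words = text.split()
    chunks = [" ".join(words[i:i + 50]) for i in range(0, len(words), 50)]
    return doc_id, chunks


def ingest_all(docs: dict[str, str], workers: int = 8) -> dict[str, list[str]]:
    # executor.map preserves input order and re-raises any worker exception.
    with ThreadPoolExecutor(max_workers=workers) as executor:
        return dict(executor.map(ingest, docs.items()))


chunked = ingest_all({"a": "word " * 120, "b": "short"})
print({doc_id: len(chunks) for doc_id, chunks in chunked.items()})
```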

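Use a vector database that can store hierarchy and relationship information alongside each vector. Solutions like Pinecone, Weaviate, or Qdrant let you attach metadata to vectors and filter on it at query time, so document, section, and chunk identifiers (and links to related documents) can travel with each embedding while still providing the query performance required for real-time applications.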

Implement caching strategies at multiple levels: cache document graphs, embedding computations, and frequently requested synthesis results. This can substantially reduce response times for common queries while lowering computational overhead.
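Two of those cache levels can be sketched with the standard library: `functools.lru_cache` memoizes a hypothetical `embed` call, and a dictionary keyed on the normalized query plus the set of source documents stores synthesis results. Both the embedding and the answer are placeholders. A real cache would also need invalidation when source documents change, for example by adding document versions to the key.

```python
from functools import lru_cache

embed_calls = 0  # counts real (uncached) embedding calls


@lru_cache(maxsize=10_000)
def embed(text: str) -> tuple[float, ...]:
    """Hypothetical embedding call, memoized so repeated chunks are free."""
    global embed_calls
    embed_calls += 1
    return (float(len(text)), float(text.count(" ")))  # placeholder vector


synthesis_cache: dict[tuple[str, frozenset[str]], str] = {}


def synthesize(query: str, doc_ids: list[str]) -> str:
    # Key on the normalized query and the *set* of sources, so the same
    # documents retrieved in a different order still hit the cache.
    key = (query.strip().lower(), frozenset(doc_ids))
    if key not in synthesis_cache:
        synthesis_cache[key] = f"answer from {sorted(doc_ids)}"  # placeholder for the LLM call
    return synthesis_cache[key]


embed("rotate credentials")
embed("rotate credentials")
first = synthesize("What is the refresh cycle?", ["policy_2024", "it_faq"])
second = synthesize(" what is the refresh cycle? ", ["it_faq", "policy_2024"])
print(embed_calls, first == second)
```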

Security and Compliance Framework

Build access control systems that respect document-level permissions throughout the synthesis process. Users should only receive synthesized responses that draw from documents they have permission to access. Implement fine-grained permission checking that evaluates access rights for each source document before including it in synthesis operations.
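A minimal sketch of the permission check, assuming a hypothetical ACL that maps each document to the groups allowed to read it. Chunks are filtered before they reach the prompt, and documents missing from the ACL are denied by default, so a gap in permission data fails closed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrievedChunk:
    chunk_id: str
    doc_id: str
    text: str


def authorized_chunks(chunks, user_groups, doc_acl):
    """Keep only chunks whose source document the user may read.

    `doc_acl` maps doc_id -> set of groups allowed to read it. A chunk is
    kept when the user shares at least one group with its document;
    documents absent from the ACL are denied.
    """
    return [c for c in chunks if doc_acl.get(c.doc_id, set()) & user_groups]


chunks = [
    RetrievedChunk("c1", "hr_salaries", "Salary bands for 2024..."),
    RetrievedChunk("c2", "handbook", "Laptops are refreshed every four years."),
    RetrievedChunk("c3", "unlisted_doc", "Draft reorg plan..."),
]
acl = {"hr_salaries": {"hr"}, "handbook": {"all-staff", "hr"}}
visible = authorized_chunks(chunks, {"all-staff"}, acl)
print([c.chunk_id for c in visible])
```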

Develop audit trails that track which documents contributed to each generated response. This enables compliance reporting and helps organizations understand how their knowledge systems are being used. Include logging for all retrieval operations, synthesis generation, and user interactions.
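A sketch of one audit record per response, written as a JSON line to any file-like object. The field names and the `log_synthesis` helper are hypothetical; an in-memory buffer stands in for an append-only log. Because queries can themselves contain sensitive text, the log may need the same access controls as the documents.

```python
import io
import json
import time
from typing import TextIO


def log_synthesis(out: TextIO, user_id: str, query: str, doc_ids: list[str], response_id: str) -> None:
    """Append one JSON line recording which documents fed a response."""
    record = {
        "ts": time.time(),
        "event": "synthesis",
        "user_id": user_id,
        "query": query,
        "source_doc_ids": sorted(doc_ids),
        "response_id": response_id,
    }
    out.write(json.dumps(record) + "\n")


buffer = io.StringIO()  # stands in for an append-only log file
log_synthesis(buffer, "u123", "laptop refresh policy", ["policy_2024", "it_faq"], "resp-001")
print(buffer.getvalue())
```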

Implement data governance frameworks that handle sensitive information appropriately. When confidential documents contribute to aggregate outputs, such as counts or usage statistics, differential privacy can bound what those outputs reveal about any single document; secure multi-party computation applies when several parties must compute over data that none of them can share directly.
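As an illustration of the differential privacy case, here is the Laplace mechanism applied to a count, such as how many confidential documents mention a topic. Adding or removing one document changes the count by at most 1, so noise with scale 1/ε gives ε-differential privacy for a single release. `dp_count` is a hypothetical helper; repeated releases consume additional privacy budget.

```python
import random


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Sensitivity is 1, so the noise scale is 1 / epsilon. The difference of
    two independent exponential draws with mean b is Laplace(0, b).
    """
    scale = 1.0 / epsilon
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(7)
noisy = dp_count(42, epsilon=0.5, rng=rng)
print(round(noisy, 2))  # 42 plus Laplace noise with scale 2
```

Smaller ε means stronger privacy and noisier counts, so the value is a policy decision as much as a technical one.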

Performance Optimization and Quality Assurance

Contextual RAG systems require sophisticated monitoring and optimization to maintain high-quality outputs while managing computational complexity.

Response Quality Metrics

Develop comprehensive evaluation frameworks that assess both factual accuracy and synthesis quality. Implement automated fact-checking pipelines that validate generated claims against source documents. Use semantic similarity scoring to ensure that synthesized responses accurately represent source material perspectives.
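One synthesis-specific metric that is easy to automate, sketched here as an assumption rather than a standard: micro-averaged precision and recall of cited sources against the sources a reviewer judged necessary. Low recall hints at missed perspectives; low precision hints at padding with irrelevant citations.

```python
def source_attribution_scores(cases):
    """Micro-averaged precision and recall of cited sources.

    Each case is (expected_sources, cited_sources): documents a reviewer
    judged necessary, and documents the system actually cited.
    """
    tp = fp = fn = 0
    for expected, cited in cases:
        expected, cited = set(expected), set(cited)
        tp += len(expected & cited)
        fp += len(cited - expected)
        fn += len(expected - cited)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


cases = [
    ({"policy_2024", "it_faq"}, {"policy_2024"}),       # missed one source
    ({"incident_log"}, {"incident_log", "handbook"}),   # cited an extra source
]
precision, recall = source_attribution_scores(cases)
print(round(precision, 3), round(recall, 3))
```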

Create human evaluation workflows for assessing synthesis quality, coherence, and usefulness. Regularly sample system outputs for expert review, focusing on complex queries that require multi-document synthesis. Use this feedback to refine synthesis prompts and improve generation quality.
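A sketch of sampling for expert review that deliberately oversamples multi-document responses. The `source_count` field, the three-source cutoff, and the 70% share are hypothetical parameters; the seed makes a review batch reproducible.

```python
import random


def sample_for_review(responses, n, min_sources=3, complex_share=0.7, seed=None):
    """Pick `n` responses for review, oversampling multi-document ones.

    `responses` is a list of dicts with a "source_count" key. About
    `complex_share` of the sample comes from responses citing at least
    `min_sources` documents; the rest is filled from simpler responses.
    """
    rng = random.Random(seed)
    complex_pool = [r for r in responses if r["source_count"] >= min_sources]
    simple_pool = [r for r in responses if r["source_count"] < min_sources]
    k_complex = min(len(complex_pool), round(n * complex_share))
    k_simple = min(len(simple_pool), n - k_complex)
    return rng.sample(complex_pool, k_complex) + rng.sample(simple_pool, k_simple)


responses = [{"id": i, "source_count": i % 5} for i in range(100)]
batch = sample_for_review(responses, 10, seed=1)
print(sorted(r["id"] for r in batch))
```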

Implement user feedback collection systems that capture implicit and explicit quality signals. Track user interactions with generated responses—do users explore cited sources, copy generated content, or abandon sessions early? These behavioral signals provide valuable insights into system effectiveness.
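The behavioral signals above can be aggregated into simple rates. This sketch assumes a hypothetical event schema of `(response_id, event_type)` pairs with types "shown", "source_opened", "copied", and "abandoned"; each rate is the share of shown responses with at least one event of that type.

```python
from collections import Counter


def feedback_rates(events):
    """Per-signal rates across all shown responses."""
    seen: dict[str, set[str]] = {}
    for response_id, event_type in events:
        seen.setdefault(response_id, set()).add(event_type)
    shown = [types for types in seen.values() if "shown" in types]
    if not shown:
        return {}
    # Each response counts at most once per signal, because types are sets.
    counts = Counter(t for types in shown for t in types if t != "shown")
    return {t: counts[t] / len(shown) for t in ("source_opened", "copied", "abandoned")}


events = [
    ("r1", "shown"), ("r1", "source_opened"), ("r1", "source_opened"),
    ("r2", "shown"), ("r2", "abandoned"),
    ("r3", "shown"), ("r3", "copied"), ("r3", "source_opened"),
    ("r4", "shown"),
]
rates = feedback_rates(events)
print(rates)
```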

Computational Efficiency

Optimize embedding generation and similarity search operations for enterprise-scale document collections. Use techniques like approximate nearest neighbor search and embedding quantization to reduce compute and memory requirements while keeping the loss in search quality small and measurable.
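Approximate nearest neighbor indexes are usually taken from a library (FAISS, or the HNSW indexes built into many vector databases), but the quantization idea is easy to show directly. This sketch applies symmetric int8 scalar quantization to one vector: one byte per dimension instead of four for float32, with a per-vector scale and a rounding error of at most half that scale per dimension. The helper names are hypothetical.

```python
def quantize_int8(vec: list[float]) -> tuple[list[int], float]:
    """Symmetric scalar quantization: floats -> int8 codes plus one scale."""
    max_abs = max(abs(x) for x in vec) or 1.0  # avoid dividing by zero
    scale = max_abs / 127
    codes = [max(-127, min(127, round(x / scale))) for x in vec]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original floats."""
    return [c * scale for c in codes]


vec = [0.12, -0.5, 0.33, 0.0]
codes, scale = quantize_int8(vec)
restored = dequantize(codes, scale)
print(codes, [round(x, 4) for x in restored])
```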

Implement intelligent caching strategies that identify frequently accessed document combinations and pre-compute synthesis results. Use machine learning models to predict which document combinations are likely to be requested together and proactively generate cached responses.
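Before training a predictive model, a frequency baseline is a reasonable starting point: count how often document pairs are retrieved together and precompute synthesis for the most common pairs. This counting heuristic is a swapped-in stand-in for the machine learning predictor mentioned above; `precompute_candidates` and its thresholds are hypothetical.

```python
from collections import Counter
from itertools import combinations


def precompute_candidates(query_log, min_count=2, top_n=10):
    """Document pairs most often retrieved together, most frequent first.

    `query_log` is a list of sets of doc IDs retrieved per query. Only
    pairs seen at least `min_count` times are returned.
    """
    pair_counts = Counter()
    for doc_ids in query_log:
        pair_counts.update(combinations(sorted(doc_ids), 2))
    return [pair for pair, count in pair_counts.most_common(top_n) if count >= min_count]


log = [{"a", "b", "c"}, {"a", "b"}, {"b", "c"}, {"a", "b"}]
candidates = precompute_candidates(log)
print(candidates)  # [('a', 'b'), ('b', 'c')]
```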

Develop load balancing strategies that distribute synthesis operations across available compute resources effectively. Consider using serverless architectures for handling variable query loads while maintaining cost efficiency.
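A sketch of greedy least-loaded dispatch, assuming each synthesis job has an estimated cost and each worker stands in for a compute node or serverless instance. A min-heap always hands the next job to the least busy worker, and taking the largest jobs first (the longest-processing-time heuristic) keeps final loads close together. A live balancer would track actual load rather than estimates.

```python
import heapq


def assign_jobs(job_costs: list[float], workers: list[str]) -> dict[str, list[int]]:
    """Greedy least-loaded assignment of job indices to workers."""
    heap = [(0.0, name) for name in workers]  # (current load, worker)
    heapq.heapify(heap)
    assignment: dict[str, list[int]] = {name: [] for name in workers}
    # Largest jobs first; sorted() is stable, so equal costs keep input order.
    for job in sorted(range(len(job_costs)), key=lambda j: job_costs[j], reverse=True):
        load, name = heapq.heappop(heap)
        assignment[name].append(job)
        heapq.heappush(heap, (load + job_costs[job], name))
    return assignment


plan = assign_jobs([5, 3, 3, 2, 1], ["w1", "w2"])
print(plan)  # {'w1': [0, 3], 'w2': [1, 2, 4]}
```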

Contextual RAG systems represent the next evolution in enterprise knowledge management, moving beyond simple document retrieval to provide intelligent synthesis capabilities that respect document relationships and provide nuanced, multi-perspective insights. By implementing the architectural patterns and deployment strategies outlined in this guide, organizations can build knowledge systems that truly understand their information landscape and provide users with the contextual understanding they need to make informed decisions.

The key to success lies in treating documents as interconnected knowledge networks rather than isolated information silos. When you build systems that understand and preserve these relationships, you create tools that don’t just find information—they synthesize understanding. Start with a pilot implementation focusing on a specific document domain, validate the approach with real users, and gradually expand to cover your organization’s broader knowledge ecosystem.