Imagine the familiar rhythm of a busy development sprint. Code is being shipped, bugs are being squashed, and features are taking shape. At the center of this controlled chaos is Jira, the undisputed source of truth for the project’s progress. Yet, for project manager Sarah, Jira often feels more like a source of anxiety. She’s caught in a constant communication crossfire. Developers are deep in flow state, but stakeholders from marketing, sales, and leadership are constantly pinging for updates. “What’s the status of JIRA-1337?” “Has the login bug been fixed yet?” Each question requires her to either dive into Jira herself to translate technical jargon into a business-friendly update or, worse, interrupt a developer, breaking their concentration. Widely cited research on workplace interruptions suggests it can take around 23 minutes to fully return to a task after a single interruption, a cumulative tax on productivity that no team can afford.

The challenge is universal: bridging the communication gap between the technical engine room and the non-technical stakeholders who depend on its output. Standard Jira notifications are too noisy and technical. Manual status reports are time-consuming to create and often outdated by the time they’re read. Lengthy status meetings pull everyone away from productive work. There has to be a better way to deliver clear, timely, and engaging updates without sacrificing developer productivity or stakeholder clarity. What if you could build an automated system that watches for important Jira updates, intelligently summarizes the changes, and then generates a personalized video report from a lifelike AI avatar, delivering it directly to the right people?

This isn’t a far-off futuristic concept; it’s a practical and powerful solution you can build today. By orchestrating a workflow between Jira webhooks, a serverless function, a Large Language Model (LLM), and the cutting-edge APIs from HeyGen and ElevenLabs, you can transform your project communication. This system works tirelessly in the background, turning dense ticket updates into digestible video summaries that keep everyone perfectly in sync. In this comprehensive walkthrough, we will guide you through every technical step required to build your own automated Jira video reporter. You will learn how to configure the triggers in Jira, write the serverless code to process the data, and integrate the generative AI services that bring your updates to life. Get ready to eliminate communication bottlenecks and empower your team with a reporting system as innovative as the products they build.

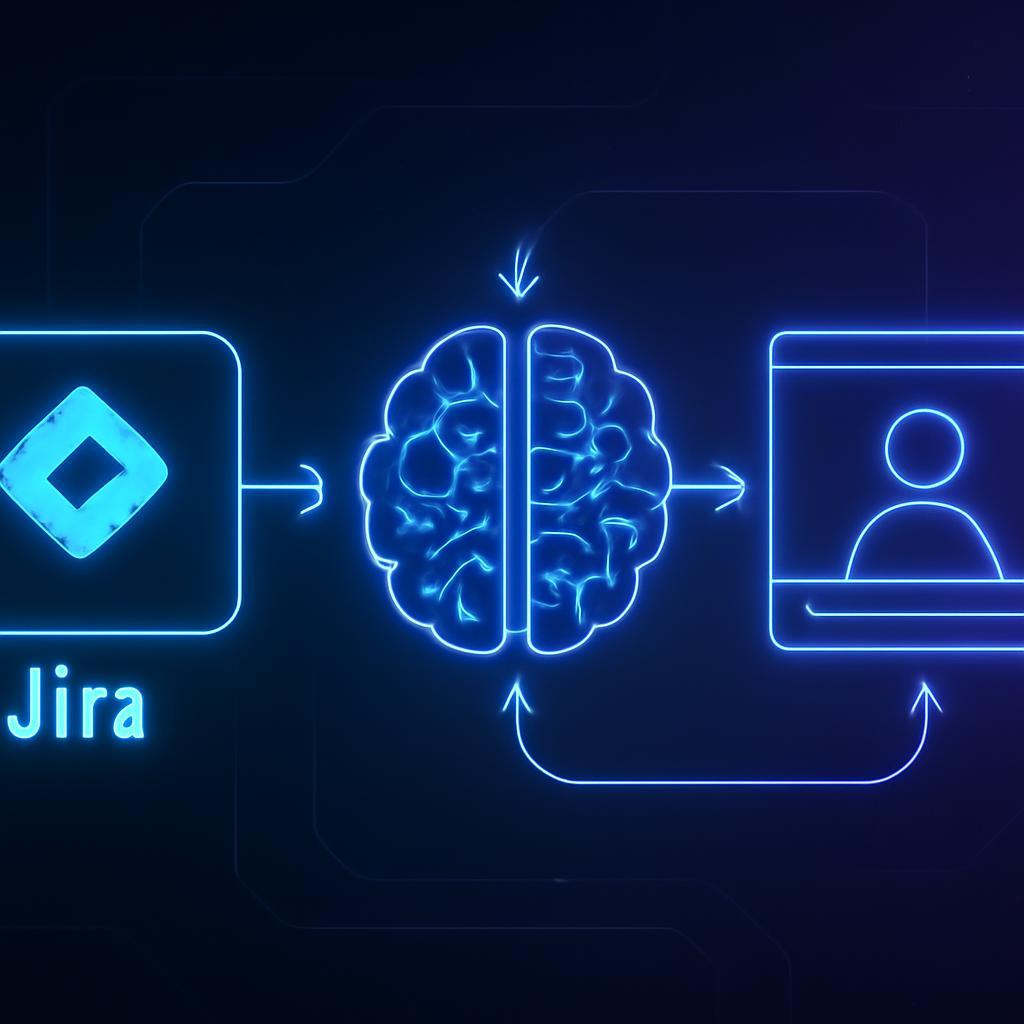

The Architecture of an Automated Jira Video Reporter

Before diving into the code, it’s crucial to understand the high-level architecture of this automation. The entire system is event-driven, kicking into action the moment an issue is updated in Jira. It’s designed to be modular, scalable, and cost-effective, leveraging serverless technology to ensure you only pay for what you use.

Core Components of the Workflow

The magic of this system lies in how five key components work in concert:

- Jira Webhooks: This is the starting pistol for our workflow. A webhook is a user-defined HTTP callback that is triggered by a specific event. In our case, we’ll configure a webhook in Jira to fire whenever an issue is updated (e.g., a comment is added, a status changes, or a field is modified). It securely sends a JSON payload containing all the relevant issue data to a specified URL.

- Serverless Function: This is the central nervous system of our operation. We’ll use a platform like AWS Lambda, Google Cloud Functions, or Azure Functions to host our code. This function acts as the endpoint for the Jira webhook, receiving the data, parsing it, and orchestrating the subsequent calls to the other services. The serverless model means we don’t have to manage any infrastructure; the function simply wakes up, does its job, and goes back to sleep.

- Large Language Model (LLM): To make the update digestible, we need to summarize it. The raw data from Jira—including technical comments, logs, and status transitions—is fed into an LLM (like one from OpenAI, Anthropic, or a self-hosted model). We’ll use prompt engineering to instruct the model to generate a concise, professional script suitable for a video narration.

- ElevenLabs API: This service transforms our text script into a hyper-realistic, human-sounding voiceover. Rather than a robotic, computer-generated voice, ElevenLabs provides a rich, expressive audio track that makes the update engaging. You can even clone your own voice or choose from a library of professional-grade voices to maintain brand consistency.

- HeyGen API: This is where the visual element comes to life. HeyGen takes the audio file generated by ElevenLabs and uses it to animate a realistic AI avatar. You can choose a pre-made avatar or create a custom one to act as your team’s designated status reporter. The API synchronizes the avatar’s lip movements and facial expressions to the audio, producing a polished, professional video.

The Workflow in Action

Visualizing the data flow helps clarify how these parts connect:

- A developer adds a comment to a Jira issue and moves it from “In Progress” to “In Review.”

- This action triggers the Jira webhook, which sends a detailed JSON payload about the issue to our serverless function’s URL.

- The serverless function activates, parsing the JSON to extract the issue key, summary, the latest comment, and the status change.

- The function formats this information into a prompt for the LLM, asking for a brief, stakeholder-friendly summary.

- The LLM returns a script, such as: “Good news on ticket JIRA-42. The login bug fix has been completed and is now in review. The team expects to deploy it by end of day pending approval.”

- This script is sent to the ElevenLabs API, which returns an MP3 audio file of the narration.

- The serverless function then calls the HeyGen API, providing the URL to the ElevenLabs audio and the ID for a pre-selected avatar.

- HeyGen processes this request and, after a minute or two, makes the final MP4 video available at a unique URL.

- Finally, the function posts the video URL back as a comment on the Jira ticket and simultaneously shares it in a designated stakeholder Slack channel for immediate visibility.
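
The steps above form a simple linear pipeline. As a rough sketch, it can be wired together with each external service passed in as a plain function, so the flow can be exercised locally with stand-ins before any real API is connected. The names used here (`run_pipeline`, `summarize`, `synthesize`, `render`, `notify`) are hypothetical and not part of any vendor SDK.

```python
from typing import Callable


def run_pipeline(
    payload: dict,
    summarize: Callable[[str], str],     # LLM step: context -> narration script
    synthesize: Callable[[str], str],    # voice step: script -> public audio URL
    render: Callable[[str], str],        # video step: audio URL -> video URL
    notify: Callable[[str, str], None],  # delivery step: (issue key, video URL)
) -> str:
    """Run one Jira update through the whole workflow and return the video URL."""
    issue = payload.get("issue", {})
    fields = issue.get("fields", {})
    issue_key = issue.get("key")
    status = (fields.get("status") or {}).get("name")
    comment = payload.get("comment", {}).get("body", "No new comment added.")

    context = (
        f"Jira Ticket: {issue_key} ({fields.get('summary')})\n"
        f"Current status: {status}\n"
        f"Latest comment: {comment}"
    )
    script = summarize(context)
    audio_url = synthesize(script)
    video_url = render(audio_url)
    notify(issue_key, video_url)
    return video_url
```

Passing the steps in as arguments keeps the orchestration logic independent of any one provider, which also makes it easy to swap the LLM or voice service later.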

Step-by-Step Implementation: Setting Up Your Jira Webhook

The first practical step is to tell Jira where to send updates. This is done by creating and configuring a webhook within your Jira instance’s administrative settings.

Creating the Webhook in Jira

- Navigate to Settings: You’ll need Jira admin permissions for this. Go to Settings (⚙️) > System.

- Find Webhooks: In the left-hand menu, under the “Advanced” section, click on Webhooks.

- Create a New Webhook: Click the “Create a Webhook” button in the top right corner. You’ll be presented with a configuration form.

- Name: Give it a descriptive name, like “AI Video Reporter.”

- URL: This is the most critical field. Enter the trigger URL for the serverless function you will create in the next step. For now, you can use a placeholder or a webhook testing service like webhook.site to inspect the payload.

- Events: This is where you specify which actions should trigger the webhook. To avoid excessive noise, be specific. A good starting point is to check the “updated” box under “Issue.” You can further refine this using Jira Query Language (JQL).

- Filter with JQL: In the “Issue related events” section, use a JQL query to limit the webhook to specific projects or issue types. For example, to only trigger for bugs and tasks in the “Phoenix Project” (PROJ), you would use:

project = PROJ AND issuetype in (Bug, Task)

This ensures the automation only runs for relevant issues, saving costs and preventing spam.
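
Webhook settings can be changed by anyone with admin access, so it may be worth repeating the same filter inside the function as a cheap second check. The sketch below mirrors the JQL above in Python. It assumes the payload exposes `issue.fields.project.key` and `issue.fields.issuetype.name`, which is worth confirming against a payload captured from your own instance. The constant and function names are illustrative.

```python
ALLOWED_PROJECT_KEYS = {"PROJ"}        # mirrors: project = PROJ
ALLOWED_ISSUE_TYPES = {"Bug", "Task"}  # mirrors: issuetype in (Bug, Task)


def matches_filter(payload: dict) -> bool:
    """Return True only for issues the JQL filter would also have let through."""
    fields = payload.get("issue", {}).get("fields", {})
    project_key = (fields.get("project") or {}).get("key")
    issue_type = (fields.get("issuetype") or {}).get("name")
    return project_key in ALLOWED_PROJECT_KEYS and issue_type in ALLOWED_ISSUE_TYPES
```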

Securing Your Webhook Endpoint

A publicly exposed URL for your serverless function is a potential security risk. Anyone who knows the URL could send fake data to it. A simple safeguard is a shared secret: generate a long random string and append it to the webhook URL as a query parameter (e.g., https://api.your-function.com/trigger?secret=YOUR_SECRET_STRING). In your serverless function, the very first step should be to check that this secret is present and correct. If it is not, reject the request immediately. Always use an HTTPS URL so the secret is encrypted in transit.
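
Here is a minimal sketch of that check. It assumes the function sits behind an API Gateway proxy integration, where query parameters arrive under `queryStringParameters`, and that the expected value is stored in an environment variable called `WEBHOOK_SECRET` (a name chosen for this example). `hmac.compare_digest` is used so the comparison takes the same time whether or not the strings match.

```python
import hmac
import os


def is_authorized(event: dict, expected_secret: str) -> bool:
    """Compare the ?secret=... query parameter with the expected value."""
    params = event.get("queryStringParameters") or {}
    supplied = params.get("secret") or ""
    if not expected_secret:
        # Refuse everything if no secret has been configured
        return False
    return hmac.compare_digest(supplied.encode("utf-8"), expected_secret.encode("utf-8"))


def guard(event: dict):
    """Return a 403 response for unauthorized requests, or None to continue."""
    expected = os.environ.get("WEBHOOK_SECRET", "")
    if not is_authorized(event, expected):
        return {"statusCode": 403, "body": "Forbidden"}
    return None
```

Calling `guard(event)` at the top of the handler and returning its result when it is not `None` keeps the rejection path short.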

Building the Orchestration Logic with a Serverless Function

With Jira ready to send data, it’s time to build the function that will receive and process it. We’ll use Python for our examples, as it’s a popular choice for scripting and API integrations, but the logic can be easily adapted to Node.js or other languages.

Parsing the Jira Payload

The JSON payload sent by Jira is rich with information. Your first task is to extract the exact details you need for the summary. A typical payload for an issue update can be complex, but you’ll likely focus on a few key fields.

Here’s a Python snippet (for an AWS Lambda function) showing how to parse the incoming event:

    import json

    def lambda_handler(event, context):
        # TODO: Verify the secret token first and reject the request if it is wrong

        # The webhook body arrives as a JSON string
        body = json.loads(event.get('body') or '{}')
        issue = body.get('issue', {})
        issue_key = issue.get('key')
        fields = issue.get('fields', {})
        summary = fields.get('summary')
        status = (fields.get('status') or {}).get('name')

        # The 'comment' object is only present if a comment triggered the update
        comment_body = body.get('comment', {}).get('body', 'No new comment added.')

        # Now, assemble the context for the LLM
        llm_context = (
            f"Jira Ticket: {issue_key} ({summary})\n"
            f"Current status: {status}\n"
            f"Latest comment: {comment_body}"
        )

        # Next steps: Call LLM, ElevenLabs, and HeyGen APIs
        # ...

        # Acknowledge the webhook so Jira sees a successful delivery
        return {'statusCode': 200, 'body': json.dumps({'issue': issue_key})}
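
The `status` field on the issue only tells you where the ticket is now, not whether this particular update moved it. For issue-updated events, Jira typically includes a `changelog` object whose `items` list describes each changed field with `field`, `fromString`, and `toString`. The helper below is a sketch built on that shape, so inspect a real payload to confirm it matches your instance. The function name is our own.

```python
from typing import Optional, Tuple


def status_transition(payload: dict) -> Optional[Tuple[str, str]]:
    """Return (old_status, new_status) if this update changed the status, else None."""
    for item in payload.get("changelog", {}).get("items", []):
        if item.get("field") == "status":
            return item.get("fromString"), item.get("toString")
    return None
```

When a transition is found, the context sent to the LLM can say “moved from In Progress to In Review” rather than only naming the current status.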

Generating the Summary with an LLM

Now, you’ll pass this llm_context to your chosen LLM. The key to getting a good result is effective prompt engineering. You need to give the model a clear role, context, and format for its output.

Example prompt structure:

"You are an expert project manager's assistant creating a script for a brief video status update. The audience is non-technical stakeholders. Summarize the following Jira update in no more than three sentences. Be clear, concise, and professional. Here is the data:\n\n{llm_context}"

You would then make an API call to a service like OpenAI, passing this prompt to the model and getting back the generated script.
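
As one possible sketch, the call below uses OpenAI’s Chat Completions endpoint through the standard library, so no extra package is needed in the deployment bundle. The model name is a placeholder to replace with whichever model you have access to, and the API key is assumed to live in an `OPENAI_API_KEY` environment variable. `build_prompt` and `generate_script` are names invented for this example.

```python
import json
import os
import urllib.request

PROMPT_TEMPLATE = (
    "You are an expert project manager's assistant creating a script for a brief "
    "video status update. The audience is non-technical stakeholders. Summarize "
    "the following Jira update in no more than three sentences. Be clear, concise, "
    "and professional. Here is the data:\n\n{llm_context}"
)


def build_prompt(llm_context: str) -> str:
    """Insert the Jira context into the fixed prompt template."""
    return PROMPT_TEMPLATE.format(llm_context=llm_context)


def generate_script(llm_context: str, model: str = "gpt-4o-mini") -> str:
    """Send the prompt to the LLM and return the narration script."""
    request = urllib.request.Request(
        "https://api.openai.com/v1/chat/completions",
        data=json.dumps({
            "model": model,  # placeholder; use a model available to your account
            "messages": [{"role": "user", "content": build_prompt(llm_context)}],
        }).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        result = json.load(response)
    return result["choices"][0]["message"]["content"].strip()
```

Keeping the prompt construction in its own function makes it easy to unit test and to vary per audience later.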

Integrating ElevenLabs for Voice Generation

With your script in hand, it’s time to give it a voice. ElevenLabs has a straightforward API for text-to-speech synthesis. You’ll need to sign up for an API key and choose a Voice ID for your AI reporter.

Here’s an example of how to call the API:

    import requests

    ELEVENLABS_API_KEY = "your_elevenlabs_api_key"  # In production, load this from an environment variable
    VOICE_ID = "your_chosen_voice_id"

    headers = {
        "Accept": "audio/mpeg",
        "Content-Type": "application/json",
        "xi-api-key": ELEVENLABS_API_KEY,
    }

    data = {
        "text": generated_script,  # The script from the LLM
        "model_id": "eleven_multilingual_v2",
        "voice_settings": {
            "stability": 0.5,
            "similarity_boost": 0.75,
        },
    }

    response = requests.post(
        f"https://api.elevenlabs.io/v1/text-to-speech/{VOICE_ID}",
        json=data,
        headers=headers,
        timeout=60,
    )
    response.raise_for_status()  # Fail loudly instead of saving an error body as audio

    # Write the audio to temporary storage first; in Lambda, /tmp is the writable directory.
    # From there you would typically copy it to durable storage such as an S3 bucket.
    with open('/tmp/update_audio.mp3', 'wb') as f:
        f.write(response.content)

    # The next step would be to get a public URL for this audio file
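
One way to get that public URL, assuming you are on AWS, is to upload the file to S3 and hand HeyGen a time-limited presigned link. The sketch below takes the S3 client as an argument, so it can be tried with a stand-in object. In a real function you would pass `boto3.client("s3")`. The function name, bucket, and key are placeholders.

```python
def publish_audio(s3_client, bucket: str, local_path: str, key: str,
                  expires_in: int = 3600) -> str:
    """Upload the MP3 and return a presigned GET URL valid for `expires_in` seconds."""
    s3_client.upload_file(
        local_path,
        bucket,
        key,
        ExtraArgs={"ContentType": "audio/mpeg"},  # so the file is served as audio
    )
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```

A presigned URL avoids making the bucket public. The expiry only needs to outlast the video rendering step.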

Creating the Video with HeyGen

The final step in generation is creating the video. HeyGen’s API allows you to combine an avatar with an audio track. You’ll first need to pick an avatar in your HeyGen account and get its ID.

Making the API call to generate the video looks like this:

    import requests

    HEYGEN_API_KEY = "your_heygen_api_key"  # In production, load this from an environment variable
    AVATAR_ID = "your_heygen_avatar_id"

    headers = {
        "X-Api-Key": HEYGEN_API_KEY,
        "Content-Type": "application/json",
    }

    data = {
        "video_inputs": [
            {
                "character": {
                    "type": "avatar",
                    "avatar_id": AVATAR_ID,
                },
                "voice": {
                    "type": "audio",
                    "audio_url": public_audio_url,  # URL of the audio from ElevenLabs
                },
            }
        ],
        "test": False,  # Set to True for testing
        "title": f"Jira Update: {issue_key}",
    }

    response = requests.post(
        "https://api.heygen.com/v2/video/generate",
        json=data,
        headers=headers,
        timeout=30,
    )
    response.raise_for_status()  # Stop here if HeyGen rejected the request
    video_id = response.json()['data']['video_id']

    # You'll need to poll the status of the video until it's ready and you get the final video URL
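
The polling loop itself is independent of HeyGen, so it can be sketched generically. In the example below, `fetch_status` is a function you supply that calls HeyGen’s video status endpoint for your `video_id` and returns the status data as a dictionary. The field values used here (`"completed"`, `"failed"`, `video_url`) are assumptions to check against HeyGen’s current API reference, and `wait_for_video` is a name made up for this sketch.

```python
import time
from typing import Callable


def wait_for_video(
    fetch_status: Callable[[], dict],
    interval_seconds: float = 10.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the video is ready and return its URL, or raise on failure/timeout."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        data = fetch_status()
        status = data.get("status")
        if status == "completed":
            return data["video_url"]
        if status == "failed":
            raise RuntimeError(f"Video generation failed: {data.get('error')}")
        if time.monotonic() >= deadline:
            raise TimeoutError("Video was not ready before the timeout")
        sleep(interval_seconds)
```

Keep the timeout below your platform’s execution limit. AWS Lambda, for example, stops a function after 15 minutes at most, so long renders may be better handled by a second function triggered on a schedule or a queue.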

Distributing the Final Video and Advanced Customizations

Generating the video is only half the battle; it needs to be delivered to the right people to be effective. This is the final step in your serverless function’s logic.

Posting the Video Link Back to Jira

To keep a complete record, a best practice is to post the video update directly to the Jira issue that triggered it. You can do this by using the Jira REST API to add a comment.

Your function would make an authenticated API call to the /rest/api/2/issue/{issueKey}/comment endpoint, with a body like: {"body": "An AI-generated video summary of this update has been created: [video_url]"}.
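
A sketch of that call is shown below, assuming Jira Cloud with basic authentication (an account email plus an API token). The base URL, credentials, and function names are placeholders. The second helper addresses a side effect worth planning for: a new comment is itself an issue update, so the function should ignore comments written by its own bot account, or it may trigger itself in a loop. The `comment.author.accountId` path is the shape Jira Cloud typically uses, so verify it on a captured payload.

```python
import base64
import json
import urllib.request


def build_comment_request(base_url: str, issue_key: str, video_url: str,
                          email: str, api_token: str) -> urllib.request.Request:
    """Build the POST request that adds the video link as a comment."""
    credentials = base64.b64encode(f"{email}:{api_token}".encode("utf-8")).decode("ascii")
    body = {
        "body": f"An AI-generated video summary of this update has been created: [{video_url}]"
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/rest/api/2/issue/{issue_key}/comment",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def is_own_comment(payload: dict, bot_account_id: str) -> bool:
    """Return True if the triggering comment was written by the bot itself."""
    author = payload.get("comment", {}).get("author", {})
    return author.get("accountId") == bot_account_id
```

Sending the request is then a matter of passing it to `urllib.request.urlopen(request, timeout=10)`.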

Notifying Stakeholders (Slack/Email)

The real power comes from pushing the update to where your stakeholders live. You can easily configure your function to:

- Post to Slack: Use Slack’s Incoming Webhooks to post a message to a specific channel (e.g., #project-phoenix-updates) with a link to the video.

- Send an Email: Use a service like AWS SES or SendGrid to email the video link to a predefined distribution list of project stakeholders.
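
For the Slack option, an Incoming Webhook accepts a small JSON document with a `text` field. The sketch below builds that message and posts it using only the standard library. The webhook URL is the one Slack generates when you add an Incoming Webhook to a channel, and the function names are our own.

```python
import json
import urllib.request


def build_slack_message(issue_key: str, script: str, video_url: str) -> dict:
    """Format the update using Slack's <url|label> link syntax."""
    return {
        "text": f"*Update on {issue_key}*\n{script}\n<{video_url}|Watch the video summary>"
    }


def post_to_slack(webhook_url: str, message: dict) -> None:
    """POST the message to the channel's Incoming Webhook URL."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(message).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises an HTTPError if Slack responds with an error status
    with urllib.request.urlopen(request, timeout=10) as response:
        response.read()
```

Including the script text alongside the link gives stakeholders the gist even if they don’t open the video.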

Ideas for Customization

Once you have the basic workflow running, the possibilities for customization are endless:

- Conditional Logic: Only generate videos for issues with a certain priority (Priority = Highest) or when the status changes to “Done.”

- Personalized Content: Use different prompts for the LLM based on the target audience. Create a technical summary for a developer channel and a business-impact summary for an executive channel.

- Dynamic Avatars: Use different HeyGen avatars or ElevenLabs voices depending on the project, giving each project its own virtual spokesperson.
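
These three ideas can all be expressed as small lookups placed in front of the existing pipeline. The sketch below is one way to do it. The prompt wording, avatar IDs, and function names are placeholders, and the payload paths (`issue.fields.priority.name`, `issue.fields.project.key`) should be confirmed against a real webhook payload.

```python
AUDIENCE_PROMPTS = {
    "engineering": "Summarize this Jira update for developers. Keep the technical detail.",
    "executive": "Summarize this Jira update for executives. Focus on business impact.",
}
AVATARS_BY_PROJECT = {"PROJ": "avatar_id_for_phoenix"}  # placeholder avatar IDs
DEFAULT_AVATAR = "default_avatar_id"


def should_generate_video(payload: dict) -> bool:
    """Conditional logic: only Highest-priority issues or issues that are Done."""
    fields = payload.get("issue", {}).get("fields", {})
    priority = (fields.get("priority") or {}).get("name")
    status = (fields.get("status") or {}).get("name")
    return priority == "Highest" or status == "Done"


def prompt_for(audience: str) -> str:
    """Personalized content: pick the instruction for the target audience."""
    return AUDIENCE_PROMPTS.get(audience, AUDIENCE_PROMPTS["executive"])


def avatar_for(payload: dict) -> str:
    """Dynamic avatars: pick a spokesperson based on the project key."""
    fields = payload.get("issue", {}).get("fields", {})
    project_key = (fields.get("project") or {}).get("key")
    return AVATARS_BY_PROJECT.get(project_key, DEFAULT_AVATAR)
```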

Remember Sarah, the project manager drowning in communication overhead? By implementing this system, her world transforms. Now, when a critical bug is fixed, stakeholders in the leadership Slack channel don’t see a cryptic “JIRA-1337 moved to Done” notification. Instead, they see a message with a one-minute video. A friendly, professional AI avatar appears and says, “Hi team, just a quick update on the Phoenix Project. The critical login bug has been resolved and deployed to production. All user-facing services are now fully operational.” Communication is clear, immediate, and engaging. The developers were never interrupted, and Sarah can focus on strategic planning instead of playing telephone.

This level of sophisticated automation is no longer the domain of large tech corporations. By cleverly combining the services you already use, like Jira, with the power of generative AI from platforms like HeyGen and ElevenLabs, you can build a truly transformative communication workflow. Ready to bring your Jira updates to life? Start by exploring the powerful, developer-friendly APIs from HeyGen and ElevenLabs, and build a reporting system that saves time, eliminates friction, and keeps everyone on your team perfectly aligned.