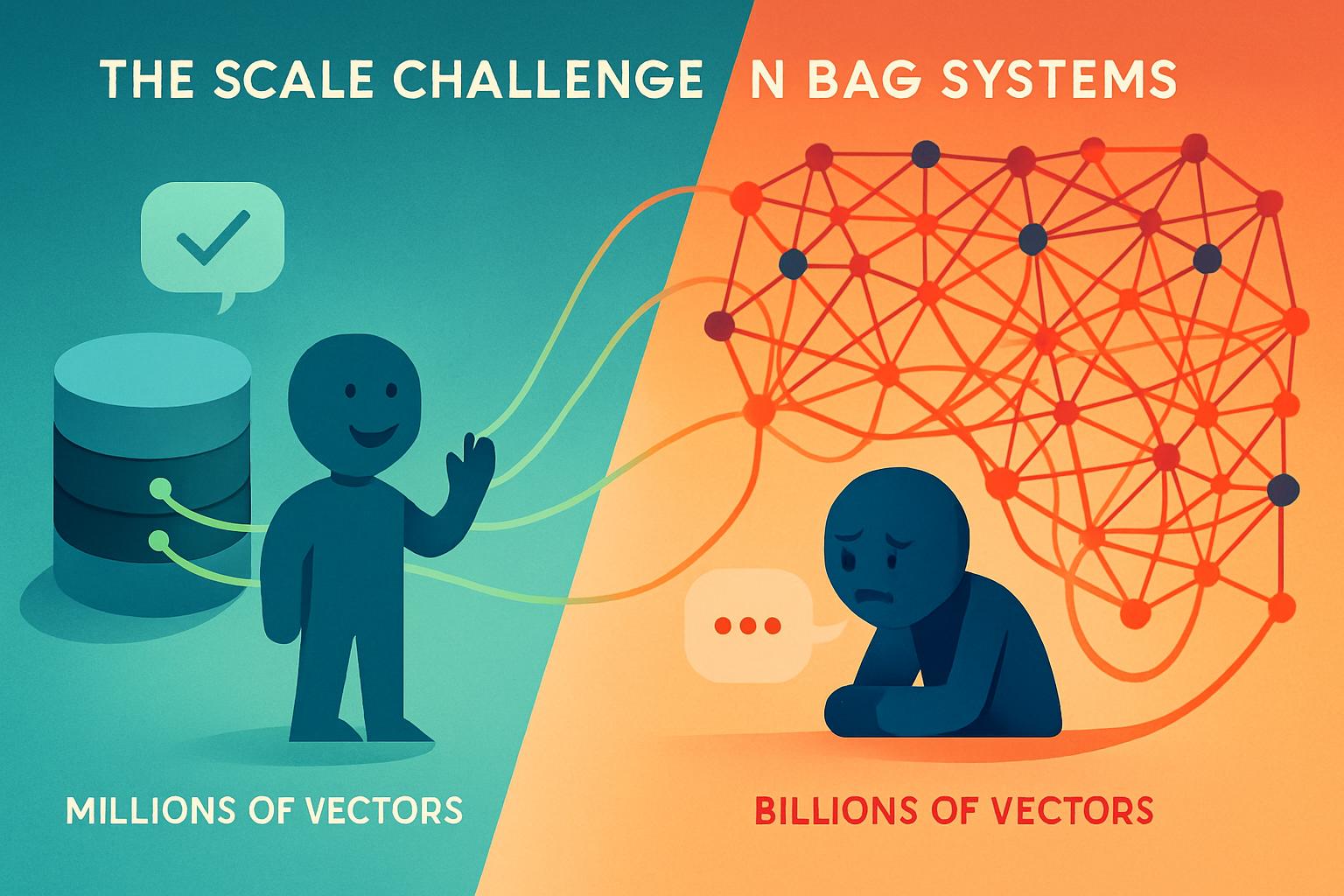

Enterprise AI teams are hitting a wall with traditional RAG systems. Despite investing millions in vector databases and fine-tuned models, they’re still getting inconsistent answers, struggling with complex multi-hop queries, and watching their systems fail when faced with nuanced business questions that require reasoning across multiple documents.

The problem isn’t just retrieval—it’s the lack of intelligent orchestration and continuous improvement. Traditional RAG systems are static, reactive, and blind to their own performance gaps. They retrieve documents, generate responses, and move on, never learning from failures or optimizing their approach.

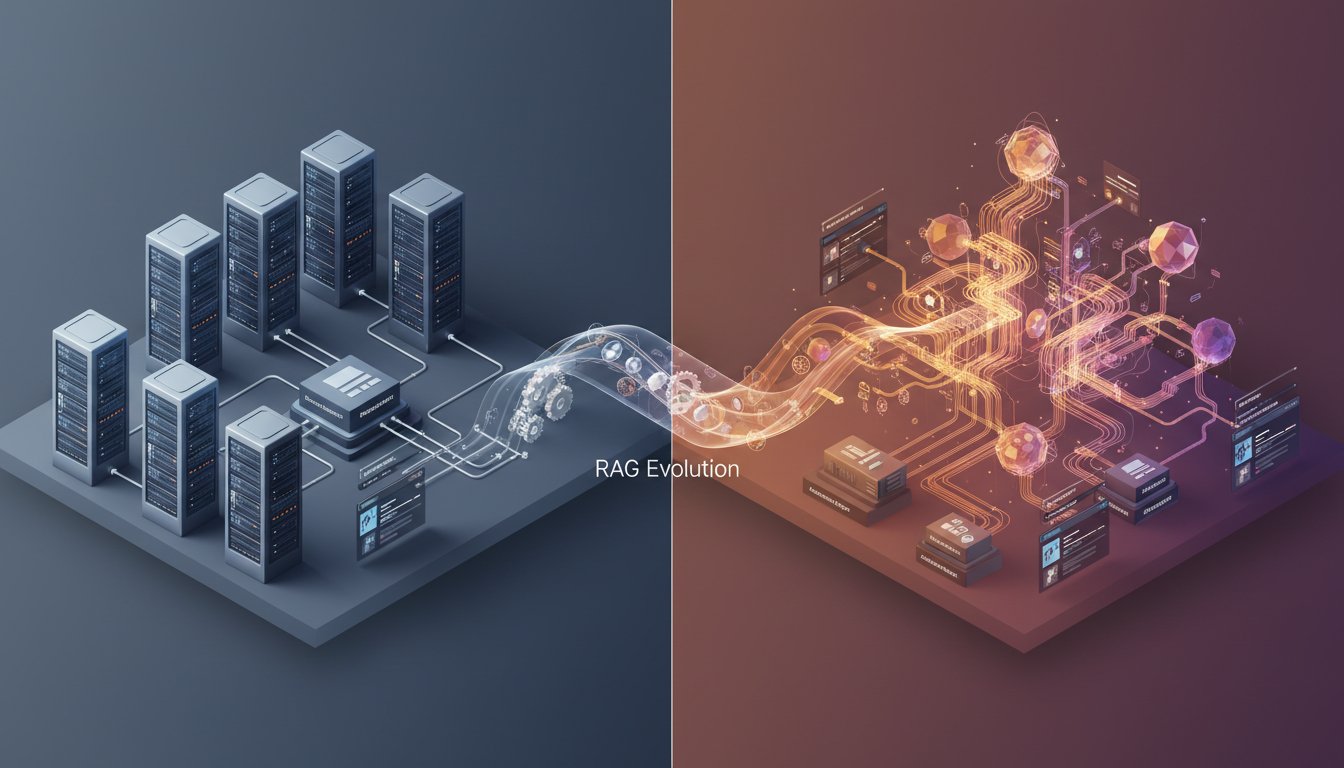

Pairing Microsoft’s GraphRAG with AutoGen addresses these gaps. Rather than another single-pipeline RAG variant, the combination yields a multi-agent system that reasons over a knowledge graph, coordinates specialized agents, and tunes its behavior using user feedback and success metrics.

In this guide, we’ll build a system that combines GraphRAG’s knowledge graph reasoning with AutoGen’s multi-agent orchestration, so that retrieval quality can improve as feedback accumulates. The implementation covers complex multi-document queries, feedback-driven tuning of retrieval strategies, and an agent-pool pattern for serving concurrent users.

Understanding the GraphRAG + Autogen Architecture

GraphRAG revolutionizes traditional RAG by building knowledge graphs from your documents instead of relying solely on vector similarity. When combined with Autogen’s multi-agent framework, you get a system where specialized agents handle different aspects of the retrieval and generation process.

The architecture consists of five primary agents:

– Graph Builder Agent: Constructs and maintains the knowledge graph from ingested documents

– Query Router Agent: Analyzes incoming queries and determines the optimal retrieval strategy

– Knowledge Retriever Agent: Executes graph-based queries and vector searches in parallel

– Response Generator Agent: Synthesizes information from multiple sources into coherent answers

– Performance Monitor Agent: Tracks system performance and triggers optimization cycles

This multi-agent approach solves critical enterprise challenges. Traditional RAG systems struggle with queries like “What are the regulatory implications of our Q3 marketing strategy for the European market?” because they can’t connect disparate concepts across documents. GraphRAG’s knowledge graph captures these relationships, while Autogen’s agents coordinate to handle the complexity.

The Knowledge Graph Advantage

GraphRAG’s knowledge graph construction goes beyond simple entity extraction. It identifies relationships, hierarchies, and contextual connections that vector embeddings miss. When a user asks about “supply chain disruptions affecting Q4 revenue projections,” the system understands that supply chains connect to vendors, vendors affect costs, costs impact margins, and margins determine revenue—even if these connections aren’t explicitly stated in any single document.

The graph structure enables sophisticated query patterns impossible with traditional RAG:

– Multi-hop reasoning across document boundaries

– Temporal relationship analysis for trend identification

– Hierarchical knowledge traversal for comprehensive coverage

– Semantic relationship exploration for context enrichment
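To make multi-hop reasoning concrete, here is a minimal sketch in plain Python that walks a toy graph built from the supply chain example above. The edge list, the relation names, and the `find_path` helper are illustrative assumptions, not GraphRAG output or part of its API.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Toy edges (source, relation, target). In practice each edge could come
# from a different document. All names here are illustrative.
EDGES: List[Tuple[str, str, str]] = [
    ("supply chain", "depends_on", "vendors"),
    ("vendors", "affect", "costs"),
    ("costs", "impact", "margins"),
    ("margins", "determine", "revenue"),
    ("vendors", "located_in", "regions"),
]


def build_adjacency(edges: List[Tuple[str, str, str]]) -> Dict[str, List[Tuple[str, str]]]:
    """Index outgoing edges by source node."""
    adjacency: Dict[str, List[Tuple[str, str]]] = {}
    for source, relation, target in edges:
        adjacency.setdefault(source, []).append((relation, target))
    return adjacency


def find_path(
    adjacency: Dict[str, List[Tuple[str, str]]], start: str, goal: str
) -> Optional[List[str]]:
    """Breadth-first search returning the shortest chain of hops, or None."""
    queue = deque([(start, [start])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbor in adjacency.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, path + [f"-{relation}->", neighbor]))
    return None


adjacency = build_adjacency(EDGES)
path = find_path(adjacency, "supply chain", "revenue")
print(" ".join(path))
```

No single edge links supply chains to revenue, yet the traversal recovers the four-hop chain. A vector search over isolated chunks has no equivalent of this step.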

Setting Up the Development Environment

Before diving into implementation, establish a robust development environment that can handle both GraphRAG’s computational requirements and Autogen’s agent coordination overhead.

Infrastructure Requirements

GraphRAG + Autogen systems demand significant computational resources. For production deployment, allocate:

– CPU: Minimum 16 cores for parallel graph processing

– Memory: 64GB+ for large knowledge graphs and concurrent agent operations

– GPU: NVIDIA A100 or equivalent if you self-host embedding generation or LLM inference; the code in this guide calls the OpenAI API, which needs no local GPU

– Storage: NVMe SSD with 1TB+ for graph database and vector index storage

Essential Dependencies

Install the core libraries and their specific versions to ensure compatibility:

pip install graphrag==0.3.0
pip install autogen==0.2.16
pip install neo4j==5.14.0
pip install openai==1.3.7
pip install langchain==0.1.0
pip install chromadb==0.4.18
pip install tiktoken==0.5.2

Database Configuration

Set up Neo4j for graph storage and ChromaDB for vector indexing:

# docker-compose.yml
version: '3.8'

services:
  neo4j:
    image: neo4j:5.14.0
    environment:
      - NEO4J_AUTH=neo4j/enterprise_password
      - NEO4J_PLUGINS=["apoc", "graph-data-science"]
    ports:
      - "7474:7474"
      - "7687:7687"
    volumes:
      - neo4j_data:/data

  chromadb:
    image: chromadb/chroma:0.4.18
    ports:
      - "8000:8000"
    volumes:
      - chroma_data:/chroma/chroma

volumes:
  neo4j_data:
  chroma_data:
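The Python code needs the connection details for these services. Below is a minimal sketch of a config loader. The environment variable names and the `chroma_host` / `chroma_port` keys are assumptions made for illustration. The defaults mirror the compose file above, and the other keys match those read by the system class later in this guide.

```python
import os
from typing import Any, Dict, Mapping


def load_config(env: Mapping[str, str] = os.environ) -> Dict[str, Any]:
    """Build the system config from environment variables (names are assumptions)."""
    api_key = env.get("OPENAI_API_KEY")
    if not api_key:
        raise RuntimeError("OPENAI_API_KEY is not set")
    return {
        # Defaults match the ports and credentials in docker-compose.yml
        "neo4j_uri": env.get("NEO4J_URI", "bolt://localhost:7687"),
        "neo4j_user": env.get("NEO4J_USER", "neo4j"),
        "neo4j_password": env.get("NEO4J_PASSWORD", "enterprise_password"),
        "chroma_host": env.get("CHROMA_HOST", "localhost"),
        "chroma_port": int(env.get("CHROMA_PORT", "8000")),
        "openai_api_key": api_key,
    }
```

Failing fast on a missing API key surfaces misconfiguration at startup rather than on the first query. In a real deployment the Neo4j password should come from a secret store instead of a default.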

Implementing the Multi-Agent RAG System

Graph Builder Agent Implementation

The Graph Builder Agent transforms unstructured documents into structured knowledge graphs. Unlike traditional chunking strategies, this agent identifies entities, relationships, and hierarchical structures that preserve semantic meaning.

from typing import Any, Dict, List

import autogen
import openai
from neo4j import GraphDatabase


class GraphBuilderAgent(autogen.AssistantAgent):
    def __init__(
        self,
        name: str,
        neo4j_uri: str,
        neo4j_user: str,
        neo4j_password: str,
        openai_api_key: str,
    ):
        super().__init__(
            name=name,
            llm_config={
                "config_list": [{
                    "model": "gpt-4-turbo-preview",
                    "api_key": openai_api_key,
                }]
            },
        )
        self.driver = GraphDatabase.driver(
            neo4j_uri,
            auth=(neo4j_user, neo4j_password),
        )
        # Explicit client, so the key passed in is the one actually used
        self.client = openai.OpenAI(api_key=openai_api_key)

    def process_documents(self, documents: List[Dict[str, Any]]) -> str:
        """Process documents and build knowledge graph"""
        for doc in documents:
            # Extract entities and relationships
            entities = self._extract_entities(doc['content'])
            relationships = self._extract_relationships(doc['content'], entities)
            # Store in Neo4j (_store_graph_data is not shown in this listing)
            self._store_graph_data(doc, entities, relationships)
        return f"Successfully processed {len(documents)} documents into knowledge graph"

    def _extract_entities(self, content: str) -> List[Dict[str, Any]]:
        """Extract entities with an LLM prompt, GraphRAG-style"""
        # Only the first 2000 characters of the document are sent to the model
        prompt = f"""
        Extract key entities from this text. For each entity, provide:
        1. Entity name
        2. Entity type (Person, Organization, Concept, Location, etc.)
        3. Importance score (1-10)
        4. Brief description

        Text: {content[:2000]}...

        Return as JSON array.
        """
        response = self.client.chat.completions.create(
            model="gpt-4-turbo-preview",
            messages=[{"role": "user", "content": prompt}],
            temperature=0.1,
        )
        # Parse and validate entities (_parse_entities_response is not shown)
        entities = self._parse_entities_response(response.choices[0].message.content)
        return entities

    def _extract_relationships(
        self, content: str, entities: List[Dict[str, Any]]
    ) -> List[Dict[str, Any]]:
        """Extract relationships between entities"""
        entity_names = [e['name'] for e in entities]
        prompt = f"""
        Identify relationships between these entities in the text:
        Entities: {entity_names}

        For each relationship, provide:
        1. Source entity
        2. Target entity
        3. Relationship type
        4. Confidence score (0-1)
        5. Supporting text snippet

        Text: {content[:2000]}...

        Return as JSON array.
        """
        response = self.client.chat.completions.create(
            model="gpt-4-turbo-preview",
            messages=[{"role": "user", "content": prompt}],
            temperature=0.1,
        )
        # _parse_relationships_response is not shown in this listing
        relationships = self._parse_relationships_response(response.choices[0].message.content)
        return relationships
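The listing calls `_parse_entities_response` without defining it. One possible shape for that helper is sketched below as standalone functions. The JSON keys `type`, `importance`, and `description` are assumptions, since the prompt does not fix key names. Only `name` is implied by the relationship-extraction code. The parser tolerates prose around the array, because models often wrap JSON in commentary.

```python
import json
import re
from typing import Any, Dict, List


def parse_json_array(raw: str) -> List[Dict[str, Any]]:
    """Pull a JSON array out of an LLM reply; return [] if nothing parses."""
    # Greedy match from the first "[" to the last "]" in the reply
    match = re.search(r"\[.*\]", raw, flags=re.DOTALL)
    if match is None:
        return []
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    return [item for item in data if isinstance(item, dict)]


def parse_entities(raw: str) -> List[Dict[str, Any]]:
    """Keep entities with a non-empty name; clamp importance to the 1-10 range."""
    entities = []
    for item in parse_json_array(raw):
        name = item.get("name")
        if not isinstance(name, str) or not name.strip():
            continue
        try:
            importance = int(item.get("importance", 5))
        except (TypeError, ValueError):
            importance = 5  # assumed default when the score is missing or malformed
        entities.append({
            "name": name.strip(),
            "type": str(item.get("type", "Concept")),
            "importance": max(1, min(10, importance)),
            "description": str(item.get("description", "")),
        })
    return entities


reply = 'Here you go:\n[{"name": "Acme Corp", "type": "Organization", "importance": 12}, {"name": ""}]'
print(parse_entities(reply))
```

Returning an empty list on malformed output lets `process_documents` skip a bad document instead of aborting the whole ingestion run. Whether to skip silently or log and retry is a design choice this sketch leaves open.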

Query Router Agent Implementation

The Query Router Agent analyzes incoming queries and determines the optimal retrieval strategy. Complex queries might require graph traversal, while simple factual questions might use vector similarity.

class QueryRouterAgent(autogen.AssistantAgent):
    def __init__(self, name: str, openai_api_key: str):
        super().__init__(
            name=name,
            llm_config={
                "config_list": [{
                    "model": "gpt-4-turbo-preview",
                    "api_key": openai_api_key,
                }]
            },
        )
        # Explicit client, so the key passed in is the one actually used
        self.client = openai.OpenAI(api_key=openai_api_key)

    def analyze_query(self, query: str) -> Dict[str, Any]:
        """Analyze query and determine retrieval strategy"""
        # The key names are spelled out because route_query() reads them directly
        analysis_prompt = f"""
        Analyze this query and determine the optimal retrieval strategy:
        Query: "{query}"

        Return a JSON object with exactly these keys:
        1. "complexity": "simple", "moderate", or "complex"
        2. "retrieval_methods": a list containing "vector_search", "graph_traversal", or both for hybrid retrieval
        3. "key_entities": a list of entity names to focus on
        4. "response_type": "factual", "analytical", or "comparative"
        5. "confidence": confidence score for this analysis (0-1)
        """
        response = self.client.chat.completions.create(
            model="gpt-4-turbo-preview",
            messages=[{"role": "user", "content": analysis_prompt}],
            temperature=0.1,
        )
        # _parse_analysis_response is not shown in this listing
        analysis = self._parse_analysis_response(response.choices[0].message.content)
        return analysis

    def route_query(self, query: str, analysis: Dict[str, Any]) -> Dict[str, Any]:
        """Route query to appropriate retrieval agents"""
        routing_strategy = {
            "query": query,
            "methods": analysis["retrieval_methods"],
            "entities": analysis["key_entities"],
            "complexity": analysis["complexity"],
            "parallel_execution": analysis["complexity"] in ["moderate", "complex"],
        }
        return routing_strategy
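`route_query` indexes the analysis dictionary directly, so a missing key or an unexpected value from the model would raise or silently disable retrieval. The following is a minimal sketch of a defensive parser that could back `_parse_analysis_response`. The fallback values (vector search only, `"simple"` complexity) are assumptions, not part of the original design.

```python
import json
from typing import Any, Dict

ALLOWED_METHODS = ("vector_search", "graph_traversal")
ALLOWED_COMPLEXITY = ("simple", "moderate", "complex")


def normalize_analysis(raw: str) -> Dict[str, Any]:
    """Parse the router's JSON reply and fall back to safe defaults."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}

    methods = data.get("retrieval_methods", [])
    if isinstance(methods, str):
        methods = [methods]
    if not isinstance(methods, list):
        methods = []
    # "hybrid" is shorthand for running both concrete methods
    if "hybrid" in methods:
        methods = list(ALLOWED_METHODS)
    methods = [m for m in methods if isinstance(m, str) and m in ALLOWED_METHODS]
    if not methods:
        methods = ["vector_search"]  # assumed cheapest fallback

    complexity = data.get("complexity")
    if not isinstance(complexity, str) or complexity not in ALLOWED_COMPLEXITY:
        complexity = "simple"

    entities = data.get("key_entities", [])
    if not isinstance(entities, list):
        entities = []

    return {
        "retrieval_methods": methods,
        "key_entities": [str(e) for e in entities],
        "complexity": complexity,
    }


print(normalize_analysis('{"retrieval_methods": "hybrid", "complexity": "complex"}'))
```

Expanding `"hybrid"` matters because the retriever checks for the literal strings `"vector_search"` and `"graph_traversal"`. A bare `"hybrid"` value would match neither and return no results.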

Knowledge Retriever Agent Implementation

The Knowledge Retriever Agent executes both graph-based queries and vector searches, then intelligently combines results based on relevance and confidence scores.

class KnowledgeRetrieverAgent(autogen.AssistantAgent):
    def __init__(
        self,
        name: str,
        neo4j_driver,
        chroma_client,
        openai_api_key: str,
    ):
        super().__init__(
            name=name,
            llm_config={
                "config_list": [{
                    "model": "gpt-4-turbo-preview",
                    "api_key": openai_api_key,
                }]
            },
        )
        self.neo4j_driver = neo4j_driver
        self.chroma_client = chroma_client

    def retrieve_knowledge(
        self,
        routing_strategy: Dict[str, Any],
    ) -> Dict[str, Any]:
        """Execute retrieval based on routing strategy"""
        results = {
            "vector_results": [],
            "graph_results": [],
            "combined_results": [],
        }
        if "vector_search" in routing_strategy["methods"]:
            results["vector_results"] = self._execute_vector_search(
                routing_strategy["query"]
            )
        if "graph_traversal" in routing_strategy["methods"]:
            results["graph_results"] = self._execute_graph_search(
                routing_strategy["query"],
                routing_strategy["entities"],
            )
        # Combine and rank results (_combine_results is not shown in this listing)
        results["combined_results"] = self._combine_results(
            results["vector_results"],
            results["graph_results"],
        )
        return results

    def _execute_vector_search(self, query: str) -> List[Dict[str, Any]]:
        """Execute vector similarity search"""
        collection = self.chroma_client.get_collection("documents")
        results = collection.query(
            query_texts=[query],
            n_results=10,
            include=["documents", "metadatas", "distances"],
        )
        formatted_results = []
        for i, doc in enumerate(results["documents"][0]):
            formatted_results.append({
                "content": doc,
                "metadata": results["metadatas"][0][i],
                # 1 - distance is a similarity only for a cosine-distance
                # collection; Chroma's default metric is squared L2, where
                # this value can be negative
                "similarity_score": 1 - results["distances"][0][i],
                "source": "vector_search",
            })
        return formatted_results

    def _execute_graph_search(
        self,
        query: str,
        entities: List[str],
    ) -> List[Dict[str, Any]]:
        """Execute graph traversal search"""
        # Build Cypher query based on entities (_build_cypher_query is not shown)
        cypher_query = self._build_cypher_query(entities)
        graph_results = []
        with self.neo4j_driver.session() as session:
            result = session.run(cypher_query, entities=entities)
            # Consume records while the session is still open
            for record in result:
                graph_results.append({
                    "content": record["content"],
                    "entities": record["entities"],
                    "relationships": record["relationships"],
                    "confidence_score": record["confidence"],
                    "source": "graph_traversal",
                })
        return graph_results
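The result-combination step is referenced as `_combine_results` but never shown. One way to do it is a weighted merge, sketched below as a standalone function. The 0.6 graph weight, the clamping of scores to [0, 1], and deduplication by normalized content are illustrative assumptions to tune against your own data.

```python
from typing import Any, Dict, List


def combine_results(
    vector_results: List[Dict[str, Any]],
    graph_results: List[Dict[str, Any]],
    graph_weight: float = 0.6,
    top_k: int = 10,
) -> List[Dict[str, Any]]:
    """Merge both result lists, dedupe by content, and rank by weighted score."""
    merged: Dict[str, Dict[str, Any]] = {}

    def add(item: Dict[str, Any], raw_score: float, weight: float) -> None:
        # Clamp to [0, 1] so one retrieval method cannot dominate through scale
        score = weight * max(0.0, min(1.0, float(raw_score)))
        key = item["content"].strip().lower()
        if key in merged:
            # Same passage found by both methods: accumulate its score
            merged[key]["score"] += score
            merged[key]["sources"].append(item["source"])
        else:
            merged[key] = {
                "content": item["content"],
                "score": score,
                "sources": [item["source"]],
            }

    for item in vector_results:
        add(item, item.get("similarity_score", 0.0), 1.0 - graph_weight)
    for item in graph_results:
        add(item, item.get("confidence_score", 0.0), graph_weight)

    ranked = sorted(merged.values(), key=lambda r: r["score"], reverse=True)
    return ranked[:top_k]
```

A passage surfaced by both vector search and graph traversal ends up ranked above one found by a single method. This is a simple way to reward agreement between the two retrieval paths.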

Orchestrating Multi-Agent Workflows

The true power of this system emerges when agents work together. Autogen’s group chat functionality enables sophisticated workflows where agents collaborate, debate, and refine their outputs.

Workflow Configuration

from typing import Optional


class SelfImprovingRAGSystem:
    def __init__(self, config: Dict[str, Any]):
        # Initialize all agents
        self.graph_builder = GraphBuilderAgent(
            name="GraphBuilder",
            neo4j_uri=config["neo4j_uri"],
            neo4j_user=config["neo4j_user"],
            neo4j_password=config["neo4j_password"],
            openai_api_key=config["openai_api_key"],
        )
        self.query_router = QueryRouterAgent(
            name="QueryRouter",
            openai_api_key=config["openai_api_key"],
        )
        # _init_chroma_client is not shown in this listing
        self.knowledge_retriever = KnowledgeRetrieverAgent(
            name="KnowledgeRetriever",
            neo4j_driver=self.graph_builder.driver,
            chroma_client=self._init_chroma_client(config),
            openai_api_key=config["openai_api_key"],
        )
        # ResponseGeneratorAgent is not defined in this guide
        self.response_generator = ResponseGeneratorAgent(
            name="ResponseGenerator",
            openai_api_key=config["openai_api_key"],
        )
        self.performance_monitor = PerformanceMonitorAgent(
            name="PerformanceMonitor",
            openai_api_key=config["openai_api_key"],
        )
        # Create group chat
        self.group_chat = autogen.GroupChat(
            agents=[
                self.query_router,
                self.knowledge_retriever,
                self.response_generator,
                self.performance_monitor,
            ],
            messages=[],
            max_round=10,
        )
        # The manager needs its own LLM config to select the next speaker
        self.manager = autogen.GroupChatManager(
            groupchat=self.group_chat,
            llm_config={
                "config_list": [{
                    "model": "gpt-4-turbo-preview",
                    "api_key": config["openai_api_key"],
                }]
            },
        )

    def process_query(
        self, query: str, user_context: Optional[Dict[str, Any]] = None
    ) -> Dict[str, Any]:
        """Process a query through the multi-agent system"""
        # Start the conversation
        initial_message = f"""
        New query received: "{query}"
        User context: {user_context or 'None provided'}

        QueryRouter: Please analyze this query and determine the retrieval strategy.
        """
        # Execute the multi-agent workflow
        chat_result = self.query_router.initiate_chat(
            self.manager,
            message=initial_message,
        )
        # Extract final response and performance metrics
        # (_extract_final_response is not shown in this listing)
        return self._extract_final_response(chat_result)
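The `_extract_final_response` helper is left undefined. A rough sketch of the idea follows, written as a standalone function. It assumes you can obtain the conversation as a list of message dictionaries carrying `name` and `content` fields, and that the answering agent is named `ResponseGenerator` as in the setup above. The returned dictionary layout is invented for illustration.

```python
from typing import Any, Dict, List


def extract_final_response(
    chat_history: List[Dict[str, Any]],
    responder_name: str = "ResponseGenerator",
) -> Dict[str, Any]:
    """Return the responder's last non-empty message plus simple stats."""
    answer = None
    # Walk backwards so the most recent answer wins
    for message in reversed(chat_history):
        content = message.get("content")
        if (
            message.get("name") == responder_name
            and isinstance(content, str)
            and content.strip()
        ):
            answer = content.strip()
            break
    return {
        "answer": answer,
        "answered": answer is not None,
        "rounds": len(chat_history),
    }


history = [
    {"name": "QueryRouter", "content": "Strategy: graph_traversal"},
    {"name": "ResponseGenerator", "content": "Draft answer"},
    {"name": "PerformanceMonitor", "content": "Looks fine"},
    {"name": "ResponseGenerator", "content": " Final answer "},
]
print(extract_final_response(history))
```

Reporting `answered: False` explicitly lets the caller distinguish a chat that hit `max_round` without an answer from one that produced an empty one.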

Performance Monitoring and Continuous Improvement

The Performance Monitor Agent tracks system performance and triggers optimization cycles. This agent learns from user feedback, query patterns, and response quality to continuously improve the system.

Feedback Integration

from datetime import datetime
from typing import Optional


class PerformanceMonitorAgent(autogen.AssistantAgent):
    def __init__(self, name: str, openai_api_key: str):
        super().__init__(
            name=name,
            llm_config={
                "config_list": [{
                    "model": "gpt-4-turbo-preview",
                    "api_key": openai_api_key,
                }]
            },
        )
        self.performance_metrics = {
            "query_count": 0,
            "average_response_time": 0,
            "user_satisfaction_scores": [],
            "retrieval_accuracy": 0,
            "improvement_triggers": [],
        }

    def track_query_performance(
        self,
        query: str,
        response: str,
        retrieval_results: Dict[str, Any],
        response_time: float,
        user_feedback: Optional[Dict[str, Any]] = None,
    ):
        """Track performance metrics for continuous improvement"""
        # Update basic metrics
        self.performance_metrics["query_count"] += 1

        # Update the running (cumulative) average response time
        current_avg = self.performance_metrics["average_response_time"]
        count = self.performance_metrics["query_count"]
        new_avg = ((current_avg * (count - 1)) + response_time) / count
        self.performance_metrics["average_response_time"] = new_avg

        # Process user feedback if provided
        if user_feedback:
            satisfaction_score = user_feedback.get("satisfaction_score", 0)
            self.performance_metrics["user_satisfaction_scores"].append(satisfaction_score)

            # Trigger improvement if satisfaction drops: the current score is
            # low and the last 10 scores also average below 3
            if satisfaction_score < 3 and len(self.performance_metrics["user_satisfaction_scores"]) > 10:
                recent_scores = self.performance_metrics["user_satisfaction_scores"][-10:]
                if sum(recent_scores) / len(recent_scores) < 3:
                    self._trigger_improvement_cycle("low_satisfaction")

    def _trigger_improvement_cycle(self, trigger_type: str):
        """Trigger system improvement based on performance issues"""
        # The three strategy methods are not shown in this listing; they must
        # exist on the class before this dictionary can be built
        improvement_strategies = {
            "low_satisfaction": self._improve_response_quality,
            "slow_retrieval": self._optimize_retrieval_speed,
            "poor_accuracy": self._enhance_knowledge_graph,
        }
        if trigger_type in improvement_strategies:
            improvement_strategies[trigger_type]()
            self.performance_metrics["improvement_triggers"].append({
                "trigger_type": trigger_type,
                "timestamp": datetime.now(),
                "action_taken": True,
            })
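The satisfaction check can also be isolated into a small, testable component. The sketch below is a variant rather than the monitor's exact rule: it fires whenever a full window of recent scores averages below the threshold, regardless of the latest score. The class name, the assumed 1-5 scale, and the `update_running_mean` helper are illustrative.

```python
from collections import deque
from typing import Deque


class SatisfactionWindow:
    """Fixed-size window of recent satisfaction scores (assumed 1-5 scale)."""

    def __init__(self, size: int = 10, threshold: float = 3.0):
        # deque(maxlen=...) drops the oldest score automatically
        self.scores: Deque[float] = deque(maxlen=size)
        self.threshold = threshold

    def mean(self) -> float:
        return sum(self.scores) / len(self.scores) if self.scores else 0.0

    def add(self, score: float) -> bool:
        """Record a score; return True when a full window averages below threshold."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and self.mean() < self.threshold


def update_running_mean(current_mean: float, count: int, new_value: float) -> float:
    """Incremental mean; count is the number of samples including new_value."""
    return current_mean + (new_value - current_mean) / count


window = SatisfactionWindow(size=3)
print([window.add(score) for score in (2, 2, 2, 5)])
```

Bounding the window keeps memory constant, whereas an ever-growing list of scores grows with traffic. The incremental mean is algebraically the same update as the running average in the monitor, written in a form that avoids multiplying a large count by the mean.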

Production Deployment and Scaling

Deploying a self-improving RAG system requires careful attention to scalability, monitoring, and maintenance. The multi-agent architecture provides natural scaling points, but coordination overhead must be managed.

Horizontal Scaling Strategy

Implement agent pools to handle concurrent requests:

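import asyncio

import chromadb
from neo4j import GraphDatabase


class ScalableRAGSystem:
    def __init__(self, config: Dict[str, Any]):
        api_key = config["openai_api_key"]
        # Clients shared by all retrievers. "chroma_host" and "chroma_port"
        # are assumed config keys, not defined elsewhere in this guide.
        driver = GraphDatabase.driver(
            config["neo4j_uri"],
            auth=(config["neo4j_user"], config["neo4j_password"]),
        )
        chroma_client = chromadb.HttpClient(
            host=config.get("chroma_host", "localhost"),
            port=config.get("chroma_port", 8000),
        )
        self.agent_pools = {
            "query_routers": [QueryRouterAgent(f"QueryRouter_{i}", api_key) for i in range(3)],
            "knowledge_retrievers": [
                KnowledgeRetrieverAgent(f"KnowledgeRetriever_{i}", driver, chroma_client, api_key)
                for i in range(5)
            ],
            "response_generators": [ResponseGeneratorAgent(f"ResponseGenerator_{i}", api_key) for i in range(3)],
        }
        # LoadBalancer must provide get_available_agent() and release_agent()
        self.load_balancer = LoadBalancer(self.agent_pools)

    async def process_query_async(self, query: str) -> Dict[str, Any]:
        """Process query with automatic load balancing"""
        # Get available agents
        router = await self.load_balancer.get_available_agent("query_routers")
        retriever = await self.load_balancer.get_available_agent("knowledge_retrievers")
        generator = await self.load_balancer.get_available_agent("response_generators")
        try:
            # analyze_query and retrieve_knowledge are synchronous, so run
            # them in worker threads instead of awaiting them directly
            analysis = await asyncio.to_thread(router.analyze_query, query)
            routing_strategy = router.route_query(query, analysis)
            retrieval_results = await asyncio.to_thread(retriever.retrieve_knowledge, routing_strategy)
            # generate_response is assumed to be a coroutine;
            # ResponseGeneratorAgent is not defined in this guide
            final_response = await generator.generate_response(query, retrieval_results)
        finally:
            # Release agents back to the pool even if a step fails
            self.load_balancer.release_agent(router)
            self.load_balancer.release_agent(retriever)
            self.load_balancer.release_agent(generator)
        return final_response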
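The `LoadBalancer` used above is not defined in this guide. A minimal sketch of one possible implementation follows, built on `asyncio.Queue` so that callers wait when a pool is exhausted. The method names match the calls above; everything else, including tracking pool membership by object identity, is an assumption.

```python
import asyncio
from typing import Any, Dict, List


class LoadBalancer:
    """Hands out idle agents per pool; callers wait when a pool is empty."""

    def __init__(self, agent_pools: Dict[str, List[Any]]):
        # On Python 3.10+ a Queue binds to the event loop on first use,
        # so it is safe to construct this outside a coroutine.
        self._queues: Dict[str, asyncio.Queue] = {}
        self._pool_of: Dict[int, str] = {}
        for pool_name, agents in agent_pools.items():
            queue: asyncio.Queue = asyncio.Queue()
            for agent in agents:
                queue.put_nowait(agent)
                # Remember which pool each agent belongs to for release_agent()
                self._pool_of[id(agent)] = pool_name
            self._queues[pool_name] = queue

    async def get_available_agent(self, pool_name: str) -> Any:
        """Wait until an agent in the named pool is idle, then check it out."""
        return await self._queues[pool_name].get()

    def release_agent(self, agent: Any) -> None:
        """Return a checked-out agent to its original pool."""
        self._queues[self._pool_of[id(agent)]].put_nowait(agent)


async def demo() -> List[str]:
    # Strings stand in for agent objects in this demonstration
    balancer = LoadBalancer({"query_routers": ["router_0", "router_1"]})
    first = await balancer.get_available_agent("query_routers")
    second = await balancer.get_available_agent("query_routers")
    balancer.release_agent(first)
    third = await balancer.get_available_agent("query_routers")
    return [first, second, third]


print(asyncio.run(demo()))
```

Because checkout blocks rather than fails, a traffic spike shows up as added latency instead of errors. A production version would likely add a timeout to `get_available_agent` so that requests cannot wait indefinitely.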

Monitoring and Observability

Implement comprehensive monitoring to track system health and performance:

import prometheus_client
from opentelemetry import trace, metrics


class SystemMonitor:
    def __init__(self):
        # Prometheus metrics
        self.query_counter = prometheus_client.Counter(
            'rag_queries_total',
            'Total queries processed'
        )
        self.response_time_histogram = prometheus_client.Histogram(
            'rag_response_time_seconds',
            'Response time distribution'
        )
        self.agent_utilization_gauge = prometheus_client.Gauge(
            'rag_agent_utilization',
            'Agent pool utilization',
            ['agent_type']
        )
        # OpenTelemetry tracing
        self.tracer = trace.get_tracer(__name__)

    def track_query(self, query: str, response_time: float, success: bool):
        """Track query metrics"""
        self.query_counter.inc()
        self.response_time_histogram.observe(response_time)
        # Create trace
        with self.tracer.start_as_current_span("process_query") as span:
            span.set_attribute("query.length", len(query))
            span.set_attribute("response.time", response_time)
            span.set_attribute("success", success)

The combination of GraphRAG’s intelligent knowledge representation and Autogen’s multi-agent orchestration creates a RAG system that truly learns and improves over time. Unlike traditional implementations that remain static after deployment, this architecture continuously optimizes its retrieval strategies, refines its knowledge graphs, and adapts to user feedback patterns.

This approach transforms RAG from a simple question-answering system into an intelligent knowledge assistant that becomes more valuable with every interaction. The self-improving capabilities ensure that your investment in AI infrastructure pays increasing dividends as the system learns your organization’s specific needs and knowledge patterns.

Ready to build your own self-improving RAG system? Start with the GraphRAG documentation and Autogen tutorials, then adapt the code examples above to your specific use case. The future of enterprise AI isn’t just about better models—it’s about systems that evolve and improve themselves.