Imagine a new customer, let’s call her Jane. She just signed up for your groundbreaking SaaS product, filled with excitement and ambition. She navigates to her dashboard, only to be met with a labyrinth of features, buttons, and settings. A moment later, a generic welcome email lands in her inbox with a link to a 50-page knowledge base. Her initial excitement quickly fades into overwhelm, and within a week, her engagement plummets. This scenario is all too common. The initial moments of a customer’s journey are the most critical, yet they are often the most impersonal. According to Wyzowl, 86% of people say they’d be more likely to stay loyal to a business that invests in onboarding content that welcomes and educates them after they’ve bought.

Now, picture an alternative. The moment Jane signs up, she receives a message in the Intercom chat widget. It’s not just text; it’s a short video. An avatar, looking like a friendly customer success manager, greets her by name. “Hi Jane,” it says, “Welcome to the team! I see you’re interested in our analytics feature. Here’s a 30-second guide on how to connect your first data source to get immediate value.” In less than a minute, Jane feels seen and understood, and she has a clear, actionable first step. The challenge has always been that this level of personalization is labor-intensive and impossible to scale. How do you produce a unique video for every one of thousands of new users? The answer lies in a sophisticated, automated workflow powered by Retrieval-Augmented Generation (RAG).

This is where today’s technology shines. We can now build an intelligent system that acts as a hyper-personalized onboarding specialist for every single customer. This system connects to your customer data, uses a RAG model to pull relevant information from your knowledge base, generates a unique script with an LLM, produces a studio-quality voiceover with ElevenLabs, and creates a professional video with a digital avatar using HeyGen. The final, personalized video is then delivered automatically to the new user through Intercom. This article is not just a high-level overview; it’s a complete technical walkthrough. We will dissect the architecture of this automated video engine, provide step-by-step implementation guidance with code, and show you how to connect these powerful tools to create an onboarding experience that doesn’t just welcome users—it wows them.

The Architecture of an Automated Onboarding Video Engine

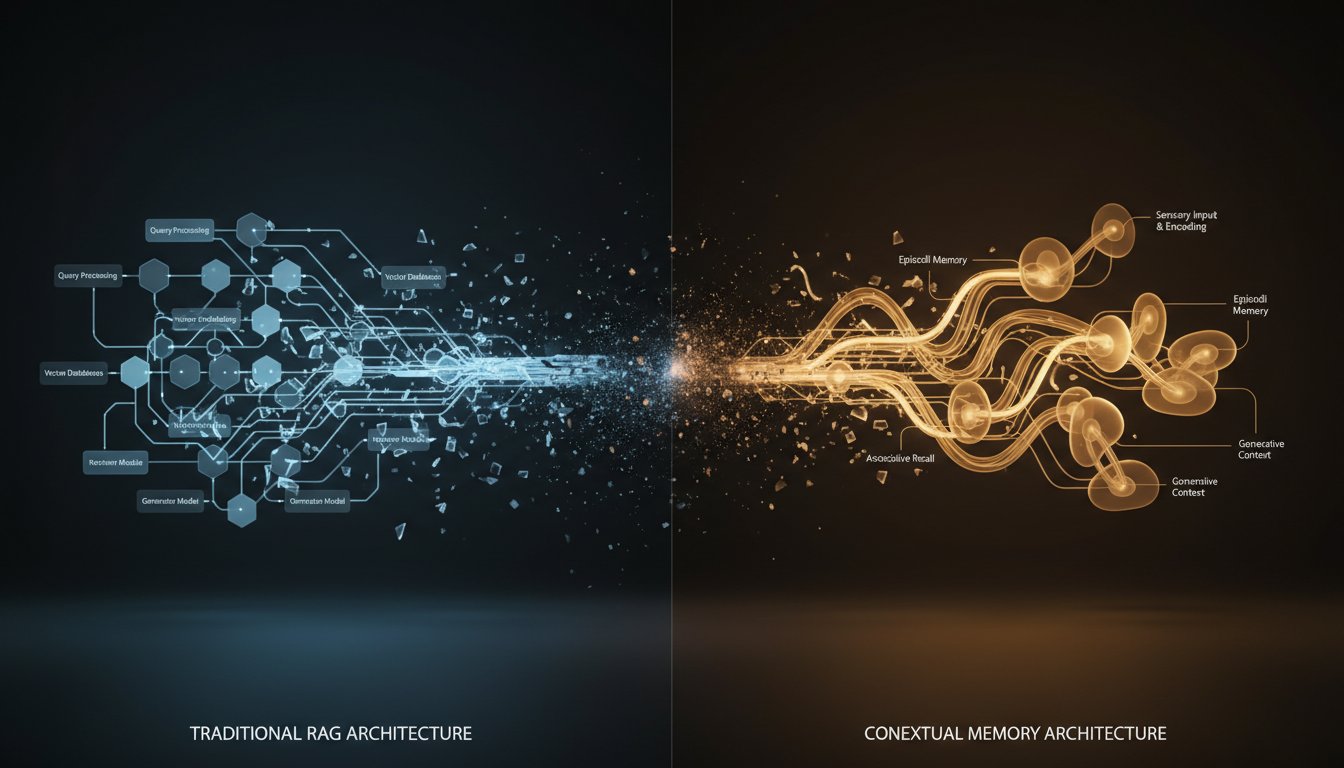

Before diving into code, it’s crucial to understand the high-level architecture. This system isn’t a single application but an orchestrated workflow of specialized AI services, each playing a critical role. Think of it as a digital assembly line for creating personalized videos at scale.

Core Components of Our RAG System

Our automated video engine is composed of several key components working in concert. Each piece is essential for transforming a simple user signup event into a personalized video message.

- Trigger: The process kicks off with an event from Intercom. Using webhooks, we can listen for specific actions, such as a “New User Created” event, which will be the starting gun for our workflow.

- Data Source & Vector Database: This is your company’s knowledge base, tutorials, and documentation. We’ll process this unstructured text, convert it into numerical representations (embeddings), and store it in a vector database like Pinecone or Qdrant. This allows our RAG system to find the most relevant information for a user’s specific needs instantly.

- Orchestrator: A central script, often running as a serverless function (like AWS Lambda or Google Cloud Functions) for efficiency, manages the entire workflow. It receives the trigger, calls the various APIs in sequence, and handles the logic.

- Large Language Model (LLM): At the heart of our scriptwriting process is an LLM like GPT-4 or Claude 3. It takes the user’s data and the context retrieved from our vector database to generate a natural, engaging, and personalized video script.

- Voice Generation: To bring the script to life, we’ll use the ElevenLabs API. It can generate incredibly lifelike, human-sounding voiceovers that are critical for building trust and a personal connection. A robotic voice would shatter the illusion of personalization.

- Video Generation: The HeyGen API takes the generated voiceover and animates a digital avatar to speak it, producing the final video. We can specify a custom or pre-made avatar, background, and other visual elements, ensuring brand consistency.

- Delivery System: Finally, the Orchestrator uses the Intercom API to send a message to the new user, embedding the link to their freshly generated, personalized onboarding video.
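To make the vector database less of a black box, here is a toy version of what it does for us: turn text into vectors, then rank stored chunks by cosine similarity to the query vector. This is a sketch under simplifying assumptions: the embed function is a hypothetical bag-of-words stand-in for a real embedding model such as text-embedding-3-small, and a brute-force sort replaces the approximate nearest-neighbor indexes that Pinecone or Qdrant use at scale.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (brute-force ranking)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


chunks = [
    "To connect a data source, open the Integrations tab and choose your provider.",
    "Invite teammates from Settings > Members.",
    "Billing plans can be changed at any time from the Billing page.",
]
# Prints a one-item list containing the Integrations chunk
print(top_k("How do I connect my first data source?", chunks, k=1))
```

With learned embeddings, a query like “How do I get started with analytics?” can match a chunk about connecting data sources even when the two share few words; this toy only rewards exact word overlap.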

The Workflow in Action: From Signup to Video Message

Let’s trace the data flow from start to finish:

- A new user, Jane, completes the signup form, indicating her interest in “Feature X.”

- Intercom fires a webhook, sending a payload containing Jane’s name, company, and feature interest to our Orchestrator function.

- The Orchestrator queries the vector database with a question like, “What is the best first step for a new user interested in Feature X?”

- The vector database returns the most relevant chunks of text from your knowledge base.

- The Orchestrator packages this context and Jane’s data into a prompt for the LLM.

- The LLM generates a personalized script: “Hi Jane, welcome! Let’s get you started with Feature X. The first thing you’ll want to do is navigate to the ‘Integrations’ tab and connect your account…”

- This script is sent to the ElevenLabs API, which returns a high-quality MP3 voiceover.

- The Orchestrator uploads the audio file to storage (like an S3 bucket) and gets a URL that HeyGen can fetch, such as a time-limited presigned URL.

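- The audio URL and your chosen avatar are sent to the HeyGen API to begin video generation. (Because HeyGen lip-syncs the avatar to the supplied audio, the script text itself is only needed if you let HeyGen generate the voice instead.)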

- HeyGen processes the request and, upon completion, sends a webhook back to our Orchestrator with the final video URL.

- The Orchestrator calls the Intercom API to post a personalized message to Jane: “Welcome, Jane! I’ve made a quick video just for you to help you get started,” followed by the video link.

This entire process, from signup to video delivery, can happen in just a few minutes, providing an immediate and powerful first touchpoint.
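The flow above splits naturally into two entry points: one fired by Intercom’s signup webhook and one fired by HeyGen’s completion webhook. Here is a minimal sketch of that orchestrator, assuming each external service call is passed in as a plain function; the Orchestrator class and its field names are hypothetical, not part of any SDK. The pending map ties a HeyGen video_id back to the Intercom user. It is an in-memory dict purely for illustration: a serverless function does not keep memory between invocations, so in production that mapping would live in a durable store such as a database table.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Orchestrator:
    """Two-phase onboarding workflow; every external service is an injected function."""

    write_script: Callable[[dict], str]       # RAG retrieval + LLM scriptwriting
    synthesize: Callable[[str], bytes]        # ElevenLabs text-to-speech
    upload: Callable[[str, bytes], str]       # Store audio, return a fetchable URL
    submit_video: Callable[[str], str]        # Start a HeyGen render, return video_id
    send_message: Callable[[str, str], None]  # Deliver the video link via Intercom
    # video_id -> Intercom user id (in-memory only for illustration)
    pending: Dict[str, str] = field(default_factory=dict)

    def on_signup(self, user: dict) -> str:
        """Handle the new-user webhook: script, voice, upload, then start the render."""
        script = self.write_script(user)
        audio = self.synthesize(script)
        audio_url = self.upload(user["id"], audio)
        video_id = self.submit_video(audio_url)
        self.pending[video_id] = user["id"]
        return video_id

    def on_video_ready(self, video_id: str, video_url: str) -> bool:
        """Handle the render-complete webhook; False for unknown or already-delivered jobs."""
        user_id = self.pending.pop(video_id, None)
        if user_id is None:
            return False
        self.send_message(user_id, video_url)
        return True
```

Wired to the functions in this guide, synthesize would be generate_voiceover, send_message would be send_video_to_intercom, and submit_video would wrap create_onboarding_video with your avatar choice. Popping the job on delivery also means a webhook that arrives twice does not message the user twice.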

Step-by-Step Implementation Guide

Now, let’s roll up our sleeves and build this system. This guide assumes you have a basic understanding of Python and API integrations.

Setting Up Your Environment and Tools

First, ensure you have the necessary accounts and tools ready.

- Prerequisites: Python 3.10+ (recent LangChain releases have dropped support for older versions), an Intercom account with admin access, and a collection of your knowledge base articles (as text or Markdown files).

- API Keys: You will need to sign up for and obtain API keys or access tokens from:

  - An LLM provider (e.g., OpenAI)

  - ElevenLabs

  - HeyGen

  - Intercom (an access token for your workspace)

- Project Setup: Create a new project directory and a virtual environment. Then install the required Python libraries:

```bash
pip install requests openai elevenlabs python-dotenv
pip install langchain langchain-openai langchain-community langchain-text-splitters
pip install langchain-qdrant qdrant-client  # Or your preferred vector DB client
```
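Because every service in the pipeline authenticates with its own credential, it helps to fail fast at startup when one is missing rather than midway through a user’s workflow. A minimal sketch, assuming the environment variable names read by the later code (plus OPENAI_API_KEY, which the OpenAI SDK and langchain-openai read by default); the Settings class and load_settings helper are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping

# Order must match the Settings fields below
REQUIRED_VARS = ("OPENAI_API_KEY", "ELEVENLABS_API_KEY", "HEYGEN_API_KEY", "INTERCOM_ACCESS_TOKEN")


@dataclass(frozen=True)
class Settings:
    openai_api_key: str
    elevenlabs_api_key: str
    heygen_api_key: str
    intercom_access_token: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read all required credentials, listing every missing one in a single error."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return Settings(*(env[name] for name in REQUIRED_VARS))
```

If you keep the keys in a .env file, call load_dotenv() from python-dotenv before load_settings() so the file’s values are in os.environ.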

Step 1: Building the RAG Foundation for Scripting

The brain of our personalization is the RAG system. It ensures the script is not just friendly but also grounded in your actual documentation, which makes it far more likely to be accurate and helpful.

- Chunk and Embed Documents: Using a library like LangChain, we’ll load your knowledge base documents, split them into smaller, digestible chunks, and use an embedding model (like OpenAI’s text-embedding-3-small) to convert them into vectors.

```python
# Simplified example using LangChain
# Requires: langchain-community, langchain-openai, langchain-qdrant,
# langchain-text-splitters, qdrant-client
from langchain_community.document_loaders import DirectoryLoader, TextLoader
from langchain_openai import OpenAIEmbeddings
from langchain_qdrant import QdrantVectorStore
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Load docs: every Markdown file under ./knowledge_base/, read as plain text
loader = DirectoryLoader(
    "./knowledge_base/",
    glob="**/*.md",
    loader_cls=TextLoader,
    loader_kwargs={"encoding": "utf-8"},
)
documents = loader.load()

# Split docs into ~1,000-character chunks that overlap by 100 characters
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=100)
docs = text_splitter.split_documents(documents)

# Embed and store
embeddings = OpenAIEmbeddings(model="text-embedding-3-small")
qdrant = QdrantVectorStore.from_documents(
    docs,
    embeddings,
    location=":memory:",  # For local testing; point at a Qdrant server in production
    collection_name="onboarding_docs",
)

retriever = qdrant.as_retriever()
```

- Create a Prompt Template: Design a prompt that instructs the LLM how to use the retrieved context to write the script.

```python
from langchain_core.prompts import PromptTemplate

template = """
You are a friendly and helpful customer onboarding specialist. Your goal is to write a concise, welcoming, and actionable video script that is approximately 30 seconds long.

Use the following context from our knowledge base to guide the user on their first step:
{context}

Here is the user's information:
User Name: {user_name}
Company: {company_name}
Feature of Interest: {feature_interest}

Based on all this, please write a video script. Start by personally greeting the user by their name.
"""

prompt = PromptTemplate.from_template(template)
```

This setup allows you to pass user data into your prompt and dynamically inject the most relevant help documentation to create a hyper-relevant script.
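The Step 1 pieces still need to be wired together: retrieve chunks for the user’s feature, join them into the {context} slot, fill in the user fields, and call the LLM. Here is a minimal sketch of that glue, written against plain functions so it runs without API keys; generate_script, build_prompt, and estimate_seconds are hypothetical helpers. It fills the template with str.format, which behaves like PromptTemplate.from_template for a template containing only simple {placeholders}. It also checks each draft against the prompt’s roughly 30-second target using an assumed speaking rate of about 150 words per minute (so 30 seconds is about 75 words); calibrate that rate against the voice you actually use.

```python
from typing import Callable, Dict, List, Sequence

WORDS_PER_MINUTE = 150  # Assumed average speaking rate; measure your chosen voice


def estimate_seconds(script: str, wpm: int = WORDS_PER_MINUTE) -> float:
    """Rough spoken duration of a script, based on its word count."""
    return len(script.split()) / wpm * 60


def build_prompt(template: str, chunks: Sequence[str], user: Dict[str, str]) -> str:
    """Join the retrieved chunks into one context block and fill the template."""
    context = "\n\n---\n\n".join(chunks)
    return template.format(
        context=context,
        user_name=user["name"],
        company_name=user["company"],
        feature_interest=user["feature_interest"],
    )


def generate_script(
    user: Dict[str, str],
    template: str,
    retrieve: Callable[[str], List[str]],
    complete: Callable[[str], str],
    max_seconds: float = 35.0,
    max_attempts: int = 2,
) -> str:
    """Retrieve context, ask the LLM for a script, and retry if the draft runs long."""
    query = f"What is the best first step for a new user interested in {user['feature_interest']}?"
    prompt = build_prompt(template, retrieve(query), user)
    word_budget = int(max_seconds * WORDS_PER_MINUTE / 60)
    for _ in range(max_attempts):
        script = complete(prompt)
        if estimate_seconds(script) <= max_seconds:
            return script
        # Ask again with an explicit word limit appended to the same prompt
        prompt += f"\n\nYour previous draft was too long. Keep it under {word_budget} words."
    raise ValueError(f"Script still longer than {max_seconds:.0f}s after {max_attempts} attempts")
```

With the objects from Step 1, retrieve could be lambda q: [d.page_content for d in retriever.invoke(q)], and complete could be lambda p: ChatOpenAI(model="gpt-4o-mini").invoke(p).content, where ChatOpenAI comes from langchain_openai and the model name is only an example.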

Step 2: Generating Lifelike Voice with ElevenLabs

A personalized message delivered in a robotic voice falls flat. ElevenLabs provides state-of-the-art voice synthesis that can be hard to tell apart from human speech, which is crucial for making a warm first impression: the quality of the voice shapes how trustworthy and polished the whole message feels.

Here’s a function to generate the voiceover from our script:

```python
import os

import requests

ELEVENLABS_API_KEY = os.getenv("ELEVENLABS_API_KEY")


def generate_voiceover(script_text: str, voice_id: str = "21m00Tcm4TlvDq8ikWAM") -> bytes:
    """Generates a voiceover with the ElevenLabs API and returns the MP3 audio bytes."""
    url = f"https://api.elevenlabs.io/v1/text-to-speech/{voice_id}"
    headers = {
        "Accept": "audio/mpeg",
        "Content-Type": "application/json",
        "xi-api-key": ELEVENLABS_API_KEY,
    }
    data = {
        "text": script_text,
        "model_id": "eleven_multilingual_v2",
        "voice_settings": {
            "stability": 0.5,  # Lower = more expressive, higher = more consistent
            "similarity_boost": 0.75,  # How closely to match the original voice
        },
    }
    response = requests.post(url, json=data, headers=headers, timeout=60)
    response.raise_for_status()
    return response.content
```
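The workflow’s upload step needs a URL from which HeyGen can download this MP3. One way to get one without making your bucket public is a time-limited presigned URL. A minimal sketch, assuming s3_client is a boto3 S3 client (created with boto3.client("s3")) and that the bucket name and the voiceovers/user_id/ key layout are placeholders you would choose yourself; upload_voiceover is a hypothetical helper, not part of either SDK.

```python
import hashlib


def upload_voiceover(s3_client, bucket: str, user_id: str, audio: bytes, expires_in: int = 3600) -> str:
    """Store MP3 bytes in S3 and return a presigned GET URL valid for expires_in seconds."""
    # A content hash gives each distinct voiceover its own key without a database lookup
    digest = hashlib.sha256(audio).hexdigest()[:16]
    key = f"voiceovers/{user_id}/{digest}.mp3"
    s3_client.put_object(Bucket=bucket, Key=key, Body=audio, ContentType="audio/mpeg")
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```

In the orchestrator this would look like audio_url = upload_voiceover(boto3.client("s3"), "my-onboarding-audio", user_id, generate_voiceover(script)). Keep the expiry comfortably longer than the time HeyGen may take to fetch the audio.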


Step 3: Creating Personalized Avatars with HeyGen

With our script and voiceover ready, it’s time to generate the video. HeyGen’s API makes it possible to programmatically create videos with talking avatars, a task that was once the exclusive domain of video production studios.

```python
import os

import requests

HEYGEN_API_KEY = os.getenv("HEYGEN_API_KEY")


def create_onboarding_video(audio_url: str, avatar_id: str = "YOUR_AVATAR_ID") -> str:
    """Submits a video generation job to the HeyGen API and returns its video_id."""
    url = "https://api.heygen.com/v2/video/generate"
    headers = {
        "Content-Type": "application/json",
        "x-api-key": HEYGEN_API_KEY,
    }
    data = {
        "video_inputs": [
            {
                "character": {
                    "type": "avatar",
                    "avatar_id": avatar_id,  # Your chosen avatar
                    "avatar_style": "normal",
                },
                # Lip-sync the avatar to our ElevenLabs voiceover
                "voice": {
                    "type": "audio",
                    "audio_url": audio_url,
                },
            }
        ],
        "test": True,  # Test-mode render for development; turn off for production videos
        "caption": False,
        "dimension": {"width": 1920, "height": 1080},
        "title": "Personalized Onboarding Video",
    }
    response = requests.post(url, headers=headers, json=data, timeout=30)
    response.raise_for_status()
    # Rendering is asynchronous; keep this id to match the completion notification
    return response.json()["data"]["video_id"]
```

This function only starts the render; HeyGen produces the video asynchronously. To learn when it’s ready, set up a webhook in your HeyGen account that notifies your orchestrator with the final video URL, and use the returned video_id to match that notification to the right user.
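If you would rather not expose a callback endpoint during development, you can poll for completion instead. A minimal sketch, assuming get_status is a function you supply that returns the data object from HeyGen’s video status endpoint (v1/video_status.get, queried with the video_id returned by create_onboarding_video), with status values of pending, processing, completed, and failed, and the finished file under video_url. Those endpoint details follow HeyGen’s documentation as we understand it, so verify them against the current API reference; wait_for_video itself is a hypothetical helper.

```python
import time
from typing import Callable


def wait_for_video(
    get_status: Callable[[str], dict],
    video_id: str,
    poll_seconds: float = 10.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the video is rendered; return its URL or raise on failure/timeout."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        data = get_status(video_id)
        status = data.get("status")
        if status == "completed":
            return data["video_url"]
        if status == "failed":
            raise RuntimeError(f"Video {video_id} failed: {data.get('error')}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Video {video_id} still '{status}' after {timeout_seconds}s")
        sleep(poll_seconds)
```

In a serverless deployment a long polling loop eats into function time limits, which is one reason the webhook route fits production better.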

Step 4: Connecting Everything with Intercom

The final step is to deliver the video. You’ll first need to configure a webhook in your Intercom workspace to send new user data to your orchestrator’s endpoint. Once your orchestrator receives the final video URL from the HeyGen webhook, it will use the Intercom API to send a message to the user who triggered the workflow.

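```python
import os

import requests

INTERCOM_ACCESS_TOKEN = os.getenv("INTERCOM_ACCESS_TOKEN")
# ID of the teammate (admin) the message will appear to come from
INTERCOM_ADMIN_ID = os.getenv("INTERCOM_ADMIN_ID")


def send_video_to_intercom(user_id: str, video_url: str) -> None:
    """Sends an in-app message with the video link from an admin to a user in Intercom."""
    # POST /conversations would open a conversation *from* the user;
    # POST /messages sends an admin-initiated message *to* them.
    url = "https://api.intercom.io/messages"
    headers = {
        "Authorization": f"Bearer {INTERCOM_ACCESS_TOKEN}",
        "Content-Type": "application/json",
        "Accept": "application/json",
    }
    data = {
        "message_type": "inapp",
        "body": f"Hi there! I made a quick personalized video to help you get started: {video_url}",
        "from": {"type": "admin", "id": INTERCOM_ADMIN_ID},
        "to": {"type": "user", "id": user_id},
    }
    response = requests.post(url, headers=headers, json=data, timeout=30)
    response.raise_for_status()
    print(f"Successfully sent video to user {user_id}")
```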
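On the inbound side, the orchestrator’s webhook endpoint should confirm that a request really came from Intercom before acting on it. Intercom’s webhook documentation describes signing each notification with your app’s client secret and sending the result in an X-Hub-Signature header as sha1= followed by an HMAC-SHA1 hex digest of the raw request body; confirm this against the current docs for your API version, as well as the exact new-user topic name (for example user.created). The sketch below assumes that signing scheme, assumes the contact sits under data.item in the payload, and reads company and feature interest from hypothetical custom attributes (company_name, feature_interest) that your signup flow would need to set.

```python
import hashlib
import hmac
import json


def verify_intercom_signature(raw_body: bytes, signature_header: str, client_secret: str) -> bool:
    """Check the X-Hub-Signature header: 'sha1=' + HMAC-SHA1(raw body, client secret)."""
    expected = "sha1=" + hmac.new(client_secret.encode(), raw_body, hashlib.sha1).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched
    return hmac.compare_digest(expected.encode(), (signature_header or "").encode())


def parse_new_user(raw_body: bytes) -> dict:
    """Pull the fields the orchestrator needs out of a new-user notification."""
    event = json.loads(raw_body)
    contact = event["data"]["item"]  # Assumed payload shape: data.item holds the contact
    attrs = contact.get("custom_attributes") or {}
    return {
        "id": contact["id"],  # The contact id later passed to send_video_to_intercom
        "name": contact.get("name") or "there",
        # Hypothetical custom attributes written by your signup form
        "company": attrs.get("company_name", ""),
        "feature_interest": attrs.get("feature_interest", ""),
    }
```

Verify against the raw bytes exactly as received; re-serializing parsed JSON can change whitespace or key order and break the signature match.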

Beyond Onboarding: Expanding the Personalized Video Workflow

Once this infrastructure is in place, its potential extends far beyond the initial welcome message. You can adapt this automated video engine for various touchpoints in the customer lifecycle:

- New Feature Announcements: Instead of a generic email blast, send personalized videos to specific user segments, explaining how a new feature solves their unique problems.

- Proactive Support & Education: Integrate with product analytics tools. If you detect a user struggling with a particular workflow, automatically trigger a video that walks them through the solution.

- Renewal and Upsell Campaigns: As a customer’s subscription renewal approaches, send a personalized “thank you” video from their “account manager” avatar, highlighting the value they’ve received and suggesting relevant upgrades.
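Each of these touchpoints can reuse the same pipeline; what changes is the question sent to the retriever and the framing added to the script prompt. A minimal sketch of that routing, assuming hypothetical event names (feature_launched, workflow_stuck, renewal_upcoming) that stand in for whatever your analytics or billing tools actually emit; the Campaign type and plan_video helper are likewise illustrative.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Campaign:
    query: str  # Question sent to the retriever; {feature} is filled in per user
    instruction: str  # Extra guidance appended to the script prompt


# Hypothetical trigger names mapped to campaign framing
CAMPAIGNS = {
    "feature_launched": Campaign(
        "How does {feature} work and what problems does it solve?",
        "Explain how this new feature helps with the user's goals.",
    ),
    "workflow_stuck": Campaign(
        "How do I complete {feature}?",
        "Walk the user through the step they seem stuck on.",
    ),
    "renewal_upcoming": Campaign(
        "Which upgrades build on {feature}?",
        "Thank the customer, recap the value they've received, and mention relevant upgrades.",
    ),
}


def plan_video(event_type: str, feature: str) -> Optional[Tuple[str, str]]:
    """Return (retrieval query, prompt instruction) for a trigger, or None to skip it."""
    campaign = CAMPAIGNS.get(event_type)
    if campaign is None:
        return None
    return campaign.query.format(feature=feature), campaign.instruction
```

Unknown events return None, so the orchestrator can ignore triggers you have not designed a video for yet.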

The initial investment in this architecture pays dividends by enabling a new, highly effective communication channel that scales with your user base.

We started with the story of Jane, the user lost in a sea of features. By implementing this system, you transform her experience. Instead of a generic email, she gets a personal guide, making her feel valued and empowered from the very first minute. That first experience can be the difference between a customer who churns within a month and one who becomes a long-term advocate for your brand. We’ve moved beyond the theory of personalization and into practical application. By intelligently combining a RAG system for contextual scripting, ElevenLabs for emotive voice, and HeyGen for scalable video production, you can build a next-generation customer experience engine.

This isn’t a futuristic dream locked away in a product roadmap; it’s an achievable project that can directly impact your activation rates, boost engagement, and reduce churn. The tools are here, and the blueprint is in this guide. Ready to build an onboarding experience that your customers will love? Grab your API keys from ElevenLabs and HeyGen, and start coding your personalized video engine today.