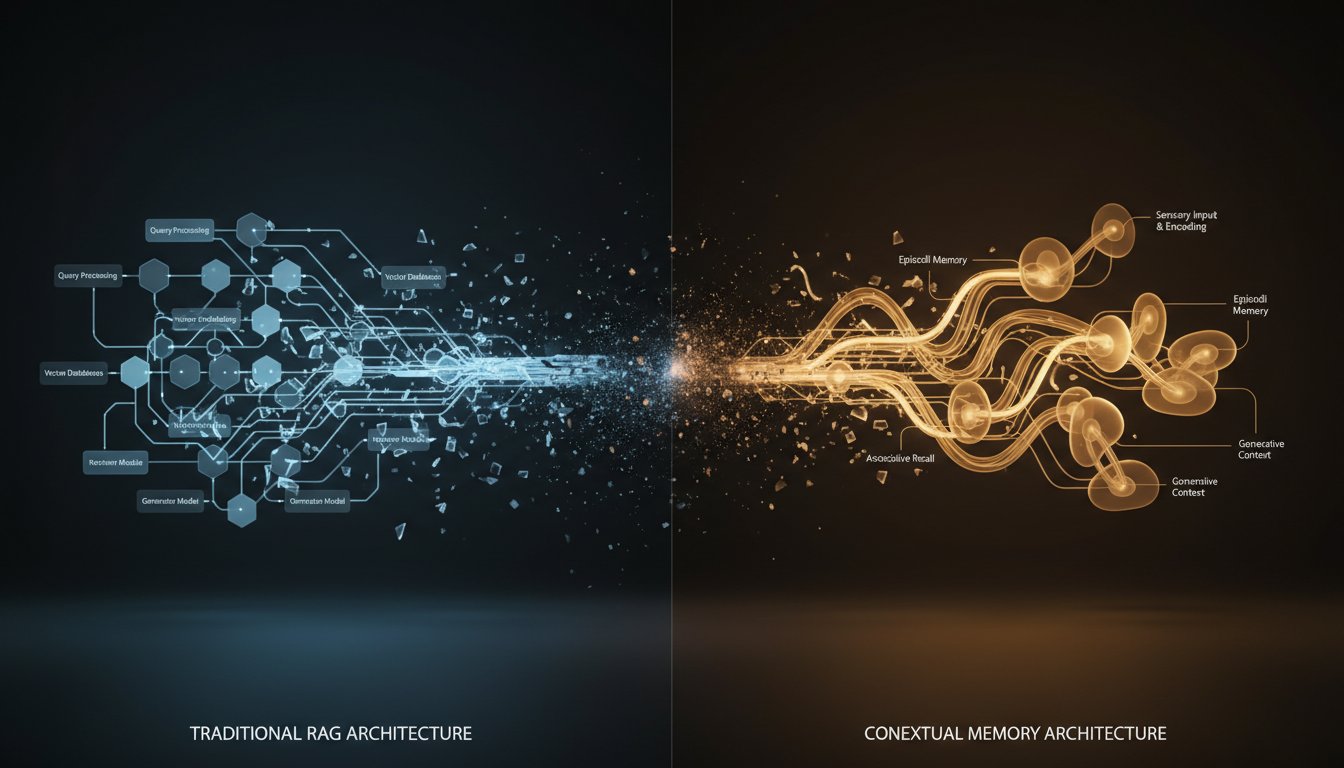

Retrieval Augmented Generation (RAG) is a technique that pairs a generative AI model with an information retrieval system. It addresses a core limitation of large language models (LLMs), namely that their knowledge is frozen at training time, by giving them access to up-to-date, domain-specific information at query time. The result is more accurate, contextually relevant, and timely responses to user queries.

The importance of RAG lies in its ability to mitigate issues associated with standalone generative AI models, such as hallucinations, outdated information, and lack of context. By retrieving relevant passages from external knowledge bases and placing them in the model’s prompt, RAG makes it far more likely that the generated content is grounded in facts and tailored to the user’s specific needs.
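The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration rather than a production design: the bag-of-words scorer stands in for a real embedding-based retriever, the `DOCS` corpus is invented for the example, and the call to an LLM is left out, so only retrieval and prompt assembly are shown.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base; a real system would index many documents.
DOCS = [
    "The refund window for online orders is 30 days from delivery.",
    "Premium support is available on weekdays from 9am to 5pm.",
    "Gift cards cannot be exchanged for cash.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Put retrieved passages ahead of the question so the model can ground its answer."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


question = "How many days is the refund window?"
prompt = build_prompt(question, retrieve(question, DOCS))
print(prompt)
```

In a real deployment the prompt would be sent to a hosted model, and the retriever would be one of the managed search or vector services discussed in the sections that follow.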

RAG’s significance is particularly evident in industries where accuracy, timeliness, and domain-specific knowledge are crucial, such as finance, healthcare, and legal services. For example, in the financial sector, RAG can enable AI systems to provide investment insights based on real-time market data, enhance compliance by verifying information sources, and mitigate risks associated with outdated or generalized information.

As businesses increasingly adopt AI solutions, RAG is becoming a standard way to use generative AI while keeping the generated content reliable, transparent, and relevant. By combining the strengths of retrieval-based and generative models, it gives organizations a practical path to answers that draw on their own data rather than on the model’s training set alone.

Key Considerations for Deploying RAG in the Cloud

When deploying RAG solutions in the cloud, several key considerations come into play to ensure optimal performance, security, and cost-effectiveness. One crucial aspect is the choice of cloud platform, with AWS, Azure, and Google Cloud Platform (GCP) being the leading contenders. Each platform offers unique strengths and trade-offs that must be carefully evaluated based on the specific requirements of the RAG application.

Security and compliance are paramount concerns when dealing with sensitive data in RAG systems. Cloud platforms encrypt data at rest and use TLS to protect data in transit, and they offer options such as customer-managed encryption keys (CMEK) in GCP for organizations that need control over the keys protecting data at rest. Additionally, features like Binary Authorization in GCP help ensure that only authorized container images are deployed, mitigating the risk of running untrusted or tampered code.

Reliability is another critical factor, as RAG applications often require high availability and fault tolerance. Cloud platforms offer regional services with automatic failover and load balancing across multiple zones, minimizing the impact of outages. For example, GCP’s AlloyDB for PostgreSQL provides high availability clusters with automatic failover, ensuring continuous operation even in the face of disruptions.

Scalability is a key advantage of deploying RAG in the cloud, as it allows for efficient handling of large knowledge corpora and user demands. Cloud platforms provide elastic resources that can be dynamically scaled based on workload requirements. This scalability extends to various components of the RAG pipeline, such as data preprocessing, retrieval, and generation, enabling optimized performance and cost-efficiency.
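As one concrete piece of the preprocessing stage mentioned above, documents are commonly split into overlapping chunks before they are embedded and indexed. The sketch below shows one simple way to do this; the word-based splitting and the default `size` and `overlap` values are assumptions chosen for illustration, and production pipelines often split on tokens or sentence boundaries instead.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of `size` words, each sharing `overlap` words with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once a chunk has reached the end of the text, so no redundant tail chunk is emitted.
        if start + size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_words(sample, size=5, overlap=2):
    print(chunk)
```

Because each chunk is independent, this step parallelizes naturally across workers, which is what makes it a good fit for elastic cloud resources.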

Integration with existing enterprise systems is another important consideration. Cloud platforms offer a wide range of services and APIs that facilitate seamless integration with data sources, authentication systems, and other enterprise applications. This integration capability allows RAG solutions to leverage existing data assets and workflows, enhancing their value and usability within the organization.

Cost optimization is a critical aspect of deploying RAG in the cloud. Cloud platforms provide flexible pricing models, such as pay-per-use and reserved instances, allowing organizations to align costs with actual usage patterns. Additionally, cloud providers offer cost management tools and best practices to help monitor and optimize resource utilization, ensuring that RAG deployments remain cost-effective over time.
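The trade-off between pay-per-use and reserved capacity comes down to simple break-even arithmetic. The sketch below illustrates it; the hourly rate and monthly reservation fee are made-up numbers, not prices from any provider.

```python
def on_demand_cost(hours_used: float, hourly_rate: float) -> float:
    """Cost of paying per hour of actual use."""
    return hours_used * hourly_rate


def break_even_hours(reserved_monthly_fee: float, hourly_rate: float) -> float:
    """Monthly usage above which a flat reservation is cheaper than on-demand."""
    return reserved_monthly_fee / hourly_rate


def cheaper_option(hours_used: float, hourly_rate: float, reserved_monthly_fee: float) -> str:
    """Pick the cheaper pricing model for a given month of usage."""
    if on_demand_cost(hours_used, hourly_rate) > reserved_monthly_fee:
        return "reserved"
    return "on-demand"


# Hypothetical prices, for illustration only.
HOURLY_RATE = 1.5
RESERVED_MONTHLY_FEE = 600.0

print("break-even hours:", break_even_hours(RESERVED_MONTHLY_FEE, HOURLY_RATE))
for hours in (300, 500):
    print(hours, "hours ->", cheaper_option(hours, HOURLY_RATE, RESERVED_MONTHLY_FEE))
```

A retrieval endpoint that runs around the clock usually lands above the break-even point, while a batch indexing job that runs a few hours a week usually lands below it.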

Ethical considerations, such as data privacy and explainability, are also crucial when deploying RAG in the cloud. Cloud platforms provide various security controls and compliance certifications to help organizations meet regulatory requirements and protect sensitive data. However, it is essential to carefully select and anonymize data used in the retrieval process, and to involve human experts in validating RAG outputs, especially in sensitive domains like healthcare.
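Anonymizing data before it reaches the retrieval index can start with something as simple as pattern-based redaction. The following is a rough sketch under that assumption: the two regular expressions are illustrative and will miss many real-world formats, so a production system would more likely rely on a dedicated PII detection service plus human review.

```python
import re

# Illustrative patterns only; they do not cover every email or phone format.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders before indexing."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 (555) 010-9999."))
```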

In conclusion, deploying RAG in the cloud requires careful consideration of security, reliability, scalability, integration, cost optimization, and ethical factors. By leveraging the strengths of leading cloud platforms like AWS, Azure, and GCP, organizations can build robust and efficient RAG solutions that drive business value and enhance user experiences. However, navigating the complexities of enterprise-scale deployment requires expertise and strategic decision-making to ensure long-term success.

AWS Offerings for RAG Deployment

Amazon Web Services (AWS) provides a comprehensive suite of offerings that enable organizations to deploy Retrieval Augmented Generation (RAG) solutions effectively. One of the key components is Amazon Kendra, an enterprise search service powered by machine learning. Kendra offers an optimized Retrieve API that integrates with its high-accuracy semantic ranker, serving as a powerful enterprise retriever for RAG workflows. This API allows developers to enhance the retrieval process and improve the overall quality of generated responses.
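A retrieval step built on Kendra’s Retrieve API might look like the sketch below. It assumes the boto3 `kendra` client and its `retrieve` operation with the `IndexId`, `QueryText`, and `PageSize` parameters; the index ID is a placeholder, and `FakeKendraClient` is a stand-in written for this example so that it runs without AWS credentials.

```python
def retrieve_passages(kendra_client, index_id: str, query: str, top_k: int = 5) -> list[dict]:
    """Fetch passages from a Kendra index and keep only the fields a prompt needs."""
    response = kendra_client.retrieve(IndexId=index_id, QueryText=query, PageSize=top_k)
    return [
        {
            "title": item.get("DocumentTitle", ""),
            "text": item.get("Content", ""),
            "uri": item.get("DocumentURI", ""),
        }
        for item in response.get("ResultItems", [])
    ]


class FakeKendraClient:
    """Stand-in for boto3.client("kendra"); not part of boto3."""

    def retrieve(self, IndexId, QueryText, PageSize):
        items = [
            {
                "DocumentTitle": "Travel policy",
                "Content": "Economy class is required for flights under six hours.",
                "DocumentURI": "s3://example-bucket/travel.pdf",
            }
        ]
        return {"ResultItems": items[:PageSize]}


# With real credentials this would be: client = boto3.client("kendra")
passages = retrieve_passages(FakeKendraClient(), "hypothetical-index-id", "Which class can I book?")
print(passages[0]["title"], "-", passages[0]["text"])
```

Passing the client in as an argument keeps the function easy to test and leaves credential handling to the caller.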

For organizations seeking a more managed approach, Amazon Bedrock is a fully managed service that simplifies the development of generative AI applications. Bedrock offers a choice of high-performing foundation models and a broad set of capabilities while maintaining privacy and security. With Knowledge Bases for Amazon Bedrock, connecting foundation models to data sources for RAG requires little custom code: embedding of documents, retrieval, and prompt augmentation are handled by the service, reducing the complexity of implementing RAG.
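To give a feel for how little code the managed path needs, the sketch below builds a request for a Bedrock knowledge base. It assumes the boto3 `bedrock-agent-runtime` client and its `retrieve_and_generate` operation; the knowledge base ID and model ARN are placeholders, and the field names should be checked against the current SDK reference before use.

```python
import json


def build_rag_request(question: str, knowledge_base_id: str, model_arn: str) -> dict:
    """Assemble the arguments for a knowledge-base-backed retrieve-and-generate call."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def ask(client, question: str, knowledge_base_id: str, model_arn: str) -> str:
    """Send the request and return the generated answer text."""
    response = client.retrieve_and_generate(
        **build_rag_request(question, knowledge_base_id, model_arn)
    )
    return response["output"]["text"]


# Placeholder identifiers; real values come from the AWS account in use.
request = build_rag_request(
    "What is our refund policy?",
    knowledge_base_id="HYPOTHETICALKB",
    model_arn="arn:aws:bedrock:us-east-1::foundation-model/example-model-id",
)
print(json.dumps(request, indent=2))
# With real credentials: ask(boto3.client("bedrock-agent-runtime"), ...)
```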

AWS also provides Amazon SageMaker JumpStart, a machine learning hub that offers foundation models, built-in algorithms, and prebuilt ML solutions. Organizations can accelerate their RAG implementation by leveraging existing SageMaker notebooks and code examples, saving valuable development time and effort.

For more customized RAG solutions, AWS offers a range of services that can be integrated to create a robust and scalable architecture. Amazon S3 and Amazon RDS provide reliable storage options for document repositories, while AWS Lambda enables quick responses and efficient orchestration between services. Amazon DynamoDB, a fast and flexible NoSQL database, can store and fetch conversations efficiently, reducing latency and improving the user experience.

To ensure the security and compliance of RAG deployments, AWS provides various encryption options, such as AWS Key Management Service (KMS) for customer-managed encryption keys. Additionally, AWS Identity and Access Management (IAM) allows fine-grained access control to resources, ensuring that only authorized users and services can interact with the RAG system.

AWS also offers a range of monitoring and logging services, such as Amazon CloudWatch and AWS CloudTrail, which help organizations track the performance and usage of their RAG deployments. These services provide valuable insights into resource utilization, API calls, and potential security events, enabling proactive management and optimization of the RAG system.

In terms of cost optimization, AWS provides flexible pricing models, such as pay-per-use and reserved instances, allowing organizations to align costs with actual usage patterns. AWS Cost Explorer and AWS Budgets help monitor and manage costs, ensuring that RAG deployments remain cost-effective over time.

Overall, AWS offers a comprehensive and flexible ecosystem for deploying RAG solutions. With a combination of managed services like Amazon Kendra and Amazon Bedrock, as well as customizable components like AWS Lambda and Amazon DynamoDB, organizations can build robust and scalable RAG architectures that deliver accurate and contextually relevant responses to user queries. By leveraging AWS’s security, monitoring, and cost optimization features, businesses can ensure the long-term success and viability of their RAG deployments.

Amazon SageMaker and its RAG Capabilities

Amazon SageMaker, a fully managed machine learning platform, offers a range of capabilities that make it well-suited for deploying Retrieval Augmented Generation (RAG) solutions. One of the key features of SageMaker is its ability to train and deploy large language models (LLMs) using popular frameworks such as PyTorch and TensorFlow. This allows organizations to leverage state-of-the-art LLMs as the foundation for their RAG systems, ensuring high-quality text generation.

SageMaker also provides a variety of built-in algorithms and pre-trained models through SageMaker JumpStart, which can be used to accelerate the development of RAG solutions. For example, the Sentence-BERT (SBERT) model, available in JumpStart, can be used for efficient semantic search and retrieval, a crucial component of RAG. By leveraging pre-trained models, organizations can save significant time and resources in building their RAG systems from scratch.

Another useful feature of SageMaker is how readily it works with other AWS services, such as Amazon S3 for storage and Amazon Kendra for enterprise search. This allows organizations to connect their document repositories to their RAG systems, enabling efficient retrieval of relevant information during the generation process. Code running in SageMaker notebooks or alongside SageMaker endpoints can call Kendra’s Retrieve API through the AWS SDK, which keeps the integration work for an end-to-end RAG solution small.

SageMaker also offers a range of tools and features for data preprocessing, model training, and deployment. For instance, SageMaker Processing allows organizations to run data processing and feature engineering tasks at scale, preparing large datasets for use in RAG systems. SageMaker Training enables distributed training of LLMs across multiple instances, accelerating the training process and reducing time-to-market. SageMaker Inference provides low-latency, high-throughput serving of trained models, ensuring fast and efficient generation of responses in RAG applications.
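The generation step against a deployed SageMaker endpoint can be sketched as follows. It assumes the boto3 `sagemaker-runtime` client and its `invoke_endpoint` operation; the endpoint name is a placeholder, and the `inputs` / `parameters` payload shape is an assumption that depends on the serving container behind the endpoint.

```python
import json


def build_payload(prompt: str, max_new_tokens: int = 256) -> dict:
    """Request body in a shape many text-generation containers accept (an assumption)."""
    return {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}


def generate(runtime_client, endpoint_name: str, prompt: str, max_new_tokens: int = 256):
    """Invoke a SageMaker endpoint with a JSON payload and decode the JSON response."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(build_payload(prompt, max_new_tokens)),
    )
    # The response body is a stream, so it has to be read before decoding.
    return json.loads(response["Body"].read())


print(json.dumps(build_payload("Context: ...\n\nQuestion: ...", max_new_tokens=64)))
# With real credentials:
#   generate(boto3.client("sagemaker-runtime"), "hypothetical-endpoint", prompt)
```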

To ensure the security and compliance of RAG deployments, SageMaker integrates with AWS security services such as AWS Identity and Access Management (IAM) and AWS Key Management Service (KMS). This allows organizations to implement fine-grained access control and encrypt sensitive data, protecting the privacy and integrity of their RAG systems.

SageMaker also provides a range of monitoring and logging capabilities through integration with Amazon CloudWatch and AWS CloudTrail. These services enable organizations to track the performance and usage of their RAG deployments, identify potential issues, and optimize resource utilization for cost-effectiveness.

In terms of cost optimization, SageMaker offers flexible pricing options, such as on-demand instances and Managed Spot Training for training jobs, allowing organizations to align costs with actual usage patterns. SageMaker endpoints also support automatic scaling, so the number of instances is adjusted to the workload, minimizing waste and improving cost-efficiency.

Overall, Amazon SageMaker provides a comprehensive and powerful platform for deploying RAG solutions in the cloud. With its ability to train and deploy large language models, integrate with other AWS services, and provide a range of tools for data preprocessing, model training, and deployment, SageMaker enables organizations to build robust and scalable RAG systems. By leveraging SageMaker’s security, monitoring, and cost optimization features, businesses can ensure the long-term success and viability of their RAG deployments, delivering accurate and contextually relevant responses to user queries.

Azure Offerings for RAG Deployment

Microsoft Azure provides a robust set of offerings for deploying Retrieval Augmented Generation (RAG) solutions, empowering organizations to harness the power of generative AI while ensuring the reliability, transparency, and relevance of the generated content. One of the key components in Azure’s RAG ecosystem is Azure AI Search, formerly known as Azure Cognitive Search. Azure AI Search is a proven solution for information retrieval, providing indexing and query capabilities with the infrastructure and security of the Azure cloud. It offers seamless integration with embedding models for indexing and chat models or language understanding models for retrieval, enabling organizations to design comprehensive RAG solutions that include all the elements for generative AI over their proprietary content.

Azure OpenAI Service is another crucial offering for RAG on Azure. It provides access to OpenAI’s language models, such as the GPT family, some of which can be fine-tuned for specific domains, and it can be integrated with Azure AI Search to create powerful RAG applications. The combination of Azure OpenAI Service and Azure AI Search allows organizations to leverage the strengths of both retrieval-based and generative models, delivering accurate and contextually relevant responses to user queries.

For a more streamlined approach to RAG deployment, Microsoft offers the GPT-RAG Solution Accelerator, an enterprise-ready solution designed specifically for deploying large language models using the RAG pattern within enterprise settings. GPT-RAG emphasizes a robust security framework and zero-trust principles to handle sensitive data carefully, incorporating features like Azure Virtual Network and Azure Front Door with Web Application Firewall to ensure data security. It also includes auto-scaling capabilities to adapt to varying workloads, ensuring a seamless user experience even during peak times.

Azure Databricks, an Apache Spark-based analytics platform, plays a significant role in RAG deployments on Azure. It provides an integrated set of tools that support various RAG scenarios, such as processing unstructured data, managing embeddings, and serving RAG applications. Azure Databricks enables organizations to process and prepare large datasets for use in RAG systems, leveraging its distributed computing capabilities and integration with other Azure services.

To ensure the security and compliance of RAG deployments, Azure offers a range of security features and services. Azure Key Vault provides secure storage for encryption keys and secrets, while Microsoft Entra ID (formerly Azure Active Directory) handles authentication and fine-grained access control. Azure Monitor and Azure Log Analytics help organizations track the performance and usage of their RAG deployments, providing valuable insights for proactive management and optimization.

In terms of cost optimization, Azure provides flexible pricing models, such as pay-as-you-go and reserved instances, allowing organizations to align costs with actual usage patterns. Azure Cost Management and Billing helps monitor and manage costs, ensuring that RAG deployments remain cost-effective over time.

Overall, Azure offers a comprehensive and integrated ecosystem for deploying RAG solutions, combining powerful services like Azure AI Search, Azure OpenAI Service, and Azure Databricks. With its emphasis on security, scalability, and cost optimization, Azure enables organizations to build robust and efficient RAG applications that drive business value and enhance user experiences. By leveraging Azure’s offerings and best practices, businesses can navigate the complexities of enterprise-scale RAG deployment and ensure long-term success in the rapidly evolving landscape of generative AI.

Azure Cognitive Search and its RAG Integration

Azure Cognitive Search, now known as Azure AI Search, is a powerful information retrieval solution that plays a crucial role in deploying Retrieval Augmented Generation (RAG) solutions on the Azure platform. It provides a solid foundation for indexing and querying large datasets, enabling organizations to build comprehensive RAG applications that deliver accurate and contextually relevant responses to user queries.

One of the key strengths of Azure AI Search is its seamless integration with embedding models for indexing and chat models or language understanding models for retrieval. This integration allows organizations to leverage the power of state-of-the-art language models, such as those provided by Azure OpenAI Service, to enhance the retrieval process and improve the overall quality of generated responses.

By combining Azure AI Search with Azure OpenAI Service, organizations can create powerful RAG solutions that harness the strengths of both retrieval-based and generative models. Azure AI Search handles the indexing and retrieval of relevant information from large datasets, while Azure OpenAI Service’s language models, such as those in the GPT family, generate high-quality responses based on the retrieved information.
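This division of labor can be sketched as two small functions: one that pulls passages from a search index and one that packs them into chat messages for the model. The sketch assumes a client that exposes `search(search_text=..., top=...)` in the style of the `azure-search-documents` `SearchClient`; the `content` field name depends on the index schema and is an assumption here, and the call to the chat model itself is omitted.

```python
def search_passages(search_client, query: str, top: int = 3, content_field: str = "content") -> list[str]:
    """Run a search query and pull the text field out of each hit."""
    results = search_client.search(search_text=query, top=top)
    return [doc[content_field] for doc in results]


def build_messages(question: str, passages: list[str]) -> list[dict]:
    """Build chat messages that ask the model to answer from numbered sources."""
    sources = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return [
        {
            "role": "system",
            "content": "Answer only from the numbered sources and cite them like [1].",
        },
        {"role": "user", "content": f"Sources:\n{sources}\n\nQuestion: {question}"},
    ]


# Canned passages so the sketch runs without an Azure subscription.
demo_passages = [
    "Invoices are payable within 45 days.",
    "Late payments incur a 2% monthly fee.",
]
for message in build_messages("When are invoices due?", demo_passages):
    print(message["role"], "->", message["content"])
```

Numbering the sources in the prompt makes it possible to ask the model for citations, which supports the transparency goals discussed earlier.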

The integration of Azure AI Search with RAG workflows is further simplified by the availability of the GPT-RAG Solution Accelerator, an enterprise-ready solution designed specifically for deploying large language models using the RAG pattern within enterprise settings. The GPT-RAG Solution Accelerator emphasizes a robust security framework and zero-trust principles, ensuring that sensitive data is handled carefully throughout the RAG process.

Azure AI Search also integrates seamlessly with other Azure services, such as Azure Databricks, which enables organizations to process and prepare large datasets for use in RAG systems. This integration allows businesses to leverage Azure Databricks’ distributed computing capabilities to efficiently handle unstructured data, manage embeddings, and serve RAG applications at scale.

In terms of security and compliance, Azure AI Search benefits from Azure’s comprehensive security features and services. Azure Key Vault provides secure storage for encryption keys and secrets, while Microsoft Entra ID (formerly Azure Active Directory) handles authentication and fine-grained access control. These security measures help keep RAG deployments built on Azure AI Search protected and compliant with industry standards and regulations.

The integration of Azure AI Search with Azure Monitor and Azure Log Analytics further enhances the manageability and optimization of RAG deployments. These services provide valuable insights into the performance and usage of RAG applications, enabling organizations to proactively monitor and optimize their systems for maximum efficiency and cost-effectiveness.

In conclusion, Azure AI Search is a vital component in the Azure ecosystem for deploying RAG solutions. Its seamless integration with embedding models, chat models, and language understanding models, combined with its compatibility with other Azure services, makes it a powerful tool for building comprehensive and efficient RAG applications. By leveraging Azure AI Search and its integration with the broader Azure platform, organizations can create robust and scalable RAG solutions that deliver accurate and contextually relevant responses to user queries, driving business value and enhancing user experiences.

GCP Offerings for RAG Deployment

Google Cloud Platform (GCP) offers a comprehensive suite of services and tools that enable organizations to deploy Retrieval Augmented Generation (RAG) solutions effectively. One of the key components in GCP’s RAG ecosystem is Vertex AI, a unified platform for building, deploying, and scaling machine learning models. Vertex AI provides a range of capabilities that simplify the development and deployment of RAG applications, including Vertex AI Matching Engine (since renamed Vertex AI Vector Search) for efficient vector similarity search and the Vertex AI PaLM API for access to Google’s large language models, a role since taken over by the Gemini models.

Vertex AI Matching Engine is a fully managed service that enables high-performance vector similarity search at scale. It allows organizations to store and search massive collections of vectors, making it an ideal solution for the retrieval component of RAG systems. By leveraging Vertex AI Matching Engine, businesses can efficiently index and query large datasets, ensuring fast and accurate retrieval of relevant information during the generation process.
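Conceptually, vector similarity search answers the question "which stored vectors are closest to this query vector?". The sketch below does this by brute force over a tiny in-memory index; it illustrates the operation only and is not how Matching Engine is implemented, since managed services rely on approximate nearest-neighbor indexes to stay fast at scale. The two-dimensional vectors are toy values.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k (document id, score) pairs with the highest similarity to the query."""
    scored = ((cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items())
    return [(doc_id, score) for score, doc_id in heapq.nlargest(k, scored)]


# Toy embeddings; real ones have hundreds or thousands of dimensions.
INDEX = {
    "doc-a": [1.0, 0.0],
    "doc-b": [0.0, 1.0],
    "doc-c": [1.0, 1.0],
}

for doc_id, score in top_k([1.0, 0.1], INDEX, k=2):
    print(doc_id, round(score, 3))
```

Brute force scales linearly with the number of vectors, which is exactly the cost that a managed vector search service is meant to avoid.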

The Vertex AI PaLM API provides access to Google’s advanced large language models, such as PaLM (Pathways Language Model), which can be fine-tuned for specific domains and integrated with Vertex AI Matching Engine to create powerful RAG applications. The combination of Vertex AI PaLM API and Vertex AI Matching Engine allows organizations to harness the strengths of both retrieval-based and generative models, delivering accurate and contextually relevant responses to user queries.

GCP also offers Cloud Run, a fully managed serverless platform that enables organizations to run stateless containers in a scalable and cost-effective manner. Cloud Run is well-suited for deploying RAG applications, as it allows businesses to focus on building and refining their models without worrying about infrastructure management. With Cloud Run, RAG systems can automatically scale based on demand, ensuring optimal performance and cost-efficiency.
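A rough way to reason about how far a request-driven platform like Cloud Run needs to scale is to estimate the number of in-flight requests and divide by the concurrency each instance can handle. The sketch below does that arithmetic. It is a simplified capacity estimate, not Cloud Run’s actual autoscaling algorithm, which also takes other signals such as CPU utilization into account; the traffic figures and limits are illustrative assumptions.

```python
import math


def estimate_instances(
    requests_per_second: float,
    avg_latency_seconds: float,
    concurrency_per_instance: int = 80,
    max_instances: int = 100,
) -> int:
    """Estimate instances needed: in-flight requests divided by per-instance concurrency."""
    if concurrency_per_instance <= 0:
        raise ValueError("concurrency_per_instance must be positive")
    # Little's law: average in-flight requests = arrival rate x average latency.
    in_flight = requests_per_second * avg_latency_seconds
    needed = math.ceil(in_flight / concurrency_per_instance)
    return min(max(needed, 0), max_instances)


# Hypothetical load: 200 requests per second, 1.5 s per RAG response.
print(estimate_instances(200, 1.5))
```

Because LLM responses are slow compared with typical web requests, latency tends to dominate this estimate, so shortening prompts or caching frequent answers can lower the instance count noticeably.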

For data storage and processing, GCP provides a range of services that seamlessly integrate with RAG workflows. Cloud Storage offers secure and scalable object storage for document repositories, while BigQuery, a fully managed data warehouse, enables organizations to analyze and process large datasets efficiently. These services, combined with GCP’s data processing tools like Dataflow and Dataproc, allow businesses to prepare and manage the data required for their RAG systems effectively.

To ensure the security and compliance of RAG deployments, GCP offers various security features and services. Cloud Key Management Service (KMS) provides secure key management for encrypting sensitive data, while Binary Authorization helps ensure that only trusted container images are deployed. GCP’s Identity and Access Management (IAM) enables fine-grained access control, ensuring that only authorized users and services can interact with the RAG system.

GCP also provides robust monitoring and logging capabilities through services like Cloud Monitoring and Cloud Logging. These services enable organizations to track the performance and usage of their RAG deployments, identify potential issues, and optimize resource utilization for cost-effectiveness.

In terms of cost optimization, GCP offers flexible pricing models, such as pay-per-use and committed use discounts, allowing organizations to align costs with actual usage patterns. GCP’s cost management tools, such as Cloud Billing reports and budgets with alerts, help monitor and manage costs, ensuring that RAG deployments remain cost-effective over time.

Overall, GCP offers a powerful and integrated ecosystem for deploying RAG solutions, combining advanced services like Vertex AI, Cloud Run, and BigQuery. With its emphasis on scalability, security, and cost optimization, GCP enables organizations to build robust and efficient RAG applications that deliver accurate and contextually relevant responses to user queries. By leveraging GCP’s offerings and best practices, businesses can navigate the complexities of enterprise-scale RAG deployment and drive long-term success in the rapidly evolving landscape of generative AI.

Vertex AI and its RAG Capabilities

Vertex AI, Google Cloud Platform’s unified machine learning platform, offers a range of capabilities that make it well-suited for deploying Retrieval Augmented Generation (RAG) solutions. One of the key features of Vertex AI is the Vertex AI Matching Engine, a fully managed service that enables high-performance vector similarity search at scale. This powerful tool allows organizations to store and search massive collections of vectors, making it an ideal solution for the retrieval component of RAG systems. By leveraging Vertex AI Matching Engine, businesses can efficiently index and query large datasets, ensuring fast and accurate retrieval of relevant information during the generation process.

Another crucial component of Vertex AI’s RAG capabilities is the Vertex AI PaLM API, which provides access to Google’s advanced large language models, such as PaLM (Pathways Language Model). These state-of-the-art models can be fine-tuned for specific domains and integrated with Vertex AI Matching Engine to create powerful RAG applications. The combination of Vertex AI PaLM API and Vertex AI Matching Engine allows organizations to harness the strengths of both retrieval-based and generative models, delivering accurate and contextually relevant responses to user queries.

Vertex AI also offers a range of tools and features for data preprocessing, model training, and deployment. For instance, Vertex AI Pipelines enables organizations to build and manage end-to-end machine learning workflows, streamlining the process of data preparation, model training, and deployment. Vertex AI Training allows businesses to train their models at scale using distributed training techniques, accelerating the development process and reducing time-to-market. Vertex AI Prediction provides low-latency, high-throughput serving of trained models, ensuring fast and efficient generation of responses in RAG applications.

To ensure the security and compliance of RAG deployments, Vertex AI integrates with GCP’s security services, such as Cloud Key Management Service (KMS) for secure key management and encryption of sensitive data. Additionally, Vertex AI’s integration with GCP’s Identity and Access Management (IAM) enables fine-grained access control, ensuring that only authorized users and services can interact with the RAG system.

Vertex AI also provides built-in monitoring and logging capabilities, allowing organizations to track the performance and usage of their RAG deployments. These features enable businesses to identify potential issues, optimize resource utilization, and ensure the cost-effectiveness of their RAG solutions.

In terms of cost optimization, Vertex AI offers flexible pricing options, such as pay-per-use and committed use discounts, allowing organizations to align costs with actual usage patterns. By leveraging Vertex AI’s autoscaling capabilities and GCP’s Cost Management tools, businesses can minimize waste and maximize cost-efficiency in their RAG deployments.

In conclusion, Vertex AI provides a comprehensive and powerful platform for deploying RAG solutions on Google Cloud Platform. With its Vertex AI Matching Engine for efficient vector similarity search, Vertex AI PaLM API for access to advanced language models, and a range of tools for data preprocessing, model training, and deployment, Vertex AI enables organizations to build robust and scalable RAG systems. By leveraging Vertex AI’s security, monitoring, and cost optimization features, businesses can ensure the long-term success and viability of their RAG deployments, delivering accurate and contextually relevant responses to user queries.

Comparative Analysis of AWS, Azure, and GCP for RAG

When comparing AWS, Azure, and GCP for deploying Retrieval Augmented Generation (RAG) solutions, each cloud provider shows a distinct set of strengths and capabilities. AWS has a comprehensive suite of offerings, with Amazon Kendra serving as a powerful enterprise retriever for RAG workflows. The fully managed Amazon Bedrock simplifies the development process by connecting foundation models to data sources, while SageMaker JumpStart accelerates implementation with prebuilt models and code examples. AWS’s flexibility allows for customized RAG architectures using services like S3, RDS, Lambda, and DynamoDB.

Azure, on the other hand, emphasizes a robust security framework and zero-trust principles with its GPT-RAG Solution Accelerator. Azure AI Search integrates with embedding models and chat models, while Azure OpenAI Service provides access to OpenAI’s language models, such as the GPT family. Azure Databricks plays a significant role in processing unstructured data and managing embeddings for RAG scenarios.

GCP’s Vertex AI stands out as a unified platform for building and scaling RAG applications. The Vertex AI Matching Engine enables efficient vector similarity search, while the Vertex AI PaLM API offers access to advanced large language models. Cloud Run’s serverless platform allows for scalable and cost-effective deployment of RAG systems, and GCP’s data storage and processing services, such as Cloud Storage and BigQuery, seamlessly integrate with RAG workflows.

In terms of security and compliance, all three cloud providers offer robust features and services. AWS provides encryption options like AWS KMS and fine-grained access control with IAM. Azure’s Key Vault secures encryption keys and secrets, while Microsoft Entra ID (formerly Azure Active Directory) handles access control. GCP’s Cloud KMS and Binary Authorization support data protection and trusted container deployment.

Monitoring and logging capabilities are also well-represented across the three platforms. AWS CloudWatch and CloudTrail, Azure Monitor and Log Analytics, and GCP’s Cloud Monitoring and Cloud Logging enable organizations to track performance, usage, and potential security events.

Cost optimization is a priority for all three providers, with flexible pricing models and cost management tools available. AWS offers pay-per-use and reserved instances, along with Cost Explorer and Budgets for monitoring and management. Azure provides pay-as-you-go and reserved instances, with Cost Management and Billing for cost control. GCP’s pay-per-use and committed use discounts, combined with cost management tools like Cloud Billing reports and budgets with alerts, help optimize costs.

Ultimately, the choice between AWS, Azure, and GCP for RAG deployment depends on an organization’s specific requirements, existing infrastructure, and familiarity with each platform. AWS’s extensive offerings and flexibility make it a strong contender for businesses seeking customization and integration with a wide range of services. Azure’s emphasis on security and seamless integration with Microsoft products may appeal to organizations already invested in the Microsoft ecosystem. GCP’s Vertex AI and serverless deployment options provide a streamlined approach for businesses prioritizing scalability and cost-effectiveness.

In conclusion, while all three cloud providers offer robust solutions for deploying RAG, each has its own strengths and unique selling points. Organizations should carefully evaluate their specific needs, security requirements, and budget constraints when selecting the most suitable platform for their RAG implementation. By leveraging the powerful tools and services provided by AWS, Azure, or GCP, businesses can harness the potential of Retrieval Augmented Generation to deliver accurate, contextually relevant, and timely responses to user queries, ultimately driving innovation and enhancing customer experiences.

Conclusion and Future Outlook

As the landscape of artificial intelligence continues to evolve, Retrieval Augmented Generation (RAG) is poised to become an increasingly crucial component in the development of accurate, contextually relevant, and timely AI systems. The adoption of RAG solutions across various industries, such as finance, healthcare, and legal services, is expected to grow significantly in the coming years, driven by the need for reliable and transparent AI-generated content.

The future of RAG deployment in the cloud looks promising, with AWS, Azure, and GCP all offering comprehensive and powerful ecosystems for building and scaling RAG applications. As these cloud providers continue to invest in their AI and machine learning capabilities, organizations can expect to see even more advanced tools and services for RAG implementation, such as improved vector search engines, more powerful language models, and streamlined integration with existing enterprise systems.

One of the key trends in the future of RAG deployment will be the increasing emphasis on security and compliance. With the growing importance of data privacy and the need to adhere to industry-specific regulations, cloud providers will likely continue to enhance their security features and services, such as encryption, access control, and monitoring capabilities. This will enable organizations to deploy RAG solutions with confidence, knowing that their sensitive data is protected and compliant with relevant standards.

Another important aspect of the future of RAG deployment is the focus on cost optimization. As businesses increasingly adopt RAG solutions, the demand for cost-effective deployment options will grow. Cloud providers are expected to respond by offering more flexible pricing models, such as consumption-based pricing and reserved instances, along with advanced cost management tools to help organizations monitor and optimize their RAG-related expenses.

The integration of RAG with other emerging technologies, such as edge computing and 5G networks, is also likely to shape the future of RAG deployment. As the volume of data generated by IoT devices and other sources continues to grow, the ability to process and analyze this data in real-time will become increasingly important. By combining RAG with edge computing and 5G, organizations can enable faster, more efficient, and more accurate decision-making, unlocking new possibilities for AI-driven innovation.

In conclusion, the future of Retrieval Augmented Generation in the cloud is bright, with AWS, Azure, and GCP all well-positioned to support the growing demand for powerful, secure, and cost-effective RAG solutions. As organizations continue to recognize the value of RAG in delivering accurate and contextually relevant AI-generated content, the adoption of these solutions is expected to accelerate, driving innovation and transforming industries. By staying informed about the latest trends and developments in RAG deployment and carefully evaluating their specific needs and requirements, businesses can make informed decisions about which cloud provider and deployment strategy best suit their goals, ensuring long-term success in the rapidly evolving world of artificial intelligence.