The support ticket queue is relentless. For every complex issue that requires a seasoned agent’s expertise, there are a dozen repetitive questions: “How do I reset my password?” “What is your return policy?” “Where can I find my invoice?” Each one pulls an agent away from high-priority tasks, leading to longer wait times across the board and mounting customer frustration. The cost isn’t just measured in wasted hours; it’s measured in customer churn and damaged brand reputation. A recent study found that 61% of consumers would switch to a competitor after just one poor customer service experience. In a world of instant gratification, waiting hours for a simple answer is a recipe for disaster.

Enter the era of automation. Traditional chatbots were supposed to be the solution, but they often created more problems than they solved. Limited by rigid, pre-programmed scripts, they falter at the slightest deviation from their designed flow, leading to the dreaded “I’m sorry, I don’t understand that” loop that infuriates users. They lack the ability to access and synthesize information from the vast, unstructured knowledge bases where companies store their most valuable support content—help-desk articles, internal wikis, and historical ticket data. This limitation makes them incapable of answering anything beyond the most basic FAQs, ultimately failing to deflect a significant volume of tickets from human agents.

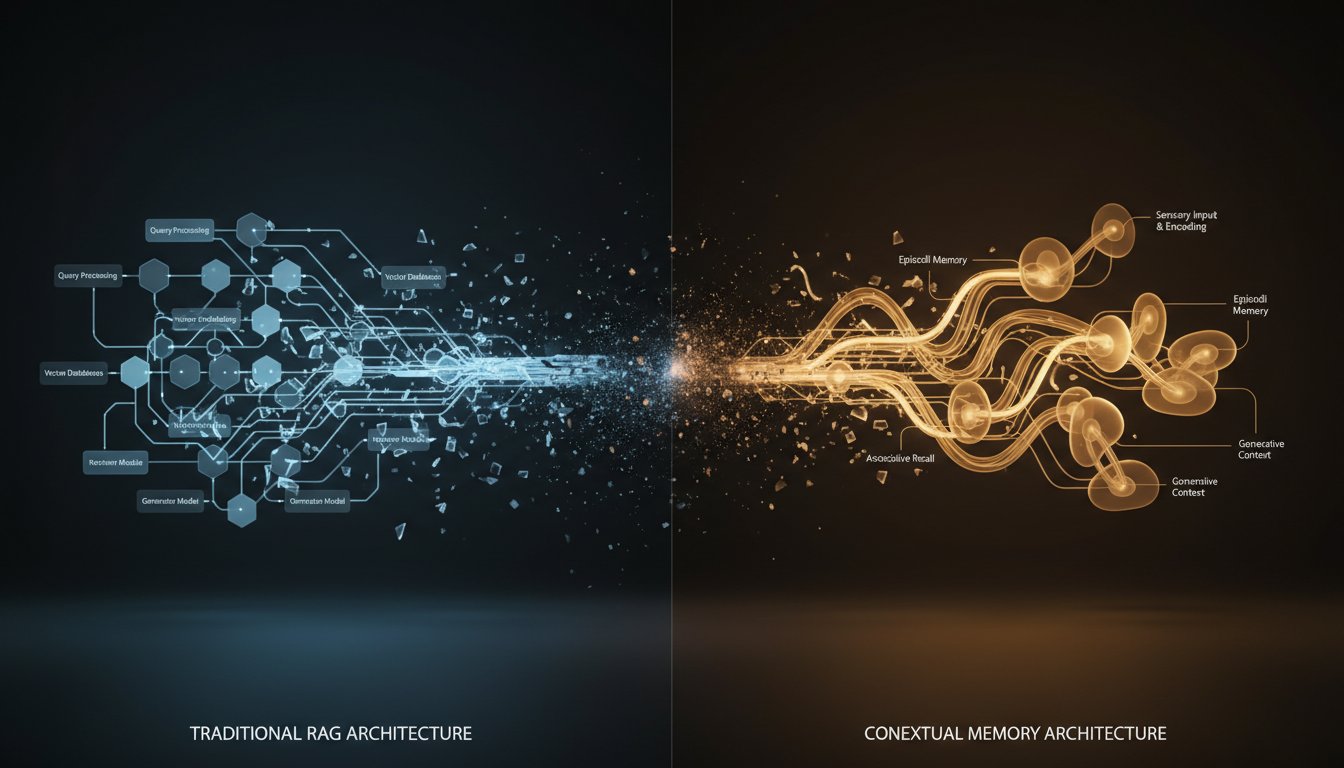

This is where a more intelligent architecture becomes essential. Imagine a system that can not only understand a customer’s query in natural language but can also instantly search your entire knowledge base for the most relevant information, synthesize a precise answer, and deliver it in a natural, human-like voice. This is the power of a Retrieval-Augmented Generation (RAG) system integrated with a leading-edge voice synthesis platform. By connecting a RAG pipeline directly into your Zendesk environment and using ElevenLabs for audio responses, you can build a Tier-1 support bot that doesn’t just answer questions—it resolves them. This article will serve as your technical blueprint. We will walk step-by-step through the process of building an AI-powered voicebot in Zendesk, detailing the architecture, the code, and the API integrations needed to transform your customer support workflow from a bottleneck into a competitive advantage.

The Architectural Blueprint: Connecting Zendesk, RAG, and ElevenLabs

Before diving into the code, it’s crucial to understand the architecture of our automated support system. This isn’t just about plugging in APIs; it’s about creating a seamless flow of data that turns a customer query into a helpful, audible response. The system marries the customer management power of Zendesk, the contextual intelligence of RAG, and the expressive voice synthesis of ElevenLabs.

Why RAG for Customer Support?

Standard Large Language Models (LLMs) are powerful, but they operate in a vacuum. They lack specific knowledge of your company’s products, policies, and procedures. This is why they often “hallucinate” or provide generic, unhelpful answers. RAG solves this problem by grounding the LLM in your reality.

By feeding the LLM with relevant, verified information retrieved from your own knowledge base, you ensure its responses are accurate and specific to your business. This approach dramatically improves First Contact Resolution (FCR) rates, with some enterprises reporting up to a 40% improvement after implementing AI-driven support tools. A RAG system turns your existing documentation into an active, intelligent resource.

System Overview

The workflow is triggered the moment a customer creates a new ticket in Zendesk:

- Zendesk Trigger: A new ticket creation fires a webhook.

- API Endpoint: A cloud function or a small web server (e.g., a Python Flask application) receives the webhook payload containing the ticket details.

- RAG Pipeline Activation: The application extracts the customer’s query from the payload and feeds it into the RAG pipeline.

- Context Retrieval: The RAG system searches a pre-indexed vector database of your company’s knowledge base to find the most relevant information chunks.

- Intelligent Generation: The original query and the retrieved context are sent to an LLM (like GPT-4 or Anthropic’s Claude), which generates a precise, context-aware text answer.

- Voice Synthesis: This text response is then sent to the ElevenLabs API.

- Audio Response: ElevenLabs generates a high-quality, natural-sounding audio file (e.g., MP3) of the response.

- Ticket Update: The application uses the Zendesk API to upload the audio file and the text transcription as a public comment on the ticket, resolving it instantly.
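The hand-offs between these stages can be sketched as a single function that takes each stage as a callable. This is only an illustration of the data flow, not the article's implementation: `handle_ticket`, `TicketReply`, and the three `fake_*` stand-ins are hypothetical names, and the stand-ins return canned values so the flow can be run without Zendesk, an LLM, or ElevenLabs.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TicketReply:
    ticket_id: str
    text: str     # transcription posted as the comment body
    audio: bytes  # synthesized speech to attach to the ticket


def handle_ticket(
    ticket_id: str,
    query: str,
    retrieve: Callable[[str], List[str]],
    generate_answer: Callable[[str, List[str]], str],
    synthesize: Callable[[str], bytes],
) -> TicketReply:
    """Run one ticket through retrieval, generation, and voice synthesis."""
    chunks = retrieve(query)               # Context Retrieval
    text = generate_answer(query, chunks)  # Intelligent Generation
    audio = synthesize(text)               # Voice Synthesis / Audio Response
    return TicketReply(ticket_id=ticket_id, text=text, audio=audio)


# Stand-in stages (hypothetical) that return canned values.
def fake_retrieve(query: str) -> List[str]:
    return ["Passwords can be reset from the login page."]


def fake_generate(query: str, chunks: List[str]) -> str:
    return f"Based on our docs: {chunks[0]}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


reply = handle_ticket(
    "123",
    "How do I reset my password?",
    fake_retrieve,
    fake_generate,
    fake_synthesize,
)
print(reply.text)
```

Passing the stages in as arguments keeps the orchestration testable on its own; the real retriever, LLM call, and ElevenLabs call built in the steps that follow can be swapped in without changing `handle_ticket`.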

Core Components and Prerequisites

To build this system, you’ll need the following:

- A Zendesk Suite account with administrator access to configure APIs and webhooks.

- An ElevenLabs account for voice generation. You can start with a free or starter plan.

- An LLM API key from a provider like OpenAI, Anthropic, or Cohere.

- Python 3.8+ installed in your development environment.

- A vector database: For this tutorial, we will use ChromaDB, a free, open-source option that runs locally. For production, consider managed services like Pinecone or Weaviate.

- Necessary Python libraries: flask, requests, langchain, openai, chromadb, tiktoken, beautifulsoup4, elevenlabs.

Step 1: Building Your RAG-Powered Knowledge Base

The ‘brain’ of our support bot is the RAG knowledge base. This involves collecting all your support documentation, breaking it down into digestible pieces, and converting it into a format (embeddings) that a machine can search efficiently.

Ingesting Your Zendesk Help Center Articles

First, we need to programmatically pull all the articles from your Zendesk Help Center. You can accomplish this using Zendesk’s API. The following Python script fetches article titles and their HTML content.

    import requests

    def fetch_zendesk_articles(domain, email, token):
        """Return every Help Center article, following pagination links."""
        # API-token auth: the username is "<email>/token", the password is the token
        credentials = (f"{email}/token", token)
        url = f"https://{domain}.zendesk.com/api/v2/help_center/articles.json"
        articles = []
        while url:
            response = requests.get(url, auth=credentials, timeout=30)
            response.raise_for_status()
            data = response.json()
            articles.extend(data['articles'])
            # 'next_page' is null on the last page, which ends the loop
            url = data.get('next_page')
        return articles

    # Usage:
    # zendesk_domain = "your_company"
    # zendesk_email = "you@example.com"
    # zendesk_api_token = "your_zendesk_api_token"
    # all_articles = fetch_zendesk_articles(zendesk_domain, zendesk_email, zendesk_api_token)

Chunking and Embedding Your Documents

LLMs have context window limitations, so we can’t feed them entire articles at once. We must break the content into smaller, semantically meaningful chunks. LangChain’s text splitters are perfect for this.

After chunking, we convert each chunk into a numerical representation called an embedding using a model from OpenAI or a similar provider. This allows us to perform similarity searches.

    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain.embeddings import OpenAIEmbeddings  # used in the next step
    from bs4 import BeautifulSoup

    # Assuming 'all_articles' is the list from the previous step
    docs = []
    for article in all_articles:
        # Strip HTML tags so only readable text is chunked and embedded
        soup = BeautifulSoup(article['body'] or '', 'html.parser')
        text_content = soup.get_text()
        docs.append({"title": article['title'], "content": text_content})

    # 1000-character chunks; consecutive chunks share 200 characters of overlap
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
    splits = text_splitter.create_documents(
        [doc['content'] for doc in docs],
        metadatas=[{"title": doc['title']} for doc in docs],
    )
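To make the `chunk_size` and `chunk_overlap` parameters concrete, here is a deliberately simplified fixed-width chunker. It is not how `RecursiveCharacterTextSplitter` works internally (that splitter prefers to break on separators such as paragraph and line boundaries); the hypothetical `chunk_text` function below only illustrates how overlap makes neighbouring chunks share text so that a sentence cut at a boundary still appears whole in one of them.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list:
    """Split text into fixed-width windows that overlap by chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


chunks = chunk_text("abcdefghij", chunk_size=4, chunk_overlap=2)
print(chunks)  # ['abcd', 'cdef', 'efgh', 'ghij']
```

Each chunk repeats the last two characters of the previous one. With the tutorial's settings, the same idea applies at a scale of 1000 characters with 200 shared.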

Setting Up Your Vector Store

With our documents chunked, we now load them into ChromaDB. ChromaDB will store the embeddings and allow for lightning-fast retrieval of the most relevant chunks based on a user’s query.

    import chromadb
    from langchain.vectorstores import Chroma

    # Initialize OpenAI embeddings model (OpenAIEmbeddings was imported in the previous snippet)
    embedding_model = OpenAIEmbeddings(openai_api_key="your_openai_api_key")

    # Create and persist the vector store
    vectorstore = Chroma.from_documents(
        documents=splits,
        embedding=embedding_model,
        persist_directory="./chroma_db_zendesk",
    )

    print("Vector store created successfully!")
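What "retrieval of the most relevant chunks" means can be shown with a toy example in plain Python. This sketch assumes cosine similarity as the comparison metric, which is one common choice; the metric a real vector store uses depends on how it is configured. The three-dimensional vectors and the sample sentences in `toy_index` are made up for illustration (real embeddings have hundreds or thousands of dimensions), and `cosine_similarity` and `top_k` are hypothetical helper names.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, indexed, k=2):
    """Return the k (score, text) pairs most similar to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in indexed]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Toy index: (chunk text, made-up embedding)
toy_index = [
    ("Reset your password from the login page.", [0.9, 0.1, 0.0]),
    ("Returns are accepted within 30 days.", [0.1, 0.9, 0.0]),
    ("Invoices are listed under Billing.", [0.0, 0.2, 0.9]),
]

# A query vector pointing in the "password" direction
results = top_k([1.0, 0.0, 0.0], toy_index, k=2)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

The password chunk scores highest because its vector points in nearly the same direction as the query. A vector database performs the same ranking, but with indexing structures that avoid comparing the query against every stored chunk.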

Step 2: Developing the Zendesk Webhook and Processing Logic

Now we’ll build the web application that listens for Zendesk events and orchestrates the RAG and voice generation process.

Creating the Zendesk Webhook Trigger

In your Zendesk Admin Center, navigate to Apps and integrations > Webhooks. Create a new webhook with the following configuration:

* Name: AI Voicebot Trigger

* Endpoint URL: This will be the URL of your deployed Flask application (e.g., https://your-app-url.com/webhook).

* Request method: POST

* Request format: JSON

Next, go to Objects and rules > Triggers. Create a new trigger:

* Conditions (Meet ALL): Ticket Is Created

* Actions: Notify active webhook, and select the webhook you just created. For the JSON body, you can send ticket information like this: {"ticket_id": "{{ticket.id}}", "query": "{{ticket.description}}"}.
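Because the trigger renders `{{ticket.id}}` and `{{ticket.description}}` into a JSON string, the receiving application should check the body before acting on it. The sketch below assumes the exact body shape shown above (`ticket_id` and `query` keys); `parse_webhook_payload` is a hypothetical helper name, and the choice to reject anything malformed with a `ValueError` is one possible policy, not a Zendesk requirement.

```python
import json


def parse_webhook_payload(raw_body: str) -> tuple:
    """Validate the trigger's JSON body and return (ticket_id, query)."""
    try:
        data = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Body is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")

    # The template sends the ID as a string, so normalise before checking it
    ticket_id = str(data.get("ticket_id", "")).strip()
    query = str(data.get("query", "")).strip()
    if not ticket_id.isdigit():
        raise ValueError("ticket_id must be a numeric string")
    if not query:
        raise ValueError("query must not be empty")
    return int(ticket_id), query


parsed = parse_webhook_payload('{"ticket_id": "42", "query": " Where is my invoice? "}')
print(parsed)  # (42, 'Where is my invoice?')
```

Failing early on an incomplete body keeps an empty or malformed query from reaching the LLM and producing a misleading answer on the ticket.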

The Core Python Flask Application

This Flask app will serve as the central hub. It exposes an endpoint to receive the webhook, runs the RAG query, and coordinates with ElevenLabs and Zendesk to post the response.

    from flask import Flask, request, jsonify
    import openai

    app = Flask(__name__)

    # ... (load vectorstore and set up clients for OpenAI, ElevenLabs, Zendesk)
    retriever = vectorstore.as_retriever()

    def query_rag(query_text: str) -> str:
        """Retrieve relevant chunks and ask the LLM to answer from them only."""
        retrieved_docs = retriever.get_relevant_documents(query_text)
        context = "\n\n".join([doc.page_content for doc in retrieved_docs])

        prompt = (
            "You are an expert customer support agent.\n"
            "Answer the user's question based ONLY on the following context:\n\n"
            f"Context:\n{context}\n\n"
            f"Question: {query_text}\n\n"
            "Answer:"
        )

        # Note: openai.ChatCompletion is the interface of openai package versions before 1.0
        response = openai.ChatCompletion.create(
            model="gpt-4-turbo",
            messages=[
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": prompt},
            ],
        )
        return response.choices[0].message.content

    @app.route('/webhook', methods=['POST'])
    def zendesk_webhook():
        # Reject bodies that are not JSON or that lack the expected fields
        data = request.get_json(silent=True) or {}
        ticket_id = data.get('ticket_id')
        user_query = data.get('query')
        if not ticket_id or not user_query:
            return jsonify({"status": "error", "message": "ticket_id and query are required"}), 400

        # Generate text response using RAG
        text_response = query_rag(user_query)

        # ... (code to generate voice with ElevenLabs and post to Zendesk will go here)

        return jsonify({"status": "success"}), 200

    if __name__ == '__main__':
        app.run(debug=True)  # debug mode is for local development only

Error Handling and Fallbacks

In a production system, not every query will have a clear answer in the knowledge base. It is critical to build a fallback. If the RAG system returns a low-confidence score or a generic “I can’t answer that,” your code should not generate a voice response. Instead, it should use the Zendesk API to post an internal note, assign the ticket to a human support group, and update its status, ensuring a seamless handover.
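One way to express this fallback is a small decision function plus a builder for the ticket update. This is a sketch under several assumptions: `needs_human`, `build_escalation_payload`, and `REFUSAL_MARKERS` are hypothetical names; `best_score` is assumed to be a retrieval similarity between 0 and 1 where higher is better (how that score is obtained depends on the vector store); and the 0.75 threshold and the group ID are placeholder values to tune for your own data. The payload uses the ticket fields `comment.public`, `group_id`, and `status`.

```python
# Phrases that suggest the model declined to answer (hypothetical list; extend as needed)
REFUSAL_MARKERS = ("i can't answer", "i cannot answer", "i don't know")


def needs_human(answer: str, best_score: float, min_score: float = 0.75) -> bool:
    """Return True when the ticket should be handed to a human agent."""
    text = answer.strip().lower().replace("\u2019", "'")  # normalise curly apostrophes
    if not text:
        return True  # empty answer
    if best_score < min_score:
        return True  # retrieval found nothing sufficiently relevant
    return any(marker in text for marker in REFUSAL_MARKERS)


def build_escalation_payload(answer_draft: str, group_id: int) -> dict:
    """Build a ticket update: internal note, reassignment, and open status."""
    return {
        "ticket": {
            "comment": {
                "body": f"AI bot could not answer confidently. Draft:\n{answer_draft}",
                "public": False,  # internal note, not visible to the customer
            },
            "group_id": group_id,  # human support group to take over
            "status": "open",
        }
    }


escalate = needs_human("I can't answer that.", best_score=0.9)
payload = build_escalation_payload("I can't answer that.", group_id=12345)
print(escalate, payload["ticket"]["status"])
```

In the webhook, this check would run right after the RAG call: when `needs_human` returns `True`, the application sends the escalation payload to the ticket update endpoint and skips voice generation entirely.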

Step 3: Integrating ElevenLabs for Lifelike Voice Responses

This final step brings our bot to life. We will take the text generated by the RAG system and convert it into a natural-sounding audio file using the ElevenLabs API.

Connecting to the ElevenLabs API

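The ElevenLabs Python library makes this simple. After installing it (pip install elevenlabs), you register your API key once and can then call the generation helpers. Note that the module-level helpers used here (generate, set_api_key, save) belong to earlier releases of the package; newer releases are organized around a client object, so pin a version that matches this code.

    from elevenlabs import generate, set_api_key, save

    # Register the API key once at startup; later generate() calls use it
    set_api_key("your_elevenlabs_api_key")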

You can experiment with different pre-made voices or even clone your own for a truly branded experience. To get started with high-quality AI voices and explore these options, you can try ElevenLabs for free now.

Generating and Attaching the Audio Response

Now, let’s complete our webhook logic. We’ll generate the audio, save it temporarily, and then use the Zendesk API to upload it as a comment on the ticket.

    import requests

    # Module-level helper: upload the audio to Zendesk and update the ticket.
    # Assumes 'zendesk_domain' and 'credentials' (the (f"{email}/token", token)
    # tuple from Step 1) are defined at module level.
    def post_zendesk_comment_with_audio(ticket_id, text, audio_path):
        # First, upload the audio file to get a token
        upload_url = f"https://{zendesk_domain}.zendesk.com/api/v2/uploads.json?filename=response.mp3"
        headers = {'Content-Type': 'application/binary'}
        with open(audio_path, 'rb') as f:
            upload_response = requests.post(upload_url, data=f, headers=headers, auth=credentials)
        upload_response.raise_for_status()
        upload_token = upload_response.json()['upload']['token']

        # Now, update the ticket with the comment and attachment
        update_url = f"https://{zendesk_domain}.zendesk.com/api/v2/tickets/{ticket_id}.json"
        payload = {
            "ticket": {
                "comment": {
                    "body": text,
                    "public": True,
                    "uploads": [upload_token]
                },
                "status": "solved"
            }
        }
        update_response = requests.put(update_url, json=payload, auth=credentials)
        update_response.raise_for_status()

    # Inside the zendesk_webhook function, after generating text_response:

        # 1. Generate audio from text
        audio_response = generate(
            text=text_response,
            voice="Bella",  # Choose a voice
            model="eleven_multilingual_v2"
        )
        audio_file_path = f"/tmp/response_{ticket_id}.mp3"
        save(audio_response, audio_file_path)

        # 2. Upload audio to Zendesk and update the ticket
        post_zendesk_comment_with_audio(ticket_id, text_response, audio_file_path)

With that final piece of code, your system is complete. When a new ticket arrives, it will be automatically processed, answered, and resolved with a helpful voice note in minutes.

By following this guide, you have architected a significant enhancement to your customer support infrastructure. We’ve moved beyond static FAQs and rigid chatbots to create a dynamic, intelligent system that leverages your own knowledge base to provide instant, accurate resolutions. This system doesn’t just close tickets faster; it elevates the customer experience. By automating Tier-1 support, you empower your human agents to apply their skills to the complex, nuanced problems where they create the most value.

Remember that frustrated customer waiting hours for a simple answer? Imagine that same customer receiving a helpful, clear, spoken answer to their question in under a minute instead. That’s the power you’ve just built. This isn’t just an efficiency gain; it’s a fundamental shift in how you interact with your customers. To explore the voice generation that makes this possible, sign up for ElevenLabs and start redefining your customers’ support experience today.