A new user, let’s call her Chloe, signs up for your groundbreaking SaaS platform. She’s navigating the dashboard, filled with the initial excitement of discovering a tool that could solve her biggest problems. But that excitement quickly wanes. She’s met with a generic, text-heavy onboarding tour that feels like a user manual dropped in her lap. The pop-ups point to features, but they don’t explain why they matter to her. Within ten minutes, Chloe’s enthusiasm turns to confusion and then to apathy. She closes the tab, and the likelihood of her returning plummets. This scenario is a silent killer for growth, a recurring nightmare for product managers and customer success teams who watch their acquisition efforts evaporate into churn statistics. The critical window to deliver the “aha moment” is painfully short, and impersonal, one-size-fits-all onboarding flows simply aren’t cutting it.

The core challenge is scalability versus personalization. Live, one-on-one onboarding is highly effective but financially and logistically impossible to scale. Automated tours are scalable but often fail to connect with users or address their unique questions and goals. They can’t adapt. If a user wonders, “How does this feature help a marketing manager specifically?” the canned tour has no answer. This is where a paradigm shift is needed: away from static guides and toward dynamic, interactive conversations. Imagine Chloe’s experience if, instead of a tooltip, she were greeted by a friendly AI avatar who not only introduces a feature but also asks if she has questions. When she types, “How can I use this to track campaign ROI?”, the avatar provides a concise, spoken explanation, generated on demand from her specific query.

This isn’t science fiction. This is the power of combining a conversational AI platform like Intercom, a dynamic AI video generator like HeyGen, and a Retrieval-Augmented Generation (RAG) system as the intelligent core. By architecting these technologies together, you can create an onboarding experience that is both scalable and deeply personal. It transforms onboarding from a passive tutorial into an active, engaging dialogue that responds to user needs, which can raise activation rates and reduce early-stage churn. This article provides a technical walkthrough for building this system. We will deconstruct the architecture, build the RAG knowledge engine, integrate HeyGen’s API for on-the-fly video creation, and wire everything into Intercom to trigger a next-generation onboarding flow.

The Architectural Blueprint: Connecting Intercom, HeyGen, and Your RAG Brain

To build a truly dynamic system, you need specialized tools that excel at their specific tasks. Our architecture relies on a trio of powerful platforms: Intercom for user context and delivery, HeyGen for engaging video avatars, and a custom RAG pipeline to serve as the system’s brain. The synergy between these three components is what makes the magic happen.

Why This Trio? The Synergy Explained

- Intercom: Serves as our frontline. It’s not just a chat tool; it’s a rich customer communications platform that knows who the user is, what plan they are on, and where they are in their journey. We leverage it to trigger the experience and deliver the final video message directly within the product.

- HeyGen: This is our presentation layer. Instead of plain text, HeyGen allows us to generate a video of a realistic AI avatar speaking a script. This adds a human touch that is far more engaging than text-based responses. You can sign up and explore its capabilities for free.

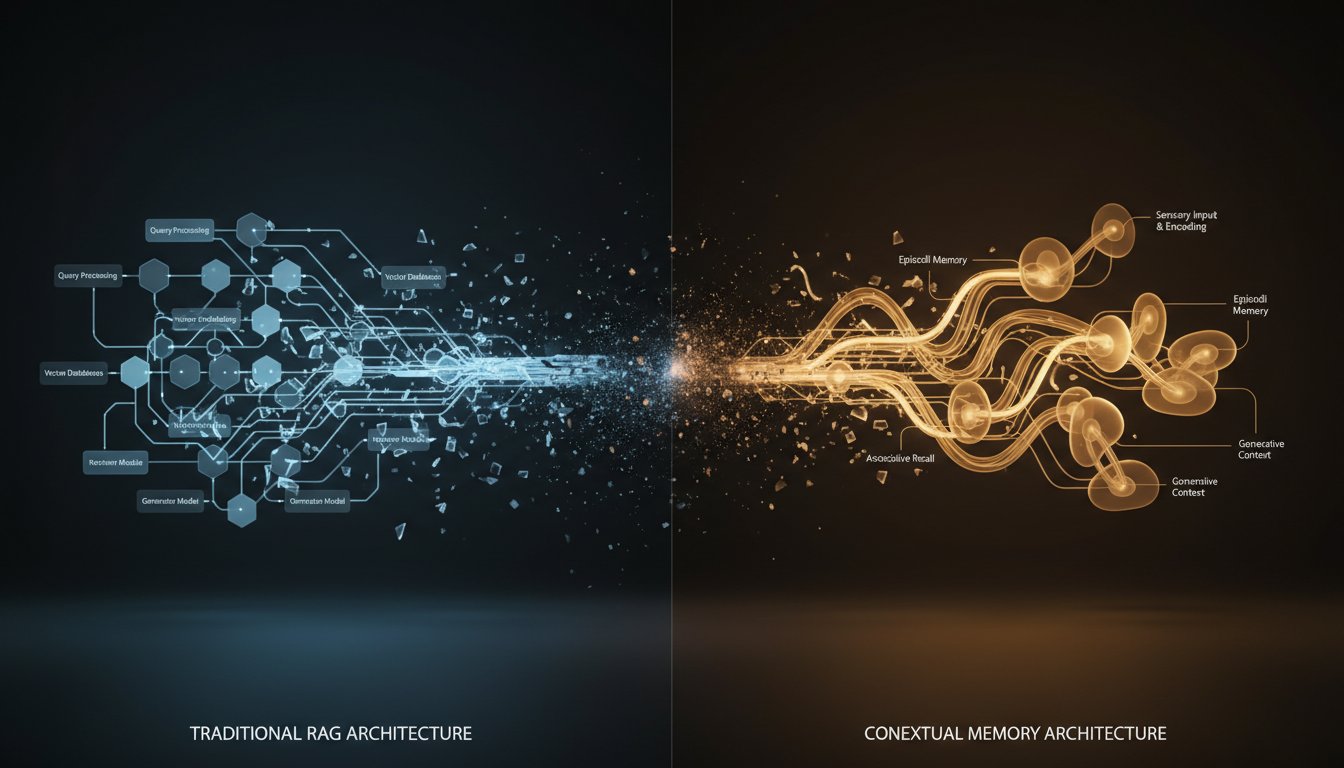

- RAG System: This is the core intelligence. When a user asks a question, we don’t want a generic LLM response. The RAG system retrieves the most relevant, up-to-date information from your actual product documentation, tutorials, and help articles, ensuring the avatar provides accurate, context-aware answers.

System Flow Diagram

The entire process, from user query to video response, flows logically through our architecture. Here’s a step-by-step breakdown:

- Trigger: The user reaches a specific step in an Intercom Product Tour or asks a question via the Intercom Messenger.

- Webhook: Intercom fires a webhook containing the user’s query and relevant metadata (like their role or company) to a middleware endpoint.

- Query RAG: This middleware—a serverless function (e.g., AWS Lambda)—takes the query and sends it to our RAG system.

- Generate Script: The RAG system retrieves relevant context from a vector database and uses an LLM to generate a concise, helpful script for the avatar.

- Call HeyGen API: The serverless function sends this script to the HeyGen API to initiate video generation.

- Receive Video URL: HeyGen processes the request asynchronously. Once the video is ready, it provides a public URL.

- Reply in Intercom: The function takes the video URL and uses the Intercom API to post it back to the user as a reply, closing the loop.
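The steps above can be sketched as a small orchestrator. This is a minimal sketch, not the definitive implementation: `OnboardingPipeline` and its three injected callables are hypothetical names, and each stage is passed in as a function so the flow can be exercised with stand-ins before any real API is wired up.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class OnboardingPipeline:
    """Wires the stages together; each stage is injected as a callable (hypothetical names)."""
    generate_script: Callable[[str], str]    # RAG step: query -> script
    create_video: Callable[[str], str]       # HeyGen step: script -> video URL
    send_reply: Callable[[str, str], None]   # Intercom step: (conversation_id, message)

    def handle(self, conversation_id: str, query: str) -> str:
        script = self.generate_script(query)
        try:
            video_url = self.create_video(script)
            message = f"Here is a short video that explains it: {video_url}"
        except Exception:
            # Fall back to the plain-text script so the user still gets an answer.
            message = script
        self.send_reply(conversation_id, message)
        return message


# Usage with stand-in stages (no network calls are made here).
sent = []
pipeline = OnboardingPipeline(
    generate_script=lambda q: f"Answer to: {q}",
    create_video=lambda s: "https://example.com/video.mp4",
    send_reply=lambda cid, msg: sent.append((cid, msg)),
)
pipeline.handle("conv_1", "How do I track campaign ROI?")
```

Keeping the stages injectable also gives a natural place for the text fallback: if video generation fails, the user still receives the script as a normal chat reply.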

Core Components You’ll Need

Before we start building, make sure you have the following ready:

- An Intercom account with API access.

- A HeyGen account and an API key. You can get one from your account settings after signing up.

- A vector database (e.g., Pinecone, Weaviate, or Qdrant).

- An LLM API key (e.g., from OpenAI, Cohere, or Anthropic).

- A serverless function environment like AWS Lambda, Google Cloud Functions, or Vercel.
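With several credentials in play, it can help to load them once and fail early if any is missing. The sketch below assumes the keys are supplied as environment variables; the variable names and the `Settings` class are illustrative choices, not names required by any of these services.

```python
import os
from dataclasses import dataclass

# Hypothetical environment variable names; use whatever your deployment defines.
REQUIRED_VARS = (
    "INTERCOM_ACCESS_TOKEN",
    "HEYGEN_API_KEY",
    "PINECONE_API_KEY",
    "OPENAI_API_KEY",
)


@dataclass(frozen=True)
class Settings:
    intercom_access_token: str
    heygen_api_key: str
    pinecone_api_key: str
    openai_api_key: str


def load_settings(env=None) -> Settings:
    """Read all required keys, raising one error that lists every missing name."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return Settings(**{name.lower(): env[name] for name in REQUIRED_VARS})
```

Calling `load_settings()` at cold start surfaces a misconfigured function immediately instead of partway through a user's request.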

Step 1: Building the RAG Knowledge Engine

The “brain” of our operation is the RAG system. Its quality directly determines the quality of the answers your AI avatar will provide. A well-built RAG pipeline ensures responses are not only relevant but also factually grounded in your specific knowledge base.

Curating Your Onboarding Knowledge Base

Garbage in, garbage out. The first step is to gather and clean the source material for your RAG system. This includes:

- Product Documentation

- Help Center Articles

- Step-by-Step Tutorials

- Customer FAQs

- Best Practice Guides and Blog Posts

Organize this content into clean, plain text or Markdown files. The clearer and more structured your source data, the better your retrieval results will be.

Setting Up Your Vector Database

Next, we need to convert this text-based knowledge into a format that a machine can search semantically—vectors. We’ll use a vector database like Pinecone for this.

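First, chunk your documents into smaller, digestible pieces. A good rule of thumb is paragraphs or sections of 200-300 words. Then use an embedding model (like all-MiniLM-L6-v2 from the Sentence-Transformers library) to create a vector embedding for each chunk. Keep in mind that all-MiniLM-L6-v2 truncates input beyond 256 word pieces by default, so text past that limit is ignored; with this model, use shorter chunks or pick a model with a longer input window.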

Here’s a conceptual Python snippet of what this looks like:

from sentence_transformers import SentenceTransformer
from pinecone import Pinecone

# Initialize the embedding model and connect to an existing Pinecone index.
# The index must have dimension 384 to match all-MiniLM-L6-v2's output.
pc = Pinecone(api_key="YOUR_PINECONE_API_KEY")
model = SentenceTransformer('all-MiniLM-L6-v2')
index = pc.Index("onboarding-knowledge-base")

# documents = your list of curated text chunks
for i, doc_chunk in enumerate(documents):
    # Embed the chunk and store the original text alongside the vector
    embedding = model.encode(doc_chunk).tolist()
    metadata = {'text': doc_chunk}
    index.upsert(vectors=[(f'doc_{i}', embedding, metadata)])
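The `documents` list in the snippet above has to come from somewhere. Here is one possible way to produce it: a simple word-based chunker with overlap. The function name `chunk_text` and the default sizes are assumptions for illustration; splitting on headings or paragraphs may suit your content better.

```python
def chunk_text(text: str, max_words: int = 200, overlap: int = 30) -> list[str]:
    """Split text into chunks of at most max_words words, where consecutive
    chunks share `overlap` words so sentences cut at a boundary keep some context."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once this chunk has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


# Example: build the list of chunks from a few raw articles (placeholder text).
raw_articles = ["First help article text ...", "Second help article text ..."]
documents = [chunk for article in raw_articles for chunk in chunk_text(article)]
```

The overlap is a trade-off: it costs some extra storage but reduces the chance that an answer is split across two chunks that never get retrieved together.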

The Retrieval and Generation Pipeline

With your knowledge base indexed, you can now build the function that finds context and generates the script. When a user query comes in, you first embed the query using the same model, then search your Pinecone index for the most similar vectors (i.e., the most relevant chunks of text).

from openai import OpenAI

client = OpenAI(api_key="YOUR_OPENAI_API_KEY")

def generate_script_from_rag(query: str) -> str:
    # 1. Embed the user's query with the same model used for indexing
    query_embedding = model.encode(query).tolist()

    # 2. Retrieve relevant context from Pinecone
    results = index.query(vector=query_embedding, top_k=3, include_metadata=True)
    context = "\n".join([res['metadata']['text'] for res in results['matches']])

    # 3. Build a prompt for the LLM
    prompt = f"""You are an AI assistant for a SaaS product.
Based on the following context, answer the user's question concisely.
Context: {context}
Question: {query}
Answer: """

    # 4. Generate the script using the LLM.
    # Uses the Chat Completions API (openai>=1.0); the model name is only an
    # example, so swap in whichever chat model you have access to.
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}],
        max_tokens=150
    )
    return response.choices[0].message.content.strip()

This function is the core of your RAG engine. It takes a raw question and returns a polished script ready for our AI avatar.
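Since the webhook can carry metadata such as the user's role, the prompt can be tailored to it. The following is a minimal sketch of a prompt builder; `build_prompt`, its parameters, and the wording of the instructions are assumptions for illustration, not a prescribed template.

```python
from typing import Optional, Sequence


def build_prompt(
    query: str,
    context_chunks: Sequence[str],
    role: Optional[str] = None,
    max_words: int = 80,
) -> str:
    """Assemble an LLM prompt from retrieved chunks, optionally framed for a user role."""
    audience = f"The user is a {role}. Frame the answer for that role.\n" if role else ""
    context = (
        "\n---\n".join(context_chunks)
        if context_chunks
        else "(no relevant documentation found)"
    )
    return (
        "You are an onboarding assistant for a SaaS product.\n"
        f"{audience}"
        "Answer using only the context below. "
        "If the context does not cover the question, say so.\n"
        f"Keep the answer under {max_words} words so it works as a spoken script.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


prompt = build_prompt(
    "How can I use this to track campaign ROI?",
    ["Reports can be filtered by campaign."],
    role="marketing manager",
)
```

Capping the word count matters more here than in a text bot, because every extra sentence adds to video length and generation time.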

Step 2: Integrating HeyGen for Dynamic Video Creation

Now that we have a script, it’s time to bring it to life with HeyGen. Static text in a chat box is good, but a video of a friendly avatar speaking directly to the user can be far more engaging. Survey data points the same way: according to a Wyzowl report, 73% of people prefer to learn about a product or service by watching a video.

Getting Started with the HeyGen API

Your first step is to get your HeyGen API key. After creating an account, you can find the key in your project settings. This key will authenticate your requests. While you’re there, browse the available stock avatars or even create a custom one to match your brand’s voice and style.

Crafting the API Call

HeyGen’s API makes it simple to generate a video from text. You’ll make a POST request to their video generation endpoint, specifying the avatar you want to use, the voice, and the script we generated from our RAG system.

Here’s an example Python function to call the API:

import requests
import time

HEYGEN_API_KEY = "YOUR_HEYGEN_API_KEY"
HEADERS = {"X-Api-Key": HEYGEN_API_KEY, "Content-Type": "application/json"}

def create_heygen_video(script: str) -> str:
    payload = {
        "video_inputs": [
            {
                "character": {
                    "type": "avatar",
                    "avatar_id": "YOUR_AVATAR_ID",
                    "scale": 1.0
                },
                "voice": {
                    "type": "text",
                    "input_text": script,
                    "voice_id": "YOUR_VOICE_ID"  # The voice to speak the script, from your HeyGen account
                }
            }
        ],
        "test": True,  # Set to False for production
        "aspect_ratio": "16:9"
    }

    # Initiate video generation
    response = requests.post("https://api.heygen.com/v2/video/generate", json=payload, headers=HEADERS, timeout=30)
    response.raise_for_status()  # Surface HTTP errors instead of failing on a missing key
    video_id = response.json()['data']['video_id']

    # Poll for video status
    video_url = poll_for_video_url(video_id)
    return video_url

Handling Asynchronous Video Generation

Video creation isn’t instantaneous. When you call the HeyGen API, it queues a generation job and immediately returns a video_id, so the initial response never contains the finished video. Instead, you periodically poll the status endpoint using the video_id until the status is "completed". At that point, the response contains the URL of the finished video.

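def poll_for_video_url(video_id: str, max_attempts: int = 30) -> str:
    # Status lookups use the v1 video_status.get endpoint; confirm the path in HeyGen's API reference.
    status_url = "https://api.heygen.com/v1/video_status.get"
    for _ in range(max_attempts):
        status_response = requests.get(status_url, params={"video_id": video_id}, headers=HEADERS, timeout=30)
        status_response.raise_for_status()
        status_data = status_response.json()['data']
        if status_data['status'] == 'completed':
            return status_data['video_url']
        elif status_data['status'] == 'failed':
            raise Exception("HeyGen video generation failed.")
        time.sleep(10)  # Wait 10 seconds before checking again
    # Give up rather than loop forever inside a serverless function
    raise TimeoutError("HeyGen video was not ready in time.")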
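A fixed 10-second wait is easy to read, but a growing delay with an overall deadline is often kinder to both the API and your function's time budget. Below is a sketch of that variant. `poll_until_complete` and its parameters are hypothetical, and the status lookup is injected as a callable so the loop can be tested without calling HeyGen; it assumes the lookup returns a dict with `status` and, when finished, `video_url`.

```python
import time
from typing import Callable


def poll_until_complete(
    fetch_status: Callable[[], dict],
    timeout_s: float = 300.0,
    initial_delay_s: float = 5.0,
    max_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll with a growing delay until the job completes, fails, or the deadline passes."""
    deadline = time.monotonic() + timeout_s
    delay = initial_delay_s
    while True:
        data = fetch_status()
        status = data.get("status")
        if status == "completed":
            return data["video_url"]
        if status == "failed":
            raise RuntimeError(f"Video generation failed: {data.get('error')}")
        # Stop if the next wait would run past the deadline.
        if time.monotonic() + delay > deadline:
            raise TimeoutError(f"Video not ready after {timeout_s} seconds")
        sleep(delay)
        delay = min(delay * 1.5, max_delay_s)


# Usage with canned responses standing in for the status endpoint.
fake_responses = iter([
    {"status": "processing"},
    {"status": "completed", "video_url": "https://example.com/video.mp4"},
])
url = poll_until_complete(lambda: next(fake_responses), sleep=lambda s: None)
```

In production, `fetch_status` would wrap the real status request for a given `video_id`.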

Step 3: Triggering a RAG-Powered Avatar in Intercom

With our RAG engine producing scripts and our HeyGen integration creating videos, the final piece is connecting it all to the user experience in Intercom. This is handled by our middleware serverless function, which acts as the central orchestrator.

Setting Up Intercom Webhooks

In your Intercom developer settings, create a new app and configure a webhook. You’ll want this webhook to subscribe to topics relevant to onboarding, such as conversation.user.created (when a user starts a new conversation), conversation.user.replied (when a user replies in an existing one), or custom events you trigger from within an Intercom Product Tour. Point this webhook to the URL of your serverless function.
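Because the endpoint is publicly reachable, it is worth checking that each request really came from Intercom before doing any work. The sketch below assumes Intercom signs the raw request body with HMAC-SHA1 using your app's client secret and sends the result in an `X-Hub-Signature` header as `sha1=<hex digest>`; treat that scheme as an assumption and confirm it against Intercom's webhook documentation. The function name is hypothetical.

```python
import hashlib
import hmac


def is_valid_signature(raw_body: bytes, signature_header: str, client_secret: str) -> bool:
    """Recompute the HMAC of the raw body and compare it to the header in constant time."""
    digest = hmac.new(client_secret.encode("utf-8"), raw_body, hashlib.sha1).hexdigest()
    expected = f"sha1={digest}"
    return hmac.compare_digest(
        expected.encode("utf-8"),
        (signature_header or "").encode("utf-8"),
    )


# Example with made-up values: sign a body, then verify it.
secret = "example-client-secret"
body = b'{"topic": "conversation.user.created"}'
header = "sha1=" + hmac.new(secret.encode("utf-8"), body, hashlib.sha1).hexdigest()
ok = is_valid_signature(body, header, secret)
```

Verify against the raw bytes of the request, not a re-serialized JSON object, since any change in whitespace or key order changes the digest.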

The Middleware Glue: Using a Serverless Function

This function is the heart of the integration. It receives the webhook, processes the data, and calls all our other services in the correct sequence. Below is a skeleton of what this function would look like, for example, on AWS Lambda.

import json

# This would be your main handler in a serverless function (e.g., AWS Lambda)
def intercom_webhook_handler(event, context):
    # 1. Parse the incoming webhook from Intercom
    intercom_payload = json.loads(event['body'])
    user_query = intercom_payload['data']['item']['source']['body']  # Example path; the body arrives as HTML
    conversation_id = intercom_payload['data']['item']['id']  # Example path

    # 2. Call the RAG engine to generate a script
    script = generate_script_from_rag(user_query)

    # 3. Call HeyGen to create the video
    try:
        video_url = create_heygen_video(script)
    except Exception as e:
        # Handle error - maybe post a text fallback message
        print(e)
        return {"statusCode": 500}

    # 4. Use the Intercom API to post the video back to the user
    post_video_to_intercom(conversation_id, video_url)
    return {"statusCode": 200, "body": "Success"}

def post_video_to_intercom(conversation_id, video_url):
    # Code to call the Intercom API to reply to the conversation
    # The message body would contain the video_url, perhaps with some introductory text
    # e.g., "Here is a short video that explains it: [video_url]"
    pass
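To make the reply step more concrete, here is a sketch of how the Intercom call might be assembled. It assumes the conversation reply endpoint (`POST /conversations/{id}/reply`) with an admin-authored comment and a bearer access token; `build_reply_request`, `strip_html`, and the admin ID are illustrative, and the payload should be checked against Intercom's API reference. The request is only built here, not sent.

```python
import html
import json
import re
import urllib.request

INTERCOM_REPLY_URL = "https://api.intercom.io/conversations/{conversation_id}/reply"


def strip_html(body: str) -> str:
    """Reduce an HTML message body (e.g. '<p>Hi</p>') to plain text for the RAG query."""
    text = re.sub(r"<[^>]+>", " ", body)
    return " ".join(html.unescape(text).split())


def build_reply_request(
    conversation_id: str, video_url: str, admin_id: str, access_token: str
) -> urllib.request.Request:
    """Build (but do not send) the admin reply that carries the video link."""
    payload = {
        "message_type": "comment",
        "type": "admin",
        "admin_id": admin_id,  # the teammate or bot the reply is sent as
        "body": f'Here is a short video that explains it: <a href="{video_url}">watch the video</a>',
    }
    return urllib.request.Request(
        INTERCOM_REPLY_URL.format(conversation_id=conversation_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


request = build_reply_request("123", "https://example.com/video.mp4", "admin_1", "TOKEN")
# To send it: urllib.request.urlopen(request, timeout=10)
```

Separating request construction from sending keeps the payload easy to inspect in tests, and `strip_html` covers the case where the incoming question arrives wrapped in paragraph tags.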

Creating the Final User Experience in Intercom

In Intercom, design your Product Tour. At a key decision point, instead of a simple tooltip, you can create a step that says, “Have a question about this feature? Ask away!” When the user replies, the webhook fires. You can even have the bot respond first with, “Great question! Let me create a personalized video explanation for you. One moment…” This sets expectations while the backend processes the request. A minute later, a new message appears in the chat from your bot, containing the custom-generated HeyGen video. The experience is seamless, responsive, and deeply impressive.
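The "one moment" pattern described above implies two phases: acknowledge right away, then do the slow work separately. Webhook senders generally expect a quick response, while video generation can take a minute or more. The sketch below illustrates the split with an in-memory `queue.Queue` standing in for a durable queue such as SQS; all function names are hypothetical.

```python
import queue
from typing import Callable

ACK_MESSAGE = (
    "Great question! Let me create a personalized video explanation for you. One moment..."
)


def acknowledge_and_enqueue(
    conversation_id: str,
    query: str,
    send_reply: Callable[[str, str], None],
    job_queue: "queue.Queue[dict]",
) -> dict:
    """Fast path: store the job, tell the user we are working on it, return at once."""
    job_queue.put({"conversation_id": conversation_id, "query": query})
    send_reply(conversation_id, ACK_MESSAGE)
    return {"statusCode": 200, "body": "queued"}


def process_next_job(
    job_queue: "queue.Queue[dict]",
    answer_with_video: Callable[[str], str],
    send_reply: Callable[[str, str], None],
) -> bool:
    """Slow path: run one queued job (RAG + video) and post the result. Returns False if idle."""
    try:
        job = job_queue.get_nowait()
    except queue.Empty:
        return False
    send_reply(job["conversation_id"], answer_with_video(job["query"]))
    return True


# Usage with stand-ins for the Intercom reply call and the RAG + video step.
outbox = []
jobs = queue.Queue()
acknowledge_and_enqueue("conv_1", "How do I track ROI?", lambda c, m: outbox.append((c, m)), jobs)
process_next_job(jobs, lambda q: f"Video for: {q}", lambda c, m: outbox.append((c, m)))
```

In a real deployment the second function would run in a separate worker or a second serverless function triggered by the queue, so the webhook handler itself stays fast.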

This system transforms onboarding from a static monologue into a dynamic, personalized dialogue. We’ve moved beyond simple text-based bots to a scalable solution that delivers the high-touch feel of a personal guide. We started with the story of Chloe, a user on the brink of churning due to a confusing, impersonal onboarding process. By implementing this RAG and HeyGen-powered system, you change her journey entirely. Instead of closing the tab, Chloe gets an instant, custom video from an AI avatar that answers her exact question about tracking campaign ROI. She feels seen and understood, the “aha moment” is delivered, and she is successfully converted from a tentative new user into an engaged, activated customer.

Ready to transform your user onboarding from a static checklist into a dynamic conversation? The first step is creating your AI avatar and exploring what’s possible. Sign up for HeyGen and start building for free today.