The digital hum of a thousand conversations fills the void, a constant stream of notifications, questions, and updates. This is the reality of enterprise communication platforms like Slack. While they are indispensable for collaboration, they often become victims of their own success—transforming into chaotic digital libraries where critical information is buried under layers of chatter, memes, and status updates. Finding a specific answer to a technical question or clarifying a process detail can feel like a frantic archeological dig through endless channels. The search bar helps, but it lacks context, often surfacing dozens of irrelevant mentions before you find the one nugget of truth you need. This relentless information overload isn’t just an annoyance; it’s a significant drain on productivity, forcing skilled employees to spend valuable time searching instead of doing.

The typical solution has been to deploy a chatbot. Yet, most bots are glorified FAQ machines, responding with pre-programmed answers or, if they are more advanced, a block of text that can easily get lost in the fast-scrolling channel feed. The fundamental challenge remains: how do you deliver precise, contextually aware information in a way that truly cuts through the noise and commands attention? The answer doesn’t lie in more text, but in a different medium entirely. Imagine asking a complex question in a busy Slack channel and, moments later, receiving a response not as another message to read, but as a crystal-clear, human-like voice speaking the exact answer, synthesized directly from your company’s internal documentation. This is not science fiction; it is the power of combining Retrieval-Augmented Generation (RAG) with advanced voice synthesis, deployed on a scalable cloud architecture.

This article provides a complete technical walkthrough for building such a system. We will guide you step-by-step through the process of creating a voice-enabled RAG bot for Slack. You will learn how to configure a robust RAG pipeline using LangChain to intelligently query your knowledge base, integrate the ElevenLabs API to generate lifelike speech, and deploy the entire solution on AWS for enterprise-grade performance and scalability. Prepare to transform your Slack workspace from a source of digital noise into an intelligent, interactive, and audible knowledge hub.

The Strategic Advantage of Voice in Enterprise Communication

Before diving into the code, it’s crucial to understand why integrating voice is a strategic upgrade, not just a novelty. In a world saturated with visual and text-based information, audio offers a uniquely effective communication channel.

Beyond Text: How Audio Cuts Through the Noise

Text-based chatbot responses compete for attention in a visually crowded space. An audio file, however, is a distinct and engaging artifact in a Slack channel. It provides a more personal, human touch, which can increase engagement and information retention. Furthermore, audio is accessible—it allows for passive consumption, enabling employees to listen to an answer while performing other tasks. It breaks the monotony of text and delivers information with a clarity and nuance that flat text often lacks. The goal is to make accessing knowledge as frictionless as possible, and a spoken answer is often the most direct path from query to comprehension.

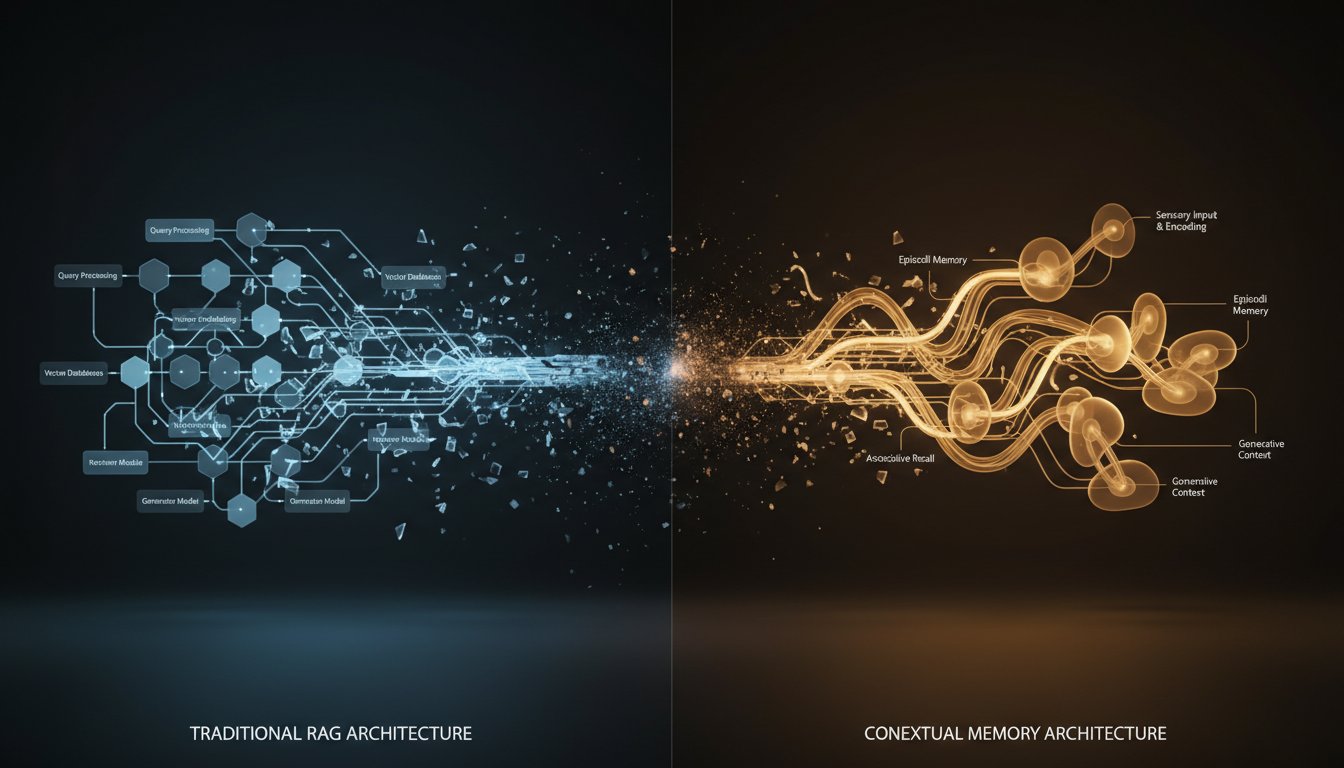

Why RAG is the Brains Behind Your Bot

An articulate voice is useless if the information it delivers is wrong. This is where Retrieval-Augmented Generation becomes the critical engine of our bot. Traditional LLMs have inherent limitations; as one article on computer.org puts it, “Standard large language models (LLMs) possess vast knowledge but struggle with limitations like hallucinations and accessing real-time information due to their static training data… Retrieval-Augmented Generation (RAG) emerged as a powerful paradigm to address these shortcomings by integrating external knowledge sources into the generation process.” Our bot won’t rely on a static, generic model alone. Instead, it will use RAG to fetch relevant, up-to-date passages from your organization’s private knowledge base (technical documentation, project briefs, or internal wikis) and ground each answer in them, so the answers are not only well-spoken but also far more likely to be precise, relevant, and trustworthy.
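To make the retrieve-then-generate idea concrete, here is a minimal, dependency-free sketch of the two steps the quote describes: retrieve the passages most relevant to a question, then integrate them into the prompt sent to the model. It assumes a crude word-overlap score as a stand-in for the embedding similarity a real pipeline uses, and `retrieve` and `build_prompt` are hypothetical helpers, not LangChain APIs.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Return up to k passages sharing the most words with the question."""
    q = tokenize(question)
    scored = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    # Drop passages with no overlap at all
    return [p for p in scored[:k] if q & tokenize(p)]


def build_prompt(question: str, context: list[str]) -> str:
    """Augment the question with retrieved context before generation."""
    joined = "\n\n".join(context) if context else "(no relevant documents found)"
    return (
        "Answer using only the context below. If the answer is not in the "
        "context, say you don't know.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"
    )


kb = [
    "VPN access requires a ticket approved by the security team.",
    "The cafeteria opens at 8am on weekdays.",
    "Deployments to production happen every Tuesday after code freeze.",
]
question = "How do I get VPN access?"
print(build_prompt(question, retrieve(question, kb)))
```

The instruction to admit not knowing is what lets the generation step stay anchored to the retrieved text instead of the model's general training data.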

Architectural Blueprint: Components of Our Voice-Enabled Slack Bot

Building a production-ready system requires a solid architecture. We’ve chosen a stack that prioritizes scalability, security, and ease of integration. Here are the core components.

AWS for Scalability and Power

We will use AWS as the backbone for our application. Its serverless services let us build a cost-effective and highly scalable system without managing servers.

- AWS Lambda: This is our serverless compute service. A Lambda function will contain our core Python code, orchestrating the entire workflow from receiving a Slack mention to posting the final audio response.

- Amazon S3: We’ll use an S3 bucket to store the internal documents that form our bot’s knowledge base.

- IAM (Identity and Access Management): A crucial component for security, an IAM role will grant our Lambda function the precise permissions it needs to access S3 and other services.

Slack API for Seamless Integration

The Slack platform provides a rich set of APIs that allow for deep integration. We will create a Slack App and configure it to listen for specific events (app_mention) in channels. When our bot is mentioned, Slack will securely send the event payload to our AWS backend, triggering the RAG process.
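What makes that delivery secure is request signing. Under Slack's documented v0 scheme, Slack computes an HMAC-SHA256 over `v0:{timestamp}:{raw body}` using your app's Signing Secret (shown under Basic Information in the app settings) and sends it in the `X-Slack-Signature` header, with the timestamp in `X-Slack-Request-Timestamp`. The stdlib sketch below performs that check; the function name is hypothetical, and the five-minute replay window mirrors the one Slack's own examples use.

```python
import hashlib
import hmac
import time
from typing import Optional


def verify_slack_signature(signing_secret: str, timestamp: str, body: str,
                           signature: str, now: Optional[float] = None,
                           max_age_seconds: int = 300) -> bool:
    """Check X-Slack-Signature against the raw request body (v0 scheme)."""
    try:
        ts = int(timestamp)
    except (TypeError, ValueError):
        return False
    now = time.time() if now is None else now
    # Reject stale requests to limit replay attacks
    if abs(now - ts) > max_age_seconds:
        return False
    basestring = f"v0:{timestamp}:{body}".encode("utf-8")
    expected = "v0=" + hmac.new(signing_secret.encode("utf-8"), basestring,
                                hashlib.sha256).hexdigest()
    # Constant-time comparison so response timing leaks nothing about the digest
    return hmac.compare_digest(expected, signature)
```

In the Lambda handler, `timestamp` and `signature` come from the two headers above, and `body` must be the raw request body exactly as received, before any JSON parsing.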

LangChain for a Robust RAG Pipeline

LangChain serves as the framework that simplifies the creation of our RAG pipeline. It provides pre-built modules for connecting the various pieces: loading documents from S3, splitting them into manageable chunks, creating vector embeddings, storing them in a vector database, and executing the final retrieval and question-answering chain.
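The splitting step is easiest to picture with a simplified version. The sketch below cuts text into fixed-size character windows, where each chunk repeats the last `chunk_overlap` characters of the previous one so text near a boundary keeps some context in both neighbours. It is a deliberately simpler stand-in: LangChain's `RecursiveCharacterTextSplitter`, used in Step 3, also tries to break on paragraph, line, and word boundaries first.

```python
def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 100) -> list[str]:
    """Split text into fixed-size character chunks with overlapping edges."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))
# → ['abcd', 'defg', 'ghij']
```

Each chunk becomes one unit of retrieval, so the size trades off precision (small chunks) against context (large chunks).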

ElevenLabs API for Lifelike Voice Synthesis

This is the component that brings our bot to life. The ElevenLabs API is renowned for its ability to produce high-quality, natural-sounding, and low-latency text-to-speech (TTS). We will send the text output from our RAG pipeline to the ElevenLabs API and receive an audio stream back, which we can then save and upload directly to Slack. The quality of the voice is paramount for a professional, enterprise-grade experience.

Step-by-Step Implementation Guide

Now, let’s move from theory to practice. Follow these steps to build and deploy your voice-enabled RAG bot.

Step 1: Setting Up Your AWS Environment

First, prepare your AWS infrastructure.

- Create an S3 Bucket: Navigate to the S3 console in AWS and create a new bucket. This bucket will hold your knowledge base documents (e.g., `.txt`, `.md`, `.pdf` files). Note the bucket name.

- Create an IAM Role: Go to the IAM console and create a new role. Select `AWS service` as the trusted entity and `Lambda` as the use case. Attach the `AWSLambdaBasicExecutionRole` policy. Then create and attach a custom inline policy that grants read access to the S3 bucket you just created. Your policy JSON should look something like this:

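```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::your-knowledge-base-bucket-name"
    },
    {
      "Effect": "Allow",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::your-knowledge-base-bucket-name/*"
    }
  ]
}
```

The `s3:ListBucket` statement targets the bucket ARN itself, because `S3DirectoryLoader` lists the bucket's objects before downloading them; `s3:GetObject` targets the objects inside it (`/*`).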

Step 2: Configuring Your Slack App

Next, set up the interface with Slack.

- Go to `api.slack.com/apps` and create a new app from scratch.

- Add Permissions: Navigate to `OAuth & Permissions`. Under `Scopes`, add the following Bot Token Scopes: `app_mentions:read`, `chat:write`, `files:write`, and `channels:history`.

- Install to Workspace: Install the app to your Slack workspace. This will generate a Bot User OAuth Token (it starts with `xoxb-`). Save this token securely; you’ll need it for your Lambda function.

Step 3: Building the RAG Pipeline with LangChain and AWS Lambda

This is where we write the core logic. We will create a Lambda function that uses LangChain to process incoming questions.

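- Create a Lambda Function: In the AWS Lambda console, create a new function. Choose to author from scratch, use Python 3.11 or later, and select the IAM role you created in Step 1 as the execution role. Then raise the function's timeout well above the 3-second default, because loading documents, calling the LLM, and synthesizing speech together take longer than that.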

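- Package Dependencies: Your Python code will need several libraries (`langchain`, `langchain-community`, `langchain-openai`, `langchain-text-splitters`, `boto3`, `faiss-cpu`, `openai`, `unstructured` for parsing the files loaded from S3, `slack_sdk`, etc.). You’ll need to package these into a Lambda layer or include them in your deployment package ZIP file. If the unzipped total exceeds Lambda's 250 MB limit for ZIP deployments and layers, package the function as a container image instead.

- Implement the RAG Logic: In your `lambda_handler.py`, you’ll set up the chain. Here’s a conceptual overview of the code: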

```python
import os

# Import paths follow the LangChain 0.2/0.3 package layout; other releases differ
from langchain.chains import RetrievalQA
from langchain_community.document_loaders import S3DirectoryLoader
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAI, OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# NOTE: Best practice is to store secrets in AWS Secrets Manager.
# Here configuration comes from Lambda environment variables; OpenAI and
# OpenAIEmbeddings read OPENAI_API_KEY from the environment automatically.
S3_BUCKET_NAME = os.environ.get("S3_BUCKET_NAME", "your-knowledge-base-bucket-name")

_qa_chain = None  # reused across warm invocations of the same Lambda container


def setup_rag_chain():
    # 1. Load documents from S3
    loader = S3DirectoryLoader(S3_BUCKET_NAME)
    documents = loader.load()

    # 2. Split documents into chunks
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=100)
    docs = text_splitter.split_documents(documents)

    # 3. Create embeddings and an in-memory FAISS vector store
    embeddings = OpenAIEmbeddings()
    db = FAISS.from_documents(docs, embeddings)

    # 4. Create retriever and QA chain ("stuff" puts all retrieved chunks into one prompt)
    retriever = db.as_retriever()
    qa_chain = RetrievalQA.from_chain_type(
        llm=OpenAI(),
        chain_type="stuff",
        retriever=retriever,
    )
    return qa_chain


def get_qa_chain():
    # Build the chain once per container instead of on every invocation
    global _qa_chain
    if _qa_chain is None:
        _qa_chain = setup_rag_chain()
    return _qa_chain


# In the main handler, you'll call this chain:
# result = get_qa_chain().invoke({"query": user_question})["result"]
```
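Under the hood, `FAISS.from_documents` builds a flat (exact) Euclidean index by default, and `db.as_retriever()` returns the chunks whose embeddings lie nearest to the question's embedding, four by default. The sketch below reproduces that brute-force search on toy three-dimensional vectors so the idea is visible without an embedding model; the vectors are made up for illustration, and real OpenAI embeddings have far more dimensions.

```python
import math


def l2_distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(query_vec: list[float], index: dict[str, list[float]], k: int = 4) -> list[str]:
    """Exact (brute-force) search: rank every stored vector by L2 distance."""
    ranked = sorted(index, key=lambda doc_id: l2_distance(query_vec, index[doc_id]))
    return ranked[:k]


# Toy "embeddings" keyed by chunk id (made-up values)
index = {
    "vpn-policy": [0.9, 0.1, 0.0],
    "vpn-setup": [0.8, 0.2, 0.1],
    "cafeteria-hours": [0.0, 0.1, 0.9],
    "deploy-schedule": [0.1, 0.9, 0.2],
}
print(nearest([1.0, 0.1, 0.0], index, k=2))
# → ['vpn-policy', 'vpn-setup']
```

A flat index compares the query against every stored vector, which is fine for a modest knowledge base; the persistent vector databases discussed later add approximate indexes for larger collections.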

Step 4: Integrating the ElevenLabs API for Voice Output

Now, let’s give the bot its voice. After getting the text answer from your RAG chain, you’ll pass it to ElevenLabs.

- Get an API Key: Sign up for an account with ElevenLabs to get your API key. The platform offers a free tier to get you started.

- Implement the TTS Call: Add a function to your Lambda code to handle the API call. You’ll send the text and receive audio data in response.

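```python
import os

import requests

# NOTE: Store this in AWS Secrets Manager; read here from an environment variable
ELEVENLABS_API_KEY = os.environ.get("ELEVENLABS_API_KEY", "your-elevenlabs-key")
VOICE_ID = "21m00Tcm4TlvDq8ikWAM"  # Example: Rachel's voice


def generate_audio(text):
    """Return MP3 bytes for `text`, or None if the request fails."""
    tts_url = f"https://api.elevenlabs.io/v1/text-to-speech/{VOICE_ID}/stream"
    headers = {
        "Accept": "audio/mpeg",  # we expect audio back, not JSON
        "xi-api-key": ELEVENLABS_API_KEY,
    }
    data = {
        "text": text,
        "model_id": "eleven_multilingual_v2",
        "voice_settings": {
            "stability": 0.5,
            "similarity_boost": 0.8,
            "style": 0.5,
            "use_speaker_boost": True,
        },
    }
    response = requests.post(tts_url, json=data, headers=headers, stream=True, timeout=60)
    if response.ok:
        # Read the streamed body fully; the caller writes it to a file
        return response.content
    # Return None so the caller can fall back to a text reply
    return None
```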
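Because the RAG answer goes straight into speech, it can help to tidy and shorten it before calling `generate_audio()`: long clips are slower to generate and to listen to, and ElevenLabs usage is metered by characters. The helper below is a hypothetical pre-processing step, not part of the ElevenLabs API; it strips common Markdown marks an LLM may emit and trims the text at the last full sentence that fits a character budget (the 600-character default is an arbitrary example).

```python
import re


def prepare_for_speech(text: str, max_chars: int = 600) -> str:
    """Strip common Markdown marks and trim to a sentence boundary within max_chars."""
    cleaned = re.sub(r"^#+\s*", "", text, flags=re.MULTILINE)  # heading markers
    cleaned = re.sub(r"[*`]+", "", cleaned)  # bold/italic/inline-code markers
    cleaned = re.sub(r"\s+", " ", cleaned).strip()  # collapse newlines and spaces
    if len(cleaned) <= max_chars:
        return cleaned
    # Look one character past the budget so a ". " ending exactly at the limit counts
    search = cleaned[: max_chars + 1]
    cut = max(search.rfind(". "), search.rfind("! "), search.rfind("? "))
    if cut != -1:
        return cleaned[: cut + 1]  # keep the closing punctuation
    # No sentence boundary in range: fall back to the last word boundary
    space = search.rfind(" ")
    return cleaned[:space] if space > 0 else cleaned[:max_chars]
```

In the handler this would sit between the chain and the TTS call, e.g. `generate_audio(prepare_for_speech(answer))`, while the full text answer can still be posted alongside the audio.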


Step 5: Connecting Everything and Deploying the Bot

Finally, we wire everything together and post the response back to Slack.

- Update Lambda Handler: Your main `lambda_handler` function will now parse the incoming Slack event, extract the user’s question, run it through the RAG chain, send the result to `generate_audio()`, save the returned audio content to a temporary file in `/tmp/`, and use the Slack SDK’s `files_upload_v2` method to post it to the originating channel.

- Create an API Gateway Trigger: In the AWS Lambda console, add a trigger to your function. Select `API Gateway`, create a new HTTP API, and keep the security as `Open` for now (you’ll secure it later using Slack’s request signing).

- Enable Event Subscriptions in Slack: Go back to your Slack App settings. Under `Event Subscriptions`, toggle it on. Paste your API Gateway endpoint URL into the `Request URL` field. Slack will verify the URL by sending a `url_verification` request containing a `challenge` value, which your handler must return in its response. Subscribe to the `app_mention` bot event. Save your changes and reinstall the app to your workspace.
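The wiring described in the list above can be sketched as follows. This is a hedged outline rather than a drop-in handler: it assumes an API Gateway HTTP API event (payload format 2.0) and takes the RAG, speech, and reply steps as injected functions (`answer_fn`, `tts_fn`, and `reply_fn` are hypothetical names) so the control flow can be read and tested on its own. In the real function they would wrap your RAG chain, `generate_audio()`, and the Slack `WebClient` (`files_upload_v2` for audio, `chat_postMessage` for text). Two Slack behaviours shape it: the `url_verification` challenge must be echoed back, and Slack expects a response within three seconds and otherwise retries the event with an `X-Slack-Retry-Num` header, so retries are acknowledged and skipped to avoid duplicate answers. A production setup usually acknowledges immediately and does the slow work asynchronously, for example in a second Lambda invocation.

```python
import base64
import json
import os
import re
import tempfile

# Slack encodes user mentions in message text as <@U012ABCDEF>
MENTION = re.compile(r"<@[A-Z0-9]+>")


def handle_slack_event(event, answer_fn, tts_fn, reply_fn):
    """Route one API Gateway (HTTP API, payload v2.0) request coming from Slack.

    answer_fn(question) -> str, tts_fn(text) -> bytes or None, and
    reply_fn(channel, text, audio_path_or_None) are hypothetical injected helpers.
    """
    # Verify Slack's request signature here before trusting the payload (omitted).
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    body = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    payload = json.loads(body)

    # 1. One-time Request URL check: echo the challenge back to Slack
    if payload.get("type") == "url_verification":
        return {"statusCode": 200, "headers": {"Content-Type": "text/plain"},
                "body": payload["challenge"]}

    # 2. Slack retries events it thinks timed out; acknowledge without answering twice
    if "x-slack-retry-num" in headers:
        return {"statusCode": 200, "body": ""}

    slack_event = payload.get("event") or {}
    if payload.get("type") == "event_callback" and slack_event.get("type") == "app_mention":
        # 3. Strip the bot mention to get the plain question
        question = MENTION.sub("", slack_event.get("text", "")).strip()
        answer = answer_fn(question)
        audio = tts_fn(answer)
        audio_path = None
        if audio:
            # 4. Write to the temp directory (/tmp is Lambda's only writable path)
            audio_path = os.path.join(tempfile.gettempdir(), "answer.mp3")
            with open(audio_path, "wb") as f:
                f.write(audio)
        # 5. Post audio when available, otherwise fall back to text only
        reply_fn(slack_event["channel"], answer, audio_path)
    return {"statusCode": 200, "body": ""}
```

The Lambda entry point would then be a thin `lambda_handler(event, context)` that returns `handle_slack_event(event, ...)` with the real functions plugged in.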

Your bot is now live! Mention it in a channel with a question related to your knowledge base documents, and it should respond with a spoken answer.

Optimizing for Performance and User Experience

Deploying the bot is just the beginning. To create a truly enterprise-grade tool, consider these optimizations.

Latency Considerations and Caching Strategies

The RAG process, especially the initial document loading and embedding, can be slow. For a production system, you should not re-index the documents on every invocation. Instead, create embeddings once and store them in a persistent vector database like Amazon OpenSearch, Pinecone, or Redis. You can also implement a simple caching layer (e.g., using DynamoDB) to store answers to frequently asked questions, bypassing the RAG pipeline entirely for common queries.
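The FAQ cache idea can be sketched without any AWS dependencies. The class below keys answers by a hash of the normalized question and expires them after a TTL; the in-memory dict is a stand-in for a DynamoDB table, where the hash would serve as the partition key and a TTL attribute would handle expiry. `AnswerCache`, the normalization rule, and the one-day default TTL are illustrative assumptions.

```python
import hashlib
import re
import time
from typing import Callable, Optional


def normalize(question: str) -> str:
    """Lowercase and drop punctuation/extra spaces so trivial variants share a key."""
    return " ".join(re.findall(r"[a-z0-9]+", question.lower()))


class AnswerCache:
    def __init__(self, ttl_seconds: float = 86400, clock: Callable[[], float] = time.time):
        self.ttl = ttl_seconds
        self.clock = clock
        self._items = {}  # key -> (expires_at, answer); stand-in for a DynamoDB table

    def _key(self, question: str) -> str:
        return hashlib.sha256(normalize(question).encode("utf-8")).hexdigest()

    def get(self, question: str) -> Optional[str]:
        entry = self._items.get(self._key(question))
        if entry is None or entry[0] < self.clock():
            return None  # missing or expired
        return entry[1]

    def put(self, question: str, answer: str) -> None:
        self._items[self._key(question)] = (self.clock() + self.ttl, answer)


def answer_with_cache(question: str, cache: AnswerCache, rag_fn: Callable[[str], str]) -> str:
    """Serve from cache when possible; otherwise run the RAG pipeline and store the result."""
    cached = cache.get(question)
    if cached is not None:
        return cached
    answer = rag_fn(question)
    cache.put(question, answer)
    return answer
```

Exact-match keys only catch repeated phrasings after normalization; matching paraphrases would require comparing question embeddings instead.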

Choosing the Right Voice and Tone

ElevenLabs provides a wide range of pre-made voices and the ability to clone voices. Select a voice that aligns with your company’s brand identity. Is it professional and authoritative, or more friendly and approachable? Tweaking the voice settings for stability and clarity can significantly enhance the user’s perception of the bot’s intelligence and reliability.
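One way to make that brand choice explicit is to keep named voice presets in configuration and build the `voice_settings` payload from them, in the same shape the `generate_audio()` example sends. The preset names and values below are illustrative assumptions, not ElevenLabs recommendations; the helper only checks that the numeric settings fall in the 0 to 1 range these fields accept.

```python
# Hypothetical presets; the values are examples only
VOICE_PRESETS = {
    "authoritative": {"stability": 0.75, "similarity_boost": 0.8, "style": 0.1},
    "friendly": {"stability": 0.4, "similarity_boost": 0.75, "style": 0.5},
}


def voice_settings_for(preset: str, use_speaker_boost: bool = True) -> dict:
    """Build a voice_settings dict from a named preset, validating ranges."""
    if preset not in VOICE_PRESETS:
        raise KeyError(f"unknown voice preset: {preset!r}")
    settings = dict(VOICE_PRESETS[preset])  # copy so callers can't mutate the preset
    for name, value in settings.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name}={value} is outside 0..1")
    settings["use_speaker_boost"] = use_speaker_boost
    return settings
```

Keeping presets in one place makes it easy to A/B test a voice with colleagues before settling on the one that fits your brand.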

Handling Errors and Fallbacks

What happens when the RAG system can’t find a relevant answer? The bot shouldn’t fail silently or respond with a generic error. Program a graceful fallback message, such as, “I couldn’t find a definitive answer in our knowledge base. Could you try rephrasing your question or asking a human colleague?” This manages user expectations and maintains the bot’s credibility.
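A simple way to trigger that fallback is to inspect what the chain returned before synthesizing speech. If you build the `RetrievalQA` chain with `return_source_documents=True` and call it with `.invoke({"query": question})`, the result dict contains `source_documents` alongside `result`. The hypothetical helper below treats an empty answer, an empty source list, or an answer that admits not knowing as a miss and substitutes the fallback message. Note that a plain similarity retriever always returns its top matches, so the empty-source case mainly applies if you add a relevance cutoff (for example `search_type="similarity_score_threshold"`); the phrase check is a heuristic you should tune to your prompt.

```python
FALLBACK_MESSAGE = (
    "I couldn't find a definitive answer in our knowledge base. "
    "Could you try rephrasing your question or asking a human colleague?"
)

# Heuristic phrases suggesting the model found nothing useful (an assumption)
NO_ANSWER_MARKERS = ("i don't know", "i do not know", "not in the context")


def choose_reply(result: dict) -> str:
    """Return the RAG answer, or the fallback message when it looks like a miss."""
    answer = (result.get("result") or "").strip()
    sources = result.get("source_documents") or []
    if not answer or not sources:
        return FALLBACK_MESSAGE
    normalized = answer.lower().replace("\u2019", "'")  # treat curly apostrophes as plain
    if any(marker in normalized for marker in NO_ANSWER_MARKERS):
        return FALLBACK_MESSAGE
    return answer
```

The chosen reply can then be spoken like any other answer, so users hear a consistent voice even when the knowledge base comes up empty.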

We began by facing the overwhelming noise of modern enterprise communication. The endless scroll of text in Slack channels, while necessary, often hides the very information we need. By architecting an intelligent system that combines the contextual power of RAG with the standout clarity of voice, we have built more than just a chatbot; we’ve created a genuine assistant. We designed a blueprint using a scalable AWS backend, a robust LangChain pipeline for intelligence, and the unparalleled voice synthesis of ElevenLabs to give our data a voice.

This is a tangible step toward a more efficient, accessible, and human-centric digital workspace. The bot we’ve built serves as a powerful proof of concept for how advanced AI can solve practical business problems, turning chaotic information streams into on-demand audible knowledge. You’ve seen the blueprint and walked through the construction. Now it’s time to build. Start by creating the voice of your intelligent assistant; try for free now at http://elevenlabs.io/?from=partnerjohnson8503 and bring your enterprise data to life.