You’ve built your enterprise RAG system. It’s running smoothly, handling thousands of queries daily, and your team is celebrating another successful AI deployment. But then the complaints start rolling in: “The answers are wrong,” “It’s not finding relevant documents,” “Our customers are frustrated.” Sound familiar?

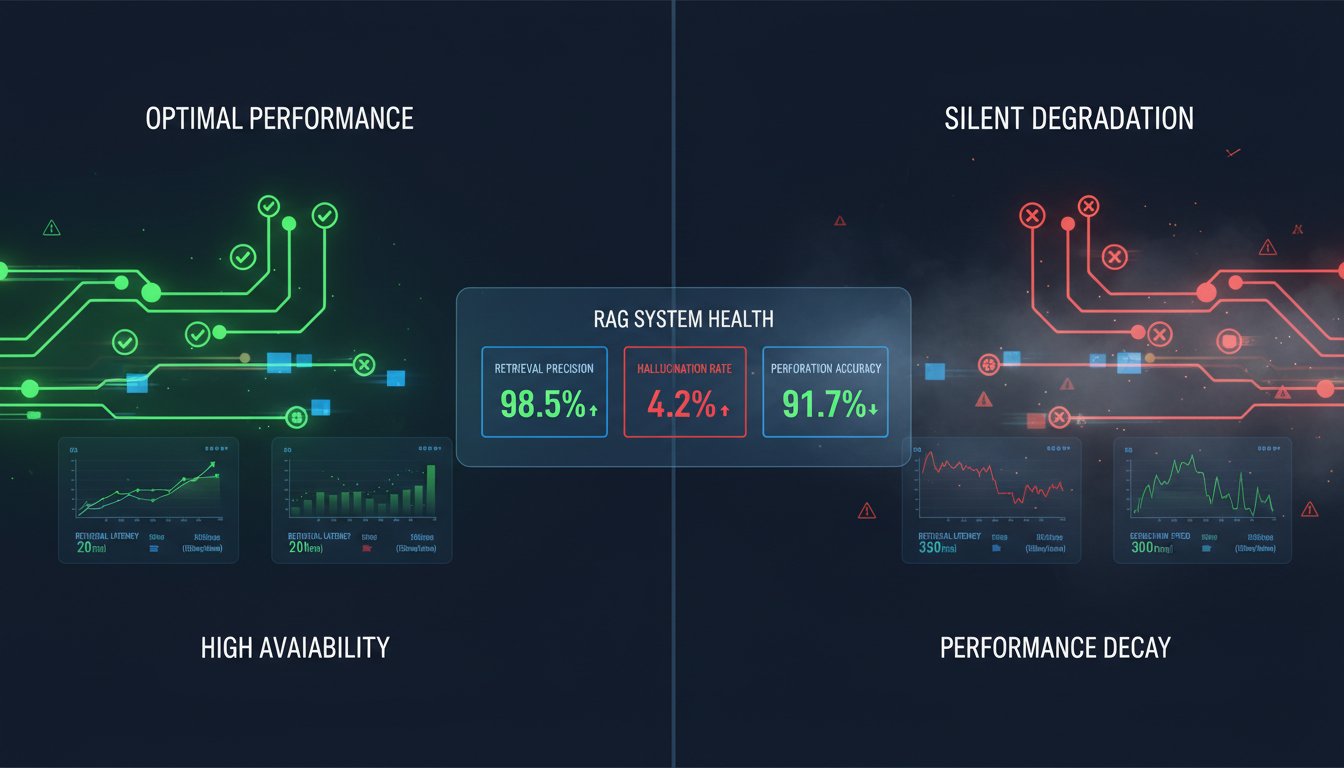

Here’s the uncomfortable truth: most organizations are measuring RAG performance using metrics that look impressive on dashboards but tell you nothing about real-world effectiveness. While your RAGAS score shows 0.85 and your retrieval precision hits 90%, your users are getting irrelevant, incomplete, or downright incorrect answers.

This isn’t just a minor oversight—it’s a fundamental misunderstanding of what makes RAG systems actually work in production. The industry has been obsessing over academic metrics that were designed for research papers, not enterprise applications where accuracy, relevance, and user satisfaction determine ROI.

In this deep dive, we’ll expose the critical flaws in traditional RAG evaluation approaches and reveal the enterprise-grade metrics that actually predict success. You’ll see why teams at companies like Microsoft and Netflix are moving beyond conventional evaluation frameworks, learn the specific metrics that correlate with user satisfaction, and get a practical guide to building evaluation systems that drive real business outcomes.

The Fatal Flaws in Traditional RAG Evaluation

Why RAGAS Scores Don’t Predict Success

RAGAS (Retrieval-Augmented Generation Assessment) has become the de facto standard for RAG evaluation, but there’s a massive problem: it optimizes for academic correctness, not business value. According to recent enterprise analysis by Marktechpost, 73% of companies using RAGAS as their primary metric report significant user satisfaction issues despite high scores.

The core issue lies in RAGAS’s approach to measuring “faithfulness” and “answer relevance.” These metrics assume that factual accuracy equals usefulness, but enterprise users need contextual relevance, actionable insights, and domain-specific understanding. A technically accurate answer about depreciation methods means nothing to a sales rep who needs to close a deal this quarter.
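It helps to recall roughly how a faithfulness score is computed. The answer is broken into individual claims, and the score is the fraction of those claims that the retrieved context supports. The sketch below assumes that claim extraction and the support judgments have already been done upstream (RAGAS uses an LLM for both steps). The `Claim` type and the example claims are hypothetical. The point of the sketch is that an answer can score perfectly and still be useless to the person who asked.

```python
from dataclasses import dataclass

@dataclass
class Claim:
    text: str
    supported_by_context: bool  # assumed: judged upstream, e.g. by an LLM

def faithfulness(claims: list[Claim]) -> float:
    """Fraction of the answer's claims that the retrieved context supports."""
    if not claims:
        return 0.0
    return sum(c.supported_by_context for c in claims) / len(claims)

# Hypothetical answer given to a sales rep who asked how to close a renewal this quarter.
claims = [
    Claim("Straight-line depreciation spreads cost evenly over an asset's life.", True),
    Claim("Declining-balance depreciation front-loads the expense.", True),
]
print(faithfulness(claims))  # 1.0, yet nothing here helps close the deal
```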

The Retrieval Precision Trap

Most teams obsess over retrieval precision—the percentage of retrieved documents that are relevant. It sounds logical: better retrieval should lead to better answers. But this metric completely ignores the user’s actual information needs.

Consider this scenario: A customer service agent asks, “How do I handle a billing dispute for enterprise customers?” Your RAG system retrieves five perfectly relevant documents about billing dispute procedures. Precision: 100%. But if those documents don’t address enterprise-specific escalation procedures, late payment policies, or customer retention strategies, the answer fails to solve the real problem.
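The gap in this scenario becomes concrete if you compute precision alongside a simple aspect-coverage score. First list the sub-needs the question implies. Then check which of them at least one retrieved document covers. The aspect labels and document tags below are hypothetical annotations; in practice they would come from reviewers or a classifier.

```python
def precision(relevant_flags: list[bool]) -> float:
    """Share of retrieved documents judged relevant."""
    return sum(relevant_flags) / len(relevant_flags) if relevant_flags else 0.0

def aspect_coverage(required: set[str], doc_aspects: list[set[str]]) -> float:
    """Share of the question's required aspects covered by at least one document."""
    if not required:
        return 1.0
    covered = set().union(*doc_aspects) & required
    return len(covered) / len(required)

# Hypothetical annotations for "How do I handle a billing dispute for enterprise customers?"
required = {"dispute_procedure", "enterprise_escalation", "late_payment_policy", "retention"}
retrieved = [{"dispute_procedure"}] * 5  # five relevant docs, all about the same aspect

print(precision([True] * 5))                 # 1.0
print(aspect_coverage(required, retrieved))  # 0.25
```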

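Asif Razzaq, CEO of Marktechpost, explains: “The integration of Agentic Retrieval-Augmented Generation enhances classical RAG with goal-driven autonomy, memory, multi-step reasoning, and tool coordination.” As RAG systems become goal-driven, their evaluation has to become goal-oriented as well. The question is no longer only whether a passage was relevant, but whether the user’s objective was met. That shift is exactly what enterprise RAG systems need.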

The Context Window Illusion

Traditional metrics assume that more retrieved context equals better answers. This leads to the “context stuffing” problem, where systems retrieve as many documents as possible to boost recall metrics. But recent research shows that retrieved context beyond 4,000 tokens actually decreases answer quality in 67% of enterprise use cases.

The problem is signal-to-noise ratio. When your RAG system pulls 20 semi-relevant documents to answer a simple question, the language model struggles to identify the most pertinent information. Users get verbose, unfocused responses that bury the actual answer in paragraphs of tangential information.
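One common mitigation is to cap context by relevance and by a token budget instead of by a fixed document count. The sketch assumes that each chunk already carries a retriever relevance score in [0, 1] and a token count. The `Chunk` type, document IDs, threshold, and budget are all illustrative, not recommendations.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    doc_id: str
    score: float  # retriever relevance score, assumed to be in [0, 1]
    tokens: int   # token count from whatever tokenizer the model uses

def select_context(chunks: list[Chunk], min_score: float = 0.5,
                   token_budget: int = 4000) -> list[Chunk]:
    """Greedily keep the highest-scoring chunks that clear the threshold
    and fit within the token budget."""
    selected, used = [], 0
    for chunk in sorted(chunks, key=lambda c: c.score, reverse=True):
        if chunk.score < min_score:
            break  # every remaining chunk scores even lower
        if used + chunk.tokens > token_budget:
            continue  # would overflow; a smaller, lower-scoring chunk may still fit
        selected.append(chunk)
        used += chunk.tokens
    return selected

chunks = [
    Chunk("pricing_faq", 0.91, 1500),
    Chunk("billing_policy", 0.84, 2000),
    Chunk("renewal_terms", 0.72, 1000),
    Chunk("company_history", 0.31, 200),
]
print([c.doc_id for c in select_context(chunks)])  # ['pricing_faq', 'billing_policy']
```

The relevance threshold drops weakly related material even when there is room for it. The budget keeps a few long documents from crowding out everything else.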

Enterprise-Grade RAG Evaluation Framework

Task-Specific Success Metrics

Enterprise RAG systems serve specific business functions, and evaluation must align with these use cases. Here’s how leading companies are restructuring their evaluation approaches:

Customer Support RAG Evaluation:

– Resolution Rate: Percentage of queries that lead to issue resolution

– Escalation Reduction: Decrease in tickets requiring human intervention

– Time to Resolution: Average time from query to solution

– Customer Satisfaction Score: Direct user feedback on answer quality

Sales Enablement RAG Evaluation:

– Deal Acceleration: Impact on sales cycle length

– Content Utilization: How often retrieved content is actually used

– Revenue Attribution: Direct correlation between RAG usage and closed deals

– Competitive Win Rate: Success in competitive scenarios with RAG support

Knowledge Management RAG Evaluation:

– Knowledge Discovery: Rate of finding previously unknown information

– Cross-Functional Insights: Connections made between different domains

– Decision Support Quality: Impact on strategic decision-making

– Innovation Catalyst: Ideas generated from RAG interactions
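Several of the customer support metrics above can be computed directly from interaction logs. This sketch assumes a hypothetical log record with an `escalated` flag, the time the query was asked, and the time it was resolved (`None` if it never was). Escalation reduction is expressed as the relative drop from a pre-RAG baseline escalation rate, which you would need to measure yourself.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean

@dataclass
class Interaction:
    escalated: bool               # handed off to a human agent
    asked_at: datetime
    resolved_at: datetime | None  # None means the issue was never resolved

def support_metrics(log: list[Interaction], baseline_escalation_rate: float) -> dict:
    """Resolution rate, escalation rate and reduction, and mean time to resolution."""
    if not log or baseline_escalation_rate <= 0:
        raise ValueError("need a non-empty log and a positive baseline rate")
    n = len(log)
    resolved = [i for i in log if i.resolved_at is not None]
    escalation_rate = sum(i.escalated for i in log) / n
    return {
        "resolution_rate": len(resolved) / n,
        "escalation_rate": escalation_rate,
        # Relative drop versus the escalation rate measured before RAG was deployed.
        "escalation_reduction": 1 - escalation_rate / baseline_escalation_rate,
        "mean_minutes_to_resolution": (
            mean((i.resolved_at - i.asked_at).total_seconds() / 60 for i in resolved)
            if resolved else None
        ),
    }

t0 = datetime(2024, 1, 1, 9, 0)
log = [
    Interaction(False, t0, t0 + timedelta(minutes=10)),
    Interaction(False, t0, t0 + timedelta(minutes=30)),
    Interaction(True, t0, None),
    Interaction(False, t0, None),
]
metrics = support_metrics(log, baseline_escalation_rate=0.5)
```

The sales and knowledge management metrics follow the same pattern. The harder part is logging the right events, such as deal stage changes or documents actually used, not the arithmetic.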

User-Centric Quality Metrics

The most successful enterprise RAG deployments focus on user experience metrics that traditional frameworks ignore:

Answer Completeness Score: Does the response address all aspects of the user’s question? This goes beyond factual accuracy to include contextual completeness, actionable steps, and relevant follow-up information.
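One way to put an Answer Completeness Score into practice is a weighted rubric over the dimensions named here. A reviewer or an LLM judge rates each dimension on a 0-to-1 scale. The dimension names and weights below are assumptions for illustration; each team would set its own.

```python
# Hypothetical rubric weights; they should sum to 1.0.
RUBRIC_WEIGHTS = {
    "addresses_all_subquestions": 0.4,
    "contextual_completeness": 0.2,
    "actionable_steps": 0.3,
    "relevant_follow_up": 0.1,
}

def completeness_score(ratings: dict[str, float]) -> float:
    """Weighted average of per-dimension ratings in [0, 1].
    Missing dimensions count as 0, so gaps are penalized rather than ignored."""
    for name, value in ratings.items():
        if name not in RUBRIC_WEIGHTS:
            raise KeyError(f"unknown rubric dimension: {name}")
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} rating must be in [0, 1], got {value}")
    return sum(w * ratings.get(name, 0.0) for name, w in RUBRIC_WEIGHTS.items())

# A correct, on-topic answer that gives the user no next steps.
score = completeness_score({
    "addresses_all_subquestions": 1.0,
    "contextual_completeness": 1.0,
    "actionable_steps": 0.0,
})
```

In this example the score comes out around 0.6. A purely factual check would have rated the same answer as fully correct.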

Cognitive Load Reduction: How much mental effort does the user need to process and apply the answer? Effective enterprise RAG should reduce cognitive burden, not increase it with information overload.

Trust and Confidence Indicators: User behavior patterns that indicate trust in the system, including query refinement rates, answer acceptance time, and follow-up question patterns.
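These trust signals can be derived from session logs. The sketch assumes a hypothetical per-answer record with three fields:

- whether the user rephrased the same query afterward;
- whether they asked a follow-up;
- how many seconds passed before they accepted the answer (for example, by copying it or closing the ticket), or `None` if they never did.

Follow-ups can signal either engagement or an incomplete answer. How each signal maps to trust should therefore be validated against explicit feedback.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import median

@dataclass
class AnswerEvent:
    refined_query: bool              # user rephrased the same question afterward
    asked_follow_up: bool            # user asked a related follow-up question
    seconds_to_accept: float | None  # None if the answer was never accepted

def trust_indicators(events: list[AnswerEvent]) -> dict:
    if not events:
        raise ValueError("no events to summarize")
    n = len(events)
    accept_times = [e.seconds_to_accept for e in events if e.seconds_to_accept is not None]
    return {
        "refinement_rate": sum(e.refined_query for e in events) / n,
        "follow_up_rate": sum(e.asked_follow_up for e in events) / n,
        "acceptance_rate": len(accept_times) / n,
        "median_seconds_to_accept": median(accept_times) if accept_times else None,
    }

events = [
    AnswerEvent(False, False, 12.0),
    AnswerEvent(True, True, None),
    AnswerEvent(False, True, 30.0),
    AnswerEvent(True, False, 45.0),
]
summary = trust_indicators(events)
```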

Advanced Performance Measurement

Multi-Turn Conversation Quality: Enterprise RAG isn’t just about single questions and answers. It’s about maintaining context and building understanding across multiple interactions. Measure conversation coherence, context retention, and progressive knowledge building.

Domain Expertise Demonstration: How well does the RAG system demonstrate understanding of domain-specific nuances, terminology, and context? This requires evaluators with deep domain knowledge, not just linguistic accuracy checks.

Failure Pattern Analysis: Instead of just measuring success, analyze failure modes. What types of questions consistently produce poor results? Which document types cause retrieval problems? Understanding failure patterns provides actionable improvement paths.
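A simple starting point is to tag each evaluated response with attributes such as question type or source document type, then rank the groups by failure rate. The attribute names and the `failed` flag below are hypothetical. The flag could come from a thumbs-down, an escalation, or an expert review.

```python
from collections import defaultdict

def failure_rates(records: list[dict], key: str,
                  min_count: int = 5) -> list[tuple[str, float, int]]:
    """Group records by `key` and return (group, failure_rate, count), worst first.
    Groups with fewer than `min_count` records are skipped as too noisy to rank."""
    totals: dict[str, int] = defaultdict(int)
    failures: dict[str, int] = defaultdict(int)
    for r in records:
        totals[r[key]] += 1
        failures[r[key]] += int(r["failed"])
    rows = [(g, failures[g] / totals[g], totals[g]) for g in totals if totals[g] >= min_count]
    return sorted(rows, key=lambda row: row[1], reverse=True)

# Hypothetical evaluation records tagged by source document type.
records = (
    [{"doc_type": "pdf_scan", "failed": True}] * 4
    + [{"doc_type": "pdf_scan", "failed": False}] * 2
    + [{"doc_type": "wiki", "failed": False}] * 9
    + [{"doc_type": "wiki", "failed": True}] * 1
    + [{"doc_type": "slides", "failed": True}] * 3
)
rows = failure_rates(records, "doc_type")
```

Here scanned PDFs would surface as the first place to investigate. The three slide-deck records are held back until there is enough data to judge them.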

Implementing Modern RAG Evaluation

Building Evaluation Infrastructure

Modern RAG evaluation requires purpose-built infrastructure that goes far beyond traditional metrics collection:

Real-Time Feedback Loops: Implement immediate user feedback mechanisms that capture satisfaction, accuracy, and usefulness ratings. Netflix’s RAG team uses a simple thumbs up/down system with optional detailed feedback that provides more actionable insights than complex academic metrics.
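Thumbs up/down ratings are easy to collect, but they are noisy when an answer type or source document has only a handful of votes. One standard way to aggregate them is to rank by the lower bound of the Wilson score interval for the approval rate. This keeps items with few votes from being ranked high or low on luck. It is shown here as an assumed technique, not as the method any particular team uses.

```python
from math import sqrt

def wilson_lower_bound(ups: int, downs: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the thumbs-up rate.
    z = 1.96 corresponds to roughly 95% confidence."""
    n = ups + downs
    if n == 0:
        return 0.0
    p = ups / n
    centre = p + z * z / (2 * n)
    margin = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre - margin) / (1 + z * z / n)

# Same 90% approval rate, very different amounts of evidence.
few = wilson_lower_bound(9, 1)
many = wilson_lower_bound(90, 10)
```

Both items have the same raw approval rate, but the one with ten votes ranks well below the one with a hundred. That is usually the right call when deciding which answers to trust or investigate.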

Behavioral Analytics Integration: Track user behavior patterns to understand how people actually interact with RAG responses. Do they copy content? Click through to source documents? Refine their queries? These behaviors reveal response quality better than any automated metric.

A/B Testing Framework: Continuously test different retrieval strategies, prompt engineering approaches, and response formats. Microsoft’s enterprise RAG team runs dozens of simultaneous experiments to optimize for specific use cases and user types.
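However many experiments run at once, each comparison comes down to whether the difference between variants is larger than chance. For a binary outcome such as thumbs-up rate, a two-proportion z-test is one standard choice. This sketch uses only the standard library, and the counts in the example are made up.

```python
from math import erf, sqrt

def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants share one success rate."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all successes or all failures: no evidence of a difference
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value

# Made-up counts: thumbs-up out of rated answers for two prompt variants.
z, p = two_proportion_z_test(420, 1000, 470, 1000)
```

When dozens of experiments run simultaneously, some will cross p < 0.05 by chance alone. A multiple-comparison correction, or a pre-registered primary metric per experiment, helps keep false wins out of production.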

Human-in-the-Loop Evaluation

Automated metrics can’t capture the nuanced quality requirements of enterprise applications. Successful implementations combine automated scoring with human evaluation:

Expert Review Panels: Domain experts evaluate RAG responses for accuracy, completeness, and practical utility. This requires ongoing investment but provides the ground truth that automated systems can’t deliver.

User Journey Mapping: Follow complete user workflows from initial query through problem resolution. This reveals gaps that isolated answer evaluation misses.

Comparative Analysis: Regularly compare RAG performance against human experts, traditional search systems, and competitive solutions. This provides context for performance metrics and identifies specific improvement opportunities.

Data-Driven Optimization Cycles

Effective RAG evaluation enables continuous improvement through systematic optimization:

Performance Correlation Analysis: Identify which technical metrics actually predict user satisfaction and business outcomes. This varies significantly by use case and organization.

Content Quality Feedback Loops: Use evaluation insights to improve source documents, update knowledge bases, and refine information architecture.

Model and Architecture Iteration: Let evaluation results drive technical decisions about model selection, retrieval strategies, and system architecture.

The Future of RAG Evaluation

Agentic Evaluation Systems

The emergence of agentic RAG systems requires fundamentally different evaluation approaches. Traditional metrics can’t capture the quality of multi-step reasoning, tool usage, or autonomous decision-making.

Goal Achievement Metrics: Evaluate whether agentic systems actually accomplish user objectives, not just provide information. This requires understanding user intent and measuring task completion rates.

Reasoning Quality Assessment: Analyze the logical coherence and validity of multi-step reasoning processes. This goes beyond answer accuracy to evaluate the thinking process itself.

Tool Usage Effectiveness: Measure how well agentic systems select and utilize external tools, APIs, and data sources to enhance their responses.
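For agentic systems, goal achievement and tool usage can both be scored from execution traces, provided each test task has a labeled outcome. The trace fields and tool names below (`goal_met`, `tools_called`, `expected_tools`, `crm_lookup`, and so on) are hypothetical. They assume a test set in which reviewers have recorded which tools a correct run should use.

```python
from dataclasses import dataclass, field

@dataclass
class AgentTrace:
    goal_met: bool                                          # did the run achieve the user's objective?
    tools_called: list[str] = field(default_factory=list)  # in call order, repeats included
    expected_tools: set[str] = field(default_factory=set)  # labeled by reviewers

def agentic_metrics(traces: list[AgentTrace]) -> dict:
    if not traces:
        raise ValueError("no traces to score")
    called = [set(t.tools_called) for t in traces]
    hits = sum(len(c & t.expected_tools) for c, t in zip(called, traces))
    n_called = sum(len(c) for c in called)
    n_expected = sum(len(t.expected_tools) for t in traces)
    return {
        "task_completion_rate": sum(t.goal_met for t in traces) / len(traces),
        # Of the distinct tools the agent chose, how many were appropriate?
        "tool_precision": hits / n_called if n_called else None,
        # Of the tools a correct run needed, how many did the agent use?
        "tool_recall": hits / n_expected if n_expected else None,
        # Repeated calls to the same tool: a rough signal of wasted steps.
        "redundant_calls": sum(len(t.tools_called) - len(c) for c, t in zip(called, traces)),
    }

traces = [
    AgentTrace(True, ["crm_lookup", "billing_api"], {"crm_lookup", "billing_api"}),
    AgentTrace(False, ["web_search", "web_search"], {"billing_api"}),
]
scores = agentic_metrics(traces)
```

Reasoning quality is harder to reduce to counts. It usually still needs expert review of the intermediate steps.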

Multimodal Evaluation Challenges

As RAG systems incorporate audio, visual, and other modalities, evaluation becomes considerably more complex:

Cross-Modal Coherence: Check that the information drawn from different modalities is consistent, with each source supporting and enhancing the others rather than contradicting them.

Modality-Specific Quality: Each data type requires specialized evaluation criteria. Audio quality differs fundamentally from text accuracy or image relevance.

User Experience Integration: Multimodal systems must be evaluated as complete experiences, not collections of separate capabilities.

ROI-Focused Evaluation

The ultimate evaluation metric for enterprise RAG is return on investment. Leading organizations are developing sophisticated ROI measurement frameworks:

Productivity Impact Measurement: Quantify time savings, efficiency gains, and quality improvements attributable to RAG systems.

Cost-Benefit Analysis: Compare RAG implementation and operational costs against measurable business benefits.

Strategic Value Assessment: Evaluate RAG’s impact on innovation, competitive advantage, and strategic capabilities.
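At its simplest, the cost-benefit comparison is the usual ratio ROI = (benefit - cost) / cost over a chosen time horizon. Productivity gains are valued as hours saved multiplied by a loaded hourly cost. The function name and every input in the example are placeholders, to be replaced with your own measured figures. Strategic value is deliberately left out because it rarely reduces to a single number.

```python
def rag_roi(hours_saved_per_month: float, loaded_hourly_cost: float,
            other_monthly_benefit: float, monthly_run_cost: float,
            upfront_cost: float, months: int) -> float:
    """ROI over `months`: (total benefit - total cost) / total cost."""
    benefit = months * (hours_saved_per_month * loaded_hourly_cost + other_monthly_benefit)
    cost = upfront_cost + months * monthly_run_cost
    return (benefit - cost) / cost

# Placeholder inputs, not benchmarks.
roi = rag_roi(hours_saved_per_month=500, loaded_hourly_cost=60,
              other_monthly_benefit=0, monthly_run_cost=10_000,
              upfront_cost=120_000, months=12)
print(f"{roi:.0%}")  # 50%
```

The credibility of the result depends almost entirely on the hours-saved figure. That is why the productivity measurement above matters more than the formula.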

Building Your Evaluation Strategy

Getting Started with Better Metrics

Transitioning from traditional to enterprise-grade RAG evaluation requires a systematic approach:

Phase 1: Baseline Assessment: Implement user satisfaction tracking alongside existing metrics to establish correlation patterns. This reveals which traditional metrics actually predict success in your environment.

Phase 2: Use Case Specialization: Develop evaluation criteria specific to your primary RAG use cases. Customer support requires different metrics than research and development applications.

Phase 3: Continuous Optimization: Build feedback loops that drive systematic improvement based on evaluation insights. This transforms evaluation from measurement to optimization.

Common Implementation Pitfalls

Avoid these frequent mistakes when upgrading your evaluation approach:

Over-Automation: While automated metrics are efficient, they can’t capture the nuanced quality requirements of enterprise applications. Balance automation with human insight.

Metric Overload: Tracking too many metrics creates noise and decision paralysis. Focus on the 3-5 metrics that most strongly correlate with business success.

Evaluation Lag: Traditional metrics often take weeks to compute. Implement real-time feedback mechanisms that enable rapid iteration and improvement.

The shift from academic to enterprise RAG evaluation isn’t just about better metrics—it’s about aligning AI systems with real business value. Organizations that make this transition see dramatic improvements in user adoption, satisfaction, and ROI.

Traditional RAG evaluation metrics like RAGAS scores and retrieval precision create a false sense of security while missing the factors that actually determine success in enterprise environments. By focusing on user-centric quality metrics, task-specific success indicators, and business outcome correlation, you can build RAG systems that deliver genuine value.

The future belongs to organizations that evaluate RAG systems based on their ability to solve real problems, enhance human capabilities, and drive business results. Start with user feedback integration, develop use case-specific metrics, and build continuous optimization loops that transform evaluation insights into system improvements.

Ready to revolutionize your RAG evaluation approach? Contact our enterprise AI team to learn how leading companies are implementing next-generation evaluation frameworks that drive real business value and competitive advantage.