Imagine the all-too-familiar frustration: you’ve been on hold for ten minutes, listening to a distorted loop of elevator music, only to be greeted by a robotic, unhelpful Interactive Voice Response (IVR) system. “For billing, press one. For technical support, press two.” You mash the keypad, navigate a confusing menu tree, and finally reach a human agent who puts you back on hold to “look up your information.” This disjointed, inefficient experience is the bane of modern customer service, costing businesses not just time and money, but customer loyalty. The core challenge isn’t a lack of effort; it’s a fundamental architectural problem. Customer support systems are often hamstrung by siloed data, inconsistent agent training, and technology that creates more friction than it resolves. Traditional chatbots and IVRs fail because they lack two critical components: deep contextual understanding and the ability to communicate naturally.

But what if you could replace that entire frustrating process with a single, seamless interaction? What if your customers could speak to an AI agent that not only understands their issue in real-time but responds with the empathy, tone, and accuracy of your best human support specialist? This isn’t a futuristic vision; it’s a tangible reality made possible by combining two powerful AI technologies: Retrieval-Augmented Generation (RAG) and advanced voice synthesis. The solution lies in building an intelligent agent powered by a RAG system that draws from a comprehensive, up-to-the-minute knowledge base, and giving that agent a voice with a platform like ElevenLabs, which can generate lifelike, low-latency audio. This integration moves beyond simple question-answering to create true conversational experiences. In this technical guide, we’ll dissect the architecture of a voice-enabled RAG system, walk through the core integration steps, and explore the advanced considerations for deploying an enterprise-grade solution that transforms your customer support from a cost center into a powerful competitive advantage.

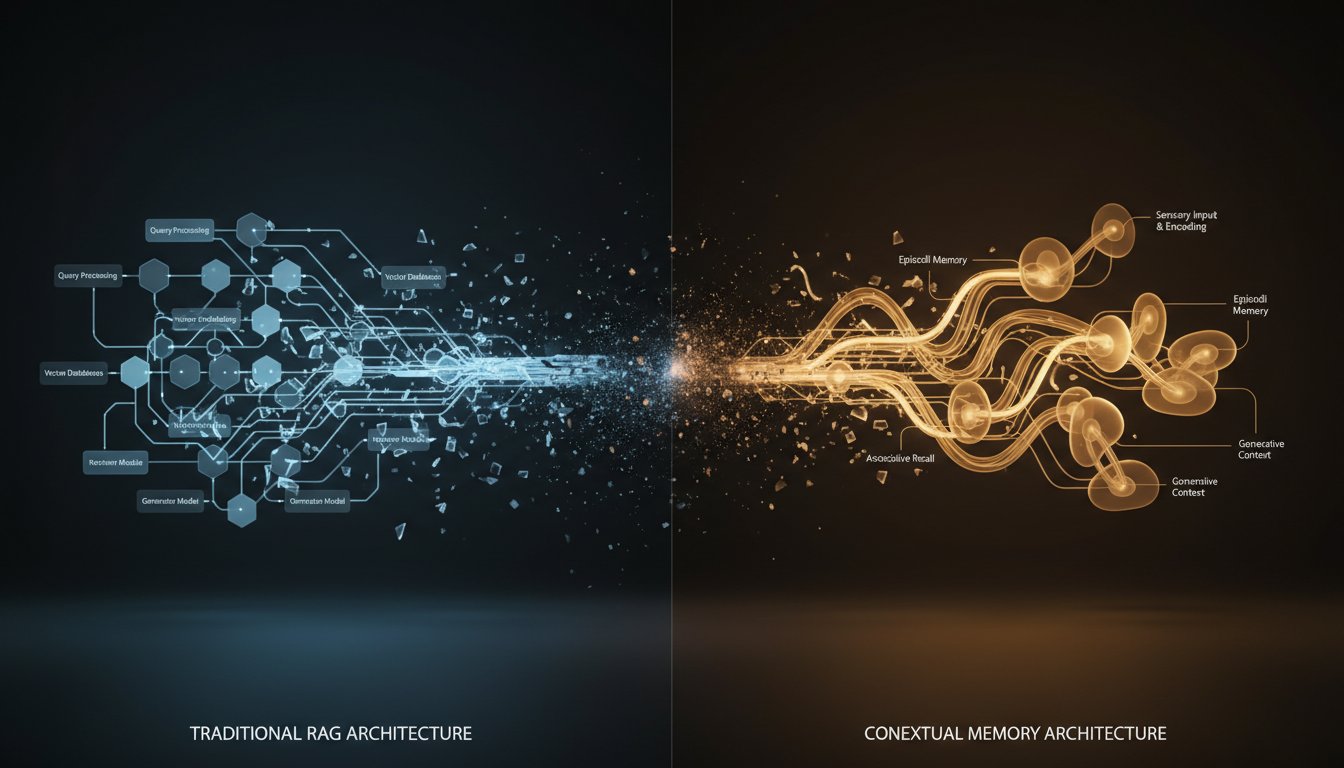

## The Architectural Blueprint: Core Components of a Voice-Enabled RAG System

Building an AI that can listen, understand, and respond intelligently in real-time requires a well-orchestrated stack of technologies. Each component plays a distinct role, working in concert to create a fluid conversational experience. Think of it not as a single piece of software, but as a digital central nervous system for your customer support.

### The RAG Engine: Your Single Source of Truth

At the heart of the system is the Retrieval-Augmented Generation (RAG) engine. This is the system’s long-term memory and its connection to verified, company-specific information. As Luis Lastras from IBM Research aptly puts it, relying on a standard Large Language Model (LLM) alone is like taking a closed-book exam, whereas using a RAG system is like taking an open-book exam. The RAG engine gives your LLM access to the “book”: your entire repository of enterprise knowledge.

This knowledge base can include:

→ Product documentation and specifications

→ Customer support FAQs and historical ticket data

→ Internal wikis and knowledge bases such as Confluence or SharePoint

→ Customer data from your CRM

The RAG process begins by splitting this data into chunks, converting each chunk into an embedding (a numeric vector that captures its meaning), and indexing those vectors in a vector database (e.g., Pinecone, Chroma). When a user asks a question, the retriever embeds the question the same way and searches the database for the chunks whose vectors are most similar to it. This retrieved context is then passed to the LLM, grounding its response in specific, up-to-date source material and substantially reducing (though not eliminating) the risk of “hallucinations” or incorrect answers.
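To make the embed-and-retrieve step concrete, here is a self-contained toy sketch. It swaps the learned embedding model for simple word-count vectors and the vector database for a Python list, then ranks chunks by cosine similarity to the question. The names `embed`, `cosine`, and `ToyVectorStore` are hypothetical; a real system would use an embedding model and a vector database such as Pinecone or Chroma.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database: stores (chunk, vector) pairs."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add(self, chunks: list[str]) -> None:
        # Ingest: embed each chunk once and keep it alongside its text
        self.entries.extend((chunk, embed(chunk)) for chunk in chunks)

    def search(self, query: str, k: int = 2) -> list[str]:
        # Retrieve: rank every chunk by similarity to the query's vector
        q = embed(query)
        ranked = sorted(self.entries, key=lambda entry: cosine(q, entry[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


store = ToyVectorStore()
store.add([
    "Returns are accepted within 30 days of delivery with a receipt.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Premium plans include priority phone support.",
])
print(store.search("How many days do I have for returns?", k=1))
# ['Returns are accepted within 30 days of delivery with a receipt.']
```

Real embeddings capture meaning rather than exact word overlap, so "refund window" could still match the returns chunk; the ranking logic, however, is the same.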

### The Large Language Model (LLM): The Conversational Core

If RAG provides the facts, the Large Language Model (LLM) provides the fluency. The LLM (like models from OpenAI, Anthropic, or Cohere) is the component that synthesizes the raw, retrieved data into a coherent, natural-sounding response. It takes the snippets of information from the RAG engine and crafts them into a helpful, conversational answer that directly addresses the user’s query.

Its function is to act as a brilliant conversationalist who has just been handed the exact right notes. This separation of concerns is critical: the LLM isn’t relied upon for its stored knowledge, which can be outdated or generic, but for its powerful reasoning and language generation capabilities.

### The Voice Interface: Bringing Your Agent to Life with ElevenLabs

This is where the magic happens for the user experience. A text-based response, no matter how accurate, still feels robotic in a voice setting. Traditional Text-to-Speech (TTS) systems often sound monotonous and have noticeable latency, creating awkward pauses.

This is why integrating a state-of-the-art voice synthesis platform like ElevenLabs is a game-changer. It offers several key advantages for real-time support:

- Low Latency: ElevenLabs offers low-latency models and streaming output, so playback can begin almost immediately and the conversation keeps flowing without unnatural delays.

- Emotional Nuance: The platform can generate speech with varying tones and inflections, allowing the AI agent to sound empathetic, reassuring, or enthusiastic, depending on the context of the conversation.

- Voice Cloning: Businesses can create a custom voice for their brand, either by cloning the voice of a trusted spokesperson or designing a unique synthetic voice, ensuring brand consistency.

By feeding the LLM’s text output directly to the ElevenLabs API, you transform a simple answer into a rich, human-like interaction.
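Handing text to ElevenLabs is ultimately an HTTP call. The sketch below uses only the Python standard library to build (but not send) a request to the text-to-speech REST endpoint, so its shape is visible: a POST to `/v1/text-to-speech/{voice_id}` authenticated with an `xi-api-key` header, with the text, model, and optional `voice_settings` in the JSON body. The function name `build_tts_request`, the voice ID, the key, and the settings values are placeholders; check the current ElevenLabs API reference for the full parameter list.

```python
import json
import urllib.request

API_BASE = "https://api.elevenlabs.io/v1"


def build_tts_request(text: str, voice_id: str, api_key: str,
                      model_id: str = "eleven_multilingual_v2",
                      stability: float = 0.5,
                      similarity_boost: float = 0.75) -> urllib.request.Request:
    """Build (without sending) a text-to-speech request for the ElevenLabs REST API."""
    body = {
        "text": text,
        "model_id": model_id,
        # Placeholder values; lower stability generally gives more expressive,
        # less uniform delivery
        "voice_settings": {"stability": stability, "similarity_boost": similarity_boost},
    }
    return urllib.request.Request(
        url=f"{API_BASE}/text-to-speech/{voice_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "xi-api-key": api_key,
            "Content-Type": "application/json",
            "Accept": "audio/mpeg",
        },
        method="POST",
    )


req = build_tts_request("Your refund has been processed.", "YOUR_VOICE_ID", "YOUR_API_KEY")
print(req.get_method(), req.full_url)
# Sending it (requires a valid key and voice ID) returns MP3 bytes:
# with urllib.request.urlopen(req) as resp, open("reply.mp3", "wb") as f:
#     f.write(resp.read())
```

The voice_settings block is where the emotional-nuance knobs described above are exposed per request.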

### The Integration Layer: Connecting Speech-to-Text and Orchestration

To complete the conversational loop, two final pieces are needed. First, a Speech-to-Text (STT) service (like OpenAI’s Whisper or Google’s Speech-to-Text) is required to capture the user’s spoken words and accurately transcribe them into text. This text then becomes the initial query for the RAG engine.

Second, an orchestration layer, often built with frameworks like LangChain or LlamaIndex, or even a custom Python application, acts as the conductor. It manages the entire workflow seamlessly: receiving the transcribed text from the STT, passing it to the RAG engine, sending the query and context to the LLM, and finally, delivering the LLM’s text response to ElevenLabs for voice synthesis.
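Stripped of framework specifics, the orchestrator’s job for one conversational turn is a fixed sequence of calls. This is a minimal, framework-free sketch that assumes each stage is a plain Python callable; `VoicePipeline`, `transcribe`, `retrieve`, `generate`, and `synthesize` are hypothetical stand-ins for your STT service, retriever, LLM, and ElevenLabs call, stubbed out here so the flow runs on its own.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """One conversational turn: audio in -> text -> context -> answer -> audio out."""
    transcribe: Callable[[bytes], str]         # STT (e.g., Whisper)
    retrieve: Callable[[str], list[str]]       # RAG retriever
    generate: Callable[[str, list[str]], str]  # LLM, given question + retrieved context
    synthesize: Callable[[str], bytes]         # TTS (e.g., ElevenLabs)

    def handle_turn(self, user_audio: bytes) -> tuple[str, bytes]:
        question = self.transcribe(user_audio)
        context = self.retrieve(question)
        answer = self.generate(question, context)
        return answer, self.synthesize(answer)


# Stub stages so the flow can be exercised without any external service
pipeline = VoicePipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    retrieve=lambda q: ["Returns are accepted within 30 days."],
    generate=lambda q, ctx: f"Based on our policy: {ctx[0]}",
    synthesize=lambda text: text.encode("utf-8"),
)
answer, audio = pipeline.handle_turn(b"What is the return policy?")
print(answer)  # Based on our policy: Returns are accepted within 30 days.
```

Keeping each stage behind a plain callable makes it easy to swap one STT, LLM, or TTS provider for another without touching the turn logic.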

## Step-by-Step Integration: Building Your Real-Time Voice Agent

With the architecture defined, let’s walk through a simplified, high-level process for connecting these components. We’ll use Python for our examples, as it’s the lingua franca of AI development.

### Step 1: Setting Up Your RAG Knowledge Base

The foundation of any good RAG system is a high-quality, well-structured knowledge base. This involves preparing your data (e.g., cleaning up text from support articles, exporting data from a knowledge base) and loading it into a vector database.

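```python
# Example using LangChain and Chroma
# Requires: pip install langchain-community langchain-text-splitters langchain-openai langchain-chroma
from langchain_community.document_loaders import DirectoryLoader, TextLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_openai import OpenAIEmbeddings
from langchain_chroma import Chroma

# Load every .txt file under the knowledge-base directory
# (TextLoader avoids the heavier `unstructured` dependency DirectoryLoader uses by default)
loader = DirectoryLoader("./your_knowledge_base/", glob="**/*.txt", loader_cls=TextLoader)
docs = loader.load()

# Split documents into ~1,000-character chunks that overlap by 200 characters
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
splits = text_splitter.split_documents(docs)

# Embed each chunk and store it in Chroma (OpenAIEmbeddings reads OPENAI_API_KEY from the environment)
vectorstore = Chroma.from_documents(documents=splits, embedding=OpenAIEmbeddings())
retriever = vectorstore.as_retriever()
```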

This code snippet loads text files, splits them into overlapping chunks, and uses OpenAI’s embedding models to create vector representations, which are then stored in a Chroma vector store (in memory by default; pass a persist_directory argument to Chroma.from_documents to keep it on disk).
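To see what chunk_size and chunk_overlap control, here is a simplified fixed-window splitter in plain Python. It is not LangChain’s algorithm: RecursiveCharacterTextSplitter first tries to break on paragraph, line, and word boundaries, whereas this sketch (with the hypothetical name `split_fixed`) cuts at fixed character offsets. Each chunk starts `chunk_size - chunk_overlap` characters after the previous one, so neighbours share `chunk_overlap` characters and a sentence straddling a boundary stays retrievable from either side.

```python
def split_fixed(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(split_fixed("abcdefghij", chunk_size=4, chunk_overlap=1))
# ['abcd', 'defg', 'ghij']
```

With the settings from Step 1, a 2,500-character article becomes three chunks starting at offsets 0, 800, and 1,600.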

### Step 2: Orchestrating the RAG and LLM Flow

Next, you’ll create a chain that connects the retriever to the LLM. This chain will automatically handle the process of fetching relevant documents and feeding them into the LLM prompt.

```python
# Example using LangChain (continues from Step 1, which defined `retriever`)
from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_core.output_parsers import StrOutputParser

# Define the prompt template
template = """Answer the question based only on the following context:
{context}

Question: {question}
"""
prompt = ChatPromptTemplate.from_template(template)

# Define the LLM
model = ChatOpenAI(model="gpt-4-turbo")


def format_docs(docs):
    # Join the retrieved chunks into one plain-text context block
    return "\n\n".join(doc.page_content for doc in docs)


# Build the RAG chain: retrieve and format context, pass the question through unchanged
rag_chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | prompt
    | model
    | StrOutputParser()
)

# Get a text response
text_response = rag_chain.invoke("What are the return policies?")
print(text_response)
```

This chain takes a user’s question, uses the retriever to find context, formats it all with the prompt, gets a response from the model, and parses the output into a clean string.
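If the pipe syntax feels opaque, the same flow can be written as ordinary function calls. This simplified plain-Python equivalent uses hypothetical stub functions `retrieve` and `call_llm` in place of the Chroma retriever and the OpenAI model, and formats the prompt as a single string (ChatPromptTemplate actually produces a list of chat messages), so the data passed at each `|` stage is visible.

```python
TEMPLATE = """Answer the question based only on the following context:
{context}

Question: {question}
"""


def retrieve(question: str) -> list[str]:
    # Stub retriever: a real one would query the vector store
    return ["Items can be returned within 30 days.", "Refunds go to the original payment method."]


def call_llm(prompt: str) -> dict:
    # Stub chat model: returns a message-like object, answering only from the prompt's context
    content = "Items can be returned within 30 days." if "30 days" in prompt else "I don't know."
    return {"role": "assistant", "content": content}


def run_rag(question: str) -> str:
    context = "\n\n".join(retrieve(question))                     # {"context": retriever | format_docs}
    prompt = TEMPLATE.format(context=context, question=question)  # | prompt (question passed through)
    message = call_llm(prompt)                                    # | model
    return message["content"]                                     # | StrOutputParser(): message -> str


print(run_rag("What are the return policies?"))
```

The output parser’s job is simply to unwrap the model’s message object into the plain string that the voice step needs.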

### Step 3: Integrating ElevenLabs for Voice Output

Now, you take the text_response from the previous step and send it to the ElevenLabs API to generate audio. This is a straightforward API call.

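```python
# Example using the ElevenLabs Python SDK (client-based interface, v1 and later)
from elevenlabs.client import ElevenLabs
from elevenlabs.play import play

client = ElevenLabs(api_key="YOUR_ELEVENLABS_API_KEY")

# Generate audio from the RAG chain's text response (yields MP3 byte chunks)
audio = client.text_to_speech.convert(
    text=text_response,
    voice_id="YOUR_VOICE_ID",  # ID of a premade voice or of your custom cloned voice
    model_id="eleven_multilingual_v2",
    output_format="mp3_44100_128",
)

# Play the audio locally (requires ffmpeg to be installed)
play(audio)
```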

This script generates the audio and can play it directly or save it to a file. For a real-time application, you would stream this audio back to the user as it’s being generated to minimize perceived latency.
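One common way to start speaking sooner is to stream the LLM’s tokens and hand each complete sentence to the TTS engine as soon as it closes, instead of waiting for the whole answer. The generator below, `sentences_from_tokens` (a hypothetical name), is a minimal sketch of that buffering step; the token stream is simulated, and the boundary rule (a `.`, `!`, or `?` followed by whitespace, or the end of the stream) is a simplifying assumption that will mis-split abbreviations such as “e.g.”.

```python
import re
from typing import Iterable, Iterator

# Sentence boundary: whitespace that follows ., ! or ? (simplified rule)
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences from a stream of text fragments as soon as each one closes."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence; the last may still be growing
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends


# Simulated LLM token stream
stream = ["Your order ", "shipped today. ", "It should ", "arrive Friday! Anything ", "else?"]
for sentence in sentences_from_tokens(stream):
    print("send to TTS:", sentence)
```

Sentence-sized pieces are a compromise: they generally give the voice model enough context for natural intonation while still letting playback begin after the first sentence rather than the last.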

### Step 4: Closing the Loop with Speech-to-Text

Finally, to make the system truly interactive, you’d use an STT service to capture the user’s voice input. While the full implementation is beyond a single snippet, the conceptual flow is simple: the user speaks, the audio is sent to a service like OpenAI’s Whisper, the resulting transcript is passed to rag_chain.invoke(), the answer is voiced through ElevenLabs, and the cycle repeats with the user’s next utterance.
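Because the cycle repeats, the orchestrator usually also keeps a short conversation history so follow-ups such as “what about exchanges?” can be interpreted. The sketch below assumes hypothetical `transcribe` and `answer` callables (stubbed here) and a bounded history of recent turns; how `answer` uses that history, for example to rewrite a follow-up into a standalone question before retrieval, is left open, and the exit phrases are illustrative.

```python
from collections import deque
from typing import Callable, Iterable


def conversation_loop(utterances: Iterable[bytes],
                      transcribe: Callable[[bytes], str],
                      answer: Callable[[str, list], str],
                      max_turns: int = 6,
                      exit_phrases: tuple = ("goodbye", "that's all")) -> list:
    """Run STT -> RAG answer for each utterance, keeping a bounded turn history."""
    history = deque(maxlen=max_turns)  # (user_text, agent_text) pairs; oldest dropped first
    for audio in utterances:
        user_text = transcribe(audio)
        if user_text.strip().lower().rstrip(".!") in exit_phrases:
            break
        reply = answer(user_text, list(history))
        history.append((user_text, reply))
        # In a live system, `reply` would be sent to TTS here
    return list(history)


turns = conversation_loop(
    [b"What is the return policy?", b"What about exchanges?", b"Goodbye."],
    transcribe=lambda audio: audio.decode("utf-8"),
    answer=lambda question, history: f"[{len(history)} prior turns] answer to: {question}",
)
for user_text, agent_text in turns:
    print(user_text, "->", agent_text)
```

Bounding the history keeps prompts short, which matters for the latency concerns discussed next.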

## Beyond the Basics: Advanced Considerations for Enterprise-Grade Deployment

Building a proof-of-concept is one thing; deploying a robust, scalable system is another. As you move toward production, several advanced challenges must be addressed for a truly enterprise-grade solution.

### Managing Latency for Seamless Conversation

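The single biggest technical hurdle is latency. The total time from when the user stops speaking to when the AI starts responding must be kept short for the exchange to feel natural, and because transcription, retrieval, generation, and synthesis run in sequence, their delays add up. This means optimizing every step of the chain: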

* Using smaller or latency-optimized LLMs, and streaming their output token by token.

* Choosing a low-latency vector database and retrieval strategy.

* Streaming the audio output from ElevenLabs so that playback starts before the entire audio clip has been generated.
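Before optimizing, it helps to measure where the time goes. A minimal sketch of a per-stage timer built on `time.perf_counter`; the class name `LatencyBudget`, the stage names, and the `time.sleep` calls standing in for STT, retrieval, LLM, and TTS are placeholders, not real measurements. With streaming, the figure that matters is time to first audio, so each stage is timed up to its first output rather than to completion.

```python
import time
from contextlib import contextmanager


class LatencyBudget:
    """Record wall-clock time per pipeline stage for one conversational turn."""

    def __init__(self) -> None:
        self.stages: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.stages[name] = time.perf_counter() - start

    def total_ms(self) -> float:
        return 1000 * sum(self.stages.values())

    def report(self) -> str:
        lines = [f"{name:<16}{seconds * 1000:8.1f} ms" for name, seconds in self.stages.items()]
        lines.append(f"{'total':<16}{self.total_ms():8.1f} ms")
        return "\n".join(lines)


budget = LatencyBudget()
with budget.stage("stt"):
    time.sleep(0.02)  # placeholder for speech-to-text
with budget.stage("retrieval"):
    time.sleep(0.01)  # placeholder for vector search
with budget.stage("llm_first_token"):
    time.sleep(0.03)  # placeholder for time to the LLM's first token
with budget.stage("tts_first_audio"):
    time.sleep(0.02)  # placeholder for time to the first audio chunk
print(budget.report())
```

Logging a report like this per turn shows which stage dominates before you invest in swapping models or databases.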

### Ensuring Data Freshness and Accuracy

A RAG system is only as good as its data. Addressing the “stale data problem” is critical. The knowledge base cannot be static. You must implement a strategy for continuous updates, such as:

* Event-driven updates: Automatically trigger re-indexing of a document when it’s updated in your CMS or knowledge base.

* Scheduled data pipelines: Run daily or hourly jobs to sync your vector database with primary data sources like your CRM or product database.
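Either strategy benefits from re-embedding only what actually changed. A minimal sketch of a scheduled sync that compares SHA-256 content hashes with what was last indexed; `sync_index`, the in-memory hash dictionary, and the `upsert` and `delete` callbacks are hypothetical stand-ins for your vector-database client’s upsert and delete operations.

```python
import hashlib
from typing import Callable


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def sync_index(source_docs: dict[str, str],
               indexed: dict[str, str],
               upsert: Callable[[str, str], None],
               delete: Callable[[str], None]) -> dict[str, list[str]]:
    """Re-embed new or changed docs and drop deleted ones.

    source_docs maps doc_id -> current text; indexed maps doc_id -> hash of the
    version last indexed, and is updated in place.
    """
    changes = {"upserted": [], "deleted": []}
    for doc_id, text in source_docs.items():
        digest = content_hash(text)
        if indexed.get(doc_id) != digest:  # new or modified document
            upsert(doc_id, text)
            indexed[doc_id] = digest
            changes["upserted"].append(doc_id)
    for doc_id in list(indexed):
        if doc_id not in source_docs:  # removed at the source
            delete(doc_id)
            del indexed[doc_id]
            changes["deleted"].append(doc_id)
    return changes


def print_upsert(doc_id: str, text: str) -> None:
    print("re-embed", doc_id)


def print_delete(doc_id: str) -> None:
    print("delete", doc_id)


hashes: dict[str, str] = {}
print(sync_index({"returns": "30-day returns.", "hours": "Open 9-5."}, hashes, print_upsert, print_delete))
print(sync_index({"returns": "45-day returns."}, hashes, print_upsert, print_delete))
```

The second run re-embeds only the edited returns policy and deletes the removed hours page, which keeps embedding costs proportional to the amount of change.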

### Handling Complex Dialogues and Agentic Behavior

Basic Q&A is powerful, but the future lies in agents that can perform actions. Drawing from the concept of “Agentic RAG,” your system can be enhanced to go beyond just retrieving information. You can give it “tools” it can decide to use. For example, if a user asks, “What’s the status of my latest order?” the agent could be empowered to query your company’s internal order management API, retrieve the live status, and then formulate a response. It can also be programmed with rules for escalating complex or sensitive issues to a human agent, ensuring a seamless handover.
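In production the LLM itself typically decides which tool to call (for example via function calling); the sketch below replaces that decision with simple keyword rules so the control flow is easy to follow. `lookup_order_status`, `answer_from_knowledge_base`, `route`, the order-ID pattern, and the escalation keywords are all hypothetical illustrations, not a real order-management API.

```python
import re
from typing import Optional

ORDERS = {"A1001": "shipped", "A1002": "processing"}  # stub order-management data
ESCALATION_TERMS = ("human", "agent", "complaint", "cancel my account")


def lookup_order_status(order_id: str) -> Optional[str]:
    """Hypothetical stand-in for a call to an internal order-management API."""
    return ORDERS.get(order_id)


def answer_from_knowledge_base(question: str) -> str:
    """Placeholder for the RAG chain (e.g., rag_chain.invoke(question))."""
    return f"(RAG answer to: {question})"


def route(question: str) -> tuple[str, str]:
    """Return (action, response): escalate, call a tool, or fall back to RAG."""
    q = question.lower()
    if any(term in q for term in ESCALATION_TERMS):
        return "escalate", "Let me connect you with a member of our support team."
    match = re.search(r"\b([a-z]\d{4})\b", q)
    if "order" in q and match:
        order_id = match.group(1).upper()
        status = lookup_order_status(order_id)
        if status is None:
            return "tool", f"I couldn't find an order with ID {order_id}."
        return "tool", f"Order {order_id} is currently {status}."
    return "rag", answer_from_knowledge_base(question)


print(route("What's the status of my order A1001?"))
print(route("I want to talk to a human"))
print(route("What is your return policy?"))
```

Checking escalation first means a frustrated customer is never routed into an automated answer when they have asked for a person.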

The era of clunky IVR systems and customers waiting on hold for an uninformed agent is coming to an end. The barrier to exceptional, scalable customer support is no longer technology but implementation. By architecting a system that combines the factual grounding of Retrieval-Augmented Generation with the expressive power of a voice AI like ElevenLabs, you can build a customer experience that is not only efficient but genuinely helpful and human-like. This is no longer a far-off concept from a science fiction movie; the tools and frameworks are accessible today. Ready to transform your customer experience from a point of friction to a competitive advantage? Building a voice-enabled RAG agent is the first step. You can start experimenting with lifelike voice generation today. Try for free now at http://elevenlabs.io/?from=partnerjohnson8503 and hear the difference for yourself.