Picture this: you’re sitting in a quarterly enterprise AI review meeting, watching yet another presentation about RAG deployment failures. The statistics are sobering: by some industry estimates, as many as 80% of enterprise RAG systems fail to deliver on their promises. Then someone mentions NVIDIA’s latest breakthrough, the Llama 3.2 NeMo Retriever, which just claimed the top spot on the ViDoRe visual retrieval benchmark. Suddenly, the conversation shifts from failure analysis to future possibilities.

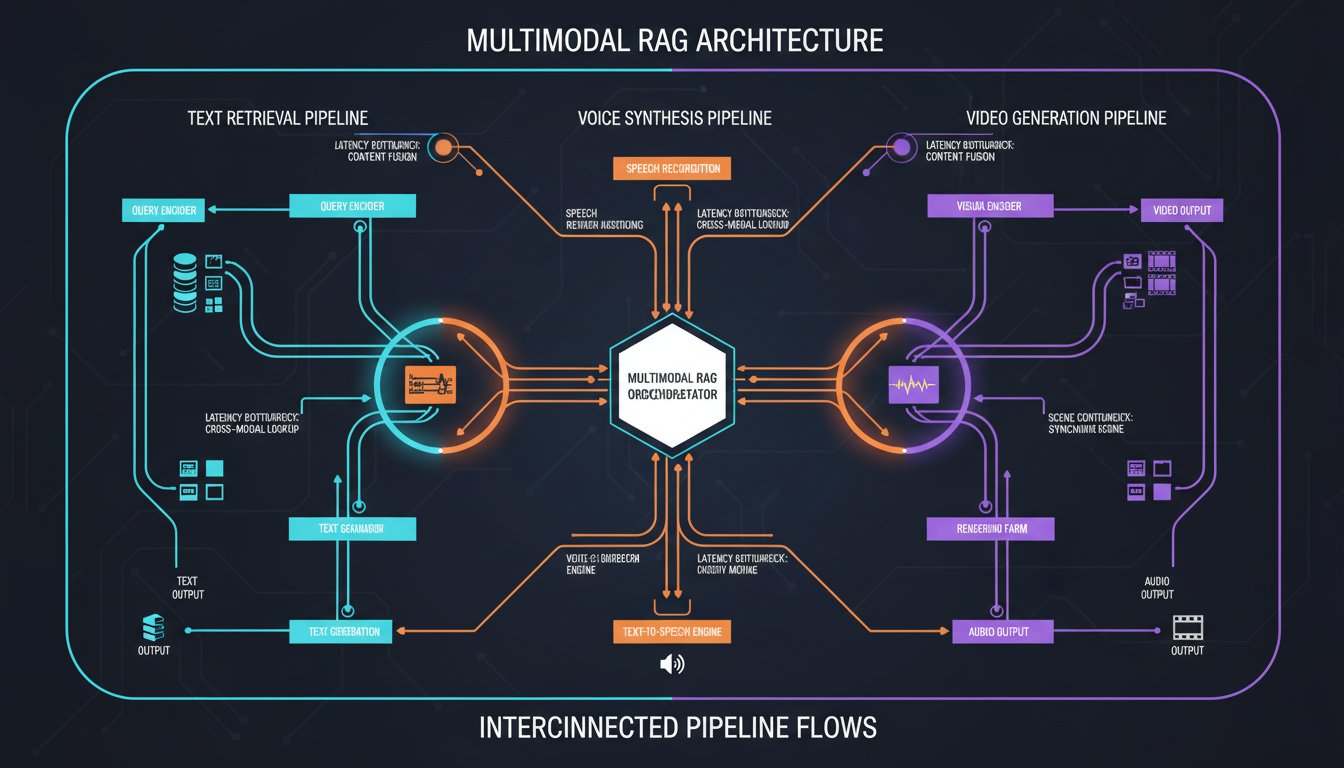

This isn’t just another incremental improvement in the RAG landscape. NVIDIA’s Llama 3.2 NeMo Retriever represents a fundamental shift toward multimodal enterprise AI systems that can process and understand both text and visual information simultaneously. While most organizations are still struggling with basic text-based RAG implementations, early adopters are already exploring how multimodal capabilities can transform everything from document processing to customer support.

The enterprise AI market has been waiting for this moment. According to Deloitte’s 2025 analysis, 42% of organizations report productivity gains from Gen AI adoption, but the vast majority are still limited to single-modal applications. The introduction of production-ready multimodal RAG changes the game entirely, opening possibilities that seemed like science fiction just months ago.

In this deep-dive analysis, we’ll explore how NVIDIA’s Llama 3.2 NeMo Retriever is reshaping enterprise RAG architecture, examine the technical innovations that made this breakthrough possible, and provide a practical roadmap for organizations ready to implement multimodal RAG systems. Whether you’re a technical architect evaluating next-generation AI infrastructure or a business leader trying to understand the implications of multimodal AI, this guide will help you navigate the rapidly evolving landscape of enterprise RAG technology.

Understanding NVIDIA’s Llama 3.2 NeMo Retriever Breakthrough

The Llama 3.2 NeMo Retriever isn’t just another embedding model; it marks a shift in how enterprises can approach information retrieval. Built on NVIDIA’s NeMo framework and integrated with the NIM (NVIDIA Inference Microservices) platform, it is positioned by NVIDIA as a production-ready multimodal retriever designed specifically for enterprise deployment.

Technical Architecture and Performance Metrics

What sets the Llama 3.2 NeMo Retriever apart is its architectural approach to multimodal understanding. Unlike traditional RAG systems that process text and images separately, this model creates unified embeddings that capture both textual and visual semantics in a single vector space. According to NVIDIA’s internal benchmarks, the result is a 7x performance improvement over previous-generation models.
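Because text chunks and page images share one vector space, retrieval becomes a nearest-neighbor search that does not care which modality a stored item came from. The pure-Python sketch below ranks a tiny corpus by cosine similarity; the three-dimensional vectors and page IDs are invented for illustration. In practice the embeddings would come from the retriever (with far more dimensions) and the search would run inside a vector database.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank(query_vec: list[float], corpus: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (item_id, score) pairs sorted by descending similarity.

    Entries may be text chunks or page images: in a shared embedding
    space they are compared the same way.
    """
    scored = [(item_id, cosine(query_vec, vec)) for item_id, vec in corpus.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors (hypothetical); real ones would be produced by the retriever.
corpus = {
    "manual_p12_text": [0.9, 0.1, 0.0],
    "manual_p12_diagram": [0.8, 0.3, 0.1],
    "finance_q3_chart": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]
for item_id, score in rank(query, corpus):
    print(f"{item_id}: {score:.3f}")
```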

The system’s performance on the ViDoRe (Visual Document Retrieval) benchmark is particularly impressive. ViDoRe tests a model’s ability to retrieve relevant visual information from large document repositories—exactly the kind of challenge enterprise organizations face when dealing with technical manuals, financial reports, and regulatory documents that combine text, charts, and diagrams.

“The breakthrough isn’t just in the model architecture,” explains Dr. Sarah Chen, NVIDIA’s Director of Enterprise AI Solutions. “It’s in how we’ve optimized the entire inference pipeline for enterprise workloads. Organizations can now deploy multimodal RAG at scale without the infrastructure complexity that has traditionally held back adoption.”

Integration with NVIDIA NIM Platform

The strategic advantage of the Llama 3.2 NeMo Retriever lies in its tight integration with NVIDIA’s NIM platform. NIM provides production-ready inference microservices that handle the complexities of model deployment, scaling, and optimization. This integration targets infrastructure complexity, one of the primary reasons so many enterprise RAG systems fail.

Through NIM, organizations can deploy the Llama 3.2 NeMo Retriever with enterprise-grade security, monitoring, and scaling capabilities built in. The platform handles everything from load balancing to model versioning, allowing technical teams to focus on application development rather than infrastructure management.

The Enterprise Multimodal RAG Use Case Revolution

The introduction of production-ready multimodal RAG capabilities unlocks use cases that were previously impossible or prohibitively complex to implement. Organizations across industries are beginning to explore applications that leverage both textual and visual understanding.

Financial Services: Regulatory Document Analysis

In the financial services sector, regulatory compliance requires processing thousands of documents that combine text, tables, charts, and diagrams. Traditional text-based RAG systems struggle with this complexity, often missing critical information embedded in visual elements.

Anupama Garani, GenAI Lead at PIMCO, shares her perspective: “Enterprise RAG is becoming an increasingly critical capability for organizations dealing with large document repositories. The reason is that it transforms how teams access institutional knowledge, reduces research time, and scales expert insights across the organization. When you add multimodal capabilities, you’re not just improving existing processes—you’re enabling entirely new ways of working with complex financial data.”

PIMCO’s early experiments with multimodal RAG have shown promising results in areas like:

– Risk Assessment Reports: Automatically extracting insights from documents that combine narrative analysis with complex charts and graphs

– Regulatory Compliance: Cross-referencing textual regulations with visual compliance frameworks

– Investment Research: Processing research reports that integrate market data visualizations with analytical text

Manufacturing: Technical Documentation and Training

Manufacturing organizations face unique challenges in managing technical documentation that spans multiple formats and languages. Equipment manuals, safety procedures, and maintenance guides typically combine detailed text instructions with technical diagrams, photos, and schematic drawings.

The multimodal capabilities of the Llama 3.2 NeMo Retriever enable manufacturing companies to create intelligent knowledge bases that can understand questions about both textual procedures and visual components. For example, a maintenance technician can ask, “Show me the safety procedure for the component highlighted in this photo,” and receive comprehensive guidance that combines relevant text with visual references.

Healthcare: Medical Record Analysis

Healthcare organizations are exploring multimodal RAG for applications that require understanding both clinical notes and medical imaging. Early pilots, run under strict privacy and regulatory compliance requirements, have demonstrated the potential for:

– Clinical Decision Support: Correlating patient symptoms described in text with relevant imaging findings

– Medical Research: Analyzing research papers that combine clinical data with diagnostic images

– Training and Education: Creating interactive learning systems that can answer questions about both textual medical knowledge and visual diagnostic criteria

Technical Implementation Guide: Deploying Multimodal RAG with NVIDIA NIM

Implementing multimodal RAG with the Llama 3.2 NeMo Retriever requires careful planning and a systematic approach. The following guide outlines the key steps for enterprise deployment.

Infrastructure Requirements and Setup

Before beginning implementation, organizations need to assess their infrastructure requirements. The Llama 3.2 NeMo Retriever requires significant computational resources, particularly GPU capacity for efficient inference. NVIDIA recommends:

Recommended Hardware:

– NVIDIA A100 or H100 GPUs for production deployment

– 80GB+ GPU memory for optimal performance

– High-bandwidth storage for large document repositories

– Network infrastructure capable of handling large file transfers

Software Dependencies:

– NVIDIA NIM platform subscription

– Docker with the NVIDIA Container Toolkit, so microservice containers can access the GPUs

– Vector database (Pinecone, Weaviate, or similar)

– Document processing pipeline for multimodal content

Step-by-Step Implementation Process

Phase 1: Environment Preparation

Begin by setting up the NVIDIA NIM environment and configuring the necessary microservices. The NIM platform provides pre-configured containers that simplify this process significantly compared to custom model deployments.

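```bash
# Example NIM deployment configuration.
# The image name is a placeholder: copy the retriever's exact image path and tag
# from the NVIDIA NGC catalog. NGC_API_KEY must be exported in the host shell,
# and the cache mount keeps downloaded model weights across restarts.
docker run -d \
  --gpus all \
  --name nim-llama32-retriever \
  -e NGC_API_KEY \
  -p 8000:8000 \
  -v "$HOME/.cache/nim:/opt/nim/.cache" \
  nvidia/nim:llama3.2-retriever-latest
```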
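Once the container is running, applications talk to it over HTTP. NIM embedding services expose an OpenAI-style `/v1/embeddings` route; the sketch below only builds the JSON request body. It assumes the `input_type` field that NVIDIA’s retrieval NIMs use to distinguish queries from passages, and it assumes images are sent as base64 data URLs. `MODEL_NAME` is a placeholder: the deployed container typically reports its model ID at `/v1/models`, and the model’s documentation should be checked for the exact fields and input formats it accepts.

```python
import base64
import json

# Placeholder: replace with the model ID reported by your NIM's /v1/models route.
MODEL_NAME = "nvidia/llama-3.2-nemo-retriever-placeholder"


def image_to_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL (assumed input format)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_embedding_request(inputs: list[str], input_type: str) -> str:
    """Build a JSON body for POST http://localhost:8000/v1/embeddings.

    input_type is "query" for user questions and "passage" for indexed
    content (an assumption based on NVIDIA retrieval NIM conventions).
    """
    if input_type not in ("query", "passage"):
        raise ValueError("input_type must be 'query' or 'passage'")
    body = {
        "model": MODEL_NAME,
        "input": inputs,
        "input_type": input_type,
        "encoding_format": "float",
    }
    return json.dumps(body)


page_image = image_to_data_url(b"\x89PNG fake bytes for illustration")
payload = build_embedding_request([page_image], input_type="passage")
print(payload[:80])
```

The body can then be sent with any HTTP client, for example `requests.post(url, data=payload, headers={"Content-Type": "application/json"})`.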

Phase 2: Document Processing Pipeline

Multimodal RAG requires sophisticated document processing that can handle both text extraction and image analysis. The pipeline should include:

– Text Extraction: OCR for scanned documents, text parsing for digital documents

– Image Processing: Computer vision for diagram analysis, chart recognition

– Metadata Enrichment: Document classification, section identification

– Quality Validation: Ensuring extracted content maintains semantic coherence
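The four stages above can be wired together as a chain of functions over page records. This is a hypothetical skeleton: `PageRecord`, the `raw` payload keys, and each stage function are stand-ins for whatever OCR, parsing, and vision tooling you actually adopt, and the validation rule is deliberately simple.

```python
from dataclasses import dataclass, field


@dataclass
class PageRecord:
    doc_id: str
    page: int
    raw: dict  # hypothetical raw payload produced by a PDF/OCR parser
    text: str = ""
    image_captions: list[str] = field(default_factory=list)
    metadata: dict = field(default_factory=dict)
    valid: bool = False


def extract_text(rec: PageRecord) -> PageRecord:
    # Stand-in for OCR (scanned pages) or native text parsing (digital PDFs).
    rec.text = rec.raw.get("ocr_text") or rec.raw.get("native_text", "")
    return rec


def describe_images(rec: PageRecord) -> PageRecord:
    # Stand-in for diagram/chart analysis; here we copy labels the parser found.
    rec.image_captions = list(rec.raw.get("figures", []))
    return rec


def enrich_metadata(rec: PageRecord) -> PageRecord:
    rec.metadata.update({
        "doc_id": rec.doc_id,
        "page": rec.page,
        "has_figures": bool(rec.image_captions),
        "section": rec.raw.get("section", "unknown"),
    })
    return rec


def validate(rec: PageRecord) -> PageRecord:
    # Minimal coherence check: keep pages with some text or at least one figure.
    rec.valid = bool(rec.text.strip()) or bool(rec.image_captions)
    return rec


PIPELINE = [extract_text, describe_images, enrich_metadata, validate]


def process(rec: PageRecord) -> PageRecord:
    """Run every stage in order and return the enriched record."""
    for stage in PIPELINE:
        rec = stage(rec)
    return rec


page = process(PageRecord(
    "pump-manual", 12,
    {"native_text": "Lockout procedure...", "figures": ["valve diagram"], "section": "Safety"},
))
print(page.metadata, page.valid)
```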

Phase 3: Vector Database Configuration

The Llama 3.2 NeMo Retriever generates high-dimensional embeddings that combine textual and visual information. Your vector database must be configured to handle these unified embeddings efficiently:

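```python
# Example vector database setup for multimodal embeddings
# (Pinecone Python client v3+, where pinecone.init() no longer exists)
from pinecone import Pinecone, PodSpec

pc = Pinecone(api_key="your-api-key")
INDEX_NAME = "multimodal-rag"
# Must equal the retriever's output dimension; 4096 is this example's value,
# so confirm it against the model card of the NIM you deploy.
EMBEDDING_DIM = 4096

# Create the index once with these settings, then connect to it.
if INDEX_NAME not in pc.list_indexes().names():
    pc.create_index(
        name=INDEX_NAME,
        dimension=EMBEDDING_DIM,
        metric="cosine",
        spec=PodSpec(
            environment="us-east-1-aws",  # placeholder: your project's environment
            pod_type="p1.x1",  # p1 pods favor query latency; s1 pods favor capacity
        ),
    )
index = pc.Index(INDEX_NAME)
```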
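Each stored embedding should carry metadata that lets the application trace a hit back to its source page and modality. The sketch below builds records in the `{"id", "values", "metadata"}` shape that Pinecone’s `upsert` accepts; the ID scheme, the `modality` labels, and the metadata keys are hypothetical choices, and the 4096 default simply mirrors the dimension used in the setup example.

```python
def make_record(doc_id: str, page: int, modality: str, vector: list[float],
                dim: int = 4096) -> dict:
    """Build one upsert record; modality is e.g. "text" or "page_image"."""
    if len(vector) != dim:
        raise ValueError(f"expected a {dim}-dim vector, got {len(vector)}")
    return {
        "id": f"{doc_id}#p{page}#{modality}",
        "values": vector,
        "metadata": {"doc_id": doc_id, "page": page, "modality": modality},
    }


# Dummy vectors for illustration; real ones come from the retriever.
records = [
    make_record("pump-manual", 12, "text", [0.1] * 4096),
    make_record("pump-manual", 12, "page_image", [0.1] * 4096),
]
# With a connected index: index.upsert(vectors=records)
```

At query time, a metadata filter such as `index.query(vector=query_vec, top_k=5, include_metadata=True, filter={"modality": {"$eq": "page_image"}})` can restrict results to one modality when that is useful.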

Phase 4: Application Integration

The final phase involves integrating the multimodal RAG system with your existing applications. This typically requires developing APIs that can handle both text and image inputs, process queries through the NIM platform, and return comprehensive responses that combine relevant text and visual content.
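One way to structure that API layer is a single handler that accepts optional text and an optional image, embeds whatever was provided, searches the index, and returns matches with their sources. In this sketch `embed` and `search` are injected callables (hypothetical stand-ins for the NIM call and the vector database query, with `search` assumed to return dicts holding `score` and `metadata`), which also lets the handler be tested without a GPU. Averaging the text and image query vectors is one simple, assumed fusion rule, not a documented feature of the retriever.

```python
from typing import Callable, Optional


def handle_query(
    text: Optional[str],
    image_data_url: Optional[str],
    embed: Callable[[list[str]], list[list[float]]],
    search: Callable[[list[float], int], list[dict]],
    top_k: int = 5,
) -> dict:
    """Embed a text and/or image query, retrieve matches, and attach sources."""
    inputs = [x for x in (text, image_data_url) if x]
    if not inputs:
        raise ValueError("provide text, an image, or both")
    vectors = embed(inputs)
    # Hypothetical fusion rule: element-wise average of the query vectors.
    query_vec = [sum(col) / len(vectors) for col in zip(*vectors)]
    hits = search(query_vec, top_k)
    return {
        "query": {"text": text, "has_image": bool(image_data_url)},
        "results": [
            {
                "source": hit["metadata"]["doc_id"],
                "page": hit["metadata"]["page"],
                "score": hit["score"],
            }
            for hit in hits
        ],
    }


# Usage with stand-in callables (no GPU or database needed).
def fake_embed(inputs):
    return [[1.0, 0.0] if i == 0 else [0.0, 1.0] for i in range(len(inputs))]


captured = {}


def fake_search(vec, k):
    captured["vec"] = vec
    return [{"score": 0.91, "metadata": {"doc_id": "pump-manual", "page": 12}}][:k]


response = handle_query("safety procedure for this valve",
                        "data:image/png;base64,AAAA", fake_embed, fake_search)
print(response)
```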

Performance Optimization Strategies

Optimizing multimodal RAG performance requires attention to several key areas:

Caching Strategy: Implement intelligent caching for frequently accessed embeddings and query results. The computational cost of multimodal embedding generation makes caching particularly important for enterprise deployment.
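A minimal sketch of embedding caching, keyed by a SHA-256 hash of the content so identical pages or repeated queries are embedded only once. It assumes, as with NVIDIA’s retrieval NIMs, that queries and passages are embedded with different `input_type` values, so that value is part of the key. The in-process dict and the `embed_fn` callable are illustrative assumptions; a production deployment would more likely use a shared store such as Redis and add eviction.

```python
import hashlib
from typing import Callable


class EmbeddingCache:
    """Memoize embeddings by a SHA-256 hash of (input_type, content)."""

    def __init__(self, embed_fn: Callable[[str, str], list[float]]):
        self._embed_fn = embed_fn
        self._store: dict[str, list[float]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(content: str, input_type: str) -> str:
        # input_type is part of the key so a query and a passage with the
        # same text are cached separately.
        return hashlib.sha256(f"{input_type}\x00{content}".encode("utf-8")).hexdigest()

    def get(self, content: str, input_type: str = "passage") -> list[float]:
        key = self._key(content, input_type)
        if key in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[key] = self._embed_fn(content, input_type)
        return self._store[key]


# Usage with a stand-in embedder that records every real call.
calls = []


def fake_embed(content, input_type):
    calls.append((content, input_type))
    return [float(len(content))]


cache = EmbeddingCache(fake_embed)
cache.get("Lockout procedure")
cache.get("Lockout procedure")           # served from the cache
cache.get("Lockout procedure", "query")  # different input_type, new entry
```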

Batch Processing: Process document collections in batches to maximize GPU utilization. The Llama 3.2 NeMo Retriever can handle multiple documents simultaneously, significantly improving throughput.
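Batching amounts to grouping inputs so each request to the embedding service carries many documents instead of one. The sketch assumes a hypothetical `embed_batch` callable that wraps the NIM request; the batch size of 32 is an arbitrary starting point to tune against GPU memory and the service’s request limits. On Python 3.12+, `itertools.batched` provides the same grouping (yielding tuples).

```python
from typing import Callable, Iterator


def batched(items: list, size: int) -> Iterator[list]:
    """Yield consecutive slices of at most `size` items, in order."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def embed_all(
    texts: list[str],
    embed_batch: Callable[[list[str]], list[list[float]]],
    batch_size: int = 32,
) -> list[list[float]]:
    """Embed texts one batch per request, preserving input order."""
    out: list[list[float]] = []
    for chunk in batched(texts, batch_size):
        result = embed_batch(chunk)
        if len(result) != len(chunk):
            raise RuntimeError("embedding service returned a mismatched batch")
        out.extend(result)
    return out


# Usage with a stand-in embedder that records each request's size.
request_sizes = []


def fake_embed_batch(chunk):
    request_sizes.append(len(chunk))
    return [[float(len(text))] for text in chunk]


pages = [f"page {n}" for n in range(70)]
vectors = embed_all(pages, fake_embed_batch)
```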

Hybrid Search Architecture: Combine dense vector search with traditional keyword search to improve recall. This hybrid approach is particularly effective when dealing with technical documents that contain specific terminology.
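A common way to merge the two result lists is reciprocal rank fusion (RRF), which scores each document by summing 1/(k + rank) across the rankings it appears in. Because it uses only ranks, it needs no normalization between keyword scores and cosine similarities. A sketch with the conventional k = 60; the document IDs are illustrative, and the keyword and vector searches are assumed to have already run.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked ID lists (best first) into one ranking."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


dense_hits = ["manual_p12_diagram", "manual_p12_text", "manual_p40_text"]
keyword_hits = ["manual_p12_text", "spec_sheet_v2", "manual_p12_diagram"]
fused = reciprocal_rank_fusion([dense_hits, keyword_hits])
for doc_id, score in fused:
    print(f"{doc_id}: {score:.5f}")
```

Pages found by both searches outrank pages that only one search returned, while an exact-term match that only keyword search caught (here `spec_sheet_v2`) still makes the fused list.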

Overcoming Common Implementation Challenges

Enterprise multimodal RAG deployment faces several predictable challenges. Understanding these obstacles and their solutions can significantly improve implementation success rates.

Data Quality and Preparation Challenges

The quality of multimodal RAG outputs depends heavily on the quality of input data. Enterprise documents often exist in various formats, quality levels, and organizational structures. Common data quality issues include:

Low-Quality Scanned Documents: Many enterprise document repositories contain scanned PDFs with poor image quality. These documents require advanced OCR processing and sometimes manual cleanup before they can be effectively processed by multimodal RAG systems.

Inconsistent Document Formats: Enterprise organizations typically have documents created over many years using different tools and standards. Creating a unified processing pipeline that can handle this variety requires significant engineering effort.

Missing Context and Metadata: Documents often lack adequate metadata about their content, purpose, and relationships to other documents. This missing context can significantly impact RAG system performance.
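For low-quality scans in particular, a cheap heuristic can route suspect OCR output to manual cleanup before it is embedded. The sketch below flags pages whose text is very short or dominated by characters rarely found in readable prose; the `needs_review` name and both thresholds are hypothetical and should be calibrated on a sample of your own documents.

```python
def needs_review(ocr_text: str, min_chars: int = 200, min_clean_ratio: float = 0.7) -> bool:
    """Return True if OCR output looks too sparse or noisy to index as-is."""
    stripped = ocr_text.strip()
    if len(stripped) < min_chars:
        return True
    # Count characters that usually appear in readable text.
    clean = sum(
        ch.isalnum() or ch.isspace() or ch in ".,;:'\"()-%$"
        for ch in stripped
    )
    return clean / len(stripped) < min_clean_ratio


good_page = "The relief valve must be inspected every 500 operating hours. " * 5
noisy_page = "~#@|^*" * 50
print(needs_review(good_page), needs_review(noisy_page), needs_review("short"))
```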

Security and Compliance Considerations

Multimodal RAG systems must meet enterprise security and compliance requirements, which can be more complex than traditional text-based systems:

Data Privacy: Multimodal systems process both text and images, potentially exposing sensitive information in multiple formats. Organizations must implement comprehensive data masking and access control strategies.

Model Security: The large models used in multimodal RAG systems can potentially memorize and expose training data. Enterprise deployments require careful attention to model security and data leakage prevention.

Audit and Transparency: Regulatory requirements often demand explainable AI systems. Multimodal RAG adds complexity to this requirement, as the system must be able to explain both textual and visual reasoning.
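On the data-privacy point, one common step is to mask obvious identifiers in extracted text before it is embedded or returned to users, while recording what was masked for audit purposes. This regex-based sketch covers only email addresses and US Social Security-style numbers as examples; the patterns are illustrative rather than a complete PII detector, and text that appears inside images would need the same treatment after OCR.

```python
import re

# Illustrative patterns only; real deployments need a broader, tested PII policy.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def mask_pii(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with [LABEL] tokens and report counts for audit logs."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


masked, counts = mask_pii("Contact jane.doe@example.com, SSN 123-45-6789.")
print(masked, counts)
```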

Scaling and Performance Optimization

Scaling multimodal RAG to enterprise levels presents unique challenges:

Computational Requirements: Multimodal models require significantly more computational resources than text-only systems. Organizations must carefully plan infrastructure scaling to meet performance requirements while controlling costs.

Storage and Bandwidth: Large document repositories with high-resolution images require substantial storage and network bandwidth. The document processing pipeline must be optimized to handle these requirements efficiently.

Response Time Optimization: Enterprise users expect sub-second response times for most queries. Achieving this performance with multimodal RAG requires careful optimization of the entire pipeline, from document processing to inference.
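Finding where a sub-second budget goes starts with timing each pipeline stage separately. A minimal sketch using `time.perf_counter`; the `StageTimer` class, the stage names, and the 1,000 ms budget are assumptions, and the `time.sleep` calls merely simulate work.

```python
import time
from contextlib import contextmanager


class StageTimer:
    """Accumulate wall-clock time per pipeline stage, in milliseconds."""

    def __init__(self):
        self.ms: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.ms[name] = self.ms.get(name, 0.0) + elapsed_ms

    def over_budget(self, budget_ms: float = 1000.0) -> bool:
        """True if the summed stage times exceed the budget."""
        return sum(self.ms.values()) > budget_ms


timer = StageTimer()
with timer.stage("embed_query"):
    time.sleep(0.01)   # stand-in for the NIM embedding call
with timer.stage("vector_search"):
    time.sleep(0.005)  # stand-in for the vector database query
print({name: round(ms, 1) for name, ms in timer.ms.items()}, timer.over_budget())
```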

The Future of Enterprise Multimodal RAG

The introduction of NVIDIA’s Llama 3.2 NeMo Retriever represents just the beginning of the multimodal RAG revolution. Several trends are shaping the future of this technology:

Integration with Agentic AI Systems

The combination of multimodal RAG with agentic AI capabilities creates powerful new possibilities for enterprise automation. Agentic systems can use multimodal understanding to interact with complex enterprise applications that combine text, images, and user interfaces.

Progress Software’s recent $50 million acquisition of Nuclia demonstrates the growing importance of agentic RAG capabilities. The acquisition adds agentic RAG-as-a-Service functionality that democratizes access to advanced AI capabilities for small-to-mid-sized businesses.

Advances in Multimodal Understanding

Ongoing research is pushing the boundaries of what multimodal AI systems can understand and process. Future developments are likely to include:

Video Processing Capabilities: Extension of multimodal RAG to handle video content, enabling analysis of training materials, presentations, and recorded meetings.

Real-time Collaboration: Integration with collaborative platforms that can understand and respond to multimodal content in real-time during meetings and document collaboration sessions.

Cross-Modal Reasoning: Advanced reasoning capabilities that can draw insights by combining information from multiple modalities in sophisticated ways.

Industry-Specific Specializations

As multimodal RAG technology matures, we can expect to see industry-specific implementations optimized for particular use cases:

Legal Document Analysis: Specialized systems for processing legal documents that combine text with evidence photos, diagrams, and exhibits.

Scientific Research: Systems designed to understand and correlate research papers with experimental data, charts, and scientific imagery.

Engineering and Design: Tools for processing technical specifications that combine textual requirements with engineering drawings and CAD models.

The emergence of production-ready multimodal RAG represents a watershed moment in enterprise AI adoption. Organizations that begin exploring these capabilities now will be positioned to capture the significant competitive advantages they offer. However, successful implementation requires careful planning, adequate infrastructure investment, and a clear understanding of the technical and organizational challenges involved.

NVIDIA’s Llama 3.2 NeMo Retriever has demonstrated that multimodal RAG is no longer a research curiosity—it’s a practical business tool ready for enterprise deployment. The question isn’t whether your organization will adopt multimodal RAG capabilities, but when and how you’ll begin the implementation process. Early movers who invest in understanding and deploying these technologies today will be best positioned to capitalize on the transformative potential of truly intelligent, multimodal enterprise AI systems.

As we’ve seen throughout this analysis, the technical capabilities exist, the use cases are compelling, and the infrastructure is becoming increasingly accessible. The multimodal RAG revolution is here—and it’s time for enterprise leaders to decide how they’ll participate in it. Ready to explore how multimodal RAG can transform your organization’s approach to information management and decision support? Start by evaluating your document repositories and identifying use cases where combined text and visual understanding could drive significant business value.