Picture this: you’ve spent ten minutes navigating a labyrinthine phone menu, only to be put on hold with mind-numbing music. When you finally connect to a customer service agent, they sound robotic, reading from a script and unable to grasp the nuance of your problem. It’s a universally frustrating experience, one that erodes customer loyalty and strains support teams. For businesses, the challenge is a constant balancing act: how do you provide instant, scalable support without sacrificing the personal, human touch that defines a great customer experience? Hiring and training enough human agents to handle every query immediately is financially unfeasible for most, while traditional chatbots often fall short, providing generic, unhelpful answers that lead to more frustration.

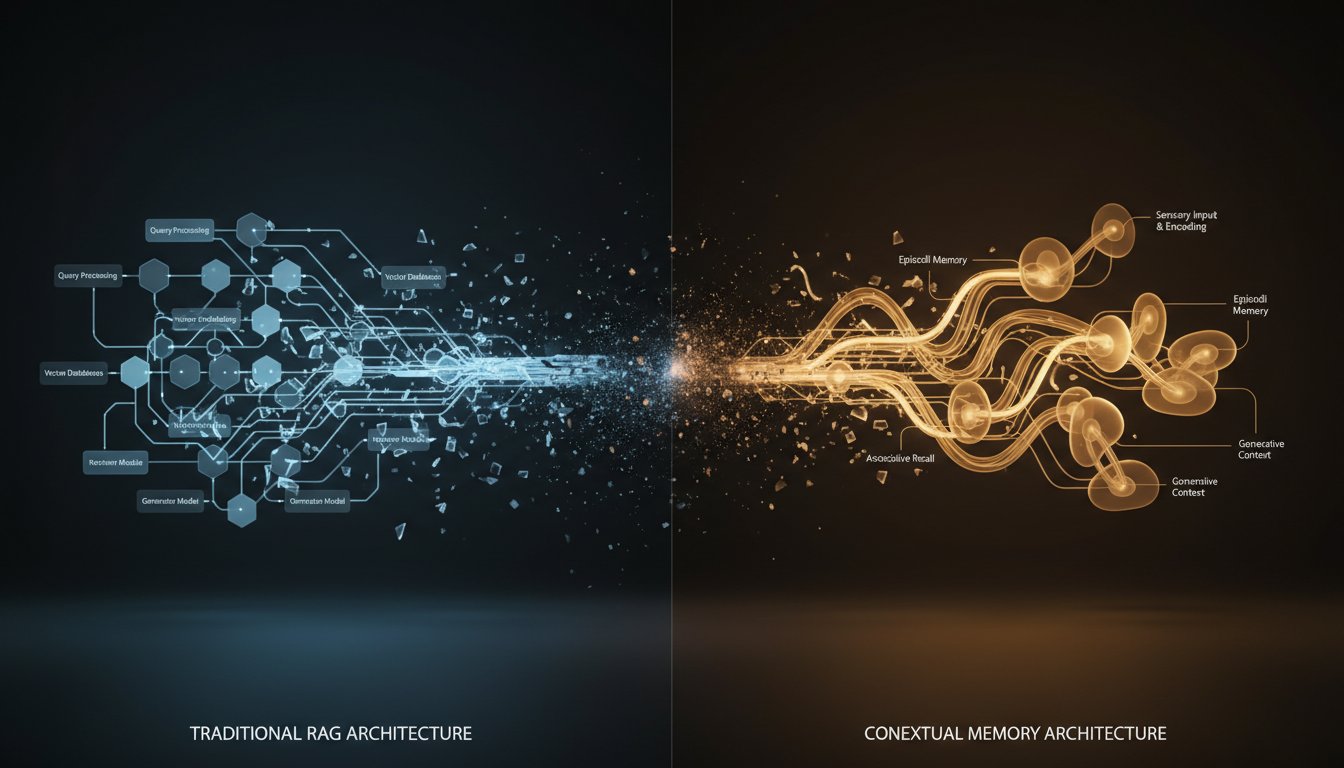

This is the dilemma where modern AI offers more than an incremental alternative. We are moving beyond clunky, keyword-based bots toward conversational AI that can understand context, draw on specific knowledge, and communicate in a strikingly human-like manner. The solution combines two technologies: Retrieval-Augmented Generation (RAG) and generative voice AI. RAG acts as the AI’s brain, letting it pull current information from a company’s private knowledge base, such as product manuals, FAQs, or internal policies, so that answers are grounded in your own documentation rather than merely fluent. Generative voice platforms like ElevenLabs then provide the soul, converting that text into expressive speech that mimics natural human intonation.

In this technical guide, we will walk you through the exact process of building your own voice-enabled AI assistant. We’ll break down the architecture, from setting up the RAG pipeline that fuels its intelligence to integrating ElevenLabs to give it a voice. You will learn the practical steps required to transform your static help documents into a dynamic, interactive conversational agent that can answer customer questions, solve problems, and provide a support experience that is both efficient and genuinely engaging. Forget the hold music; the future of customer service is here, and you are about to build it.

The Architecture of a Modern Voice AI Assistant

Before diving into code and APIs, it’s crucial to understand the components that work together to create a seamless conversational experience. Two years ago, this might have seemed like a lab experiment, but today, as noted by Enterprise Times, RAG “has become the reference architecture for building enterprise-grade generative AI applications.” Combining it with advanced text-to-speech (TTS) creates a truly next-generation system.

What is Retrieval-Augmented Generation (RAG)?

RAG is a technique designed to make Large Language Models (LLMs) smarter and more reliable. Instead of relying solely on its pre-trained data, which can be outdated or generic, an LLM powered by RAG first retrieves relevant information from a specific, up-to-date knowledge source. It then uses this retrieved information, or “context,” to generate its response. As TutorialsDojo puts it, RAG “enhances large language models (LLMs) outputs by incorporating information from external, authoritative knowledge sources.”

For a customer service assistant, this means the AI isn’t just guessing answers. It looks them up in your official documentation, so what it says about product features, return policies, or troubleshooting steps reflects what those documents actually state.

Why Voice Matters: The Power of ElevenLabs

While RAG provides the what (the accurate information), generative voice AI provides the how (the delivery). Traditional TTS systems often sound monotonous and robotic. ElevenLabs uses a sophisticated deep learning model that can generate speech with lifelike intonation, pacing, and emotion. You can clone voices, create custom ones, and fine-tune them to perfectly match your brand’s persona—be it empathetic and warm or professional and direct.

This isn’t just about aesthetics; it’s about engagement. A natural-sounding voice builds trust and keeps users engaged in the conversation, making the interaction feel less like talking to a machine and more like talking to a helpful assistant. It bridges the gap between digital efficiency and human connection.

Tying It All Together: The Full Workflow

The process from user query to AI response happens in a continuous, near-instantaneous loop:

1. Speech-to-Text (STT): The user speaks their question. An STT service transcribes the audio into text.

2. RAG Pipeline Query: The transcribed text is used as a query for the RAG system. The system searches its vector database for the most relevant documents (e.g., sections of an FAQ).

3. LLM Prompt Augmentation: The user’s query and the retrieved documents are combined into a single, enriched prompt that is sent to an LLM (like GPT-4 or Claude 3).

4. Text Generation: The LLM uses the provided context to generate a coherent, accurate text-based answer.

5. Text-to-Speech (TTS): This text answer is sent to the ElevenLabs API.

6. Voice Generation & Playback: ElevenLabs converts the text into audio in the chosen voice, which is then streamed back to the user.
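
Step 3 is the easiest part of this loop to picture in code. The following is a minimal sketch, assuming the retriever hands back a list of plain-text chunks. The function name `build_augmented_prompt` and the instruction wording are illustrative choices, not part of any particular framework.

```python
def build_augmented_prompt(user_query: str, retrieved_chunks: list[str]) -> str:
    """Combine the user's question with retrieved context into one prompt."""
    # Number each chunk so the model (and anyone debugging) can tell them apart.
    context = "\n\n".join(
        f"[{i}] {chunk.strip()}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return (
        "Answer the customer's question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {user_query.strip()}\n"
        "Answer:"
    )


if __name__ == "__main__":
    chunks = ["Passwords can be reset from the account settings page."]
    print(build_augmented_prompt("How do I reset my password?", chunks))
```

Telling the model to admit when the context lacks an answer is one common way to discourage it from falling back on guesswork.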

Step-by-Step Guide: Building Your RAG-Powered Knowledge Base

The core of your AI’s intelligence is its knowledge base. A well-structured RAG pipeline keeps retrieval fast and the retrieved information accurate and relevant. Building one involves preparing your data and loading it into a specialized vector database.

Step 1: Choosing and Preparing Your Data Source

Your AI is only as good as the data it has access to. Start by gathering all the documents you want your assistant to learn from. This could include:

* Product documentation

* Website FAQs

* Internal support wikis

* Knowledge base articles

* Previous support ticket resolutions

Clean this data to ensure it’s well-formatted and easy for a machine to parse. Break down large documents (like a 100-page PDF manual) into smaller, semantically related chunks. Each chunk should ideally cover a single topic or sub-topic. This chunking strategy is vital for retrieval accuracy.
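
One simple way to chunk is to group consecutive paragraphs until a size limit is reached. The sketch below assumes paragraphs are separated by blank lines and measures size in characters. Both the `chunk_text` helper and the 800-character default are illustrative assumptions, and real pipelines often count tokens instead.

```python
def chunk_text(text: str, max_chars: int = 800) -> list[str]:
    """Group consecutive paragraphs into chunks of roughly max_chars characters."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            # A single oversized paragraph is kept whole rather than cut mid-sentence.
            current = para
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    manual = "How to reset a password.\n\nHow to request a refund.\n\nHow to contact support."
    for chunk in chunk_text(manual, max_chars=60):
        print(repr(chunk))
```

Splitting on paragraph boundaries keeps each chunk readable on its own, which matters because the chunk text is later pasted into the LLM prompt as-is.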

Step 2: Setting Up a Vector Database

A standard database searches for keywords. A vector database searches for meaning. It stores your data chunks as numerical representations called “embeddings.” When a user asks a question, their query is also converted into an embedding, and the database finds the chunks with the most similar embeddings (i.e., the most similar semantic meaning).
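
To make "most similar embeddings" concrete, here is a small sketch of cosine similarity, a common measure for comparing embeddings. The three-dimensional vectors are made up for illustration. Real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # A zero vector has no direction to compare.
    return dot / (norm_a * norm_b)


def most_similar(query_vec: list[float], stored: dict[str, list[float]]) -> str:
    """Return the key of the stored vector closest to the query."""
    return max(stored, key=lambda name: cosine_similarity(query_vec, stored[name]))


if __name__ == "__main__":
    # Toy embeddings, invented for this example.
    stored = {
        "refund policy": [0.9, 0.1, 0.0],
        "password reset": [0.1, 0.9, 0.2],
    }
    print(most_similar([0.2, 0.8, 0.1], stored))
```

A vector database performs the same kind of comparison, but uses index structures so that it does not have to score every stored vector on every query.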

Popular choices for vector databases include Pinecone, ChromaDB, and Weaviate. For this guide, you can start with an open-source option like ChromaDB, which is easy to set up for development purposes.

Step 3: Creating Embeddings and Indexing Your Documents

To get your data into the vector database, you need an embedding model. These models, often available via APIs from providers like OpenAI, Cohere, or through open-source libraries like SentenceTransformers, are responsible for converting your text chunks into vectors. You’ll write a script that iterates through your prepared data, sends each chunk to the embedding model, and stores the resulting vector (along with the original text) in your vector database.

Indexing is an up-front step that you repeat only when your documents change. Once your documents are indexed, the RAG pipeline can retrieve relevant chunks quickly at query time.
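
The shape of such an indexing script can be sketched without any external service. In the example below, `toy_embed` is a deliberately crude stand-in for a real embedding model (it only counts words), and `InMemoryIndex` is a hypothetical stand-in for a vector database collection. Both exist only to show the embed, store, and query flow. In a real pipeline you would swap in your embedding provider and your database client.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> dict[str, float]:
    """Stand-in for an embedding model: a normalized sparse bag-of-words vector."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


class InMemoryIndex:
    """Hypothetical stand-in for a vector database collection."""

    def __init__(self, embed=toy_embed):
        self.embed = embed
        self.records: list[tuple[dict[str, float], str]] = []

    def add(self, chunks: list[str]) -> None:
        # Store each vector alongside its original text, as described above.
        for chunk in chunks:
            self.records.append((self.embed(chunk), chunk))

    def query(self, question: str, top_k: int = 1) -> list[str]:
        q = self.embed(question)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = sorted(
            self.records,
            key=lambda rec: sum(w * rec[0].get(word, 0.0) for word, w in q.items()),
            reverse=True,
        )
        return [text for _, text in scored[:top_k]]


if __name__ == "__main__":
    index = InMemoryIndex()
    index.add([
        "To reset your password, open the account settings page.",
        "Refunds are issued within 30 days of purchase.",
    ])
    print(index.query("How do I reset my password?"))
```

Unlike this word-counting stand-in, a real embedding model also matches paraphrases that share no words with the stored text, which is the main reason to use one.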

Integrating ElevenLabs for Lifelike Voice Interaction

With your RAG pipeline ready to provide answers, the next step is to give it a voice. This is where the ElevenLabs API comes in, seamlessly converting the text responses into high-quality audio.

Step 1: Getting Your ElevenLabs API Key

First, you’ll need an ElevenLabs account. The platform offers a generous free tier for development and testing, allowing you to experiment with different voices and features before committing. Once you’ve registered, you can find your API key in your account profile settings. Keep this key secure, as it authenticates your requests.

Step 2: Choosing the Right Voice for Your Brand

ElevenLabs offers a vast library of pre-made voices in its Voice Library, each with distinct characteristics. You can filter by gender, age, and accent to find one that aligns with your brand. For a more unique experience, you can use the VoiceLab feature to design a custom voice or even create a digital clone of a specific voice (with permission, of course).

For a customer service assistant, you might choose a voice that sounds calm, clear, and empathetic. You can grab the voice_id for your chosen voice from the platform, which you’ll need for the API call.

Step 3: The API Call: Converting RAG Output to Speech

Integrating ElevenLabs is a simple API call. After your RAG and LLM pipeline generates a text response, you send that text to the ElevenLabs TTS endpoint. In your request, you’ll specify the text to be converted, the voice_id you selected, and any model settings you wish to configure (e.g., for stability or clarity).

    # Minimal example of calling the ElevenLabs text-to-speech endpoint
    import os

    import requests

    # Read the key from an environment variable rather than hard-coding it
    ELEVENLABS_API_KEY = os.environ["ELEVENLABS_API_KEY"]
    VOICE_ID = "your_chosen_voice_id_here"

    text_from_rag = "Hello! I can help with that. To reset your password, please visit the account settings page."

    response = requests.post(
        f"https://api.elevenlabs.io/v1/text-to-speech/{VOICE_ID}",
        headers={
            "Accept": "audio/mpeg",
            "Content-Type": "application/json",
            "xi-api-key": ELEVENLABS_API_KEY,
        },
        json={
            "text": text_from_rag,
            "model_id": "eleven_multilingual_v2",
        },
        timeout=30,
    )
    response.raise_for_status()  # Fail loudly on authentication or quota errors

    # response.content holds the MP3 audio data; save it to a file or stream it onward
    with open("reply.mp3", "wb") as audio_file:
        audio_file.write(response.content)
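
The request above leaves the voice settings at their defaults. As a sketch of how the stability and clarity controls might be passed, the helper below builds the same request with a `voice_settings` object added. The helper name `build_tts_request` and the default values of 0.5 and 0.75 are illustrative assumptions. Check the current ElevenLabs API reference for the settings your chosen model supports.

```python
def build_tts_request(
    text: str,
    voice_id: str,
    api_key: str,
    stability: float = 0.5,
    similarity_boost: float = 0.75,
) -> dict:
    """Build keyword arguments for requests.post() without sending anything."""
    for name, value in (("stability", stability), ("similarity_boost", similarity_boost)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return {
        "url": f"https://api.elevenlabs.io/v1/text-to-speech/{voice_id}",
        "headers": {
            "Accept": "audio/mpeg",
            "Content-Type": "application/json",
            "xi-api-key": api_key,
        },
        "json": {
            "text": text,
            "model_id": "eleven_multilingual_v2",
            "voice_settings": {
                "stability": stability,
                "similarity_boost": similarity_boost,
            },
        },
    }


if __name__ == "__main__":
    request_kwargs = build_tts_request("Hello!", "your_chosen_voice_id_here", "your_api_key_here")
    print(request_kwargs["json"]["voice_settings"])
```

Because the returned keys match the parameter names of `requests.post`, the result can be sent with `requests.post(**request_kwargs)`. Keeping construction separate from sending also makes the payload easy to unit test.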

Putting It All Together: A Practical Implementation

Let’s outline how these pieces connect in a simple Python script. This example assumes you have functions to handle speech-to-text (transcribe_audio), query your RAG pipeline (query_rag_pipeline), and play audio (play_audio_stream).

    import elevenlabs

    # Configure your API keys.
    # Note: set_api_key() and generate() are the module-level helpers of the
    # pre-1.0 elevenlabs SDK. Newer releases use a client object instead, so
    # check the version you have installed.
    elevenlabs.set_api_key("YOUR_ELEVENLABS_API_KEY")
    # ... (configure other APIs like OpenAI)


    def handle_customer_query(audio_input):
        # 1. Transcribe user's speech to text
        user_query_text = transcribe_audio(audio_input)
        print(f"User said: {user_query_text}")

        # 2. Query the RAG pipeline. This function would search your vector DB,
        #    build the augmented prompt, and return the LLM's text answer.
        llm_response_text = query_rag_pipeline(user_query_text)
        print(f"AI is replying: {llm_response_text}")

        # 3. Generate voice with ElevenLabs
        audio_stream = elevenlabs.generate(
            text=llm_response_text,
            voice="your_chosen_voice_id_or_name",
            model="eleven_multilingual_v2",
            stream=True,  # Streaming reduces perceived latency
        )

        # 4. Stream the audio back to the user
        play_audio_stream(audio_stream)


    # --- This would be triggered by a user starting a conversation ---
    # example_audio_input = record_microphone()
    # handle_customer_query(example_audio_input)

One critical consideration is latency. For a natural conversation, the response time must be minimal. Using streaming for both the LLM response and the ElevenLabs audio generation is key. This allows the AI to start speaking as soon as the first few words are generated, rather than waiting for the entire response to be complete.
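
One way to connect a streaming LLM to streaming speech is to buffer the incoming tokens and hand each completed sentence to the TTS step as soon as it ends. The generator below is a minimal sketch of that idea. The name `sentences_from_tokens` is hypothetical, and the punctuation-based sentence splitting is a simplifying assumption that will also split after abbreviations such as "e.g.".

```python
import re
from typing import Iterable, Iterator

# A sentence ends at ., ! or ? followed by whitespace.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a token stream."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = SENTENCE_END.split(buffer)
        # Everything except the last part is a finished sentence.
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        buffer = parts[-1]
    # Flush whatever remains once the stream ends.
    if buffer.strip():
        yield buffer.strip()


if __name__ == "__main__":
    llm_tokens = ["Hello", "! I can", " help. ", "Visit the", " settings page."]
    for sentence in sentences_from_tokens(llm_tokens):
        print(sentence)  # Each sentence could be sent to TTS at this point.
```

With this in place, the first sentence can start playing while the LLM is still generating the rest of the answer.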

In summary, we’ve broken the walls between static knowledge bases and dynamic, human-like interaction. By pairing the factual accuracy of Retrieval-Augmented Generation with the expressive power of ElevenLabs’ voice AI, you can build a customer service assistant that is not only incredibly efficient but also genuinely pleasant to interact with. This isn’t science fiction; it’s the new standard for enterprise-grade AI applications.

Remember that first frustrating phone call we imagined? Now, picture a customer connecting instantly, asking a complex question, and receiving a clear, helpful, and natural-sounding answer in seconds. That is the experience you can now build. The technology is accessible, the steps are clear, and the potential impact on customer satisfaction is significant. Ready to build your own? The first step is getting the tools you need: sign up for ElevenLabs and start bringing your AI assistant to life.