A senior developer, let’s call her Maria, sips her morning coffee, opens her laptop, and is immediately greeted by a queue of five pull requests. One is a simple bug fix, three are minor feature updates, and one is a sprawling refactor. She knows that for the next two hours, her focus will be fragmented. She won’t be architecting the next-gen platform or solving a complex scaling issue. Instead, she’ll be pointing out the same style guide violations, reminding junior developers about edge-case handling, and explaining company-specific best practices for the dozenth time. This scenario is the silent productivity killer in countless development teams. The manual code review process, while essential for quality, is a notorious bottleneck, consuming the valuable time of senior engineers and slowing down the entire development lifecycle.

This isn’t just an anecdotal problem; it’s a systemic inefficiency. While tools like static linters and code analyzers are invaluable for catching syntax errors and basic style issues, they fall short when it comes to context, nuance, and company-specific logic. They can’t understand the intent behind a piece of code or reference a decade of internal architectural decisions documented across Confluence and Google Docs. The result is a reliance on human expertise, creating a process that doesn’t scale and often leads to developer burnout. This is the central challenge facing modern DevOps: how do we maintain high code quality and mentor junior talent without sacrificing the velocity and innovation that senior engineers are uniquely positioned to drive?

The solution lies in a smarter, more dynamic form of automation. Imagine an AI-powered assistant that lives directly within your GitHub workflow. When a pull request is submitted, this assistant doesn’t just spit out a cryptic list of errors. It consults your entire internal knowledge base—your coding standards, your security protocols, your architectural decision records—to understand the full context. It then provides feedback not as a block of text, but as a short, personalized video from a friendly AI avatar who clearly explains the ‘what’ and the ‘why’ behind the requested changes. This isn’t science fiction. This is the power of combining Retrieval-Augmented Generation (RAG) with synthetic media APIs like HeyGen.

In this technical guide, we will walk you through the architecture and key implementation steps to build this exact system. We’ll explore how to ground a large language model in your specific documentation using RAG, how to trigger this process automatically using GitHub Actions, and how to deliver engaging, human-like feedback using HeyGen’s API. Get ready to transform your code review process from a bottleneck into a powerful, scalable engine for quality and education.

Why Traditional Code Review Fails at Scale

The ritual of code review is a cornerstone of modern software development, designed to catch bugs, enforce standards, and share knowledge. Yet, as teams and codebases grow, the traditional, manual approach begins to show significant cracks. It evolves from a collaborative practice into an operational drag, hindering the very agility it was meant to support.

The Bottleneck of Senior Developers

Senior developers are your team’s most valuable strategic resource. Their time is best spent on complex problem-solving, system design, and mentoring. However, they are often disproportionately burdened with code review duties. This creates a critical bottleneck where multiple streams of work must wait for a single point of approval, slowing down deployment frequency and frustrating the entire team.

This isn’t just about speed; it’s about opportunity cost. Every hour a senior engineer spends on a routine review is an hour not spent on high-impact innovation. This repetitive, low-context work is a primary contributor to burnout and can stifle the career growth of your most experienced talent.

The Limits of Static Linters and Analyzers

Automated linters and static analysis tools are a necessary first line of defense. They excel at enforcing consistent formatting, identifying anti-patterns, and flagging potential runtime errors. But their intelligence is limited. They operate on a fixed set of rules and have no awareness of your organization’s unique business logic or architectural philosophy.

A linter can’t tell a junior developer why your team prefers a specific microservice communication pattern or warn them that their new database query violates an unwritten rule about performance on a key table. This is where the most critical—and time-consuming—review feedback occurs, and it’s a domain where traditional tools are blind.

The Rise of Context-Aware AI

The gap between what static tools can do and what senior developers must do is where AI, specifically RAG, enters the picture. Recent research, including work from the Korea Advanced Institute of Science and Technology (KAIST), reports that retrieval-augmented generation can substantially improve the quality of automated code review comments. By grounding a language model in a specific, relevant knowledge base, you create an agent that can draw on your rules, your history, and your context when it reviews code.

Architectural Blueprint: Your AI-Powered DevOps Assistant

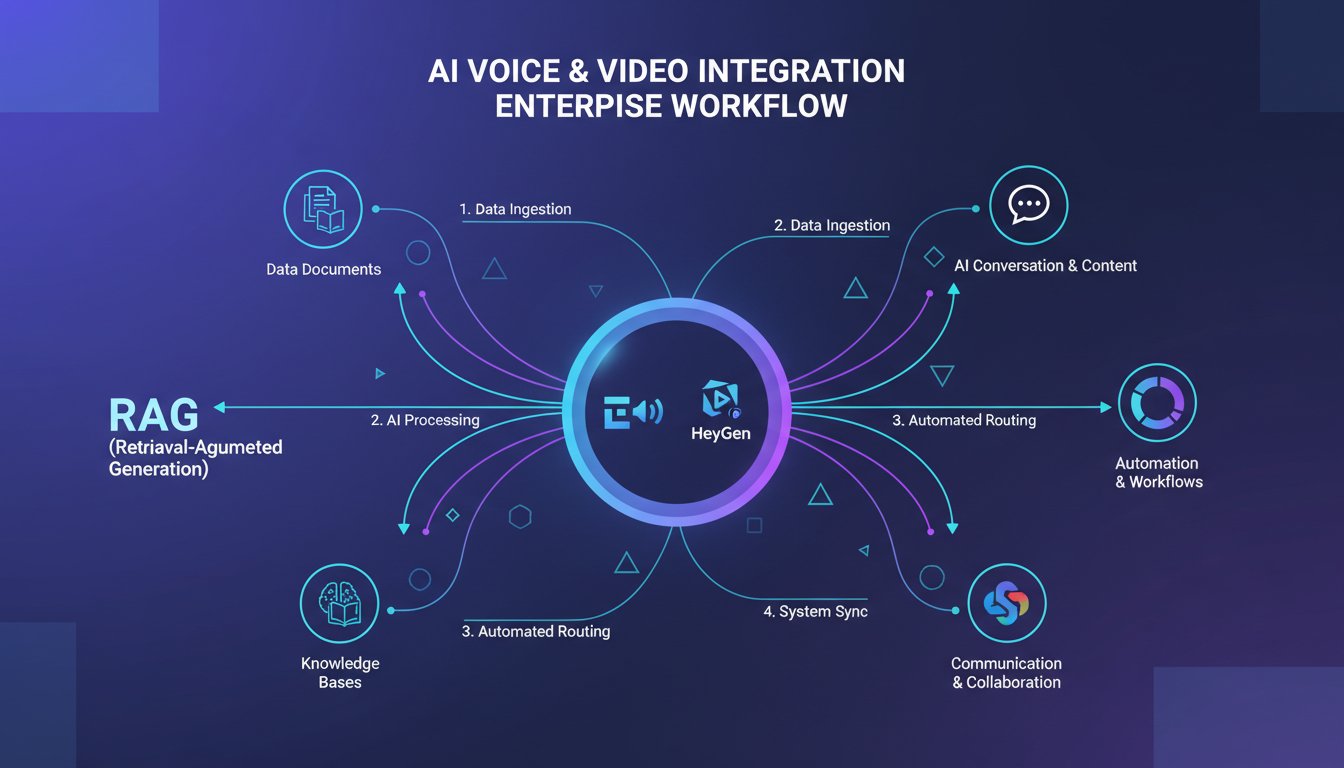

Building this system requires orchestrating a few key cloud-native technologies. The architecture is modular, allowing for flexibility and scalability. Think of it as a four-part harmony: a knowledge base, a RAG pipeline, a trigger, and a presenter.

Component 1: The Knowledge Base (Your Coding Standards)

This is the ground truth for your AI. It’s a collection of all documents defining your engineering best practices: style guides, security checklists, architectural decision records (ADRs), post-mortem analyses, and even well-documented code from your main branch. This content will be converted into a vector database, creating a searchable, semantic representation of your company’s engineering wisdom.
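To make "searchable, semantic representation" concrete, here is a minimal in-memory sketch of what a vector database does for this system. It stores each chunk with a vector and returns the chunks closest to a query by cosine similarity. The `embed` function is a hypothetical bag-of-words stand-in, used only so the example runs without an API key. A real deployment would call an embedding model and store the vectors in a service such as Pinecone, Weaviate, or ChromaDB.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Hypothetical stand-in embedder: a bag-of-words term-count vector.
    Replace with a real embedding model in production."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class InMemoryVectorIndex:
    """Toy vector index holding (source, text, vector) triples."""
    entries: list = field(default_factory=list)

    def add(self, source: str, text: str) -> None:
        self.entries.append((source, text, embed(text)))

    def search(self, query: str, k: int = 3) -> list:
        """Return up to k (score, source, text) tuples, most similar first."""
        q = embed(query)
        scored = [(cosine(q, vec), src, txt) for src, txt, vec in self.entries]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]
```

In production, you would swap `embed` for your embedding model and `InMemoryVectorIndex` for your vector database. The retrieval contract stays the same: a query goes in, and the top-k chunks come back with their sources.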

Component 2: The RAG Pipeline (The “Brain”)

This is the core logic. When triggered, the pipeline takes the code changes from a pull request as its input. It first retrieves the most relevant documents from your vector database (the ‘Retrieval’ step). It then feeds this context, along with the code diff, into a large language model (LLM) with a prompt like: “You are a senior software engineer. Based on the following internal coding standards [retrieved context], review this code [code diff] and provide constructive feedback.”
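The prompt-assembly step can be sketched as a plain function that combines the retrieved chunks and the diff into the prompt quoted above. This is a minimal sketch under a few assumptions. Chunks arrive as `(source, text)` pairs. The instruction to cite a numbered standard is one possible refinement, not part of the original prompt. The `max_diff_chars` budget is a hypothetical guard against oversized diffs.

```python
from typing import Sequence, Tuple

SYSTEM_ROLE = "You are a senior software engineer."


def build_review_prompt(
    diff: str,
    retrieved: Sequence[Tuple[str, str]],
    max_diff_chars: int = 12000,  # hypothetical size budget
) -> str:
    """Assemble the augmented prompt from retrieved (source, text) chunks and a diff."""
    # Number each standard and keep its source so feedback can be traced back.
    context = "\n\n".join(
        f"[{i}] ({source})\n{text}" for i, (source, text) in enumerate(retrieved, start=1)
    )
    # Truncate very large diffs rather than overflow the model's context window.
    if len(diff) > max_diff_chars:
        diff = diff[:max_diff_chars] + "\n[diff truncated]"
    return (
        f"{SYSTEM_ROLE} Based on the following internal coding standards, "
        "review this code and provide constructive feedback. "
        "Cite the numbered standard behind each comment, and say so "
        "if no standard applies.\n\n"
        f"### Internal coding standards\n{context or '(none retrieved)'}\n\n"
        f"### Code diff\n{diff}\n"
    )
```

Asking the model to cite which retrieved standard it relied on makes ungrounded comments easier for a human reviewer to spot.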

Component 3: The GitHub Action (The “Trigger”)

This is the integration point into your DevOps workflow. You’ll create a simple GitHub Action that listens for a pull_request event. When a new PR is opened or updated, the action triggers your RAG pipeline, passing the necessary code information to it. Once the review is complete, the action can post the results back as a comment on the PR.
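The GitHub REST API treats pull requests as issues, so a top-level PR comment is created through the issue-comments endpoint. The following is a minimal standard-library sketch. It assumes a token allowed to comment on pull requests, such as the workflow's `GITHUB_TOKEN` granted write permission. The function names are illustrative.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_comment_request(repo: str, pr_number: int, body: str, token: str) -> urllib.request.Request:
    """Build a POST to /repos/{owner}/{repo}/issues/{number}/comments."""
    url = f"{GITHUB_API}/repos/{repo}/issues/{pr_number}/comments"
    return urllib.request.Request(
        url,
        data=json.dumps({"body": body}).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )


def post_comment(repo: str, pr_number: int, body: str, token: str) -> dict:
    """Send the request and return GitHub's JSON response for the new comment."""
    with urllib.request.urlopen(build_comment_request(repo, pr_number, body, token), timeout=30) as resp:
        return json.load(resp)
```

Here `repo` is the `owner/name` string, and `body` is Markdown. That means the comment can carry the text summary plus a link to the generated video.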

Component 4: The HeyGen API (The “Mouth”)

The final, and most innovative, piece. Instead of having the RAG pipeline output a block of text, you’ll have it generate a concise script for the review feedback. This script is then passed to the HeyGen API, which generates a short video of an AI avatar speaking the review. The GitHub Action then posts a link to this video in the pull request comment, offering a much more engaging and personal feedback experience.

Step-by-Step Guide: Building Your RAG-Powered Code Reviewer

Now, let’s move from theory to practice. While a full codebase is beyond the scope of a single article, these steps outline the critical implementation path.

Step 1: Vectorizing Your Internal Documentation

Your first task is to create the knowledge base. Gather all your markdown files, PDFs, and Confluence pages containing your coding standards. Use a data loader framework (like LlamaIndex or LangChain) to parse these documents, split them into manageable chunks, and convert them into vector embeddings using a model like text-embedding-ada-002. Store these vectors in a specialized vector database such as Pinecone, Weaviate, or ChromaDB.
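LlamaIndex and LangChain ship their own text splitters. The following framework-free sketch only illustrates the idea behind them: fixed-size chunks that overlap, so text near a boundary is repeated in consecutive chunks. The defaults for `chunk_size` and `overlap` are illustrative assumptions measured in characters, not tokens. Each resulting chunk would then be embedded and upserted into your vector database, with its id and source stored as metadata.

```python
def chunk_text(text: str, chunk_size: int = 800, overlap: int = 100) -> list[str]:
    """Split text into windows of at most chunk_size characters, each
    starting `overlap` characters before the previous window ended."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


def chunk_document(source: str, text: str, **kwargs) -> list[dict]:
    """Attach a stable id and the source name to each chunk for later citation."""
    return [
        {"id": f"{source}#{i}", "source": source, "text": chunk}
        for i, chunk in enumerate(chunk_text(text, **kwargs))
    ]
```

Keeping the source filename on every chunk is what later lets the reviewer say which standard a comment came from.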

Step 2: Setting Up the GitHub Action on Pull Requests

In your project’s .github/workflows/ directory, create a new YAML file. This workflow will be triggered on the pull_request event. It will check out the code and then use curl or a dedicated action to send a POST request to your RAG pipeline’s API endpoint, including the PR number and repository details in the payload.
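As an alternative to `curl`, a workflow step could run a small script that reads the event payload and forwards the essentials. GitHub Actions writes the triggering event's JSON to the file named by `GITHUB_EVENT_PATH`. This is a sketch under stated assumptions. `RAG_ENDPOINT` is a hypothetical environment variable holding your pipeline's URL, and the payload field names are illustrative.

```python
import json
import os
import urllib.request


def build_trigger_payload(event: dict) -> dict:
    """Extract what the review service needs from a pull_request event payload."""
    pr = event["pull_request"]
    return {
        "repository": event["repository"]["full_name"],
        "pr_number": pr["number"],
        "head_sha": pr["head"]["sha"],
        "action": event.get("action"),  # e.g. "opened" or "synchronize"
    }


def main() -> None:
    # GitHub Actions exposes the event JSON at the path in GITHUB_EVENT_PATH.
    with open(os.environ["GITHUB_EVENT_PATH"], encoding="utf-8") as fh:
        event = json.load(fh)
    request = urllib.request.Request(
        os.environ["RAG_ENDPOINT"],  # hypothetical: URL of your review service
        data=json.dumps(build_trigger_payload(event)).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=30) as resp:
        print(f"Review service responded with HTTP {resp.status}")
```

A workflow step would call `main()` after checkout. In practice you would also authenticate this call, for example with a shared secret stored in GitHub Actions secrets, so that only your workflow can trigger reviews.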

Step 3: Implementing the RAG Logic to Analyze Code Diffs

Your RAG pipeline, likely a serverless function (e.g., AWS Lambda, Google Cloud Function), will receive the trigger from GitHub. It will use the GitHub API to fetch the code diff for the specified pull request. This diff is then used to query your vector database for relevant documentation snippets. The diff and the retrieved snippets are combined into a single, comprehensive prompt for your chosen LLM (e.g., GPT-4o, Claude 3).
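GitHub's REST API returns a pull request as a unified diff when the request asks for the `application/vnd.github.diff` media type. The sketch below fetches the diff and splits it per file. It then turns each file into a retrieval query made of its path plus its added lines. The one-query-per-file strategy is an assumption of this example, not a requirement, and the path parsing assumes file paths without spaces.

```python
import re
import urllib.request


def build_diff_request(repo: str, pr_number: int, token: str) -> urllib.request.Request:
    """GET /repos/{owner}/{repo}/pulls/{number}, asking for the diff media type."""
    return urllib.request.Request(
        f"https://api.github.com/repos/{repo}/pulls/{pr_number}",
        headers={"Accept": "application/vnd.github.diff", "Authorization": f"Bearer {token}"},
    )


def fetch_pr_diff(repo: str, pr_number: int, token: str) -> str:
    with urllib.request.urlopen(build_diff_request(repo, pr_number, token), timeout=30) as resp:
        return resp.read().decode("utf-8", errors="replace")


def split_diff_by_file(diff: str) -> dict[str, str]:
    """Map each changed file path to its section of the unified diff."""
    sections = {}
    for part in re.split(r"(?m)^(?=diff --git )", diff):
        match = re.match(r"diff --git a/\S+ b/(\S+)", part)
        if match:
            sections[match.group(1)] = part
    return sections


def retrieval_query(path: str, section: str) -> str:
    """Hypothetical query: the file path followed by the lines the PR adds."""
    added = [
        line[1:] for line in section.splitlines()
        if line.startswith("+") and not line.startswith("+++")
    ]
    return path + "\n" + "\n".join(added)
```

Querying per file keeps the retrieved standards relevant. Without it, a change to a database module and a README edit would compete for the same top-k slots.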

As the Forbes Technology Council notes, “For enterprises betting big on generative AI, grounding outputs in real, governed data isn’t optional—it’s the foundation of responsible innovation.” This step is that principle in action. By forcing the LLM to base its analysis on your documentation, you mitigate the risk of generic or incorrect feedback and ensure the AI acts as a true extension of your engineering culture.

Step 4: Generating the Review Script and Calling the HeyGen API

Structure your LLM prompt to output a JSON object containing a summary of the review and a concise, spoken-word script. This script should be conversational and constructive. Your serverless function then takes this script and makes a POST request to the HeyGen API's /v2/video/generate endpoint, specifying your chosen avatar and voice. HeyGen responds with a video ID rather than a finished video: rendering happens asynchronously, and the video URL becomes available once the job completes.
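This step can be sketched in two parts. The first validates the LLM's JSON reply, and the second builds the HeyGen generation request. The request body follows the single-scene v2 shape shown in HeyGen's API reference at the time of writing (`video_inputs` with a `character` and a `voice`, plus `dimension`). Treat the field names as something to verify against the current docs. The `avatar_id` and `voice_id` values are placeholders you would choose in your HeyGen account.

```python
import json
import urllib.request

HEYGEN_GENERATE_URL = "https://api.heygen.com/v2/video/generate"


def parse_review(llm_output: str) -> dict:
    """Validate the LLM's JSON reply, expected as {"summary": str, "script": str}."""
    review = json.loads(llm_output)
    if not isinstance(review, dict):
        raise ValueError("LLM output must be a JSON object")
    for key in ("summary", "script"):
        if not isinstance(review.get(key), str) or not review[key].strip():
            raise ValueError(f"LLM output is missing a non-empty '{key}' field")
    return review


def build_heygen_request(script: str, avatar_id: str, voice_id: str, api_key: str) -> urllib.request.Request:
    """POST body for a one-scene avatar video (verify fields against HeyGen's docs)."""
    body = {
        "video_inputs": [
            {
                "character": {"type": "avatar", "avatar_id": avatar_id, "avatar_style": "normal"},
                "voice": {"type": "text", "input_text": script, "voice_id": voice_id},
            }
        ],
        "dimension": {"width": 1280, "height": 720},
    }
    return urllib.request.Request(
        HEYGEN_GENERATE_URL,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"X-Api-Key": api_key, "Content-Type": "application/json"},
    )


def start_video(script: str, avatar_id: str, voice_id: str, api_key: str) -> str:
    """Submit the job and return the video_id from the response's data object."""
    request = build_heygen_request(script, avatar_id, voice_id, api_key)
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.load(resp)["data"]["video_id"]
```

Validating the JSON before calling HeyGen means a malformed model reply fails fast, without spending video-generation credits.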

Bringing Your AI Reviewer to Life with HeyGen

A wall of text in a PR comment can feel critical and impersonal. A video, however, feels like a conversation. It’s a fundamental shift in the human-computer interaction paradigm within DevOps, turning a critique into a micro-mentoring session.

Why Video Feedback is More Powerful than Text

Video conveys tone and nuance that text cannot. A friendly avatar delivering feedback feels more collaborative and less accusatory. This can significantly improve the psychological safety of the review process, especially for junior developers, encouraging them to learn without fear of judgment. It’s a more effective way to educate and enforce standards at the same time.

A Practical Walkthrough of the HeyGen API Integration

Integrating with HeyGen is straightforward. After creating your account and obtaining an API key, you can pick a stock avatar or even create a custom one. The API call to generate a video is a simple POST request where you provide the script text, avatar ID, and voice settings. Your code will then need to poll the API for the status of the video generation job. Once it’s complete, you’ll receive the shareable video link to post back to GitHub.
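The polling step can be sketched as a loop with a timeout. The URL and the status names ("completed", "failed", and in-progress values such as "pending" or "processing") reflect HeyGen's v1 video status endpoint as documented at the time of writing, so treat them as assumptions to verify. Injecting `get_status`, `sleep`, and `clock` is a design choice of this sketch: it lets the loop be tested without network calls or real waiting.

```python
import json
import time
import urllib.parse
import urllib.request
from typing import Callable

HEYGEN_STATUS_URL = "https://api.heygen.com/v1/video_status.get"


def fetch_status(video_id: str, api_key: str) -> dict:
    """Return the 'data' object from HeyGen's video status endpoint."""
    url = f"{HEYGEN_STATUS_URL}?{urllib.parse.urlencode({'video_id': video_id})}"
    request = urllib.request.Request(url, headers={"X-Api-Key": api_key})
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.load(resp)["data"]


def wait_for_video(
    get_status: Callable[[], dict],
    interval: float = 10.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll until the job completes; return its video URL, or raise on failure or timeout."""
    deadline = clock() + timeout
    while True:
        data = get_status()
        status = data.get("status")
        if status == "completed":
            return data["video_url"]
        if status == "failed":
            raise RuntimeError(f"video generation failed: {data.get('error')}")
        if clock() >= deadline:
            raise TimeoutError("video generation did not finish in time")
        sleep(interval)  # any other status means the job is still in progress
```

In a pipeline you would call `wait_for_video(lambda: fetch_status(video_id, api_key))` and post the returned URL to the pull request. If your serverless platform's execution time limit is shorter than typical render times, a scheduled or webhook-based follow-up may fit better than an in-function loop.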

This is the new frontier of DevOps, where AI doesn't just automate tasks; it enhances communication and understanding. Maria, our senior developer, is now free. She can trust her AI assistant to handle the first-pass reviews, applying the team's documented standards consistently and delivering feedback in a way that truly helps her team grow. She can now focus on the complex, creative work that only a human can do. To give your AI assistant a voice and a face, sign up for a free trial with HeyGen and start building today: https://heygen.com/?sid=rewardful&via=david-richards